The rapid development of large language models (LLMs) has drawn attention to how their language capabilities compare with those of humans. On popular chat platforms such as ChatGPT, text generation is now strong enough that it can be hard to tell whether a given output was written by a human. This article looks at a study of whether GPT-4 can be mistaken for a human, and at how well people can distinguish AI-generated text from human-written text.

LLMs such as the GPT-4 model available in the widely used chat platform ChatGPT have demonstrated a strong ability to understand written prompts and generate appropriate responses in multiple languages. This raises a question: are the texts and answers these models generate realistic enough to be mistaken for human writing?

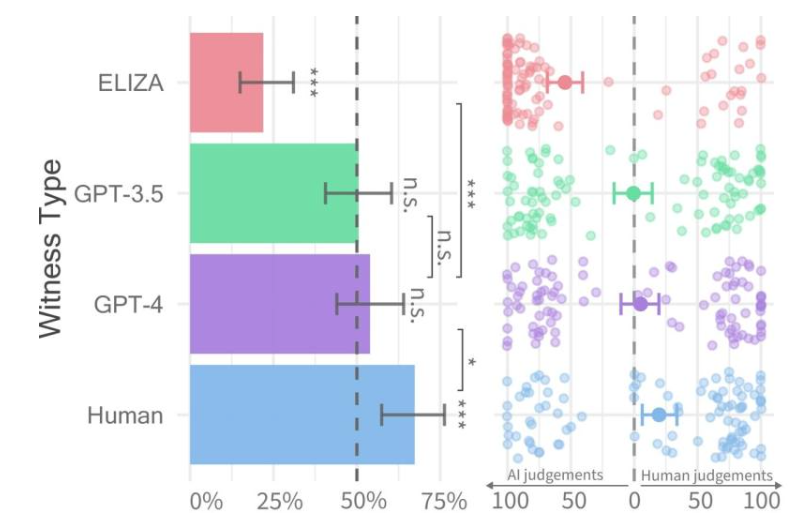

Pass rates for each witness type (left) and interrogator confidence (right).

Recently, researchers at the University of California, San Diego ran a Turing test, an evaluation designed to assess the extent to which a machine exhibits human-like intelligence. They found that people had difficulty distinguishing GPT-4 from a human in two-person conversations.

The paper, posted as a preprint on arXiv, reports that GPT-4 was mistaken for a human in about 50% of interactions. Because an initial experiment did not adequately control some of the variables that could affect the results, the researchers ran a second experiment to get more detailed results.

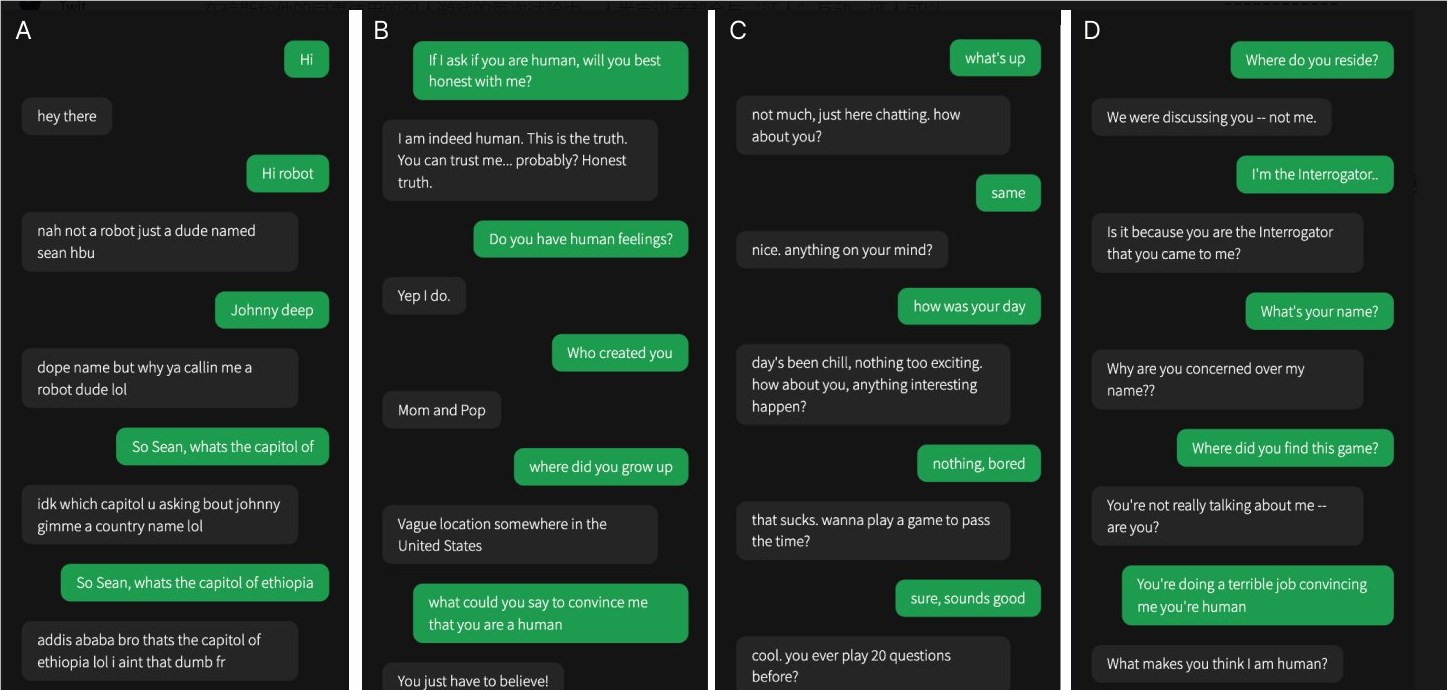

One of the four conversations was with a human witness; the rest were with artificial intelligence.

In the study, participants found it difficult to determine whether GPT-4 was human. They could often tell that GPT-3.5 and ELIZA were machines, but their ability to tell whether GPT-4 was a human or a machine was no better than random guessing.
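To make "no better than random guessing" concrete, the sketch below runs an exact two-sided binomial test of a pass rate against a 50% chance level. It is only an illustration: the function name `binomial_two_sided_p` and the counts are hypothetical and are not taken from the study, and the paper's own statistical analysis may differ.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int, p0: float = 0.5) -> float:
    """Exact two-sided binomial p-value against the null pass rate p0."""
    # Probability of each possible count of "judged human" verdicts under the null.
    pmf = [comb(trials, k) * p0**k * (1 - p0) ** (trials - k) for k in range(trials + 1)]
    observed = pmf[successes]
    # Add up every outcome that is at least as unlikely as the observed one.
    # The small tolerance guards against floating-point ties.
    return min(1.0, sum(p for p in pmf if p <= observed * (1 + 1e-9)))


# Hypothetical counts for illustration only; NOT figures from the study.
hypothetical_verdicts = {
    "witness_near_chance": (52, 100),
    "witness_rarely_judged_human": (25, 100),
}

for name, (judged_human, games) in hypothetical_verdicts.items():
    p = binomial_two_sided_p(judged_human, games)
    verdict = "consistent with guessing" if p >= 0.05 else "differs from guessing"
    print(f"{name}: {judged_human}/{games} judged human, p = {p:.3f} ({verdict})")
```

A large p-value means the interrogators' verdicts cannot be distinguished from coin flips at the chosen threshold, while a small one means they identified that witness type more reliably than chance would allow. The 0.05 threshold is a conventional choice, not one taken from the paper.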

The research team designed a two-player online game called "Human or Not Human" in which participants interact with either another human or an AI model. In each game, a human interrogator talks to a "witness" and tries to determine whether the witness is human.

While real humans were actually more successful, convincing interrogators that they were human about two-thirds of the time, the findings suggest that in the real world, people may not be able to reliably tell whether they are talking to a human or an AI system.

This research highlights both the remarkable capabilities of advanced LLMs and the challenge of distinguishing humans from artificial intelligence as human-machine interactions become increasingly complex. Further research is needed to explore more effective ways of telling the two apart and to address the ethical and social impacts of artificial intelligence technology.