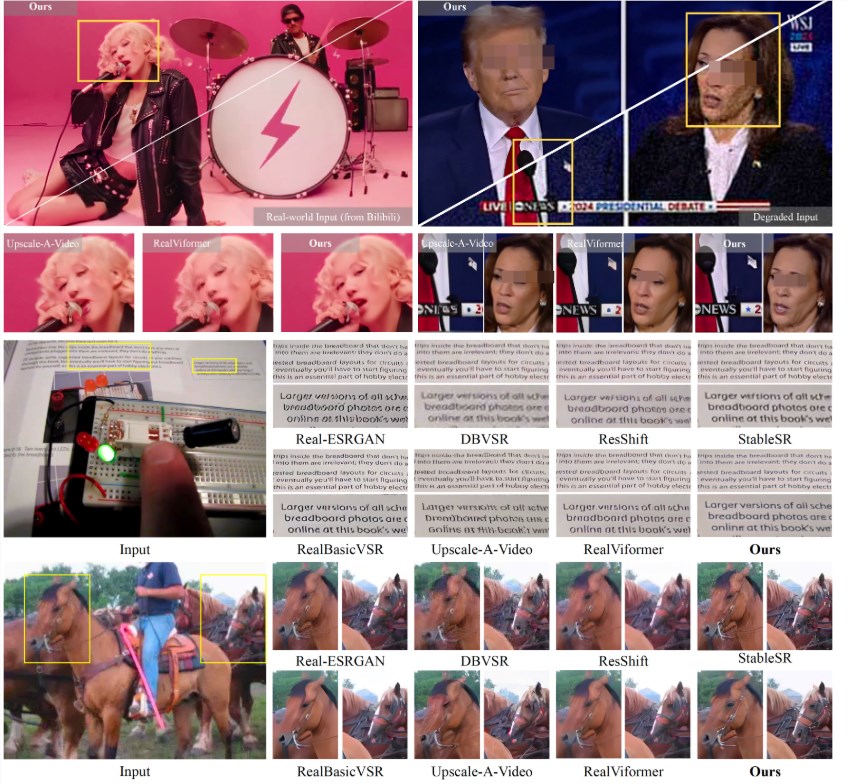

A research team from Nanjing University, working with ByteDance and Southwest University, has released STAR, a video super-resolution technique. STAR combines spatiotemporal enhancement with text-to-video models to sharpen low-resolution videos, particularly those downloaded from video platforms. Pre-trained models and inference code are available on GitHub, with variants built on I2VGen-XL and CogVideoX-5B and several prompt options.

The team has published the pre-trained STAR models on GitHub, covering both the I2VGen-XL-based and CogVideoX-5B-based versions, together with the inference code. The article describes the release as an important advance in video processing.

Using the model is relatively simple. First, download the pre-trained STAR model from Hugging Face and place it in the specified directory. Next, prepare the video file to be tested and choose one of three text prompt options: no prompt, an automatically generated prompt, or a manually entered prompt. Finally, adjust the path settings in the inference script and run it to perform video super-resolution.
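The steps above can be sketched as a small configuration object. This is only an illustration of the workflow as described here, not the repository's actual script: `InferenceConfig`, `resolve_prompt`, `missing_paths`, the mode names, and the `captioner` callable are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# Hypothetical names throughout: the STAR repository's real scripts and
# variables may differ. This only mirrors the steps described in the text.
PROMPT_MODES = ("none", "auto", "manual")


@dataclass
class InferenceConfig:
    model_path: Path      # where the downloaded checkpoint was placed
    input_video: Path     # low-resolution video to upscale
    output_dir: Path      # where results should be written
    prompt_mode: str = "none"
    manual_prompt: Optional[str] = None


def resolve_prompt(cfg: InferenceConfig, captioner=None) -> str:
    """Return the text prompt implied by the chosen prompt mode."""
    if cfg.prompt_mode == "none":
        return ""
    if cfg.prompt_mode == "manual":
        if not cfg.manual_prompt:
            raise ValueError("manual mode needs a non-empty manual_prompt")
        return cfg.manual_prompt
    if cfg.prompt_mode == "auto":
        if captioner is None:
            raise ValueError("auto mode needs a captioner callable")
        # The captioner stands in for whatever model generates the prompt.
        return captioner(cfg.input_video)
    raise ValueError(f"unknown prompt mode: {cfg.prompt_mode!r}")


def missing_paths(cfg: InferenceConfig) -> list:
    """List the configured input paths that do not exist on disk."""
    return [p for p in (cfg.model_path, cfg.input_video) if not p.exists()]
```

Calling `missing_paths` before launching inference is one way to catch a wrong path setting early, which is the only manual adjustment the article says the script needs.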

The project provides two models based on I2VGen-XL, each targeting a different degree of video degradation. In addition, the CogVideoX-5B-based model supports only the 720x480 input format, which makes it an option for that specific scenario.
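A minimal sketch of how a caller might choose between these variants follows. The function `choose_model`, the `"light"` and `"heavy"` labels, and the returned strings are hypothetical, and reading 720x480 as width by height is an assumption; the only fact taken from the article is the 720x480 constraint on the CogVideoX-5B version.

```python
# Input size stated for the CogVideoX-5B-based model.
# Interpreting it as (width, height) is an assumption.
COGVIDEOX_INPUT_SIZE = (720, 480)

# Hypothetical labels for the two I2VGen-XL-based variants.
DEGRADATION_LEVELS = ("light", "heavy")


def choose_model(width: int, height: int,
                 degradation: str = "light",
                 prefer_cogvideox: bool = False) -> str:
    """Pick a model variant name for a video of the given size."""
    if prefer_cogvideox:
        if (width, height) != COGVIDEOX_INPUT_SIZE:
            raise ValueError(
                f"CogVideoX-5B variant expects {COGVIDEOX_INPUT_SIZE}, "
                f"got {(width, height)}"
            )
        return "CogVideoX-5B"
    if degradation not in DEGRADATION_LEVELS:
        raise ValueError(f"unknown degradation level: {degradation!r}")
    return f"I2VGen-XL ({degradation} degradation)"
```

Rejecting a mismatched resolution up front, rather than resizing silently, keeps the constraint visible to the user.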

The work offers new ideas for video super-resolution and points to new research directions in related fields. The research team acknowledges I2VGen-XL, VEnhancer, CogVideoX, and OpenVid-1M as the prior work that laid the foundation for the project.

Project page: https://github.com/NJU-PCALab/STAR

Highlights:

- STAR combines spatiotemporal enhancement with text-to-video models to perform video super-resolution and improve video quality.
- The research team has released pre-trained models and inference code, and the usage process is straightforward.
- The team provides contact information and encourages users to get in touch and discuss the work.

The STAR project is open source on GitHub, which makes it easy for developers and researchers to try. Its simple workflow and strong results open up new possibilities for video super-resolution and suggest directions for future research. We look forward to seeing STAR play a greater role in practical applications.