With the rapid development of multimodal large language models (MLLMs), effectively integrating the vision, language, and speech modalities has become an active research area. However, the fundamental differences between modalities, such as the spatial information carried by vision and the temporal (time-series) information carried by speech, make efficient multimodal interaction difficult. Existing methods often rely on separate ASR and TTS modules, which increases latency and makes interaction less fluid. This article introduces VITA-1.5, a multimodal large language model that integrates vision, language, and speech to address these problems.

MLLMs have recently made significant progress, especially in integrating the visual and textual modalities. However, as human-computer interaction becomes more widespread, the speech modality has grown increasingly important, particularly in multimodal dialogue systems. Speech is not only a key medium for conveying information but also makes interaction noticeably more natural and convenient.

However, because visual and speech data differ fundamentally, integrating both into an MLLM is not trivial. Visual data conveys spatial information, whereas speech data conveys dynamic changes over time. These differences make it hard to optimize both modalities simultaneously and often lead to conflicts during training. In addition, traditional speech-to-speech systems rely on separate automatic speech recognition (ASR) and text-to-speech (TTS) modules, which increases latency and reduces coherence, limiting their usefulness in real-time applications.

To address these challenges, the researchers introduced VITA-1.5, a multimodal large language model that integrates vision, language, and speech. VITA-1.5 uses a carefully designed three-stage training method that progressively introduces visual and then speech data, alleviating modality conflicts while maintaining strong multimodal performance.

In the first stage, the model focuses on vision-language training: it builds strong visual capabilities by training the visual adapter and then fine-tuning the model on descriptive caption data and visual question-answering data.

The second stage introduces audio input processing. The audio encoder is first trained on paired speech-transcription data and then fine-tuned on speech question-answering data, so that the model can understand and respond to audio input. Finally, in the third stage, an audio decoder is trained to produce speech output end to end without an external TTS module. This enables VITA-1.5 to generate fluent spoken replies and makes multimodal dialogue more natural and interactive.

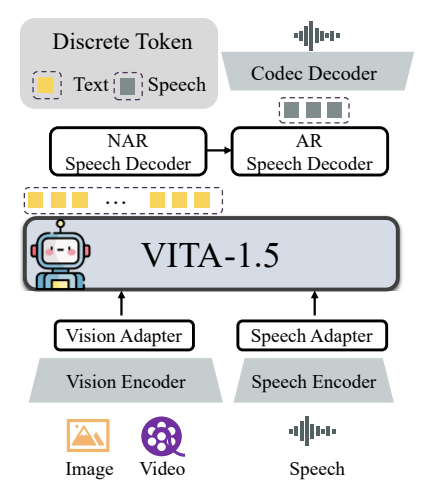

The overall architecture of VITA-1.5 consists of a visual encoder and an audio encoder, each connected to the large language model through an adapter. On the output side, VITA-1.5 uses an end-to-end speech generation module instead of the external TTS model used by the original VITA-1.0. The visual encoder is InternViT-300M; it takes input images of 448×448 pixels and produces 256 visual tokens per image.
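The 256-token figure can be reproduced with simple arithmetic. The sketch below assumes, since the article does not say so, that InternViT-300M splits an image into 14×14-pixel patches and that neighbouring patch features are merged 2×2 (a pixel-unshuffle, as in InternVL-style models) before they reach the adapter. The function name `visual_tokens_per_image` is hypothetical.

```python
# Hypothetical helper reproducing the "448x448 image -> 256 visual tokens" figure.
# ASSUMPTIONS (not from the article): 14x14-pixel ViT patches and a 2x2
# pixel-unshuffle that merges neighbouring patch features before the adapter.

def visual_tokens_per_image(image_size: int = 448,
                            patch_size: int = 14,
                            merge_factor: int = 2) -> int:
    """Return the number of visual tokens passed to the LLM for one square image."""
    if image_size % patch_size:
        raise ValueError("image_size must be a multiple of patch_size")
    patches_per_side = image_size // patch_size          # 448 // 14 = 32
    if patches_per_side % merge_factor:
        raise ValueError("patch grid must be divisible by merge_factor")
    merged_per_side = patches_per_side // merge_factor   # 32 // 2 = 16
    return merged_per_side ** 2                          # 16 * 16 = 256


if __name__ == "__main__":
    print(visual_tokens_per_image())                  # 256
    print(visual_tokens_per_image(merge_factor=1))    # 1024 raw patch features
```

Under these assumptions, the 2×2 merge reduces 1,024 raw patch features to 256 tokens, a 4× reduction in the sequence length the language model has to process per image.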

For high-resolution images, VITA-1.5 adopts a dynamic patching strategy to capture local details. Video is treated as a special case of multi-image input, with the number of sampled frames depending on the video's length.

The audio encoder consists of several downsampling convolutional layers followed by 24 Transformer blocks and has an output frame rate of 12.5 Hz. The audio adapter consists of several convolutional layers with 2× downsampling. TiCodec serves as the codec model: it encodes a continuous speech signal into discrete speech tokens at 40 Hz and can decode them back into a speech signal sampled at 24,000 Hz. To enable the model to output speech tokens, two speech decoders are added after the text tokens: a non-autoregressive (NAR) speech decoder and an autoregressive (AR) speech decoder.
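These rates determine how long the audio sequences become. The following sketch converts a clip duration into sequence lengths using only the 12.5 Hz, 40 Hz, and 24,000 Hz figures given above. The helper names are hypothetical, counts are rounded down, and real frame counts may differ slightly because of padding and convolution boundaries. The sketch covers only the encoder output and the codec, not the adapter.

```python
import math

# Rates stated in the article.
ENCODER_FRAME_RATE_HZ = 12.5      # audio encoder output frames per second
CODEC_TOKEN_RATE_HZ = 40          # TiCodec discrete speech tokens per second
WAVEFORM_SAMPLE_RATE_HZ = 24_000  # sample rate of TiCodec's decoded speech


def encoder_frames(duration_s: float) -> int:
    """Audio-encoder output frames for a clip of the given length (rounded down)."""
    return math.floor(duration_s * ENCODER_FRAME_RATE_HZ)


def codec_tokens(duration_s: float) -> int:
    """TiCodec speech tokens needed to represent a clip (rounded down)."""
    return math.floor(duration_s * CODEC_TOKEN_RATE_HZ)


def samples_per_codec_token() -> int:
    """Waveform samples the codec decoder produces per speech token."""
    return WAVEFORM_SAMPLE_RATE_HZ // CODEC_TOKEN_RATE_HZ  # 24000 // 40 = 600


if __name__ == "__main__":
    duration = 10.0  # a 10-second utterance
    print("encoder frames in:", encoder_frames(duration))     # 125
    print("codec tokens out:", codec_tokens(duration))         # 400
    print("samples per token:", samples_per_codec_token())    # 600
    print("output samples:", codec_tokens(duration) * samples_per_codec_token())  # 240000
```

For the same 10 seconds of speech, the output side needs 3.2 times as many tokens (400) as the input side produces encoder frames (125), so generated speech is the longer of the two sequences.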

VITA-1.5's training data covers a wide range of categories, such as caption data and question-answering data, in both Chinese and English. At each training stage, subsets of the full dataset are selectively sampled to serve that stage's goals. Training proceeds in three stages:

Stage 1: Vision-language training, comprising visual alignment, visual understanding, and visual supervised fine-tuning. It aims to bridge the gap between vision and language so that the model can understand image content and answer visual questions.

Stage 2: Audio input tuning, comprising audio alignment and audio supervised fine-tuning. It enables the model to understand audio input and interact by answering spoken questions with text.

Stage 3: Audio output tuning, comprising codec training and NAR + AR decoder training. It enables the model to generate speech output and achieve end-to-end voice interaction.
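The progressive schedule can be summarized as an ordered data structure. This is an illustrative sketch only: the stage and step names come from the text, but the `Stage` class and `iter_steps` helper are hypothetical, and the sketch does not encode which parameters are trained or frozen at each step.

```python
from dataclasses import dataclass
from typing import Iterator, Tuple


@dataclass(frozen=True)
class Stage:
    """One training stage: its ordered sub-steps and its goal (names from the article)."""
    name: str
    steps: Tuple[str, ...]
    goal: str


# Illustrative summary of the three-stage schedule, NOT the project's actual config.
SCHEDULE: Tuple[Stage, ...] = (
    Stage("Vision-language training",
          ("visual alignment", "visual understanding", "visual supervised fine-tuning"),
          "understand image content and answer visual questions"),
    Stage("Audio input tuning",
          ("audio alignment", "audio supervised fine-tuning"),
          "understand audio input and answer spoken questions with text"),
    Stage("Audio output tuning",
          ("codec training", "NAR + AR decoder training"),
          "generate speech output for end-to-end voice interaction"),
)


def iter_steps(schedule: Tuple[Stage, ...]) -> Iterator[Tuple[int, str]]:
    """Yield (stage number, step) pairs in the order the steps are run."""
    for number, stage in enumerate(schedule, start=1):
        for step in stage.steps:
            yield number, step


if __name__ == "__main__":
    for number, step in iter_steps(SCHEDULE):
        print(f"Stage {number}: {step}")
```

Keeping the order explicit reflects the design choice described earlier: speech is added only after visual capabilities are established, which is how the staged approach aims to reduce conflicts between modalities.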

The researchers evaluated VITA-1.5 extensively on image, video, and speech understanding benchmarks and compared it with both open-source and proprietary models. The results show that VITA-1.5 has perception and reasoning capabilities comparable to leading MLLMs on image and video tasks, while substantially improving speech capabilities. On image understanding benchmarks, VITA-1.5 performs on par with state-of-the-art open-source models and even surpasses some proprietary models. On video understanding, it performs on par with top open-source models. In addition, VITA-1.5 achieves leading accuracy on both Chinese and English ASR tasks, surpassing specialized speech models.

Overall, through its carefully designed three-stage training strategy, VITA-1.5 successfully integrates vision and speech, achieving strong visual and speech understanding and enabling efficient speech-to-speech interaction without relying on separate ASR or TTS modules. This work is expected to help advance open-source models for real-time multimodal interaction.

Project repository: https://github.com/VITA-MLLM/VITA

VITA-1.5 marks a new stage in the development of multimodal large language models. Its end-to-end speech generation and strong performance on image, video, and speech understanding tasks open new possibilities for building more natural and fluent interactive multimodal systems. The work merits attention and is expected to play an important role in practical applications.