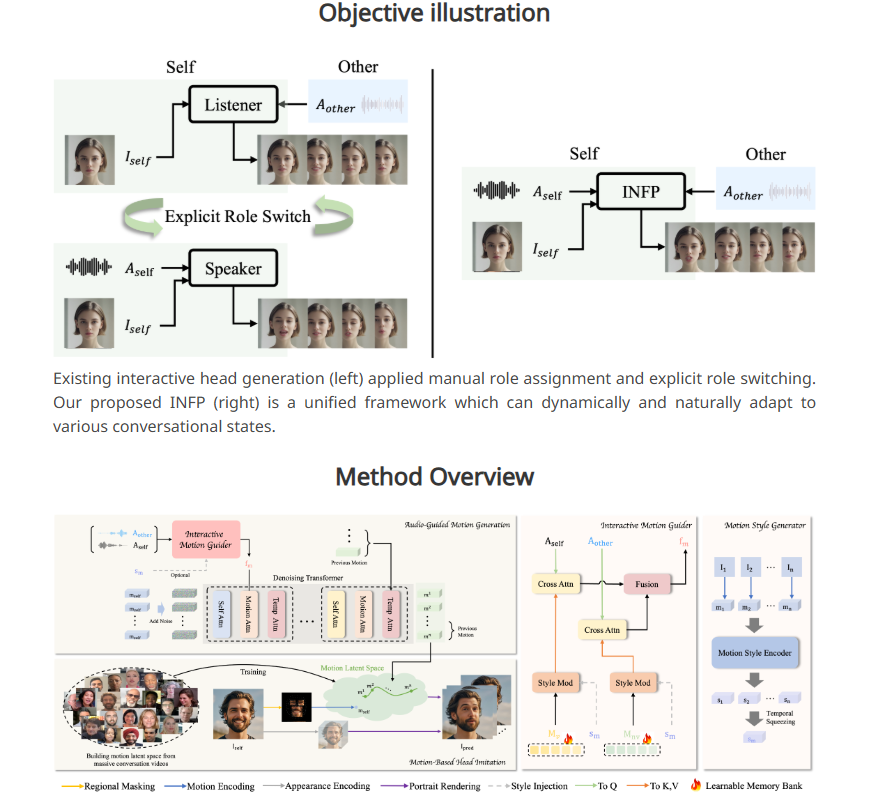

ByteDance has launched INFP, a new AI system that makes static portrait photos "speak" and react in response to audio input. Unlike earlier approaches, INFP does not require the user to manually assign conversational roles (speaker or listener). Instead, it determines them automatically from the conversation, which makes it considerably more efficient and convenient. Its core technology consists of two steps, "motion-based head imitation" and "audio-guided motion generation". By analyzing facial expressions, head movements, and audio in conversations, it generates natural, smooth motion that brings static images to life. To train INFP, ByteDance also built DyConv, a dataset of more than 200 hours of real conversation videos, to ensure high-quality output.
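The article does not say how INFP decides who is speaking and who is listening. To illustrate the idea of switching roles from two audio tracks, here is a minimal Python sketch that swaps in a deliberately simple energy heuristic, which is not INFP's method: for each frame it compares the RMS energy of the portrait's own track with the partner's track and labels the frame "speaking", "listening", or "idle". The function names `rms` and `label_roles` and the 0.01 silence threshold are hypothetical choices for this example.

```python
import math


def rms(frame):
    """Root-mean-square energy of one audio frame (a list of samples)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def label_roles(own_track, partner_track, threshold=0.01):
    """Hypothetical heuristic: label each frame from two aligned audio tracks.

    own_track / partner_track: lists of frames, each frame a list of samples.
    Frames where both tracks are below `threshold` are labeled "idle".
    """
    roles = []
    for own, partner in zip(own_track, partner_track):
        e_own, e_partner = rms(own), rms(partner)
        if max(e_own, e_partner) < threshold:
            roles.append("idle")
        elif e_own >= e_partner:
            roles.append("speaking")   # the portrait's own voice dominates
        else:
            roles.append("listening")  # the partner's voice dominates
    return roles


if __name__ == "__main__":
    own = [[0.5, -0.5], [0.0, 0.0], [0.001, 0.0]]
    partner = [[0.1, 0.1], [0.4, -0.4], [0.0, 0.0]]
    print(label_roles(own, partner))  # ['speaking', 'listening', 'idle']
```

A learned system can pick up far subtler cues than loudness (turn-taking, back-channel sounds, overlapping speech); the hard threshold here only makes the concept concrete.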

INFP's workflow has two main steps. In the first, "motion-based head imitation", the system learns from conversation videos by analyzing people's facial expressions and head movements as they talk. This motion data is converted into a representation that the later animation stage can use, so that a still photo can reproduce the original person's movements.

The second step is "audio-guided motion generation", in which the system produces natural motion from audio input. The research team developed a "motion guider" that analyzes the audio of both parties in a conversation to create movement patterns for speaking and listening. A Diffusion Transformer then refines these patterns step by step, yielding smooth, realistic motion that closely matches the audio.
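"Refines these patterns step by step" refers to iterative denoising, the sampling procedure used by diffusion models. The following is a generic, deterministic DDIM-style sampling loop in plain Python, offered as a sketch under stated assumptions rather than ByteDance's implementation. It starts a sequence of 2-D motion codes (one per audio frame) from random noise and, over a fixed number of steps, moves it toward a denoiser's prediction of the clean motion. `toy_denoiser`, which maps a per-frame loudness value to a hypothetical (mouth opening, head nod) code, is a made-up stand-in for the trained Diffusion Transformer, and the cosine noise schedule is also an assumption.

```python
import math
import random


def toy_denoiser(x_t, audio_features, t):
    """Hypothetical stand-in for a trained Diffusion Transformer.

    Predicts the clean motion for every frame. This toy version ignores the
    noisy input x_t and the step t, and maps each frame's loudness to a
    2-D motion code: (mouth opening, head nod).
    """
    return [[0.8 * a, 0.1 * a] for a in audio_features]


def alpha_bar(step, total):
    """Cosine schedule: ~0 at step == total (pure noise), exactly 1 at step 0 (clean)."""
    return math.cos((step / total) * math.pi / 2) ** 2


def generate_motion(audio_features, steps=50, seed=0):
    """Deterministic DDIM-style sampling (eta = 0) of one motion code per frame."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise: one 2-D motion vector per audio frame.
    x = [[rng.gauss(0.0, 1.0) for _ in range(2)] for _ in audio_features]
    for t in range(steps, 0, -1):
        ab_t = alpha_bar(t, steps)
        ab_prev = alpha_bar(t - 1, steps)
        x0_hat = toy_denoiser(x, audio_features, t)  # predicted clean motion
        new_x = []
        for frame, clean in zip(x, x0_hat):
            new_frame = []
            for xi, ci in zip(frame, clean):
                # Noise implied by the current sample and the clean prediction.
                eps = (xi - math.sqrt(ab_t) * ci) / math.sqrt(1.0 - ab_t)
                # Move to the slightly less noisy level t - 1.
                new_frame.append(math.sqrt(ab_prev) * ci + math.sqrt(1.0 - ab_prev) * eps)
            new_x.append(new_frame)
        x = new_x
    return x


if __name__ == "__main__":
    loudness = [0.0, 0.5, 1.0]  # hypothetical per-frame audio features
    for frame in generate_motion(loudness, steps=20):
        print([round(v, 3) for v in frame])
```

Because this toy denoiser always returns the same prediction, the final step lands exactly on it. A trained model's prediction changes at every step as noise is removed, which is what allows it to produce varied yet audio-consistent motion.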

To train the system, the research team also built DyConv, a conversation dataset containing more than 200 hours of real dialogue videos. Compared with existing conversation datasets such as ViCo and RealTalk, DyConv offers richer emotional expression and higher video quality.

ByteDance says INFP outperforms existing tools in several key areas, particularly lip-sync accuracy, preservation of each person's facial features, and the diversity and naturalness of the generated movements. The system also performed equally well when generating videos in which only the other party is heard, that is, when the portrait is purely listening.

INFP currently accepts only audio input, but the research team is exploring extensions to image and text input. A longer-term goal is realistic full-body animation. Because this type of technology could be used to create fake videos and spread misinformation, the team plans to restrict access to the core technology to research institutions, similar to how Microsoft manages its advanced voice-cloning system.

INFP is part of ByteDance's broader AI strategy. Through popular apps such as TikTok and CapCut, ByteDance has a large platform on which to deploy AI innovations.

Project page: https://grisoon.github.io/INFP/

Highlights:

INFP lets static portraits "speak" from audio and automatically determines each participant's conversational role.

The system works in two steps: first, it extracts motion details from human conversations; second, it converts audio into natural motion patterns.

ByteDance's DyConv dataset contains more than 200 hours of high-quality conversation videos that help improve system performance.

The launch of INFP demonstrates ByteDance's innovative strength in artificial intelligence. The system has great potential, but its ethical risks must be handled carefully. Technological progress should serve the public interest and be used to benefit people.