LatentSync, a lip synchronization framework recently released by ByteDance, uses an audio-conditioned latent diffusion model built on Stable Diffusion to produce more accurate lip sync. Unlike previous methods, LatentSync takes an end-to-end approach and models the relationship between audio and video directly, without an intermediate motion representation. The framework uses Whisper to produce audio embeddings and adds the TREPA mechanism so that the output video stays temporally coherent while keeping the lips in sync.

ByteDance recently released a new lip synchronization framework called LatentSync, which uses an audio-conditioned latent diffusion model to achieve more accurate lip synchronization. The framework is based on Stable Diffusion and is optimized for temporal consistency.

Unlike previous methods based on pixel-space diffusion or two-stage generation, LatentSync works end to end: it needs no intermediate motion representation and models complex audio-visual relationships directly.

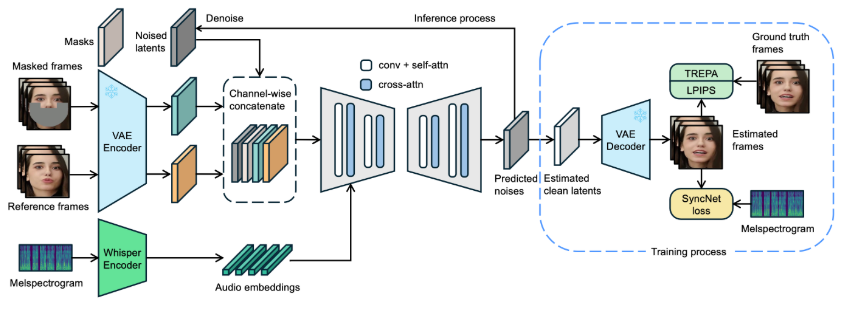

In LatentSync, Whisper first converts audio spectrograms into audio embeddings, which are fed into the U-Net through cross-attention layers. The reference frames and masked frames are concatenated channel-wise with the noised latents to form the U-Net input.
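The input assembly and audio conditioning described above can be sketched roughly as follows. This is a minimal NumPy illustration, not the project's code: the function names, the tensor shapes, the 384-dimensional audio embedding, and the single-head attention are all assumptions chosen for readability. The only borrowed fact is that Stable Diffusion latents have 4 channels, and the real model may pass additional channels that the article does not mention.

```python
import numpy as np

def build_unet_input(noised_latents, masked_latents, reference_latents):
    """Concatenate the three latent tensors along the channel axis (N, C, H, W layout)."""
    return np.concatenate([noised_latents, masked_latents, reference_latents], axis=1)

def cross_attention(visual_tokens, audio_embeddings, w_q, w_k, w_v):
    """Single-head cross-attention: queries from visual tokens, keys/values from audio."""
    q = visual_tokens @ w_q        # (N, T_visual, d)
    k = audio_embeddings @ w_k     # (N, T_audio, d)
    v = audio_embeddings @ w_v     # (N, T_audio, d)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability for softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v             # (N, T_visual, d)

rng = np.random.default_rng(0)

# Hypothetical sizes: batch of 2, 4-channel latents at 32x32 resolution.
batch, channels, height, width = 2, 4, 32, 32
noised = rng.standard_normal((batch, channels, height, width))
masked = rng.standard_normal((batch, channels, height, width))
reference = rng.standard_normal((batch, channels, height, width))

unet_input = build_unet_input(noised, masked, reference)
print(unet_input.shape)  # (2, 12, 32, 32)

# Hypothetical feature sizes for the attention layer.
visual_dim, audio_dim, attn_dim, audio_steps = 320, 384, 64, 50
visual_tokens = rng.standard_normal((batch, height * width, visual_dim))
audio_embeddings = rng.standard_normal((batch, audio_steps, audio_dim))
w_q = rng.standard_normal((visual_dim, attn_dim)) * 0.02
w_k = rng.standard_normal((audio_dim, attn_dim)) * 0.02
w_v = rng.standard_normal((audio_dim, attn_dim)) * 0.02

attended = cross_attention(visual_tokens, audio_embeddings, w_q, w_k, w_v)
print(attended.shape)  # (2, 1024, 64)
```

The point of the sketch is the data flow: visual conditioning enters through extra input channels, while audio conditioning enters through attention, where every spatial position can attend to every audio time step.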

During training, clean latents are estimated from the predicted noise in a single step and then decoded into clean frames. The model also introduces the Temporal REPresentation Alignment (TREPA) mechanism to improve temporal consistency, so that the generated video stays temporally coherent while preserving lip synchronization accuracy.
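The one-step estimate can be illustrated with the standard DDPM identity, which recovers the clean latent from a noisy latent and a noise prediction: x0 = (x_t - sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_bar_t). The article does not give LatentSync's noise schedule or exact formulation, so the sketch below assumes this textbook form; the function names and the value of `alpha_bar_t` are illustrative only.

```python
import numpy as np

def add_noise(clean_latents, noise, alpha_bar_t):
    """Forward diffusion: mix the clean latents with Gaussian noise at one timestep."""
    return np.sqrt(alpha_bar_t) * clean_latents + np.sqrt(1.0 - alpha_bar_t) * noise

def estimate_clean_latents(noisy_latents, predicted_noise, alpha_bar_t):
    """One-step estimate of the clean latents from a noise prediction."""
    return (noisy_latents - np.sqrt(1.0 - alpha_bar_t) * predicted_noise) / np.sqrt(alpha_bar_t)

rng = np.random.default_rng(0)
clean = rng.standard_normal((1, 4, 32, 32))   # hypothetical 4-channel latent
noise = rng.standard_normal(clean.shape)
alpha_bar_t = 0.5                             # assumed cumulative schedule value

noisy = add_noise(clean, noise, alpha_bar_t)

# With a perfect noise prediction the estimate matches the clean latents;
# a trained U-Net would supply an approximate prediction instead.
estimate = estimate_clean_latents(noisy, noise, alpha_bar_t)
print(np.allclose(estimate, clean))  # True
```

Because this estimate is available in one step, it can be decoded into frames during training, which is what lets losses be applied on the decoded frames rather than only on the latents.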

To demonstrate the technique, the project provides a series of sample videos that pair each original video with its lip-synced version, so users can compare the results directly.


The project also plans to open-source the inference code and checkpoints so that users can train and test the model themselves. To try inference, users only need to download the required model weight files. A complete data processing pipeline has also been designed, covering every step from video file processing to face alignment, so that users can get started easily.

Model project entrance: https://github.com/bytedance/LatentSync

Highlights:

LatentSync is an end-to-end lip synchronization framework based on an audio-conditioned latent diffusion model, with no need for an intermediate motion representation.

The framework uses Whisper to convert audio spectrograms into embeddings and adds the TREPA mechanism to improve temporal consistency during lip synchronization.

The project provides a series of sample videos and plans to open-source the related code and data processing pipeline to make use and training easier.

LatentSync's open-source release and ease of use should encourage further development and adoption of lip synchronization technology and open up new possibilities in video editing and content creation. Further updates to the project are worth watching for.