A Microsoft research team has released a new AI technology, the Large Action Model (LAM), which can operate Windows programs autonomously. It marks a new stage in which AI moves beyond dialogue and suggestions to actually carrying out tasks. Unlike traditional language models, LAM can interpret text, voice, and image inputs and convert them into detailed action plans. It can also adjust its strategy as conditions change, which lets it solve some problems that other AI systems cannot handle. The work widens the range of practical AI applications and points the way for future AI assistants.

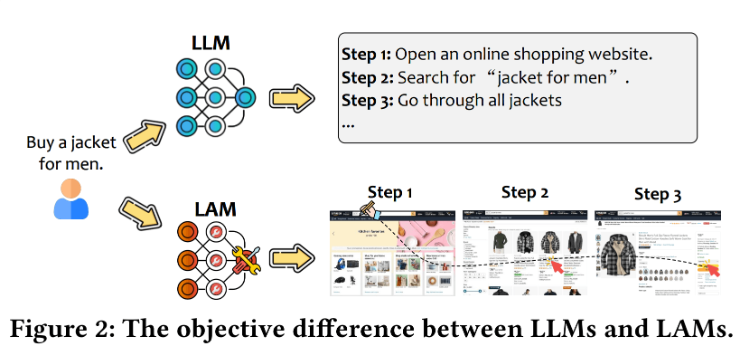

Microsoft's research team recently introduced an AI technology called the "Large Action Model" (LAM), marking a new stage in AI development. Unlike traditional language models such as GPT-4o, LAM can operate Windows programs autonomously: rather than only talking or offering suggestions, it can actually carry out tasks.

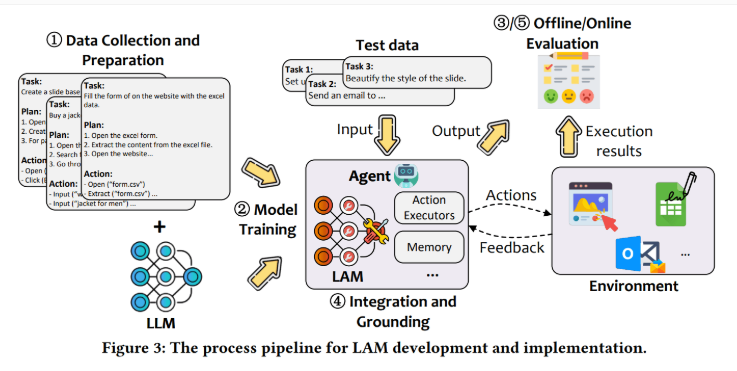

LAM's strength lies in its ability to understand a variety of user inputs, including text, speech, and images, and translate these requests into detailed step-by-step plans. It does not just draw up plans; it also adapts its actions to real-time conditions. Building a LAM involves four main steps. First, the model learns to break a task down into logical steps. Second, it learns from a more advanced AI system (GPT-4o) how to turn these plans into concrete actions. Third, the LAM independently explores new solutions, sometimes solving problems that other AI systems cannot handle. Finally, it is fine-tuned through a reward mechanism.
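To make the plan-then-act idea concrete, here is a toy sketch in Python. It is not Microsoft's implementation: `ToyEditor`, `plan`, and `run` are hypothetical names, the "application" is an in-memory object rather than a real Windows program, and the planner is hard-coded where a real LAM would use its trained model. What it illustrates is the loop described above: turn a request into ordered steps, execute them one at a time, and adapt when an action fails in the current state (here, by inserting a missing "select" step before retrying).

```python
from dataclasses import dataclass, field


@dataclass
class ToyEditor:
    """Hypothetical stand-in for an application window such as Word."""
    text: str = ""
    selection: str = ""
    bold: set[str] = field(default_factory=set)

    def execute(self, action: str, arg: str = "") -> bool:
        """Apply one UI-level action; return False if it cannot run in the current state."""
        if action == "type":
            self.text += arg
            return True
        if action == "select":
            if arg in self.text:
                self.selection = arg
                return True
            return False
        if action == "bold":
            if self.selection != arg:
                return False  # the target text is not selected, so the action fails
            self.bold.add(arg)
            return True
        return False  # unknown action


def plan(request: str) -> list[tuple[str, str]]:
    """Hypothetical planner: turn a request into ordered (action, argument) steps.

    A real LAM would generate the plan with a model. Here one request pattern
    is hard-coded, and the plan deliberately omits the 'select' step.
    """
    if request.startswith("bold "):
        return [("bold", request.removeprefix("bold "))]
    return []


def run(request: str, env: ToyEditor, max_steps: int = 10) -> list[tuple[str, str, bool]]:
    """Execute the plan step by step, adapting when an action fails."""
    pending = plan(request)
    trace: list[tuple[str, str, bool]] = []
    while pending and len(trace) < max_steps:
        action, arg = pending.pop(0)
        ok = env.execute(action, arg)
        trace.append((action, arg, ok))
        if not ok and action == "bold":
            # Adapt to the observed state: insert the missing precondition, then retry.
            pending[:0] = [("select", arg), ("bold", arg)]
    return trace


editor = ToyEditor(text="Hello world")
for step in run("bold world", editor):
    print(step)
print(editor.bold)
```

On "Hello world" the trace shows a failed `bold`, a successful `select`, and a successful `bold`, leaving "world" bolded. The `max_steps` cap stops the loop from retrying forever when a fix cannot succeed, for example when the word is not in the text.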

In the experiment, the research team built a LAM based on Mistral-7B and tested it in a Microsoft Word test environment. The model completed tasks successfully 71% of the time, compared with 63% for GPT-4o without visual information.

LAM is also faster, taking only 30 seconds per task compared with 86 seconds for GPT-4o. GPT-4o's success rate rises to 75.5% when it can process visual information, so in that setting it still leads on accuracy; overall, however, LAM holds a clear speed advantage while remaining competitive in success rate.
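The comparison can be checked with a few lines of arithmetic. This sketch only reuses the figures quoted in the article; the article does not say whether the 86-second time belongs to the text-only or the vision-enabled GPT-4o run, so the code treats it as a single number. The constant and function names are illustrative.

```python
# Figures as reported in the article for the Word test environment.
LAM_SUCCESS_PCT = 71.0
GPT4O_TEXT_SUCCESS_PCT = 63.0    # GPT-4o without visual input
GPT4O_VISION_SUCCESS_PCT = 75.5  # GPT-4o with visual input
LAM_SECONDS = 30.0
GPT4O_SECONDS = 86.0


def speedup(fast_seconds: float, slow_seconds: float) -> float:
    """How many times faster the first system finishes a task."""
    return slow_seconds / fast_seconds


def gap_points(a_pct: float, b_pct: float) -> float:
    """Difference in success rate, in percentage points (positive means a is better)."""
    return a_pct - b_pct


print(f"Speedup over GPT-4o: {speedup(LAM_SECONDS, GPT4O_SECONDS):.2f}x")  # 2.87x
print(f"vs. GPT-4o (text only): {gap_points(LAM_SUCCESS_PCT, GPT4O_TEXT_SUCCESS_PCT):+.1f} points")  # +8.0
print(f"vs. GPT-4o (with vision): {gap_points(LAM_SUCCESS_PCT, GPT4O_VISION_SUCCESS_PCT):+.1f} points")  # -4.5
```

Expressed this way, LAM is roughly 2.9 times faster, 8 points ahead of text-only GPT-4o, and 4.5 points behind GPT-4o with vision.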

To build the training data, the research team first collected 29,000 task-plan pairs from Microsoft documentation, wikiHow articles, and Bing searches. They then used GPT-4o to turn simple tasks into complex ones, expanding the dataset to 76,000 pairs, roughly 2.6 times the original size (an increase of about 160%). In the end, about 2,000 successful action sequences made it into the final training set.
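The growth of the dataset can be computed directly from the two counts. The sketch below uses only the figures quoted above (the function and constant names are illustrative). Computed this way, going from 29,000 to 76,000 pairs is an increase of about 162%, whereas a 150% increase would correspond to 72,500 pairs.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative growth from `before` to `after`, in percent."""
    return (after - before) / before * 100


INITIAL_PAIRS = 29_000   # collected from Microsoft docs, wikiHow, and Bing searches
EXPANDED_PAIRS = 76_000  # after GPT-4o turned simple tasks into complex ones

growth = percent_increase(INITIAL_PAIRS, EXPANDED_PAIRS)
print(f"Growth: {growth:.0f}%")                                    # 162%
print(f"Size multiple: {EXPANDED_PAIRS / INITIAL_PAIRS:.2f}x")     # 2.62x
print(f"Pairs implied by a 150% increase: {INITIAL_PAIRS * 2.5:,.0f}")  # 72,500
```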

Although LAM has shown its potential, the research team still faces challenges, including the risk of erroneous actions by the AI, regulatory issues, and technical limits on scaling it and adapting it to different applications. Even so, the researchers believe LAM represents an important shift in AI development, suggesting that AI assistants will be able to help people carry out practical tasks more actively.

Highlights:

LAM can operate Windows programs autonomously, moving beyond traditional AI that can only hold conversations.

⏱ In the Word test, LAM completed tasks successfully 71% of the time, higher than GPT-4o's 63%, and it ran faster.

The research team used a data-augmentation strategy to expand the set of task-plan pairs to 76,000, further improving the model's training.

The emergence of LAM signals a shift in AI from information provider to action executor, with potentially far-reaching effects on human-computer interaction and office automation. Although challenges remain, LAM shows great potential, and its wider application and further development across fields are worth watching.