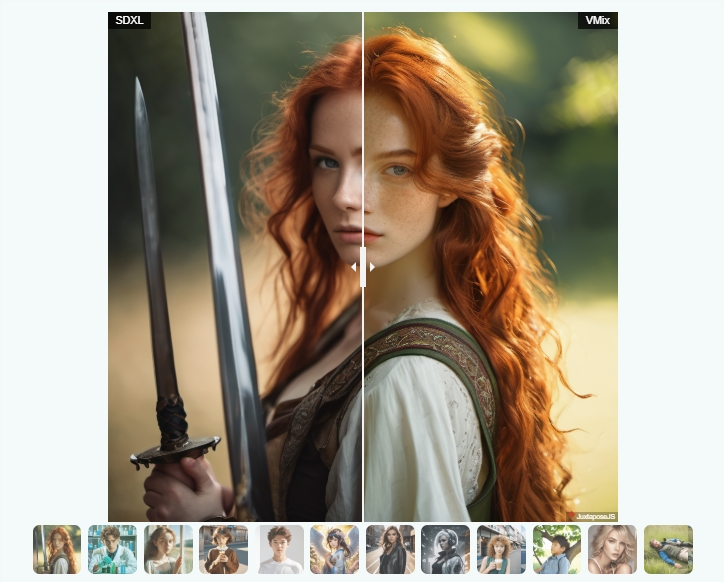

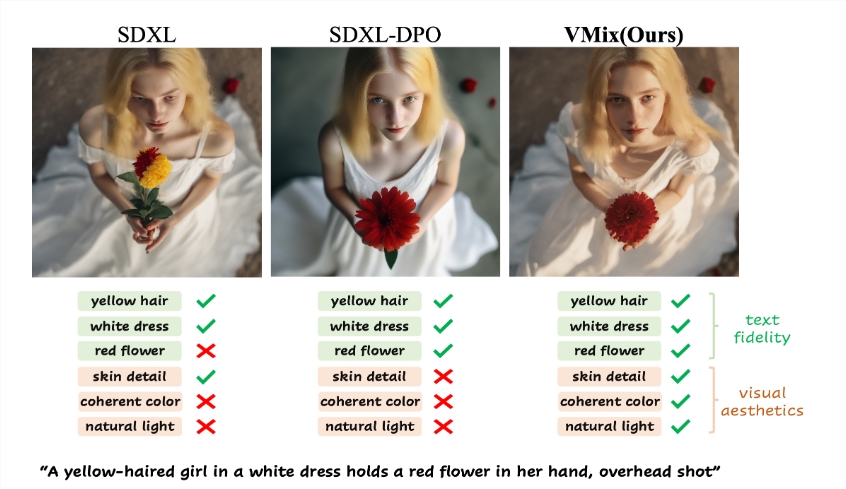

This article introduces VMix, a new diffusion model adapter proposed by a research team from ByteDance and the University of Science and Technology of China that aims to improve the quality and aesthetics of text-to-image generation. VMix uses a conditional control method to enhance the aesthetic performance of existing diffusion models while keeping images consistent with their text descriptions, without retraining the model. It decomposes text prompts into content and aesthetic descriptions, and integrates the aesthetic information into the image generation process through a value-mixed cross-attention mechanism, giving fine-grained control over image aesthetics. The adapter is compatible with a variety of community models and has broad application prospects.

In text-to-image generation, diffusion models have demonstrated extraordinary capabilities, but they still fall short when it comes to producing aesthetically pleasing images. Recently, a research team from ByteDance and the University of Science and Technology of China proposed a new adapter called "Cross-Attention Value Mixing Control" (VMix), which aims to improve the quality of generated images while remaining general across a wide range of visual concepts.

The core idea of the VMix adapter is to enhance the aesthetic performance of existing diffusion models by designing superior conditional control methods while ensuring alignment between images and text.

The adapter achieves its goal in two steps. First, it decomposes the input text prompt into a content description and an aesthetic description by initializing aesthetic embeddings. Second, during the denoising process, it incorporates the aesthetic conditions through value-mixed cross-attention, enhancing the aesthetic effect of the image while keeping the image consistent with the prompt. The flexibility of this approach allows VMix to be applied to multiple community models without retraining, thereby improving their visual performance.
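The second step can be illustrated with a minimal sketch. It is not the authors' implementation: the function name, the weight matrices, the extra output projection `w_zero`, and the assumption that the aesthetic embedding has the same number of tokens as the content embedding are all hypothetical choices made for illustration. The idea shown is that the attention map is computed from the content prompt only, and the aesthetic embedding enters solely through an additional value branch. Plain Python lists are used to keep the example dependency-free.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax_rows(m):
    """Numerically stable softmax applied to each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(x - peak) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def value_mixed_cross_attention(latents, content_emb, aes_emb,
                                w_q, w_k, w_v, w_v_aes, w_zero):
    """Illustrative value-mixed cross-attention (hypothetical sketch).

    latents:     (n_positions, d_model) image features, source of the queries
    content_emb: (n_tokens, d_model) content prompt embedding (keys, values)
    aes_emb:     (n_tokens, d_model) aesthetic embedding (extra values only);
                 sharing the token count with content_emb is an assumption
    w_zero:      output projection of the aesthetic branch; if it is all
                 zeros, the layer reduces to ordinary cross-attention
    """
    q = matmul(latents, w_q)
    k = matmul(content_emb, w_k)
    v = matmul(content_emb, w_v)
    v_aes = matmul(aes_emb, w_v_aes)

    # The attention map depends only on the image queries and content keys,
    # so the aesthetic condition cannot change where each position attends.
    scale = math.sqrt(len(q[0]))
    scores = [[s / scale for s in row] for row in matmul(q, transpose(k))]
    attn = softmax_rows(scores)

    base = matmul(attn, v)                            # standard branch
    aesthetic = matmul(matmul(attn, v_aes), w_zero)   # aesthetic value branch
    return [[b + a for b, a in zip(rb, ra)] for rb, ra in zip(base, aesthetic)]

# Tiny example: 3 image positions, 2 prompt tokens, feature size 2.
latents = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
content_emb = [[1.0, 0.5], [0.2, -0.3]]
aes_emb = [[0.3, 0.9], [-0.4, 0.1]]
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]

unchanged = value_mixed_cross_attention(
    latents, content_emb, aes_emb, identity, identity, identity, identity, zeros)
mixed = value_mixed_cross_attention(
    latents, content_emb, aes_emb, identity, identity, identity, identity, identity)
```

In this sketch, `unchanged` matches ordinary cross-attention over the content prompt because the aesthetic branch is projected through zeros, while `mixed` adds the aesthetic values using the same attention weights. Reusing the content-derived attention map is one way to read the claim that image-text alignment is preserved while aesthetics are adjusted.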

The researchers verified the effectiveness of VMix through a series of experiments, and the results showed that the method outperformed other state-of-the-art methods in aesthetic image generation. At the same time, VMix is also compatible with a variety of community modules (such as LoRA, ControlNet and IPAdapter), further broadening its application scope.

VMix's fine-grained control over aesthetics comes from the ability to adjust the aesthetic embeddings: a single-dimension aesthetic label can improve one specific aspect of the image, while the full set of positive aesthetic labels can improve overall image quality. In experiments, when the user provides a text description such as "a girl leaning against the window, breeze blowing, summer portrait, mid-length shot", the VMix adapter noticeably improves the visual appeal of the generated image.

The VMix adapter opens new directions for improving the aesthetic quality of text-to-image generation and is expected to realize its potential in a wider range of applications in the future.

Project page: https://vmix-diffusion.github.io/VMix/

Highlights:

The VMix adapter uses aesthetic embeddings to decompose text prompts into content and aesthetic descriptions, enhancing the quality of generated images.

This adapter is compatible with multiple community models, allowing users to improve image visual effects without retraining.

Experimental results show that VMix outperforms existing technologies in aesthetic generation and has broad application potential.

All in all, the VMix adapter offers an effective way to improve the artistry and visual appeal of AI-generated images. It also stands out for its compatibility and ease of use, opening up new directions for future image generation technology.