aiOla has released Whisper-NER, an open-source AI audio transcription model built on OpenAI's Whisper that can mask sensitive information in real time. By hiding personal details as it transcribes, the model reduces the risk of privacy leaks, making it a safer option for fields such as law, medicine and education. Whisper-NER transcribes audio across multiple languages and accents, and its configuration options let users decide which sensitive information to mask, which adds to its practicality and security. Because the model is open source, developers and researchers can also help improve and optimize it.

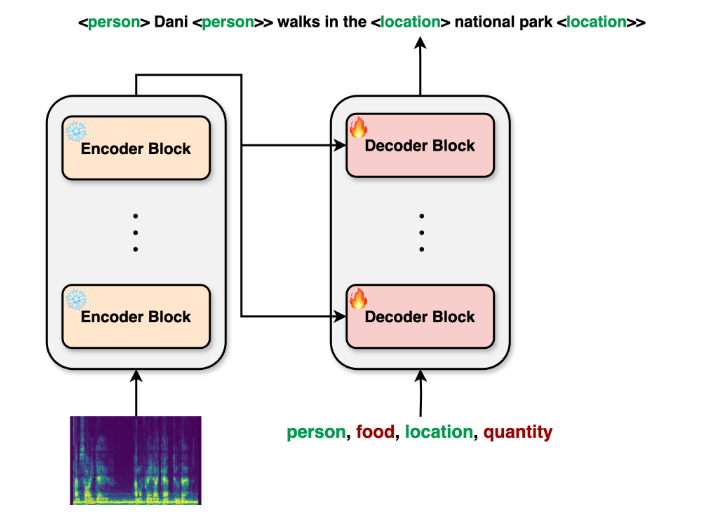

Recently, aiOla announced the launch of Whisper-NER, an open source AI audio transcription model that can mask sensitive information in real time during the transcription process.

aiOla's Whisper-NER is built on Whisper, OpenAI's industry-standard open-source model. Whisper-NER is itself fully open source and is available on Hugging Face and GitHub for enterprises, organizations and individuals to use, adapt, modify and deploy.

Masking is configurable: users choose whether to enable it. When masking is on, the model automatically identifies and hides sensitive information such as personal names, addresses and phone numbers, keeping it out of the transcribed text. This makes the model particularly useful in legal, medical, educational and other privacy-sensitive settings.
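Whisper-NER masks sensitive information during transcription itself, so the following sketch does not reproduce how the model works internally. It only illustrates the idea of configurable masking: given a transcript whose sensitive spans are already known, replace the spans of user-selected types with a placeholder. `EntitySpan`, `mask_entities` and the label names are hypothetical and are not part of Whisper-NER's API.

```python
from dataclasses import dataclass


@dataclass
class EntitySpan:
    """A hypothetical detected entity: character offsets plus an entity type."""
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str  # entity type, e.g. "person-name" (illustrative label)


def mask_entities(text: str, spans: list[EntitySpan], mask_types: set[str]) -> str:
    """Replace spans whose label is in mask_types with a <label> placeholder.

    Spans of unselected types are left untouched, which mirrors the
    "choose what to mask" configuration described above.
    """
    out = []
    cursor = 0
    for span in sorted(spans, key=lambda s: s.start):
        # Skip types the user did not ask to mask, and spans that overlap
        # a span that has already been replaced.
        if span.label not in mask_types or span.start < cursor:
            continue
        out.append(text[cursor:span.start])
        out.append(f"<{span.label}>")
        cursor = span.end
    out.append(text[cursor:])
    return "".join(out)


if __name__ == "__main__":
    transcript = "Call John Smith at 555-0100."
    detected = [EntitySpan(5, 15, "person-name"), EntitySpan(19, 27, "phone-number")]
    print(mask_entities(transcript, detected, {"person-name", "phone-number"}))
    print(mask_entities(transcript, detected, {"phone-number"}))
```

Passing a different `mask_types` set changes what is hidden without touching the transcript or the detected spans, which is one simple way to model per-user masking preferences.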

Beyond protecting sensitive information, the model transcribes accurately and efficiently across multiple languages and accents, which broadens its usefulness in multilingual environments. For example, a company handling customer feedback could accurately record and analyze audio from different regions to improve its service quality.

aiOla also encourages developers and researchers to build on the open-source model and extend its capabilities. Users can obtain the source code from GitHub and modify and optimize it for their own needs, which both improves the model's usability and helps drive innovation in AI technology.

The release reflects aiOla's emphasis on privacy protection in audio transcription and opens up new possibilities for AI applications. As more users and developers adopt it, we expect the model to reach a wider range of applications and have broader influence.

Whisper-NER is fully open source under the MIT license, so users can freely adopt, modify and deploy it, including in commercial applications. A demo on Hugging Face also lets users record a speech clip and have the model mask the specific words they type in the generated transcript.

Hugging Face: https://huggingface.co/aiola/whisper-ner-v1

GitHub: https://github.com/aiola-lab/whisper-ner

Highlights:

- aiOla's audio transcription model can mask sensitive information in real time, protecting user privacy.
- The model supports multiple languages and accents and suits fields such as law, medicine and education.
- Because the model is open source, users can customize and optimize it, promoting innovation in AI technology.

All in all, Whisper-NER's open-source availability and privacy-protecting features make it a notable advance in audio transcription. Its potential applications are broad, and it should open up further possibilities for AI development. Developers are welcome to participate and help improve the model.