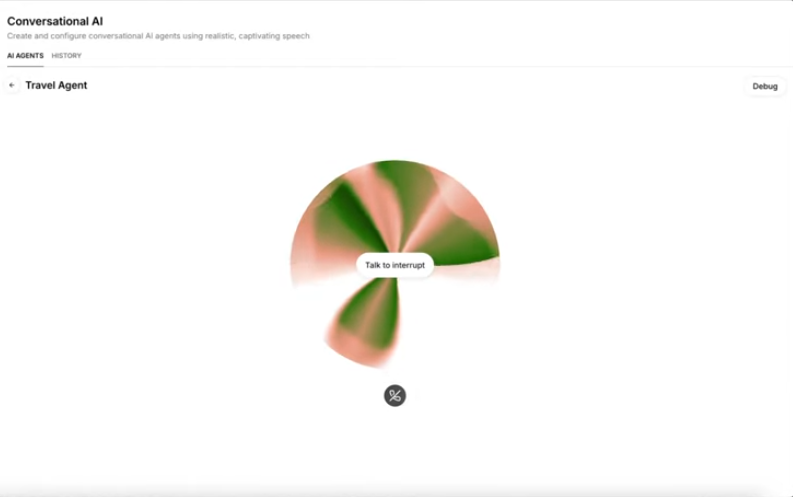

ElevenLabs, a startup focused on AI voice cloning and text-to-speech APIs, has launched a feature that lets users build complete conversational AI agents. On the ElevenLabs developer platform, users can customize many of an agent's parameters to suit their needs, such as voice intonation and reply length, which makes the agents more adaptable and practical. The move simplifies the process of creating conversational bots and gives developers more flexible tools for a wider range of use cases.

In the past, ElevenLabs has mainly offered speech and text-to-speech services. Sam Sklar, the company's head of growth, told TechCrunch that many customers were already using the platform to build conversational AI agents, but that integrating knowledge bases and handling customer interruptions were the biggest challenges. ElevenLabs therefore decided to build a complete conversational bot pipeline to make the process easier.
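A voice agent pipeline typically chains three stages: speech-to-text, a language model, and text-to-speech. The sketch below shows that loop in plain Python under stated assumptions: `VoicePipeline`, `handle_turn`, and the stage signatures are hypothetical names, not ElevenLabs APIs, and the stages are stubbed with toy lambdas so the code runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stage signatures (stand-ins, not ElevenLabs APIs).
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[List[Dict[str, str]]], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoicePipeline:
    """Toy speech-in, speech-out loop: STT -> LLM -> TTS."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    history: List[Dict[str, str]] = field(default_factory=list)

    def handle_turn(self, audio_in: bytes) -> bytes:
        # 1. Transcribe the caller's audio into text.
        user_text = self.stt(audio_in)
        self.history.append({"role": "user", "content": user_text})
        # 2. Generate a reply from the full conversation so far.
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        # 3. Turn the reply back into audio for playback.
        return self.tts(reply)


# Usage with stub stages: "audio" is just UTF-8 bytes here.
pipeline = VoicePipeline(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda history: f"You said: {history[-1]['content']}",
    tts=lambda text: text.encode("utf-8"),
)
out = pipeline.handle_turn(b"hello")
```

Keeping each stage behind a plain callable makes it easy to swap one component without touching the loop. A production system would also need streaming audio and a way to stop playback when the caller starts speaking, which this sketch omits.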

Users can start building a conversational agent by logging into their ElevenLabs account and either selecting a template or creating a new project. They can then set the agent's primary language, its first message, and a system prompt that defines the agent's personality.
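These settings can be pictured as a small configuration object that produces the opening of a conversation. The sketch assumes a hypothetical `AgentConfig` class and an OpenAI-style list of role/content messages; it is an illustration only and does not reflect ElevenLabs' actual schema or API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical agent settings; field names are illustrative only."""
    language: str       # primary language, e.g. "en"
    first_message: str  # what the agent says when the call opens
    system_prompt: str  # instructions that shape the agent's personality

    def opening_messages(self) -> List[Dict[str, str]]:
        # The system prompt steers every later reply; the first message is
        # spoken by the agent before the user says anything.
        return [
            {
                "role": "system",
                "content": f"{self.system_prompt} Always reply in: {self.language}.",
            },
            {"role": "assistant", "content": self.first_message},
        ]


support_agent = AgentConfig(
    language="en",
    first_message="Hi, thanks for calling. How can I help?",
    system_prompt="You are a friendly, concise support agent.",
)
messages = support_agent.opening_messages()
```

Making the config immutable (`frozen=True`) means one agent definition can be shared safely across many concurrent calls.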

Developers also need to choose a large language model (such as Gemini, GPT, or Claude), the response temperature (which controls how creative the replies are), and a limit on token usage.
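Temperature is a general property of LLM sampling rather than something specific to ElevenLabs: the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. Lower values concentrate probability on the top choice, while higher values spread it across alternatives, which is why higher temperatures read as more creative. The function below is a generic illustration with made-up logits; the token-usage limit, by contrast, simply caps how many tokens a reply may contain.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
cool = softmax_with_temperature(logits, 0.2)  # sharp: about 0.99 on the first token
warm = softmax_with_temperature(logits, 2.0)  # flat: about 0.50 on the first token
```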

Users can also add knowledge-base content, such as files, URLs, or blocks of text, to extend what the agent knows, and they can connect their own custom large language models to the agent. ElevenLabs' SDK supports Python, JavaScript, React, and Swift, and the company also offers a WebSocket API for further customization.
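The article does not say how ElevenLabs searches a knowledge base. As a generic illustration of the idea, the sketch below ranks text blocks by word overlap with the user's question and places the best match in front of the question in the prompt. Word overlap is a deliberately simple stand-in for the embedding-based search most retrieval systems use, and `retrieve` and `build_prompt` are hypothetical helpers.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    # Lowercased alphanumeric words; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents that share the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: List[str]) -> str:
    # Put the retrieved context in front of the question for the LLM.
    context = "\n".join(retrieve(query, documents))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"


knowledge_base = [
    "Our store opens at 9am and closes at 6pm on weekdays.",
    "Refunds are processed within 5 business days.",
    "Shipping is free for orders over $50.",
]
prompt = build_prompt("When are refunds processed?", knowledge_base)
```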

Users can also specify what data to collect, such as the name and email address of the customer who spoke with the agent, and describe in natural language the criteria for judging whether a call was successful.
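Data collection can be thought of as a list of named fields pulled out of a call transcript. The article does not describe how ElevenLabs extracts these values, so this sketch swaps in regular expressions as a simple stand-in for model-based extraction. `DataField` and `collect` are hypothetical names, and judging call success against natural-language criteria is not covered here.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class DataField:
    """Hypothetical description of one value to capture from a call."""
    name: str
    description: str  # plain-language note on what the field means
    pattern: str      # regex with one capture group (stand-in for a model)


def collect(transcript: str, fields: List[DataField]) -> Dict[str, Optional[str]]:
    # Return the first match for each field, or None if it never came up.
    results: Dict[str, Optional[str]] = {}
    for f in fields:
        match = re.search(f.pattern, transcript)
        results[f.name] = match.group(1) if match else None
    return results


fields = [
    DataField("name", "The caller's first name", r"my name is (\w+)"),
    DataField("email", "The caller's email address", r"([\w.+-]+@[\w-]+(?:\.[\w-]+)+)"),
    DataField("order_id", "The caller's order number", r"order #(\d+)"),
]
transcript = "Caller: Hi, my name is Dana and my email is dana.lee@example.com."
collected = collect(transcript, fields)
```

Returning `None` for fields that never came up lets a downstream system tell "not mentioned" apart from an empty answer.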

For the new conversational AI product, ElevenLabs is using its existing text-to-speech pipeline while also developing speech-to-text capabilities. The company does not currently offer a standalone speech-to-text API, but it may launch one in the future, which would put it in competition with the speech-to-text APIs of Google, Microsoft, and Amazon, as well as those of OpenAI (Whisper), AssemblyAI, Deepgram, Speechmatics, and Gladia.

The company plans to raise a new funding round at a valuation of more than $3 billion. It competes with other voice AI startups that are also building conversational agents, such as Vapi and Retell, and it will also go up against OpenAI's Realtime API. ElevenLabs believes its customization options and the flexibility to switch between models will give it an edge over the competition.

Highlights:

- ElevenLabs has launched a feature for building conversational AI agents that lets users customize multiple variables.
- Users can add knowledge bases to enhance an agent's capabilities and connect their own custom large language models.
- ElevenLabs plans to raise funding at a valuation of more than $3 billion as it competes with rivals such as OpenAI.

Overall, ElevenLabs' new capabilities give developers considerable flexibility and convenience in building custom conversational AI agents, which could strengthen the company's position in a highly competitive AI market. How ElevenLabs develops from here is worth watching.