Artificial intelligence has made great progress in multi-modal processing, but high-performance models often require huge computing resources, which limits their use on edge devices. In response, OpenBMB launched MiniCPM-o2.6, an efficient multi-modal model that aims to bridge the gap between advanced AI and resource-constrained devices. MiniCPM-o2.6 has 8 billion parameters, integrates vision, speech and language processing modules, and is optimized to run on devices such as smartphones and tablets, giving developers and enterprises a more convenient way to deploy AI solutions.

Artificial intelligence technology has made significant progress in recent years, but balancing computational efficiency with versatility remains a challenge. Many advanced multi-modal models, such as GPT-4, require large amounts of computing resources, which restricts them to high-end servers and makes it difficult to use them on edge devices such as smartphones and tablets. In addition, real-time tasks such as video analysis or speech-to-text still face technical barriers, highlighting the need for efficient and flexible AI models that can operate under limited hardware conditions.

To solve these problems, OpenBMB recently launched MiniCPM-o2.6, a model with 8 billion parameters that supports vision, speech and language processing and can run efficiently on edge devices such as smartphones, tablets and iPads. MiniCPM-o2.6 adopts a modular design and integrates several components:

- SigLip-400M for visual understanding.

- Whisper-300M for multilingual speech processing.

- ChatTTS-200M for conversational speech generation.

- Qwen2.5-7B for advanced text understanding.
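The component sizes listed above add up to roughly the 8 billion parameters quoted for the full model. The sketch below checks that arithmetic. It assumes the suffix in each component name (400M, 300M, 200M, 7B) is a rounded parameter count, so the total is only approximate, and the names `COMPONENTS_M` and `total_params_billion` are illustrative.

```python
# Nominal parameter counts, in millions, read off the component names above.
# These are rounded labels (an assumption), not exact counts.
COMPONENTS_M = {
    "SigLip-400M": 400,
    "Whisper-300M": 300,
    "ChatTTS-200M": 200,
    "Qwen2.5-7B": 7000,
}


def total_params_billion(components_m):
    """Sum nominal component sizes (in millions) and return billions."""
    return sum(components_m.values()) / 1000


if __name__ == "__main__":
    total = total_params_billion(COMPONENTS_M)
    print(f"Nominal total: {total:.1f}B parameters")  # prints 7.9B
```

The nominal total of 7.9B rounds to the 8B figure; any connector layers between the modules are not counted here.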

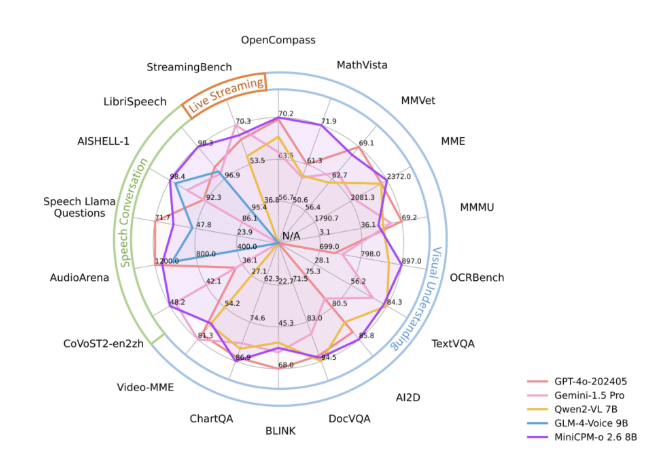

The model achieved an average score of 70.2 on the OpenCompass benchmark, surpassing GPT-4V on visual tasks. Its multi-language support and efficient operation on consumer-grade devices make it practical in a variety of application scenarios.

The following technical features underpin MiniCPM-o2.6's performance:

- Parameter optimization: Despite its 8 billion parameters, the model runs under inference frameworks such as llama.cpp and vLLM, which reduce resource requirements while maintaining accuracy.

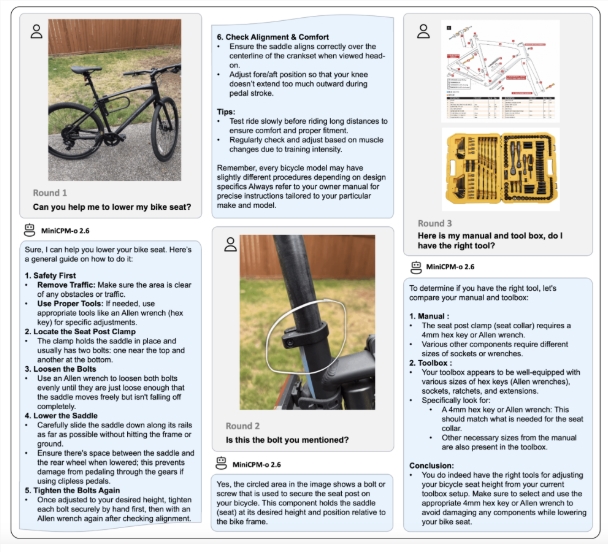

- Multi-modal processing: Supports images up to 1344×1344 resolution and includes OCR capability.

- Streaming media support: Supports continuous video and audio processing, making it applicable to real-time monitoring and live broadcast scenarios.

- Voice features: Provides bilingual speech understanding, voice cloning and emotion control to promote natural real-time interaction.

- Easy to integrate: Compatible with platforms such as Gradio, which simplifies deployment; the model is suitable for commercial applications with fewer than one million daily active users.
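To give a sense of why reduced-precision weights matter on edge devices, here is a rough back-of-the-envelope estimate of weight storage for an 8-billion-parameter model at different precisions. The article does not say which precision or quantization scheme is used in practice, so the formats below are assumptions chosen for illustration, and the figures cover weights only (no activations, cache, or per-block quantization metadata).

```python
PARAMS = 8_000_000_000  # 8 billion parameters, as stated in the article

# Bits per weight for some common storage formats (illustrative assumption).
# The 8-bit and 4-bit rows are idealized: real quantized files carry extra
# scale metadata, so actual sizes are somewhat larger.
BITS_PER_WEIGHT = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params, bits_per_weight):
    """Return weight storage in gigabytes (10^9 bytes), weights only."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: {weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions the weights shrink from 32 GB at 32-bit precision to about 4 GB at 4 bits, which is the range where tablet-class memory becomes plausible.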

These features let developers and enterprises deploy complex AI solutions with MiniCPM-o2.6 without relying on large-scale infrastructure.

MiniCPM-o2.6 performs well across several areas. It surpasses GPT-4V on visual tasks; in speech processing it supports real-time Chinese and English dialogue, emotion control and voice cloning; and it offers strong natural language interaction. Continuous video and audio processing makes it suitable for real-time translation and interactive learning tools, and its OCR capability delivers high accuracy in tasks such as document digitization.
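For OCR-style workloads such as document digitization, inputs like scanned pages are often larger than the 1344×1344 resolution mentioned earlier. A minimal sketch of one way to pre-scale image dimensions follows. It assumes the limit can be treated as a total pixel budget (1344 × 1344 pixels) rather than a per-side cap, which the article does not specify, and `fit_to_pixel_budget` is a hypothetical helper, not part of the model's API.

```python
import math

# Pixel budget implied by the 1344x1344 figure (assumption: total pixels).
MAX_PIXELS = 1344 * 1344


def fit_to_pixel_budget(width, height, max_pixels=MAX_PIXELS):
    """Return (width, height) scaled down, if needed, to fit max_pixels.

    The aspect ratio is preserved up to integer rounding; images already
    within the budget are returned unchanged.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if width * height <= max_pixels:
        return width, height
    # Scale both sides by the same factor so the area shrinks to the budget.
    scale = math.sqrt(max_pixels / (width * height))
    new_w = max(1, math.floor(width * scale))
    new_h = max(1, math.floor(height * scale))
    return new_w, new_h


if __name__ == "__main__":
    print(fit_to_pixel_budget(800, 600))    # already within budget
    print(fit_to_pixel_budget(2688, 2688))  # scaled to (1344, 1344)
```

The returned size can then be passed to any image library's resize call before the image is handed to the model.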

The launch of MiniCPM-o2.6 is an important development in artificial intelligence technology, addressing the long-standing tension between resource-intensive models and edge device compatibility. By combining advanced multi-modal capabilities with efficient operation on edge devices, OpenBMB has created a powerful and accessible model. As artificial intelligence becomes increasingly important in daily life, MiniCPM-o2.6 shows how the gap between performance and practicality can be narrowed, allowing developers and users across industries to make effective use of cutting-edge technology.

Model: https://huggingface.co/openbmb/MiniCPM-o-2_6

Highlights:

- MiniCPM-o2.6 is a multi-modal model with 8 billion parameters that can run efficiently on edge devices and supports vision, speech and language processing.

- The model performed well on the OpenCompass benchmark, surpassed GPT-4V on visual tasks, and has multi-language processing capabilities.

- MiniCPM-o2.6 offers real-time processing, voice cloning and emotion control, and is suitable for innovative applications in education, healthcare and other industries.

All in all, MiniCPM-o2.6 marks a significant step in the application of AI technology. It combines strong multi-modal capabilities with the low resource consumption that edge devices require, paving the way for wider deployment of AI on such devices.