On January 20, 2025, DeepSeek released DeepSeek-R1, its first reasoning model trained with reinforcement learning. The model performs comparably to OpenAI-o1-1217, and in some cases better, on multiple benchmarks. Rather than relying on reinforcement learning alone, DeepSeek-R1 starts from the DeepSeek-V3-Base model and adds multi-stage training and cold-start data. This addresses the poor readability and language mixing seen in DeepSeek-R1-Zero, the variant trained with reinforcement learning only, and yields a significant performance improvement. The model is open source and its API is competitively priced, giving users a convenient and economical option.

DeepSeek announced DeepSeek-R1 on January 20, 2025, as its first reasoning model trained through reinforcement learning (RL). It achieves performance comparable to OpenAI-o1-1217 on multiple reasoning benchmarks. DeepSeek-R1 builds on the DeepSeek-V3-Base model and uses multi-stage training and cold-start data to improve its reasoning capabilities.

DeepSeek researchers first developed DeepSeek-R1-Zero, a model trained entirely through large-scale reinforcement learning, with no supervised fine-tuning as a preliminary step. DeepSeek-R1-Zero performs strongly on reasoning benchmarks. For example, on AIME 2024 its pass@1 score rose from 15.6% to 71.0% over the course of RL training. However, DeepSeek-R1-Zero also has problems such as poor readability and language mixing.
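
The pass@1 figures quoted above can be illustrated with a small calculation. The following is a minimal sketch, assuming pass@1 is estimated by sampling several answers per problem and averaging their correctness; the article does not spell out the evaluation protocol, and the function name `pass_at_1` and the input layout are hypothetical choices made for this example.

```python
from statistics import mean


def pass_at_1(results):
    """Estimate pass@1 from sampled answers.

    `results` holds one list per problem; each inner list contains one
    boolean per sampled answer (True = correct). This layout is an
    assumption made for illustration only.
    """
    if not results or any(len(samples) == 0 for samples in results):
        raise ValueError("every problem needs at least one sampled answer")
    # Fraction of correct samples for each problem.
    per_problem = [mean(1.0 if ok else 0.0 for ok in samples) for samples in results]
    # Average those fractions over all problems.
    return mean(per_problem)


# Three toy problems with four sampled answers each.
toy_results = [
    [True, True, False, False],    # half of the samples are correct
    [True, True, True, True],      # always solved
    [False, False, False, False],  # never solved
]
print(f"pass@1 = {pass_at_1(toy_results):.1%}")
```

With a single sample per problem, this reduces to the plain fraction of problems answered correctly on the first attempt.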

To solve these problems and further improve reasoning performance, the DeepSeek team developed DeepSeek-R1, which introduces multi-stage training and cold-start data before reinforcement learning. Specifically, the researchers first collected thousands of cold-start examples to fine-tune the DeepSeek-V3-Base model. They then applied reasoning-oriented reinforcement learning, as in the training of DeepSeek-R1-Zero. When the reinforcement learning process was close to convergence, they created new supervised fine-tuning data by rejection sampling from the reinforcement learning checkpoint, combined it with DeepSeek-V3's supervised data in areas such as writing, factual question answering, and self-cognition, and then retrained the DeepSeek-V3-Base model. Finally, they performed additional reinforcement learning on the fine-tuned checkpoint using prompts from all scenarios.
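
The rejection sampling step in this pipeline can be sketched in a few lines. This is only an illustration under stated assumptions: the article does not describe the acceptance criteria or sample counts, so `generate`, `is_acceptable`, and `samples_per_prompt` are hypothetical placeholders, and the toy generator and checker stand in for a real model checkpoint and a real answer verifier.

```python
def rejection_sample(prompts, generate, is_acceptable, samples_per_prompt=4):
    """Keep only the (prompt, response) pairs that pass a filter.

    `generate(prompt, i)` returns the i-th sampled response for a prompt and
    `is_acceptable(prompt, response)` decides whether to keep it. Both are
    hypothetical callables supplied by the caller.
    """
    kept = []
    for prompt in prompts:
        for i in range(samples_per_prompt):
            response = generate(prompt, i)
            if is_acceptable(prompt, response):
                kept.append((prompt, response))
    return kept


def toy_generate(prompt, i):
    """Toy stand-in for a checkpoint: every second sample is off by one."""
    a, b = prompt
    return a + b + (i % 2)


def toy_check(prompt, response):
    """Toy stand-in for a verifier: accept only the exact sum."""
    a, b = prompt
    return response == a + b


# Each prompt is a pair of integers whose sum is the expected answer.
sft_data = rejection_sample([(1, 2), (3, 4)], toy_generate, toy_check)
print(len(sft_data), "accepted samples")
```

The accepted pairs play the role of the new supervised fine-tuning data; in the pipeline described above they would then be merged with the existing supervised data before retraining.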

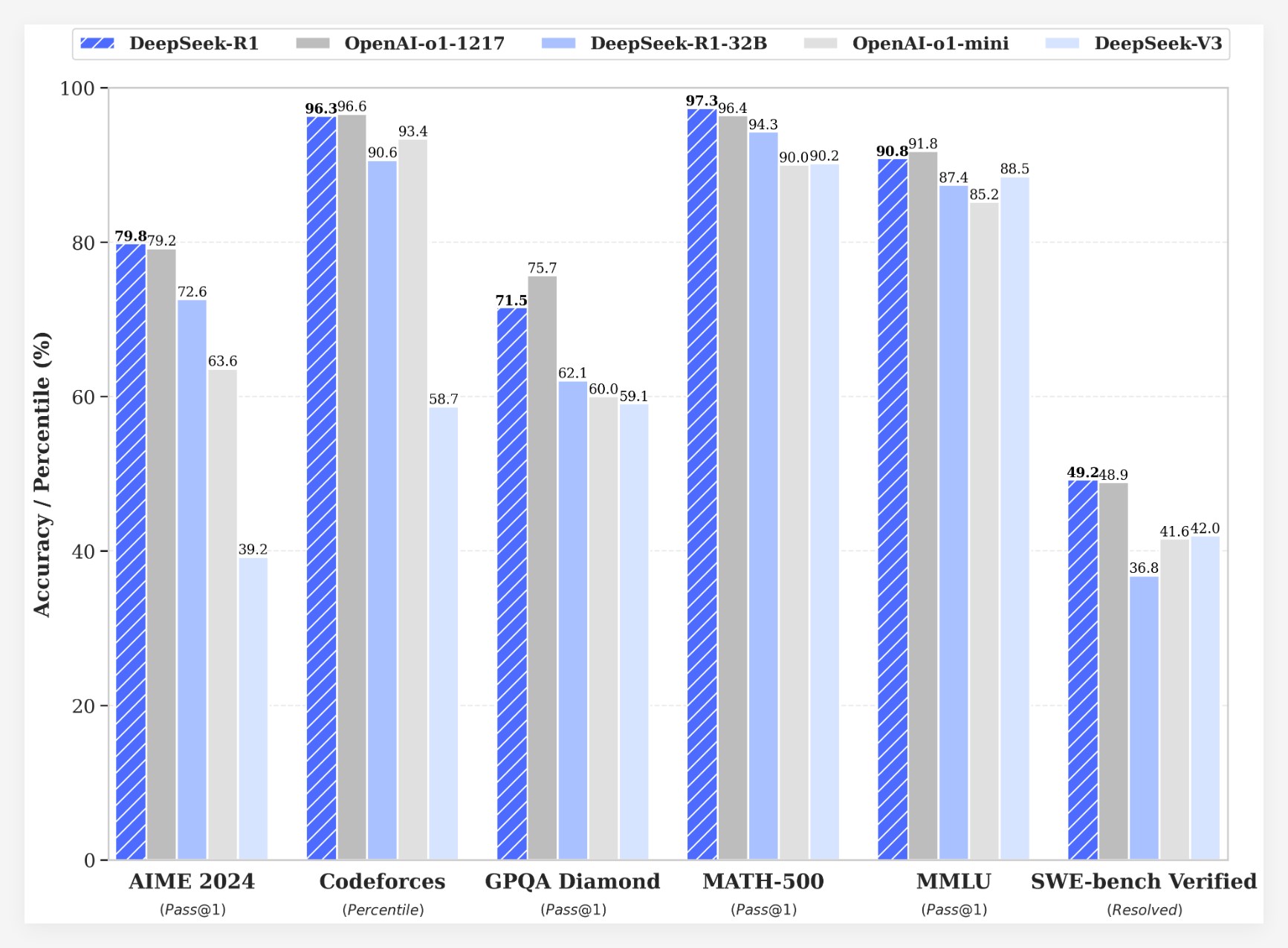

DeepSeek-R1 achieves impressive results on multiple benchmarks:

• On AIME 2024, DeepSeek-R1 reached a pass@1 score of 79.8%, slightly higher than OpenAI-o1-1217.

• On MATH-500, DeepSeek-R1 reached a pass@1 score of 97.3%, on par with OpenAI-o1-1217.

• On competitive programming tasks, DeepSeek-R1 achieved an Elo rating of 2029 on Codeforces, surpassing 96.3% of human participants.

• On knowledge benchmarks such as MMLU, MMLU-Pro, and GPQA Diamond, DeepSeek-R1 scored 90.8%, 84.0%, and 71.5% respectively, significantly better than DeepSeek-V3.

• DeepSeek-R1 also performs well on other tasks such as creative writing, general Q&A, editing, and summarization.

DeepSeek also explored distilling the reasoning capabilities of DeepSeek-R1 into smaller models. It found that distilling directly from DeepSeek-R1 performed better than applying reinforcement learning to a small model. This suggests that the reasoning patterns discovered by large base models are critical to improving reasoning capabilities. DeepSeek has open-sourced DeepSeek-R1-Zero, DeepSeek-R1, and six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 and based on Qwen and Llama. The launch of DeepSeek-R1 marks significant progress in using reinforcement learning to improve the reasoning capabilities of large language models.

Cost advantage

In terms of cost, DeepSeek-R1 is priced very competitively. API access costs $0.14 per million input tokens on a cache hit, $0.55 per million input tokens on a cache miss, and $2.19 per million output tokens. This pricing is more attractive than that of similar products, and users have described it as a "game changer". The official website and API are now live, and DeepThink can be tried at https://chat.deepseek.com.
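
To make the pricing concrete, here is a minimal sketch that turns the per-million-token rates quoted above into a cost estimate for a given workload. The function name `estimate_cost_usd` and the example token counts are hypothetical; only the three prices come from the article.

```python
# Prices in USD per one million tokens, as quoted in the article.
PRICE_INPUT_CACHE_HIT = 0.14
PRICE_INPUT_CACHE_MISS = 0.55
PRICE_OUTPUT = 2.19
TOKENS_PER_PRICE_UNIT = 1_000_000


def estimate_cost_usd(cache_hit_tokens, cache_miss_tokens, output_tokens):
    """Return the estimated API cost in USD for the given token counts."""
    if min(cache_hit_tokens, cache_miss_tokens, output_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        cache_hit_tokens * PRICE_INPUT_CACHE_HIT
        + cache_miss_tokens * PRICE_INPUT_CACHE_MISS
        + output_tokens * PRICE_OUTPUT
    )
    return total / TOKENS_PER_PRICE_UNIT


# Hypothetical workload: 500k cached input tokens, 500k uncached input
# tokens, and 200k output tokens.
cost = estimate_cost_usd(500_000, 500_000, 200_000)
print(f"Estimated cost: ${cost:.3f}")  # prints "Estimated cost: $0.783"
```

In this example the output tokens account for more than half of the total even though they are the smallest count, because the output rate is the highest of the three.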

The release of DeepSeek-R1 sparked lively discussion in the community. Many users welcomed the model's open-source release and low cost, saying it gives developers more choice and freedom. Some users, however, raised questions about the model's context window size and hope that future versions will improve on it.

The DeepSeek team stated that it will continue to improve the model's performance and user experience, and that it plans to launch more features in the future, including advanced data analysis, to meet user expectations for AGI (Artificial General Intelligence).

The launch of DeepSeek-R1 not only demonstrates the potential of reinforcement learning for improving the reasoning capabilities of large language models, but also opens new directions for the AI field. Its open-source release and competitive pricing should further promote the adoption and application of AI technology.