This article introduces LLM2CLIP, a method developed by Microsoft in collaboration with Tongji University. It aims to improve the visual encoder of the CLIP model and to address CLIP's limitations in handling long, complex text descriptions. LLM2CLIP significantly enhances the model's ability to match images and text by integrating large language models (LLMs) and introducing a "caption contrastive fine-tuning" technique. Experiments on multiple datasets show that in image-to-text and text-to-image retrieval tasks, on both long and short texts, the method surpasses the traditional CLIP and EVA models and shows strong cross-lingual ability.

CLIP (Contrastive Language-Image Pre-training) is an important multimodal foundation model. It aligns visual and text signals in a shared feature space by applying a contrastive learning loss to large-scale image-text pairs.
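To make the contrastive loss concrete, here is a minimal sketch in plain Python of a symmetric image-text contrastive objective of the kind CLIP is known for. It is an illustration under stated assumptions, not code from the LLM2CLIP repository: the function names are hypothetical, the embeddings are toy lists, and the temperature of 0.07 is only an illustrative value.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for numerical stability
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of matched image-text pairs.

    image_embs[i] and text_embs[i] are assumed to describe the same pair;
    every other combination in the batch is treated as a negative.
    """
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    # logits[i][j] = scaled cosine similarity between image i and text j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    logits_transposed = [list(col) for col in zip(*logits)]
    image_to_text = cross_entropy_diagonal(logits)
    text_to_image = cross_entropy_diagonal(logits_transposed)
    return 0.5 * (image_to_text + text_to_image)
```

The loss is small when each image is most similar to its own text and large when the pairs are mismatched, which is what pulls the two modalities into one shared space.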

As a retriever, CLIP supports tasks such as zero-shot classification, detection, segmentation, and image-text retrieval. As a feature extractor, it dominates nearly all cross-modal representation tasks, such as image understanding, video understanding, and text-to-image or text-to-video generation. CLIP's strength is its ability to connect images to natural language and capture human knowledge, which comes from training on large-scale web data containing detailed text descriptions.

However, CLIP has limitations in dealing with long, complex text descriptions. To overcome this, researchers from Microsoft and Tongji University proposed LLM2CLIP, which aims to enhance visual representation learning by integrating large language models (LLMs). The method replaces the original CLIP text encoder outright and uses the rich knowledge of LLMs to improve CLIP's visual encoder. The study found, however, that directly plugging an LLM into CLIP degrades performance, so this problem has to be solved first.

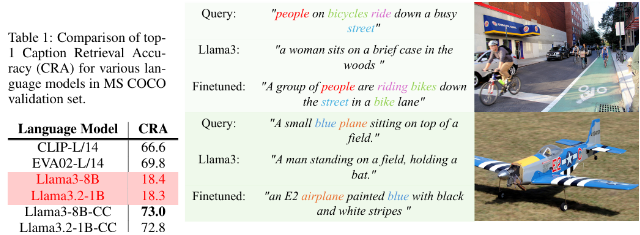

LLM2CLIP addresses this by introducing "caption contrastive fine-tuning", which greatly improves the LLM's ability to distinguish between image captions and thereby yields a significant performance improvement.
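The article does not spell out the fine-tuning objective. One common way to write a contrastive loss over captions, assumed here for illustration only, treats two captions of the same image as a positive pair and captions of other images in the batch as negatives. With $u_i$ and $v_i$ the LLM embeddings of two captions for image $i$, $\operatorname{sim}$ the cosine similarity, $\tau$ a temperature, and $N$ the batch size (all symbols are notation introduced for this sketch):

```latex
\mathcal{L}_{\text{caption}}
  = -\frac{1}{N} \sum_{i=1}^{N}
    \log \frac{\exp\left(\operatorname{sim}(u_i, v_i) / \tau\right)}
              {\sum_{j=1}^{N} \exp\left(\operatorname{sim}(u_i, v_j) / \tau\right)}
```

Minimizing this pushes embeddings of captions for the same image together and embeddings of captions for different images apart, which is the kind of separation the fine-tuning step is meant to give the LLM's output features.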

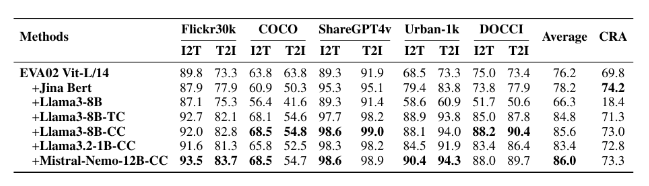

The researchers ran fine-tuning experiments on dataset combinations of different sizes: a small one (CC-3M), a medium one (CC-3M and CC-12M), and a large one (CC-3M, CC-12M, YFCC-15M, and Recaption-1B). The results show that models trained with LLM2CLIP outperform the traditional CLIP and EVA models in image-to-text and text-to-image retrieval tasks.
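Retrieval quality of this kind is commonly reported as Recall@K: the fraction of queries whose correct match appears among the top K candidates. The article does not say which metric was used, so the following is only a sketch of that common metric; the function name and the toy similarity matrix are hypothetical.

```python
def recall_at_k(similarity, k):
    """Fraction of queries whose correct candidate ranks in the top k.

    similarity[i][j] is the score between query i and candidate j, and the
    correct candidate for query i is assumed to sit at index i.
    """
    hits = 0
    for i, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy image-to-text scores: rows are images, columns are captions.
image_to_text = [
    [0.9, 0.1, 0.0],
    [0.8, 0.3, 0.1],
    [0.2, 0.1, 0.7],
]
# Text-to-image retrieval uses the same scores with rows and columns swapped.
text_to_image = [list(col) for col in zip(*image_to_text)]
```

Calling `recall_at_k(image_to_text, 1)` scores image-to-text retrieval, and passing `text_to_image` scores the opposite direction, so one similarity matrix covers both tasks.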

When used for multimodal training with models such as LLaVA 1.5, LLM2CLIP performed well on almost all benchmarks. On long-text and short-text retrieval tasks in particular, it improved the performance of the previous EVA02 model by 16.5%. The approach not only transforms CLIP from a model that handles only English data into a powerful cross-lingual model, but also lays a foundation for future research on CLIP training.

Model: https://huggingface.co/collections/microsoft/llm2clip-672323a266173cfa40b32d4c

Code: https://github.com/microsoft/LLM2CLIP/

Paper: https://arxiv.org/abs/2411.04997

Key points:

LLM2CLIP is an approach proposed by Microsoft in collaboration with Tongji University that aims to improve the performance of CLIP's visual encoder by replacing CLIP's text encoder with an LLM.

Through its "caption contrastive fine-tuning" technique, the method significantly enhances the model's ability to match images with text, surpassing existing state-of-the-art models.

Experiments on multiple datasets show that LLM2CLIP outperforms traditional models on long-text and short-text retrieval tasks and advances the development of cross-lingual models.

In short, LLM2CLIP offers a new direction for improving the CLIP model. Its significant gains in image-text retrieval and its stronger cross-lingual capabilities provide a valuable reference for the future development of multimodal models. The model, code, and paper links above are provided for readers who want to study the method further.