iFLYTEK's launch of its multimodal interaction model marks a new milestone in artificial intelligence. The model moves beyond the single-channel voice interaction of the past. It integrates voice, visual, and digital human interaction, which can be invoked together with a single call, and gives users a more vivid, realistic, and convenient interactive experience. Its super-anthropomorphic digital human technology generates expressions and movements that closely match the spoken content. Its super-anthropomorphic voice interaction can adjust sound parameters on instruction to provide personalized service. Its multimodal visual interaction gives the model the ability to "understand the world and recognize everything": it accurately perceives information about its environment and responds more appropriately.

The launch of the multimodal interaction model reflects iFLYTEK's leading position in multimodal interaction technology and also points to new directions for AI applications. By combining multiple interaction modes, the model can better understand what users need and deliver more accurate and richer services. Its open SDK gives developers more room to build on the technology, which helps spread the adoption of multimodal AI. More innovative applications built on this model can be expected to follow, making everyday life more efficient and more enjoyable.

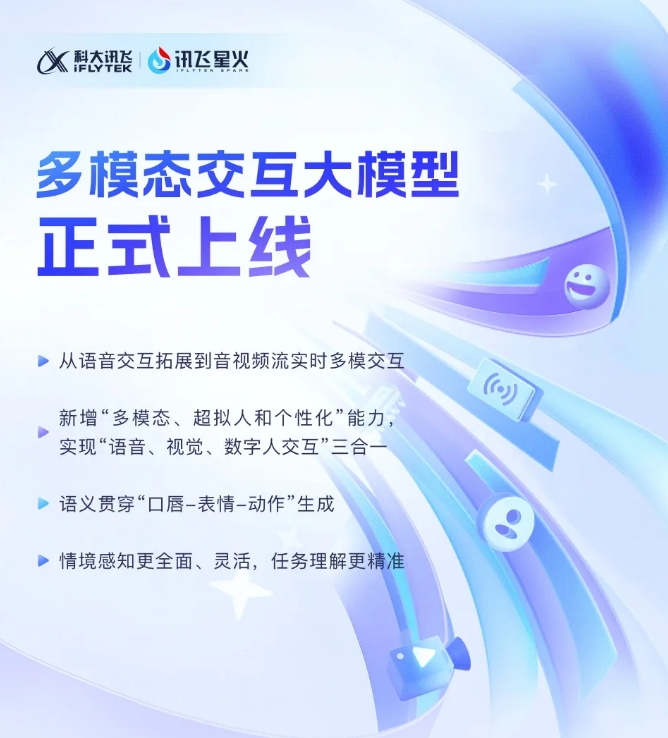

iFLYTEK recently announced that its newly developed multimodal interaction model is officially in service. The release marks iFLYTEK's move from single-channel voice interaction to real-time multimodal interaction over audio and video streams. The new model integrates voice, visual, and digital human interaction, and users can combine all three seamlessly with a single call.

The iFLYTEK multimodal interaction model introduces super-anthropomorphic digital human technology for the first time. This technology precisely matches the digital human's torso and limb movements to the voice content and quickly generates expressions and gestures, making the AI far more vivid and lifelike. By integrating text, speech, and facial expression, the model achieves cross-modal semantic consistency, so emotional expression comes across as more realistic and coherent.

In addition, iFLYTEK Spark supports super-anthropomorphic, ultra-fast interaction: a single unified neural network models voice-to-voice interaction end to end, which makes responses faster and smoother. The technology is sensitive to changes in the user's emotions and can freely adjust the pace, volume, and character of its voice on instruction, providing a more personalized interactive experience.

For multimodal visual interaction, iFLYTEK Spark can "understand the world" and "recognize everything". It fully perceives information such as the specific background scene and logistics status, which lets it understand tasks more accurately. By combining voice, gestures, behavior, emotions, and other signals, the model can respond appropriately, giving users a richer and more accurate interactive experience.

Multimodal interaction large model SDK: https://www.xfyun.cn/solutions/Multimodel

In short, the arrival of iFLYTEK's multimodal interaction model signals that artificial intelligence has entered a new stage of development. Its powerful capabilities and convenient interactive experience will open up more possibilities for users and help bring AI into wide use across many fields. We look forward to more surprises from iFLYTEK Spark in the future.