Nvidia recently released its new Blackwell platform and reported strong results in the MLPerf Training 4.1 benchmark. Compared with the previous-generation Hopper platform, Blackwell roughly doubled per-GPU performance on several AI training tasks, most notably large language model (LLM) training, with a marked gain in efficiency. The results point to another step up in AI computing power and lay the groundwork for broader deployment of AI applications. This article looks at the Blackwell platform's benchmark results, the technology behind them, and what comes next.

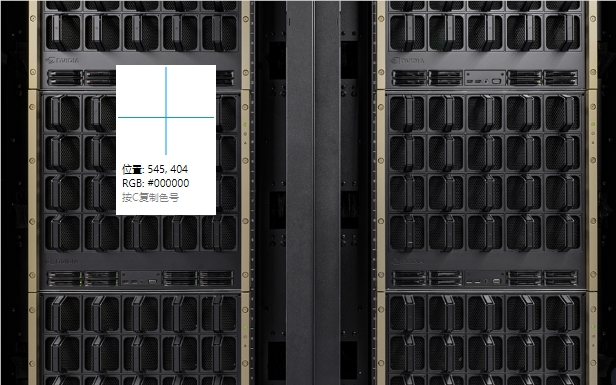

Nvidia presented Blackwell's first training results in the MLPerf Training 4.1 benchmark. According to those results, Blackwell delivers up to twice the performance of the previous-generation Hopper platform on some workloads, a result that has drawn wide attention across the industry.

In the MLPerf Training 4.1 benchmark, the Blackwell platform delivered 2.2 times the per-GPU performance of Hopper on the Llama 2 70B fine-tuning task in the LLM (large language model) benchmark, and 2 times the per-GPU performance on GPT-3 175B pre-training. In other benchmarks, such as Stable Diffusion v2 training, Blackwell outperformed the previous generation by 1.7 times.

Hopper continues to improve as well: compared with the previous round of MLPerf Training benchmarks, it shows a 1.3x performance gain in language model pre-training, which indicates that Nvidia's technology keeps advancing. In the latest GPT-3 175B benchmark, Nvidia submitted a result using 11,616 Hopper GPUs, setting a new scaling record.

Regarding Blackwell's technical details, Nvidia said the new architecture uses optimized Tensor Cores and faster high-bandwidth memory. As a result, the GPT-3 175B benchmark can run on just 64 Blackwell GPUs, whereas the Hopper platform needs 256 GPUs to achieve the same performance.
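To put the GPU counts above in perspective, the arithmetic can be sketched in a few lines of Python. This is only an illustration using the two figures quoted in this article (64 and 256); the function name `gpu_reduction` is made up for the example and does not come from Nvidia or MLPerf.

```python
# GPU counts quoted in this article for the GPT-3 175B benchmark.
BLACKWELL_GPUS = 64
HOPPER_GPUS = 256


def gpu_reduction(old_count: int, new_count: int) -> tuple[float, float]:
    """Return (ratio, fractional saving) when going from old_count to new_count GPUs.

    Hypothetical helper for illustration only.
    """
    if old_count <= 0 or new_count <= 0:
        raise ValueError("GPU counts must be positive")
    ratio = old_count / new_count        # how many times fewer GPUs are needed
    saving = 1 - new_count / old_count   # share of GPUs no longer required
    return ratio, saving


ratio, saving = gpu_reduction(HOPPER_GPUS, BLACKWELL_GPUS)
print(f"{ratio:.0f}x fewer GPUs ({saving:.0%} reduction)")
```

With the article's figures, this works out to a quarter of the GPUs, or a 75% reduction in GPU count for this benchmark.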

At the press briefing, Nvidia also highlighted the performance gains the Hopper generation has achieved through software and networking updates, and said it expects Blackwell to keep improving in future submissions. In addition, Nvidia plans to launch its next-generation AI accelerator, Blackwell Ultra, next year, which is expected to offer more memory and more computing power.

Blackwell also debuted last September in the MLPerf Inference v4.1 benchmark, where it delivered up to 4 times the per-GPU inference performance of the H100, helped in particular by its use of lower-precision FP4. This shift toward lower precision is meant to meet the growing demand for low-latency chatbots and for reasoning models such as OpenAI's o1.

Key points:

- **Nvidia's Blackwell platform doubles per-GPU performance in AI training compared with Hopper!**

- **Blackwell needs only 64 GPUs for the GPT-3 175B benchmark, a significant gain in efficiency!**

- **Blackwell Ultra will launch next year and is expected to offer more memory and computing power!**

In short, Nvidia's Blackwell platform marks a major step forward in AI computing. Its significant performance gains, together with the upcoming launch of Blackwell Ultra, should further advance the development and application of AI technology and open up more possibilities across industries. There is good reason to expect the Blackwell platform to play a greater role in the future.