The Salesforce AI Research team has released BLIP-3-Video, a multimodal language model designed to process the growing volume of video data efficiently. Traditional video understanding models are computationally expensive. BLIP-3-Video uses a temporal encoder to compress a video into just 16 to 32 visual tokens, greatly improving computing efficiency while maintaining high accuracy. This addresses the difficulty of handling long videos and offers stronger video understanding capabilities for industries such as autonomous driving and entertainment.

Salesforce AI Research recently launched a new multimodal language model, BLIP-3-Video. With video content growing rapidly, processing video data efficiently has become an urgent problem. The model is designed to improve both the efficiency and the effectiveness of video understanding, and it is suited to industries ranging from autonomous driving to entertainment.

Traditional video understanding models often process video frame by frame, generating a large amount of visual information. This consumes substantial computing resources and severely limits the ability to handle long videos. As the volume of video data continues to grow, this approach becomes increasingly inefficient, so it is crucial to find a solution that captures a video's critical information while reducing the computational burden.

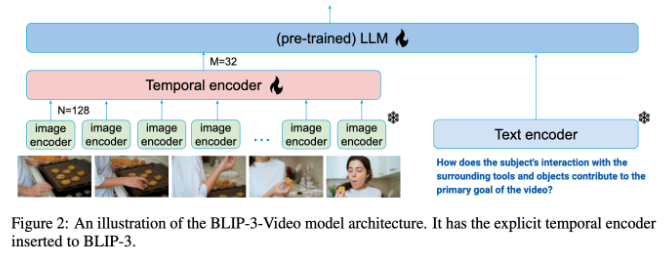

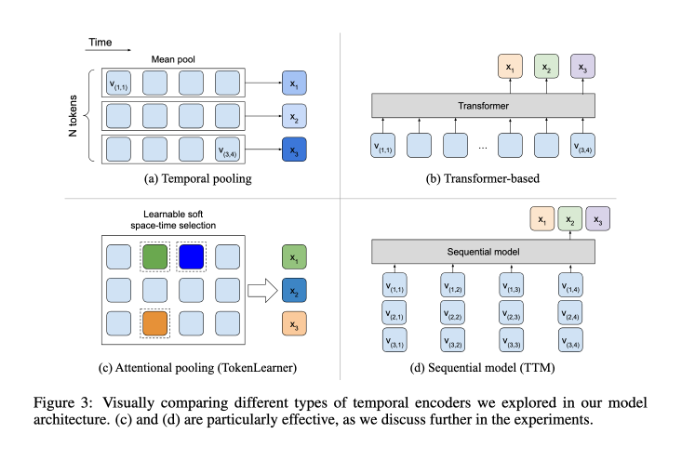

BLIP-3-Video tackles this problem with a "temporal encoder" that reduces the visual information needed to represent a video to 16 to 32 visual tokens. This design greatly improves computing efficiency, allowing the model to complete complex video tasks at lower cost. The temporal encoder uses a learnable spatiotemporal attention pooling mechanism that extracts the most important information from each frame and integrates it into a compact set of visual tokens.
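The pooling idea can be illustrated with a minimal sketch. This is not the BLIP-3-Video implementation: the function name `attention_pool`, the sizes `T`, `N`, `D`, `K`, and the randomly initialized queries are all assumptions made for illustration. In a trained model the queries would be learned parameters, and the real encoder is likely more elaborate. The sketch only shows how a fixed number of queries can attend over every patch token in every frame and return a fixed number of pooled tokens.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_tokens, queries):
    """Pool all frame tokens into len(queries) output tokens.

    frame_tokens: list of frames, each a list of token vectors.
    queries: list of query vectors (hypothetical stand-ins for learned queries).
    """
    # Flatten space and time so each query attends across all frames at once.
    tokens = [tok for frame in frame_tokens for tok in frame]
    dim = len(tokens[0])
    pooled = []
    for q in queries:
        # Scaled dot-product score between this query and every token.
        scores = [
            sum(qi * ti for qi, ti in zip(q, tok)) / math.sqrt(dim)
            for tok in tokens
        ]
        weights = softmax(scores)
        # Each output token is a weighted average of all input tokens.
        pooled.append(
            [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]
        )
    return pooled


random.seed(0)
T, N, D, K = 8, 16, 4, 32  # frames, tokens per frame, feature dim, output tokens (all hypothetical)
frames = [[[random.gauss(0, 1) for _ in range(D)] for _ in range(N)] for _ in range(T)]
queries = [[random.gauss(0, 1) for _ in range(D)] for _ in range(K)]

pooled = attention_pool(frames, queries)
print(f"{T * N} input tokens -> {len(pooled)} pooled tokens")
```

The number of output tokens depends only on the number of queries, not on the number of frames, which is why this kind of pooling keeps the token budget fixed as videos get longer.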

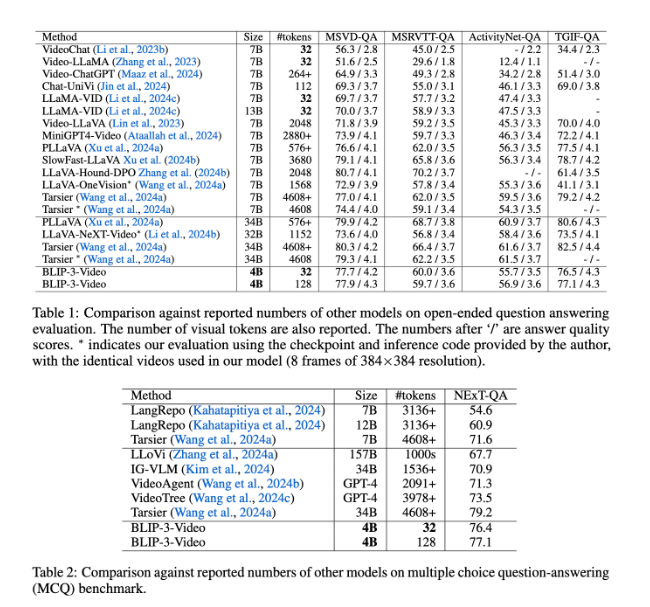

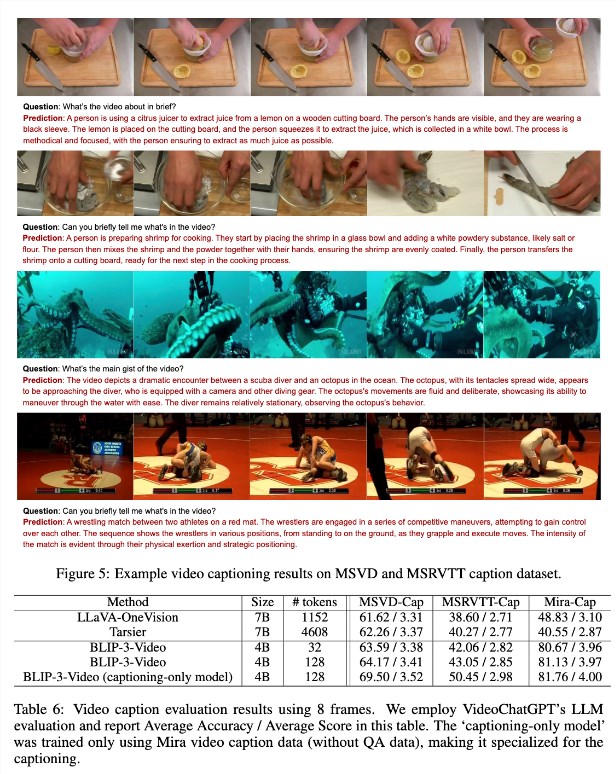

BLIP-3-Video also holds up well on accuracy. In comparisons with other large models, the study found that its accuracy on video question-answering tasks is comparable to that of top models. For example, the Tarsier-34B model requires 4608 tokens to process 8 frames of video, while BLIP-3-Video needs only 32 tokens to achieve a score of 77.7% on the MSVD-QA benchmark. This shows that BLIP-3-Video significantly reduces resource consumption while maintaining high performance.
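To put the token counts in perspective, the short calculation below works out the ratio using only the figures quoted above. The variable names are illustrative, and the result compares token counts only; it says nothing about end-to-end runtime or memory.

```python
# Figures quoted in the article.
tarsier_tokens = 4608      # Tarsier-34B, for 8 frames of video
blip3_video_tokens = 32    # BLIP-3-Video, for the whole video
num_frames = 8

# Tokens Tarsier-34B spends per frame.
tokens_per_frame = tarsier_tokens // num_frames

# How many times fewer visual tokens BLIP-3-Video uses.
reduction = tarsier_tokens / blip3_video_tokens

print(tokens_per_frame)  # 576
print(reduction)         # 144.0
```

By this count, BLIP-3-Video represents the entire clip with fewer tokens than Tarsier-34B spends on a single frame.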

BLIP-3-Video also performs strongly on multiple-choice question-answering tasks. It scored 77.1% on the NExT-QA dataset and likewise reached 77.1% accuracy on the TGIF-QA dataset. These results indicate that BLIP-3-Video handles complex video questions efficiently.

With its temporal encoder, BLIP-3-Video opens up new possibilities in video processing. The model not only improves the efficiency of video understanding but also lays groundwork for future video applications.

Project page: https://www.salesforceairesearch.com/opensource/xGen-MM-Vid/index.html

Key points:

- **New model release**: Salesforce AI Research has launched BLIP-3-Video, a multimodal language model focused on video processing.

- **Efficient processing**: A temporal encoder greatly reduces the number of visual tokens required and significantly improves computing efficiency.

- **Strong performance**: The model performs well on video question-answering tasks, maintaining high accuracy while reducing resource consumption.

In short, BLIP-3-Video brings significant progress to video understanding through its efficient processing and strong accuracy, and it has broad application prospects. The open-source release of the model also provides a good foundation for further research and applications.