With the rapid development of multimodal large language models (MLLMs), efficient processing of ultra-long video has become a hot research topic. Existing models are often limited by context length and computational cost, which makes it difficult for them to understand hour-long videos. In response, the Beijing Academy of Artificial Intelligence (BAAI, also known as Zhiyuan Research Institute) and several universities have released Video-XL, an extra-long vision language model designed for efficient hour-scale video understanding.

MLLMs have made significant progress in video understanding, but handling ultra-long videos remains a challenge. A long video produces thousands of visual tokens, which can exceed the model's maximum context length, and aggregating tokens to make them fit causes information loss. A large number of visual tokens also brings high computational cost.
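To make the context-length problem concrete, here is a rough back-of-the-envelope sketch. The sampling rate, the tokens-per-frame figure and the context length are illustrative assumptions, not numbers from the Video-XL paper, and the helper names are hypothetical.

```python
def visual_token_count(num_frames: int, tokens_per_frame: int) -> int:
    """Total visual tokens produced by a video with no compression."""
    return num_frames * tokens_per_frame


def fits_in_context(num_frames: int, tokens_per_frame: int,
                    context_length: int, text_tokens: int = 0) -> bool:
    """True if the visual tokens plus the text prompt fit in the context window."""
    total = visual_token_count(num_frames, tokens_per_frame) + text_tokens
    return total <= context_length


def max_frames(tokens_per_frame: int, context_length: int) -> int:
    """Largest number of uncompressed frames that fits in the context window."""
    return context_length // tokens_per_frame


# Assumed values, for illustration only.
FRAMES_PER_HOUR = 3600    # one hour sampled at 1 frame per second
TOKENS_PER_FRAME = 144    # hypothetical visual tokens per frame
CONTEXT_LENGTH = 32768    # hypothetical LLM context window

total_tokens = visual_token_count(FRAMES_PER_HOUR, TOKENS_PER_FRAME)
print(total_tokens)                                                        # 518400
print(fits_in_context(FRAMES_PER_HOUR, TOKENS_PER_FRAME, CONTEXT_LENGTH))  # False
print(max_frames(TOKENS_PER_FRAME, CONTEXT_LENGTH))                        # 227
```

Under these assumed numbers, an hour of video needs roughly sixteen times more tokens than the window can hold, which is why some form of compression is required.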

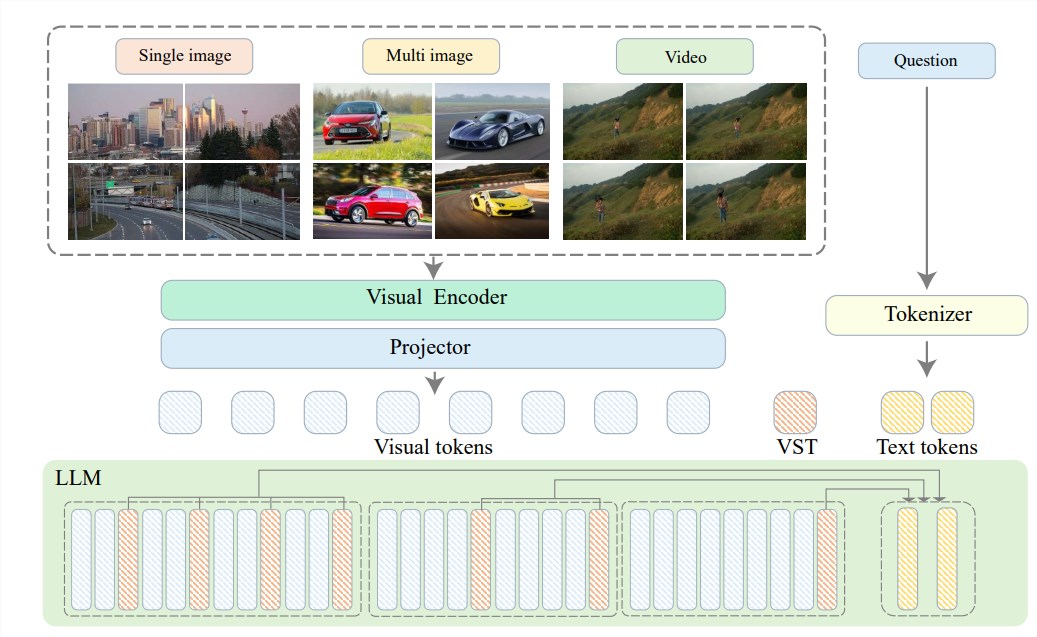

To solve these problems, BAAI (Zhiyuan Research Institute), together with Shanghai Jiao Tong University, Renmin University of China, Peking University and Beijing University of Posts and Telecommunications, has proposed Video-XL, an extra-long vision language model designed specifically for efficient hour-scale video understanding. At the heart of Video-XL is a technique called "Visual Context Latent Summarization", which uses the context modeling capabilities inherent in the LLM to compress long visual representations into a more compact form.

Simply put, it compresses the video content into a more condensed form, much like boiling a whole cut of beef down into a bowl of beef essence that is easier for the model to digest and absorb.

This compression not only improves efficiency but also retains the key information in the video. Long videos are often full of redundant content that is lengthy and tedious. Video-XL removes this redundancy and keeps only the essential information, so the model does not lose track when working through long video content.
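The bookkeeping behind this kind of compression can be sketched as follows. This is only a toy illustration: Video-XL relies on the LLM itself to produce the compact summaries, whereas the sketch below substitutes plain mean pooling as a stand-in so that the example stays self-contained. The function names, `chunk_size` and `ratio` are all hypothetical.

```python
from typing import List

Vector = List[float]


def mean_pool(group: List[Vector]) -> Vector:
    """Average a group of token vectors into one vector (stand-in summarizer)."""
    dim = len(group[0])
    return [sum(vec[i] for vec in group) / len(group) for i in range(dim)]


def compress_chunk(chunk: List[Vector], ratio: int) -> List[Vector]:
    """Replace every `ratio` consecutive tokens in a chunk with one summary vector."""
    return [mean_pool(chunk[i:i + ratio]) for i in range(0, len(chunk), ratio)]


def compress_sequence(tokens: List[Vector], chunk_size: int, ratio: int) -> List[Vector]:
    """Process the token sequence chunk by chunk and keep only the summaries."""
    summaries: List[Vector] = []
    for start in range(0, len(tokens), chunk_size):
        summaries.extend(compress_chunk(tokens[start:start + chunk_size], ratio))
    return summaries


# 16 toy "visual tokens", each a 2-dimensional vector.
tokens = [[float(i), float(i) * 2.0] for i in range(16)]
compact = compress_sequence(tokens, chunk_size=8, ratio=4)

print(len(tokens), "->", len(compact))  # 16 -> 4
print(compact[0])                       # [1.5, 3.0]
```

The point of the sketch is the shape of the computation: the sequence the model has to carry forward shrinks by the compression ratio, while each summary still reflects all the tokens it replaced.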

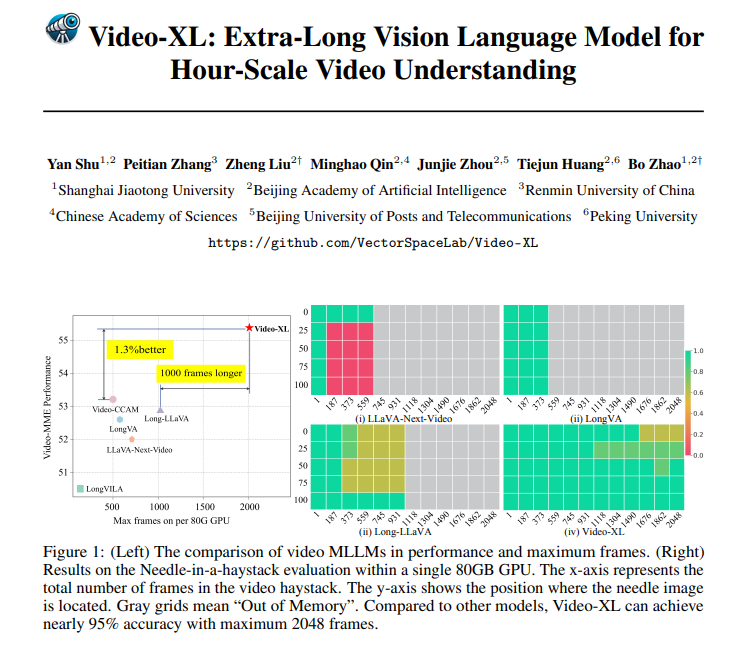

Video-XL is strong in practice as well as in theory. It leads on multiple long-video understanding benchmarks, most notably VNBench, where its accuracy is nearly 10% higher than that of the best existing method.

Even more impressive is the balance Video-XL strikes between efficiency and effectiveness: it can process 2048 frames of video on a single 80GB GPU while still achieving nearly 95% accuracy in the "Needle-in-a-Haystack" evaluation.

Video-XL has broad application prospects. In addition to general long-video understanding, it can handle specialized tasks such as movie summarization, surveillance anomaly detection and ad placement identification.

This means that in the future you may not have to sit through a lengthy plot when watching a movie. You could use Video-XL to generate a concise summary, saving time and effort, or use it to watch surveillance footage and automatically flag abnormal events, which is far more efficient than manual monitoring.

Project address: https://github.com/VectorSpaceLab/Video-XL

Paper: https://arxiv.org/pdf/2409.14485

In short, Video-XL marks a notable advance in ultra-long video understanding. Its efficiency and accuracy provide strong technical support for future video analysis applications, and its adoption and further development in more fields are worth watching.