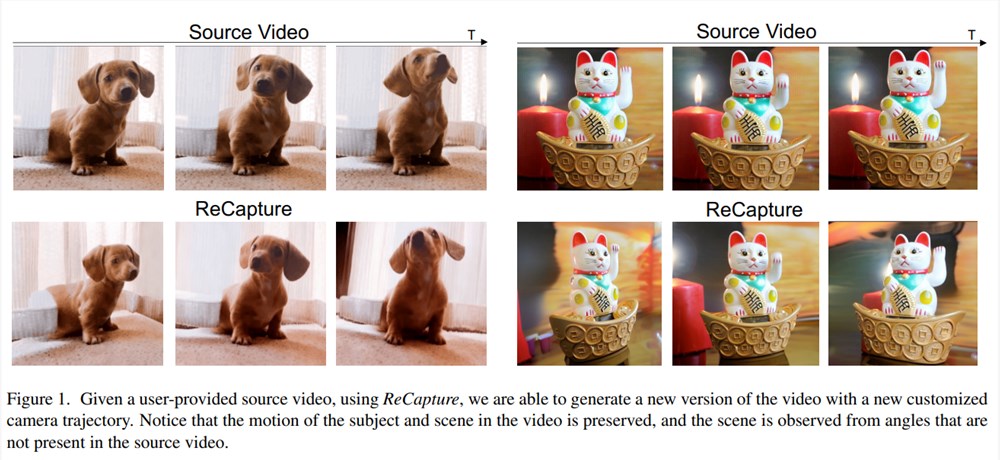

Google Research's new ReCapture technique brings a fresh idea to video editing, letting you re-experience your own videos from a completely new perspective. Given an existing video, it generates a new version that follows a user-specified camera trajectory, so you can watch the content from viewpoints the original footage never captured, while preserving the original motion of the people and scenes in the video. It works a bit like magic: an ordinary clip becomes footage seen from several angles and perspectives.

Think of ReCapture as a magical video editor that re-shoots your footage from a new viewpoint. For example, if you used your phone to film your dog playing, ReCapture could generate a version of that clip as if it had been shot from a different angle, such as from the dog's side. Isn't that amazing?

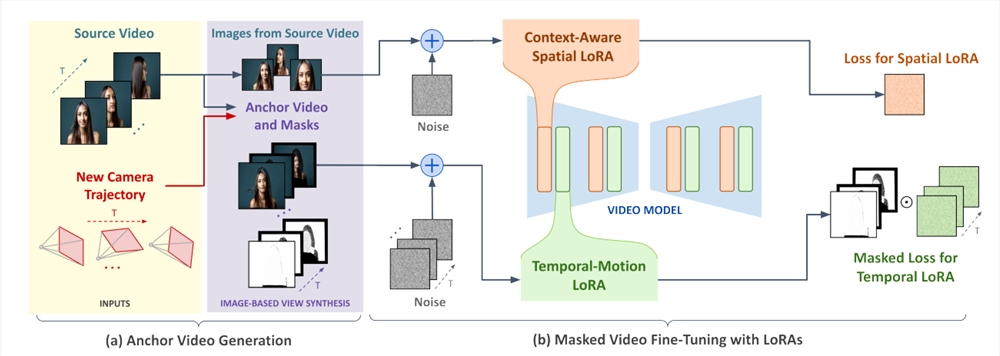

So how does ReCapture pull off this "magic"? The underlying idea is fairly simple. First, it uses a multi-view diffusion model or depth-based point-cloud rendering to produce a rough "anchor" video along the new camera trajectory you want. This anchor video is like an uncarved piece of jade: parts of each frame may be missing, and the frames are not temporally consistent, so the footage jitters as if it were drunk.
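To make the point-cloud route concrete, here is a minimal Python/NumPy sketch of forward-warping a single frame into a new camera. It assumes a known per-pixel depth map, one pinhole intrinsics matrix `K` shared by both cameras, and a rigid camera motion `R`, `t`. The function name `render_point_cloud` is hypothetical and this is not ReCapture's code; it only illustrates why the rough video has holes: target pixels that no 3D point lands on stay empty, and the returned `mask` records which pixels were filled.

```python
import numpy as np


def render_point_cloud(image, depth, K, R, t):
    """Forward-warp one frame into a new camera (illustrative sketch only).

    image: (H, W, C) colors; depth: (H, W) depth in the source camera.
    K: (3, 3) pinhole intrinsics, assumed identical for both cameras.
    R, t: (3, 3) rotation and (3,) translation from source to target camera.
    Returns (warped, mask); mask is False where no point landed (a hole).
    """
    H, W = depth.shape
    ys, xs = np.mgrid[0:H, 0:W]
    # Homogeneous pixel coordinates, one column per pixel: (3, N).
    pix = np.stack([xs.ravel(), ys.ravel(), np.ones(H * W)])
    # Unproject to 3D points in the source camera frame.
    pts = (np.linalg.inv(K) @ pix) * depth.ravel()
    # Move the points into the target camera frame and project them.
    pts_new = R @ pts + t.reshape(3, 1)
    proj = K @ pts_new
    colors = image.reshape(-1, image.shape[-1])

    warped = np.zeros_like(image)
    mask = np.zeros((H, W), dtype=bool)
    zbuf = np.full((H, W), np.inf)  # depth buffer: keep the nearest point
    for i in range(H * W):
        z = pts_new[2, i]
        if z <= 0:  # point lies behind the new camera
            continue
        u = int(round(proj[0, i] / z))
        v = int(round(proj[1, i] / z))
        if 0 <= u < W and 0 <= v < H and z < zbuf[v, u]:
            zbuf[v, u] = z
            warped[v, u] = colors[i]
            mask[v, u] = True
    return warped, mask
```

Running this frame by frame along a camera path yields a rough video plus a per-frame mask of missing regions; shifting the camera sideways, for instance, leaves an empty strip along one edge.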

Next, ReCapture applies its key ingredient, "masked video fine-tuning", to refine this rough video. The "masked" part means the missing regions of the rough video are excluded during fine-tuning, so the model learns only from pixels that are actually known. Like a skilled craftsman, ReCapture repairs the footage with two special tools: a spatial LoRA and a temporal LoRA. The spatial LoRA acts as a "beautician": it learns the appearance of the characters and the scene from the original video, making each frame sharper and cleaner. The temporal LoRA is a "rhythm master": it learns the scene's motion as seen from the new viewpoint, so the video plays back smoothly and naturally.
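The two ingredients named above can be sketched in a few lines of NumPy. `LoRALinear` follows the standard low-rank adaptation idea (a frozen weight `W` plus a trainable update `B @ A`, with `B` initialised to zero so training starts from the original model), and `masked_mse` averages an error only over pixels the mask marks as valid. Both names are hypothetical. In ReCapture the adapters sit inside a video diffusion model (roughly, the spatial LoRA in its per-frame layers and the temporal LoRA in its cross-frame layers) and the mask is applied to the diffusion training loss; this simplified sketch does not reproduce that setup.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (hypothetical sketch)."""

    def __init__(self, W, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = W.shape
        self.W = W                                     # frozen, (out, in)
        self.A = rng.normal(0.0, 0.01, (rank, in_dim))  # trainable
        self.B = np.zeros((out_dim, rank))              # trainable, zero-init
        self.scale = alpha / rank

    def __call__(self, x):
        # x: (..., in). With B == 0 the output equals the frozen layer x @ W.T.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T


def masked_mse(pred, target, mask):
    """Mean squared error over valid entries only (mask == 1).

    The mask may have fewer trailing dims (e.g. (T, H, W, 1) for a
    (T, H, W, C) video); it is broadcast to pred's shape before averaging.
    """
    m = np.broadcast_to(mask, pred.shape).astype(float)
    return float(((pred - target) ** 2 * m).sum() / max(m.sum(), 1.0))
```

Because only `A` and `B` would be trained, each adapter adds few parameters, and the masked loss means the holes in the rough video never act as training targets.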

Once both LoRAs have done their work, the rough video becomes clear, coherent, and dynamic. As a final touch, ReCapture applies SDEdit to polish the result, much like a last layer of makeup, making the video finer and more detailed.
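SDEdit itself is easy to outline: partially noise the input to an intermediate step of the diffusion schedule, then denoise from there, so the coarse structure is kept while fine detail is regenerated. The sketch below assumes a DDPM-style cumulative schedule `alphas_cumprod` and a hypothetical `denoise_step(x, t)` callable standing in for one reverse step of a real diffusion model; `strength` sets how far toward pure noise the input is pushed.

```python
import numpy as np


def sdedit(x0, denoise_step, alphas_cumprod, strength=0.5, seed=0):
    """SDEdit-style refinement (sketch; the denoiser is a hypothetical callable).

    x0: input (e.g. a video array); alphas_cumprod: cumulative products of
    alpha_t, indexed 0..T-1 (DDPM convention assumed).
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    T = len(alphas_cumprod)
    t0 = int(strength * T)  # number of reverse steps to run
    if t0 == 0:
        return x0.copy()    # no noise added, nothing to denoise
    rng = np.random.default_rng(seed)
    # Sample x_{t0-1} ~ q(x_t | x_0) = N(sqrt(a) * x0, (1 - a) * I).
    a = alphas_cumprod[t0 - 1]
    x = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * rng.standard_normal(x0.shape)
    # Run the reverse process from t0-1 down to 0.
    for t in range(t0 - 1, -1, -1):
        x = denoise_step(x, t)
    return x
```

Smaller `strength` values stay closer to the input; larger ones give the model more freedom to repair artifacts at the cost of drifting further from the original.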

According to the Google researchers, ReCapture can handle many kinds of videos and viewpoint changes without requiring large amounts of training data. In principle, this means that even hobbyists could create "multi-camera" videos that look professionally shot.

Project page: https://generative-video-camera-controls.github.io/

With its ease of use and powerful capabilities, ReCapture greatly lowers the barrier to multi-view video production and opens up new possibilities for video creation. In the future, the technology may find wide use in film production, game development, and virtual reality, delivering more immersive visual experiences.