With user interfaces now spread across phones, tablets, computers, and TVs, interaction has grown increasingly complex. Apple has introduced Ferret-UI2, a UI understanding model that aims to unify user interface understanding across platforms. Ferret-UI2 is not a simple upgrade but a new model with cross-platform capabilities: it can understand UI screens from iPhone, Android, iPad, web pages, and Apple TV, which greatly widens its application scenarios. Its core advantages are multi-platform support, dynamic high-resolution image encoding, and GPT-4o-based set-of-mark visual prompting for generating training data, which together give Ferret-UI2 significant improvements in both UI perception and task handling.

Phones, tablets, computers, TVs: there are more and more screens, and operating them keeps getting more complicated. Dizzying, isn't it? Apple recently played a trump card: Ferret-UI2, a powerful UI understanding model that aims to unify them all!

This is no idle boast. Ferret-UI2 aims to be a true all-rounder that can understand the user interface on any platform. Whether it is iPhone, Android, iPad, a web page, or Apple TV, it can handle it with ease.

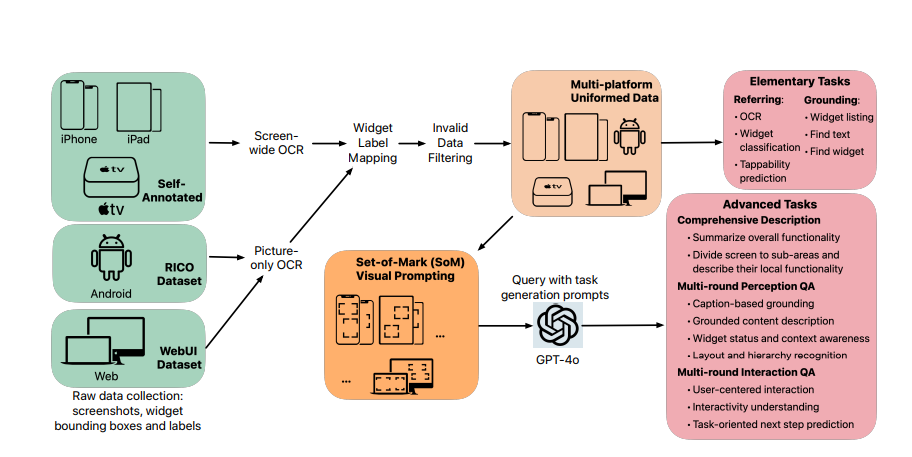

A highlight of Ferret-UI2 is its support for multiple platforms. Unlike Ferret-UI, which is limited to mobile platforms, Ferret-UI2 can also understand UI screens from tablets, web pages, and smart TVs. This multi-platform support lets it fit today's diverse device ecosystem and opens up a wider range of application scenarios.

To improve UI perception, Ferret-UI2 introduces dynamic high-resolution image encoding and adopts an enhanced method called "adaptive gridding". With it, Ferret-UI2 can perceive a UI screenshot at close to its original resolution and aspect ratio, and so identify visual elements and their relationships more accurately.
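To make the idea of adaptive gridding more concrete, here is a minimal sketch of how a tile grid might be chosen for a screenshot. It is not the algorithm from the paper: the function name `choose_grid`, the tile size of 336 pixels, the budget of 16 tiles, and the cost function (closest aspect ratio first, closest pixel count as a tie-breaker) are all assumptions made for illustration.

```python
import math


def choose_grid(width, height, tile=336, max_tiles=16):
    """Return (cols, rows) for splitting a screenshot into square tiles.

    Hypothetical selection rule: among all grids with cols * rows <= max_tiles,
    prefer the one whose aspect ratio is closest to the screenshot's, and break
    ties by how close the grid's pixel count is to the screenshot's.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")

    target_ratio = width / height
    image_pixels = width * height

    best, best_cost = None, None
    for cols in range(1, max_tiles + 1):
        for rows in range(1, max_tiles // cols + 1):
            # Distortion introduced by resizing the screenshot to this grid.
            ratio_cost = abs(math.log((cols / rows) / target_ratio))
            # How far the grid's resolution is from the original resolution.
            pixel_cost = abs(math.log(cols * rows * tile * tile / image_pixels))
            cost = (ratio_cost, pixel_cost)
            if best_cost is None or cost < best_cost:
                best, best_cost = (cols, rows), cost
    return best


if __name__ == "__main__":
    # A portrait phone screenshot and a landscape TV screenshot.
    print(choose_grid(1170, 2532))
    print(choose_grid(1920, 1080))
```

A tall phone screenshot ends up with more rows than columns, while a TV screenshot gets the opposite, so each screen is encoded at a shape close to its own rather than being squashed into a fixed square.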

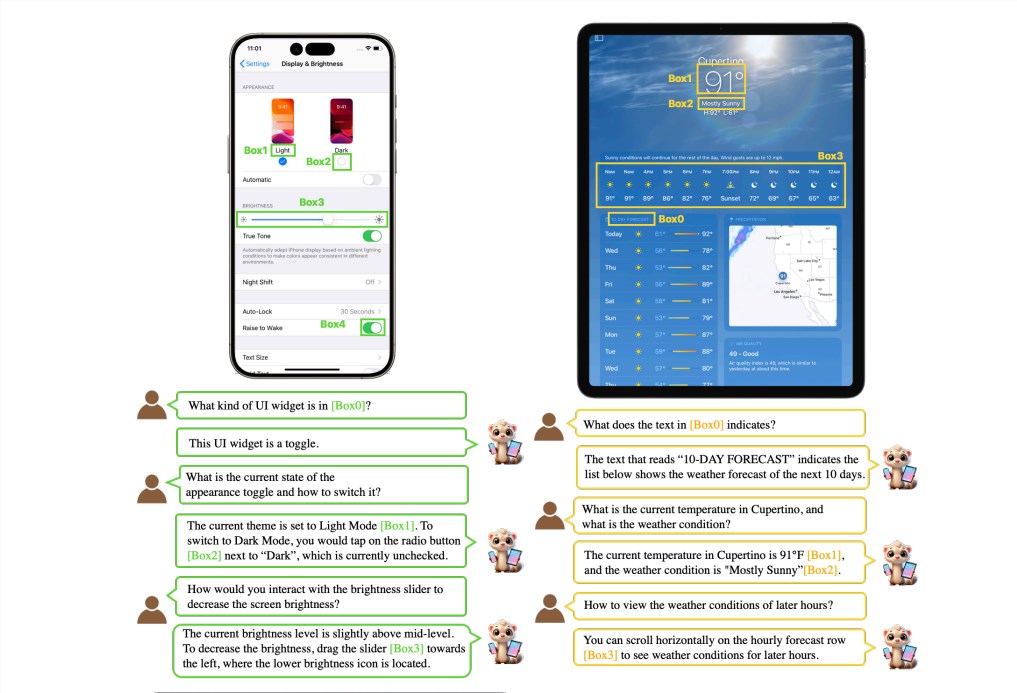

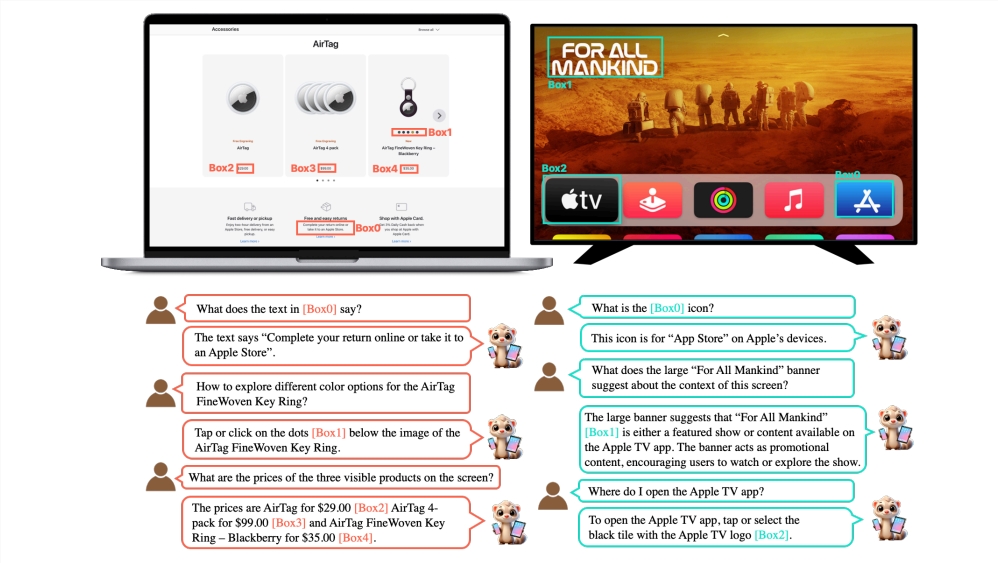

In addition, Ferret-UI2 is trained on high-quality data for both basic and advanced tasks. For basic tasks, simple referring and grounding data are converted into a dialogue format, which gives the model a basic understanding of various UI screens. For advanced tasks, which focus more on user experience, the training data is generated with GPT-4o using set-of-mark visual prompting, and the simple click actions of the previous method are replaced with single-step, user-centered interaction instructions.
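As a rough illustration of what converting referring and grounding data into a dialogue format could look like, the sketch below turns one widget annotation into two short conversations. The annotation schema (`kind`, `text`, `bbox`), the function names, and the question templates are hypothetical and are not taken from the Ferret-UI2 dataset.

```python
def referring_dialogue(widget):
    """Referring: the question gives a region, the answer describes the widget."""
    x1, y1, x2, y2 = widget["bbox"]
    return [
        {"role": "user",
         "content": f"What is the UI element at [{x1}, {y1}, {x2}, {y2}]?"},
        {"role": "assistant",
         "content": f'A {widget["kind"]} labeled "{widget["text"]}".'},
    ]


def grounding_dialogue(widget):
    """Grounding: the question describes the widget, the answer gives its region."""
    x1, y1, x2, y2 = widget["bbox"]
    return [
        {"role": "user",
         "content": f'Where is the {widget["kind"]} labeled "{widget["text"]}"?'},
        {"role": "assistant",
         "content": f"[{x1}, {y1}, {x2}, {y2}]"},
    ]


if __name__ == "__main__":
    # Hypothetical annotation for a single widget on a login screen.
    sign_in = {"kind": "button", "text": "Sign in", "bbox": (40, 900, 340, 980)}
    for turn in referring_dialogue(sign_in) + grounding_dialogue(sign_in):
        print(f'{turn["role"]}: {turn["content"]}')
```

The same annotation yields both directions of the task: from region to description (referring) and from description to region (grounding).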

To evaluate Ferret-UI2, the researchers built 45 benchmarks covering five platforms, with 6 basic tasks and 3 advanced tasks per platform (5 platforms × 9 tasks). They also used public benchmarks such as GUIDE and GUI-World. The results show that Ferret-UI2 outperforms Ferret-UI on all of these benchmarks, with especially notable gains on advanced tasks, which demonstrates its versatility in cross-platform UI understanding.

Ablation studies further show that both the architectural improvements and the dataset improvements in Ferret-UI2 contribute to the performance gains, with the new dataset having a larger impact on the more challenging tasks. Ferret-UI2 also performs well in cross-platform transfer, generalizing particularly well among the iPhone, iPad, and Android platforms.

Model address: https://huggingface.co/jadechoghari/Ferret-UI-Llama8b

Paper address: https://arxiv.org/pdf/2410.18967

In short, with its strong cross-platform UI understanding and significant performance improvements, Ferret-UI2 opens up new possibilities for smarter and more convenient human-computer interaction. The open-source model and the paper also provide valuable resources for further research and application.