The Xiaohongshu FireRed team has open-sourced FireRedASR, a new speech recognition model that marks a significant step forward in Chinese speech recognition. Its character error rate (CER) is as low as 3.05%, a relative reduction of 8.4% compared with the previous best model, and it performs strongly in practical scenarios such as short video, live streaming, and voice input. FireRedASR comes in two architectures: FireRedASR-LLM focuses on accuracy, while FireRedASR-AED balances accuracy and efficiency. The model supports Mandarin, Chinese dialects, and English, and is open-sourced on GitHub and Hugging Face.

The core metric for FireRedASR is the character error rate (CER): the lower the CER, the better the model's recognition. On public test sets, FireRedASR reached a CER of 3.05%, a relative reduction of 8.4% compared with the previous best model, Seed-ASR. This result reflects the FireRed team's capacity for innovation in speech recognition technology.
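CER is the character-level edit distance between the model's transcript and the reference, divided by the number of reference characters: CER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions, and N is the reference length. Character granularity is the usual choice for Chinese because written Chinese has no spaces between words. The sketch below is a minimal illustration, not FireRedASR's evaluation script; the function names `levenshtein` and `cer` are hypothetical, and it compares raw characters without the text normalization (such as stripping punctuation) that real benchmarks usually apply.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of substitutions, deletions, and insertions turning ref into hyp."""
    # prev[j] holds the edit distance between the first i-1 ref chars and the first j hyp chars.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete r from the reference
                curr[j - 1] + 1,         # insert h into the hypothesis
                prev[j - 1] + (r != h),  # substitute (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance divided by the number of reference characters."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


# One substituted character out of six reference characters.
print(round(cer("今天天气很好", "今天天气真好"), 3))  # 0.167
```

Because insertions count toward the numerator, CER can exceed 100% when the hypothesis is much longer than the reference.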

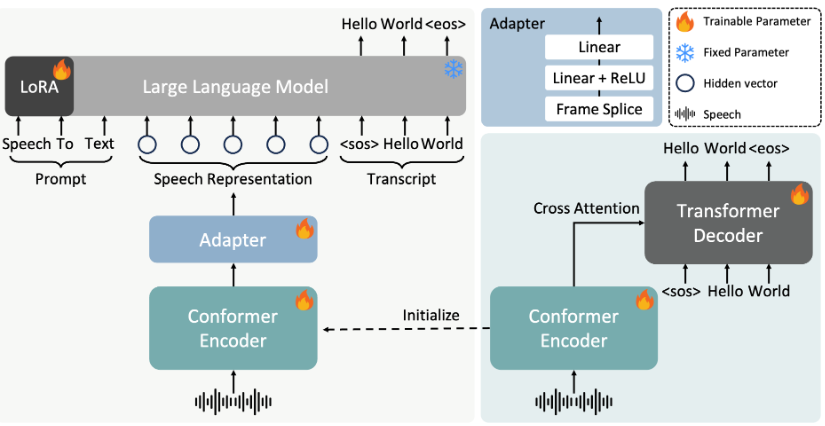

The FireRedASR model comes in two architectures: FireRedASR-LLM and FireRedASR-AED (attention-based encoder-decoder). The former targets the highest possible recognition accuracy, while the latter strikes a balance between accuracy and inference efficiency. The team provides models of different sizes, together with inference code, to suit a range of application scenarios.

FireRedASR also performs strongly in everyday application scenarios. On a test set drawn from a variety of sources, including short video, live streaming, and voice input, FireRedASR-LLM achieves a relative CER reduction of 23.7% to 40% compared with leading industry service providers. Its advantage is most pronounced in lyric recognition, where the relative CER reduction reaches 50.2% to 66.7%.
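The percentages in this article are relative reductions, not percentage-point differences: a relative reduction r means the new CER equals the baseline CER times (1 - r). As a sanity check, the 8.4% figure quoted alongside the 3.05% CER implies a previous best of roughly 3.05 / (1 - 0.084), or about 3.33%, a gap of only about 0.28 percentage points. The helper names below are hypothetical, and the arithmetic merely inverts the published percentages; it does not reproduce any reported benchmark result.

```python
def relative_reduction(baseline_cer: float, new_cer: float) -> float:
    """Fraction by which new_cer is lower than baseline_cer (0.084 means 8.4%)."""
    return (baseline_cer - new_cer) / baseline_cer


def implied_baseline(new_cer: float, reduction: float) -> float:
    """Invert relative_reduction: recover the baseline CER from the new CER."""
    return new_cer / (1.0 - reduction)


# 3.05% CER quoted as an 8.4% relative reduction over the previous best.
baseline = implied_baseline(3.05, 0.084)
print(f"implied previous best: {baseline:.2f}%")      # implied previous best: 3.33%
print(f"absolute gap: {baseline - 3.05:.2f} points")  # absolute gap: 0.28 points
```

The same reading applies to the 23.7% to 40% range: against a hypothetical service with a 10% CER, it would correspond to a FireRedASR-LLM CER between 7.63% and 6.0%.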

FireRedASR also performs well on Chinese dialects and English: its CER on the KeSpeech and LibriSpeech test sets is significantly lower than that of previous open-source models, demonstrating robustness and adaptability across languages and dialects.

By open-sourcing this new model, the FireRed team hopes to advance the development and adoption of speech recognition technology and contribute to the future of voice interaction. All models and code have been published on GitHub, and the team encourages more developers and researchers to get involved.

Hugging Face: https://huggingface.co/FireRedTeam

GitHub: https://github.com/FireRedTeam/FireRedASR

Key points:

- FireRedASR is a newly released open-source speech recognition model from the Xiaohongshu team, with excellent accuracy on Chinese speech.

- The model comes in two variants: FireRedASR-LLM targets accuracy, while FireRedASR-AED balances accuracy and efficiency.

- FireRedASR performs well across many scenarios and supports Mandarin, Chinese dialects, and English.

The open-sourcing of FireRedASR is set to accelerate the development of Chinese speech recognition technology, give developers and researchers a powerful tool, and signal that more convenient and intelligent voice interaction is on the way. We look forward to more innovative applications built on FireRedASR!