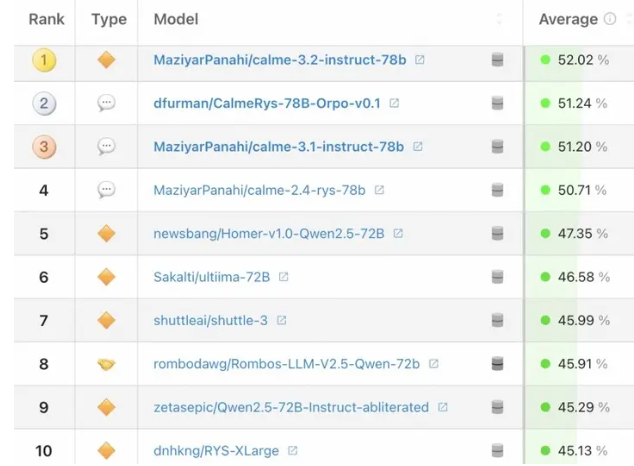

Hugging Face's latest Open LLM Leaderboard shows that the top ten open source large models are all derivatives further trained from Alibaba's Tongyi Qianwen (Qwen) models, a sign of Qwen's growing dominance and global influence in open source AI. The leaderboard tests multiple dimensions, including reading comprehension and logical reasoning, and is widely regarded as authoritative. Qwen and its derivatives now number more than 90,000 models, surpassing Meta's Llama series to form the world's largest open source model family. Qwen2.5-1.5B-Instruct accounted for 26.6% of all open source model downloads on Hugging Face in 2024, making it the most downloaded open source model in the world. Together, these figures show how widely the Qwen models have been adopted.

Hugging Face, the world's largest open source community for artificial intelligence, recently released its latest open source model rankings, the Open LLM Leaderboard. The results show that the top ten open source models are all derivative models produced by further training Alibaba's Tongyi Qianwen (Qwen) open source models. The result underlines Qwen's leading position in open source AI and extends its global reach.

The Open LLM Leaderboard is widely regarded as the most authoritative ranking of open source models at present. Its tests cover multiple areas, including reading comprehension, logical reasoning, mathematical computation, and factual question answering. Notably, Tongyi Qianwen (Qwen) has grown into the world's largest open source model family: its derivative models now number more than 90,000, surpassing Meta's Llama series and ranking first in the world. In Hugging Face's 2024 download statistics for open source models, Qwen2.5-1.5B-Instruct alone accounted for 26.6% of total downloads, making it the most downloaded open source model in the world.

In addition, DeepSeek, a company that has drawn wide attention, recently open-sourced six models derived from its R1 reasoning model, four of which were built on Qwen. The team of well-known AI scientist Fei-Fei Li also trained its s1 reasoning model on top of Qwen, using fewer resources and less data. These results further demonstrate the strength and flexibility of the Qwen models.

In short, the rapid rise of Alibaba's Tongyi Qianwen in open source large models has not only strengthened its brand influence but also given developers around the world a rich set of tools and resources. As open source technology continues to develop, AI applications are likely to become more diverse and more capable.

Tongyi Qianwen's success stems not only from the strong performance of the models themselves but also from Alibaba's active embrace of the open source community: it offers developers worldwide accessible resources and support, and works with them to advance AI technology. This suggests that the open source large model ecosystem will continue to grow and that AI will be applied more widely.