Video generation technology has made significant progress in recent years, but existing models still have limitations in capturing complex motion and physical phenomena. Meta's research team proposed the VideoJAM framework, aiming to enhance the motion expressiveness of video generation models through joint appearance-motion representation.

Despite significant progress in recent years, video generation models still struggle to capture complex motion, dynamics, and physical phenomena in the real world. This limitation stems mainly from the conventional pixel reconstruction objective, which biases models toward appearance fidelity at the expense of motion coherence.

To address this problem, Meta's research team proposed a new framework called VideoJAM, which instills an effective motion prior in video generation models by encouraging them to learn a joint appearance-motion representation.

The VideoJAM framework consists of two complementary units. During training, the framework extends the objective so that the model predicts both the generated pixels and their corresponding motion from a single learned representation.
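
The joint training objective can be illustrated with a minimal sketch. It assumes a shared feature vector feeding two linear output heads, one for appearance and one for motion, with the two reconstruction errors summed. The names `linear_head`, `joint_loss`, and `motion_weight` are hypothetical and chosen for illustration; the article does not specify the actual architecture or how the two terms are weighted.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def linear_head(
    features: Sequence[float], weights: Sequence[Sequence[float]]
) -> list[float]:
    """Apply one linear output head; each row of `weights` yields one output."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def joint_loss(
    shared_features: Sequence[float],
    appearance_head: Sequence[Sequence[float]],
    motion_head: Sequence[Sequence[float]],
    target_pixels: Sequence[float],
    target_motion: Sequence[float],
    motion_weight: float = 1.0,  # assumption: relative weighting is not given in the article
) -> float:
    """Sum of appearance and motion reconstruction errors.

    Both predictions are read from the same `shared_features`, so lowering
    this loss pushes a single representation to encode appearance and motion.
    """
    pred_pixels = linear_head(shared_features, appearance_head)
    pred_motion = linear_head(shared_features, motion_head)
    return mse(pred_pixels, target_pixels) + motion_weight * mse(pred_motion, target_motion)


# Toy example: the appearance head is already exact, the motion head is not.
features = [1.0, 2.0]
loss = joint_loss(
    features,
    appearance_head=[[1.0, 0.0], [0.0, 1.0]],
    motion_head=[[1.0, 1.0]],
    target_pixels=[1.0, 2.0],
    target_motion=[1.0],
)
print(loss)
```

In this toy case the appearance term is zero, so the whole loss comes from the motion error. A pixel-only objective would report nothing left to learn here.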

During inference, the research team introduces a mechanism called "Inner-Guidance", which steers generation toward coherent motion by using the model's own evolving motion prediction as a dynamic guidance signal. Notably, VideoJAM can be applied to any video generation model without modifying the training data or scaling up the model.
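
One way to picture this guidance step is as an extension of classifier-free guidance. The sketch below assumes that, at each sampling step, the model is evaluated three times: with both the text prompt and its current motion estimate, with the text dropped, and with the motion estimate dropped. The combination rule, the function name `guided_prediction`, and the two scale parameters are assumptions made for illustration; the article does not state the formula.

```python
from typing import Sequence


def guided_prediction(
    full: Sequence[float],       # prediction given text and the model's current motion estimate
    no_text: Sequence[float],    # prediction with the text prompt dropped
    no_motion: Sequence[float],  # prediction with the motion estimate dropped
    text_scale: float,           # hypothetical guidance weight for the text signal
    motion_scale: float,         # hypothetical guidance weight for the motion signal
) -> list[float]:
    """Extrapolate away from the two ablated predictions.

    With both scales at 0 this returns `full` unchanged. A larger
    `motion_scale` pushes the result further from what the model would
    predict without its own motion estimate.
    """
    if not (len(full) == len(no_text) == len(no_motion)):
        raise ValueError("all predictions must have the same length")
    total = 1.0 + text_scale + motion_scale
    return [
        total * f - text_scale * nt - motion_scale * nm
        for f, nt, nm in zip(full, no_text, no_motion)
    ]


# Toy two-element "predictions" for a single sampling step.
step_output = guided_prediction(
    full=[1.0, 2.0],
    no_text=[0.5, 2.0],
    no_motion=[1.0, 1.0],
    text_scale=2.0,
    motion_scale=1.0,
)
print(step_output)
```

Because the motion estimate is produced by the model itself and changes at every step, the guidance signal is dynamic rather than a fixed external condition.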

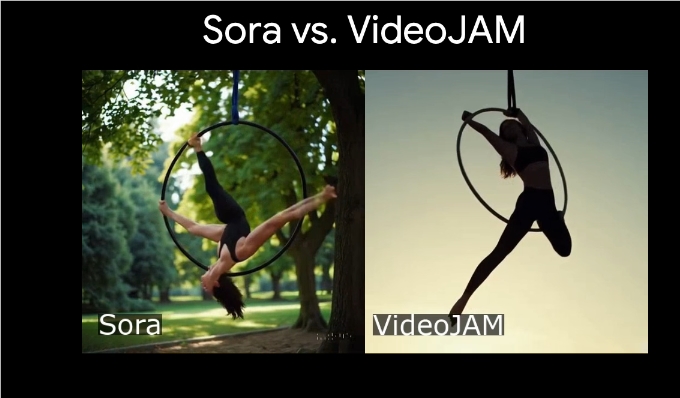

VideoJAM achieves state-of-the-art motion coherence, surpassing multiple highly competitive proprietary models while also improving the perceived visual quality of the generated videos. These findings emphasize that appearance and motion are complementary: when the two are effectively combined, both the visual quality and the motion coherence of generated videos improve significantly.

In addition, the research team demonstrated the strong performance of VideoJAM-30B on complex motion types, including scenes such as a skateboarder jumping and a ballet dancer spinning on a lake. In comparisons with the base model, DiT-30B, the study found that VideoJAM significantly improves the quality of generated motion.

Project page: https://hila-chefer.github.io/videojam-paper.github.io/

Key points:

The VideoJAM framework enhances the motion expressiveness of video generation models through a joint appearance-motion representation.

During training, VideoJAM predicts pixels and motion simultaneously, improving the consistency of the generated content.

VideoJAM surpasses multiple competitive models in both motion coherence and visual quality.

Meta's VideoJAM framework marks a notable advance in video generation. By learning a joint appearance-motion representation, it significantly improves the motion coherence and visual quality of generated videos and points to a new direction for future work in the field.