Researchers from Stanford University and the University of Washington recently released s1, a method built around simple test-time scaling: improving a language model's reasoning by spending more computation at inference time. Rather than relying on massive training compute or complex algorithms, s1 achieves its gains by controlling how much computation the model spends while it is answering a question.

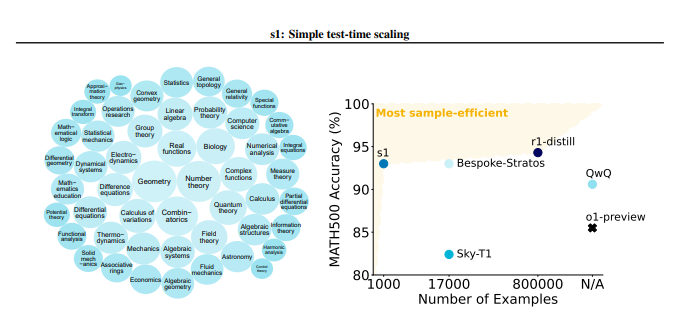

The team first built a small dataset called s1K containing 1,000 high-quality reasoning problems. The selection standards are strict: every sample must satisfy three criteria at once, namely high difficulty, strong diversity and high quality. Detailed ablation experiments confirmed the importance of all three, showing that random selection or relying on a single criterion leads to a significant drop in performance. Notably, training on the full superset of 59,000 samples offers no substantial gain over the carefully selected 1,000, which underlines how much data selection matters.
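
The three-criteria selection described above can be illustrated with a minimal sketch. This is not the authors' pipeline: the `Sample` fields, the `select_subset` function, and the use of a baseline model's success and reasoning-trace length as difficulty signals are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    question: str
    domain: str               # hypothetical topic label, used for diversity
    well_formed: bool         # hypothetical quality flag (clean formatting, no errors)
    solved_by_baseline: bool  # hypothetical difficulty proxy: a baseline model already solves it
    trace_tokens: int         # length of the reasoning trace, a second difficulty proxy


def select_subset(pool, k):
    """Pick up to k samples that pass quality and difficulty filters,
    spreading the picks across domains."""
    # Quality: drop malformed samples.
    kept = [s for s in pool if s.well_formed]
    # Difficulty: drop problems the baseline model already solves.
    kept = [s for s in kept if not s.solved_by_baseline]

    # Diversity: group by domain, hardest (longest trace) first in each group.
    by_domain = {}
    for s in kept:
        by_domain.setdefault(s.domain, []).append(s)
    for items in by_domain.values():
        items.sort(key=lambda s: s.trace_tokens, reverse=True)

    # Round-robin over domains so no single domain dominates the subset.
    domains = sorted(by_domain)
    selected = []
    while len(selected) < k and any(by_domain[d] for d in domains):
        for d in domains:
            if by_domain[d] and len(selected) < k:
                selected.append(by_domain[d].pop(0))
    return selected
```

Calling `select_subset(pool, k=1000)` on a large candidate pool would return at most 1,000 samples that pass all three filters. The round-robin step is only one simple way to enforce diversity; other sampling schemes would fit the same description.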

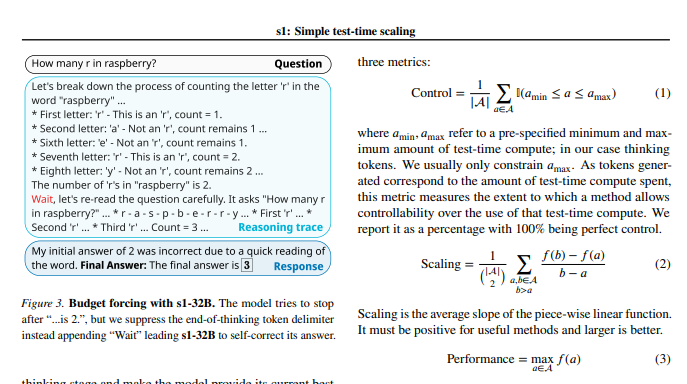

After training, the researchers used a technique called "budget forcing" to control the amount of computation at test time. Simply put, the method either forcibly ends the model's thinking process once a budget is reached, or lengthens it by appending "Wait" whenever the model tries to stop, which pushes the model to explore and verify further. In this way the model can re-check its reasoning steps and often correct its own errors.
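
A minimal sketch of the test-time control described above (called "budget forcing" in the s1 paper) is shown below. It is an illustration under assumptions, not the released implementation: `generate` is a hypothetical callable that returns the model's next thinking tokens and stops either when the model would end its thinking or when the token limit is reached, and `budget_force` and its parameters are names invented here.

```python
def budget_force(generate, prompt, max_tokens, extra_rounds=0):
    """Control thinking length at test time.

    generate(prompt, thinking, limit) is a hypothetical function returning a
    list of new thinking tokens; it stops when the model would end its
    thinking or after `limit` tokens, whichever comes first.
    """
    thinking = []
    rounds_left = extra_rounds
    while len(thinking) < max_tokens:
        # Let the model think until it wants to stop or the budget runs out.
        thinking += generate(prompt, thinking, max_tokens - len(thinking))
        if rounds_left == 0 or len(thinking) >= max_tokens:
            break  # either no extension requested or the budget is exhausted
        # The model tried to stop early: append "Wait" so it keeps reasoning.
        thinking.append("Wait")
        rounds_left -= 1
    # Hard cap: thinking is forcibly terminated at the budget.
    return thinking[:max_tokens]
```

Setting `extra_rounds=0` with a small `max_tokens` corresponds to cutting the thinking short, while a larger `extra_rounds` corresponds to extending it. In a real system the caller would then append the end-of-thinking delimiter and ask the model for its final answer.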

Experimental results show that after fine-tuning on the s1K dataset and applying budget forcing, the s1-32B model exceeds OpenAI's o1-preview on competition-level math problems by up to 27%. More notably, scaling with budget forcing lets s1-32B extrapolate beyond its performance without test-time intervention: its score on the AIME24 test set rises from 50% to 57%.

The core contribution of the study is a simple, efficient recipe for building a sample-efficient reasoning dataset and for scaling performance at test time. On this basis the team created the s1-32B model, whose performance matches or even surpasses closed-source models while remaining open source and highly sample-efficient. The code, model and data are open-sourced on GitHub.

The researchers also ran in-depth ablation experiments on both the data and the test-time scaling technique. On the data side, they found it crucial to consider difficulty, diversity and quality together. On the test-time side, budget forcing showed strong controllability and clear performance gains. The study also compares two families of methods, parallel scaling and sequential scaling, and evaluates techniques such as REBASE, offering useful pointers for future research.
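
To make the contrast concrete: in sequential scaling, later computation builds on earlier reasoning, as when a single thinking trace is extended. In parallel scaling, several answers are produced independently and then combined. One common way to combine them is majority voting, sketched below; `sample_answer` is a hypothetical function standing in for one independent model call, and the tie-breaking rule is an arbitrary choice made for this example.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one answer to vote on")
    counts = Counter(answers)
    best = max(counts.values())
    for answer in answers:
        if counts[answer] == best:
            return answer


def parallel_scale(sample_answer, question, n):
    """Parallel scaling: draw n independent answers and aggregate by vote.

    sample_answer(question, seed) is a hypothetical callable that returns
    one final answer string per independent attempt.
    """
    return majority_vote([sample_answer(question, seed=i) for i in range(n)])
```

Increasing `n` spends more test-time compute in parallel, whereas the sequential approach spends it by lengthening one line of reasoning.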

The study offers the field a low-cost, highly efficient approach to training reasoning models and lays groundwork for a wider range of AI applications.

Paper address: https://arxiv.org/pdf/2501.19393

The study demonstrates that careful dataset construction, combined with control over test-time compute, can significantly improve the reasoning capabilities of AI models, pointing to new directions for future AI development.