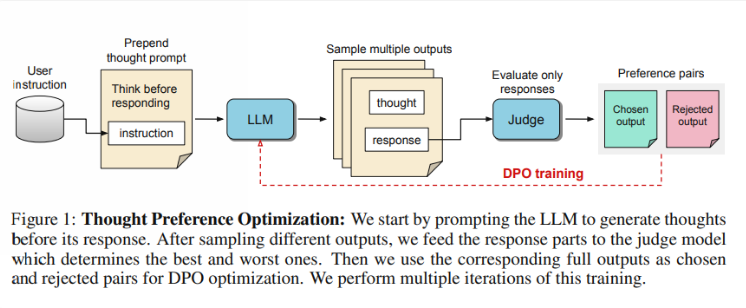

Meta's research team has launched a new method that lets AI models "think" before answering!

Meta, the University of California, Berkeley, and New York University have collaborated to develop Thinking Preference Optimization (TPO), a technique aimed at improving the performance of large language models (LLMs).
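In the team's published description of TPO, the model is prompted to write internal thoughts before its visible response. Several such outputs are sampled for each instruction, and a judge model scores only the responses. The highest- and lowest-scored full outputs (thought plus response) then form a chosen/rejected pair for Direct Preference Optimization (DPO). The sketch below illustrates that pair-selection and loss step under stated assumptions. The `Sample` class and the helper names are hypothetical. The judge scores are made-up stand-ins for a real judge model, and `beta=0.1` is an assumed default rather than a value taken from the source.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """Hypothetical container for one sampled output: a hidden thought plus the visible response."""
    thought: str
    response: str
    judge_score: float  # assumed: produced by a judge model that sees ONLY the response


def build_preference_pair(samples: list[Sample]) -> tuple[Sample, Sample]:
    """Return (chosen, rejected): the highest- and lowest-scored samples.

    The judge never reads the thought, so thoughts are shaped only indirectly,
    through the quality of the responses they lead to.
    """
    ranked = sorted(samples, key=lambda s: s.judge_score)
    return ranked[-1], ranked[0]


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair, using log-probs of the full outputs (thought + response).

    beta=0.1 is an assumed default, not a value from the source.
    """
    margin = beta * ((logp_chosen - ref_logp_chosen)
                     - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(margin)) == softplus(-margin), computed in a numerically stable form
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    demo = [
        Sample("Plan: list pros, then cons.", "Balanced answer ...", judge_score=8.5),
        Sample("Just answer quickly.", "Short answer.", judge_score=4.0),
        Sample("Consider the user's goal first.", "Tailored answer ...", judge_score=7.0),
    ]
    chosen, rejected = build_preference_pair(demo)
    print(chosen.judge_score, rejected.judge_score)  # 8.5 4.0
```

Only the response is scored, yet the whole output is trained on. This pairing is what lets the model learn which kinds of thoughts tend to produce better answers without any direct supervision of the thoughts themselves.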

2024-12-03