
GLM-4-Voice is an end-to-end speech model released by Zhipu AI. It can directly understand and generate Chinese and English speech, hold real-time voice conversations, and change attributes of its voice such as emotion, intonation, speed, and dialect according to the user's instructions.

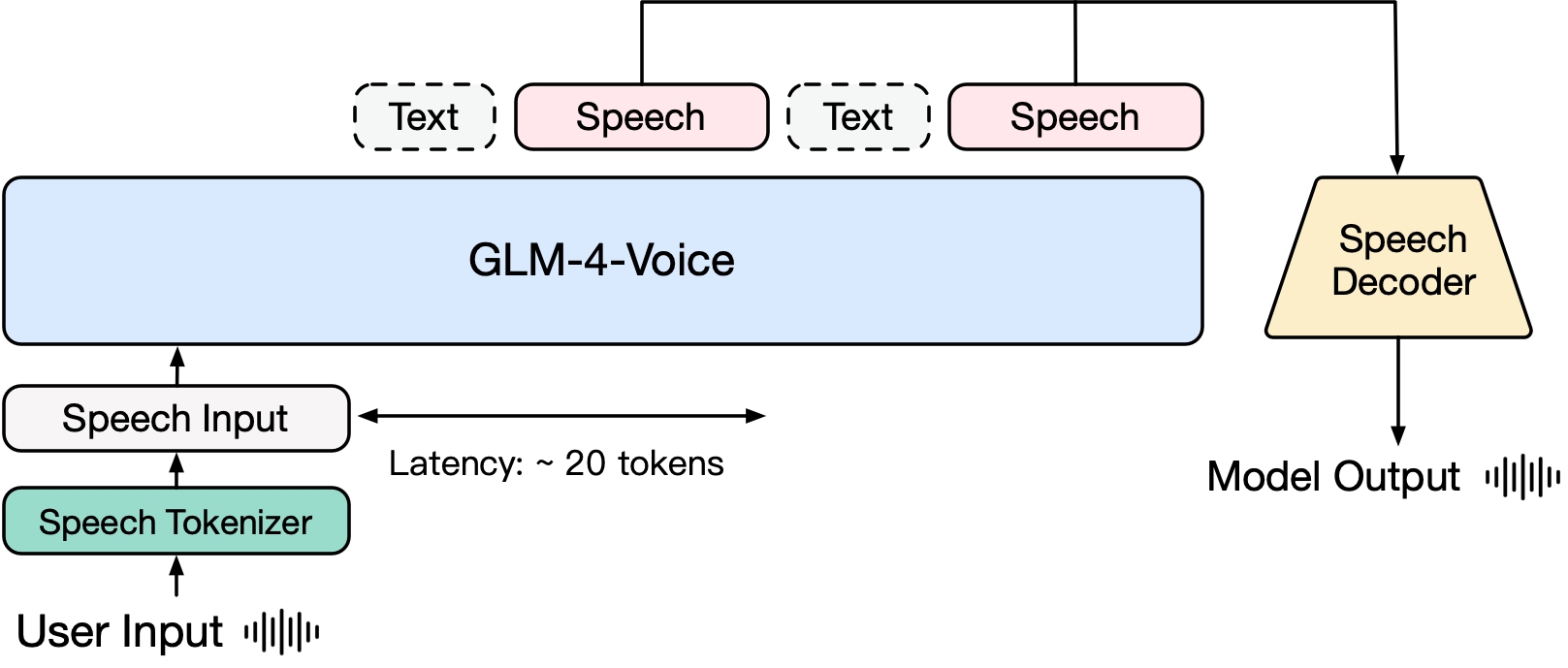

GLM-4-Voice consists of three parts:

GLM-4-Voice-Tokenizer: Trained by adding vector quantization to the encoder of Whisper and supervising on ASR data, it converts continuous speech input into discrete tokens. On average, one second of audio is represented by only 12.5 discrete tokens.

GLM-4-Voice-Decoder: A speech decoder that supports streaming inference, trained on the Flow Matching model structure of CosyVoice, which converts discrete speech tokens into continuous speech output. Generation can start with as few as 10 speech tokens, which reduces end-to-end conversation latency.

GLM-4-Voice-9B: Built on GLM-4-9B with pre-training and alignment on the speech modality, so that it can understand and generate discretized speech tokens.
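The two figures quoted for the tokenizer and decoder can be turned into rough arithmetic. The sketch below is only an illustration: the function names are hypothetical, and the 12.5 tokens per second rate is an average, so the results are estimates rather than exact counts.

```python
import math

# Figures quoted in the component descriptions.
TOKENS_PER_SECOND = 12.5   # average tokenizer rate
MIN_TOKENS_TO_START = 10   # speech tokens the decoder needs before it can start


def estimated_token_count(duration_s: float) -> int:
    """Estimate how many discrete speech tokens represent `duration_s` seconds of audio."""
    return math.ceil(duration_s * TOKENS_PER_SECOND)


def audio_seconds_covered(num_tokens: int) -> float:
    """Estimate how many seconds of audio `num_tokens` speech tokens correspond to."""
    return num_tokens / TOKENS_PER_SECOND


if __name__ == "__main__":
    print(estimated_token_count(10.0))                 # tokens for a 10-second clip
    print(audio_seconds_covered(MIN_TOKENS_TO_START))  # audio covered by the first decoder chunk
```

Under these figures, the 10 tokens needed to start decoding correspond to a little under one second of speech on average.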

For pre-training, to overcome the two challenges of model intelligence and synthesis expressiveness in the speech modality, we decoupled the Speech2Speech task into two tasks: "producing a text reply from the user's audio" and "synthesizing speech from the text reply and the user's speech". We designed two pre-training objectives that match these task forms, synthesizing speech-text interleaved data from text pre-training data and unsupervised audio data. Starting from the GLM-4-9B base model, GLM-4-Voice-9B was pre-trained on millions of hours of audio and hundreds of billions of tokens of audio-text interleaved data, giving it strong audio understanding and modeling capabilities.

For alignment, to support high-quality voice dialogue, we designed a streaming thinking architecture: given the user's speech, GLM-4-Voice alternately outputs content in two modalities, text and speech, in a streaming fashion. The speech modality uses the text as a reference to ensure high-quality reply content and adjusts the voice according to the user's spoken instructions. This keeps end-to-end modeling capability while retaining as much of the language model's intelligence as possible, and latency stays low: speech synthesis can begin after as few as 20 output tokens.
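The alternating text and speech output can be pictured as a single token stream that a consumer splits by modality. The following is a minimal sketch under stated assumptions, not the repository's implementation: the `(modality, token_id)` tagging, the `demux_stream` name, and the chunking policy are all hypothetical, and the default chunk size of 10 simply reuses the decoder's minimum quoted earlier.

```python
from typing import Iterable, Iterator, List, Tuple

# Hypothetical representation: each generated token is tagged with its modality.
Token = Tuple[str, int]  # ("text" | "audio", token_id)


def demux_stream(
    tokens: Iterable[Token], min_audio_tokens: int = 10
) -> Iterator[Tuple[str, List[int]]]:
    """Split an interleaved stream into text pieces and audio chunks.

    Text tokens are passed through immediately. Audio tokens are buffered and
    released once at least `min_audio_tokens` are available, so a streaming
    decoder could start synthesizing before generation has finished.
    """
    audio_buffer: List[int] = []
    for modality, token_id in tokens:
        if modality == "text":
            yield ("text", [token_id])
        elif modality == "audio":
            audio_buffer.append(token_id)
            if len(audio_buffer) >= min_audio_tokens:
                yield ("audio", audio_buffer)
                audio_buffer = []
        else:
            raise ValueError(f"unknown modality: {modality!r}")
    # Flush whatever audio is left when the stream ends.
    if audio_buffer:
        yield ("audio", audio_buffer)


if __name__ == "__main__":
    stream = [("text", 1), ("text", 2)] + [("audio", i) for i in range(12)]
    for kind, chunk in demux_stream(stream):
        print(kind, chunk)
```

Buffering only the audio side keeps the text reply available as early as possible while still handing the decoder chunks large enough to synthesize.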

A more detailed technical report will be released later.

| Model | Type | Download |
|---|---|---|
| GLM-4-Voice-Tokenizer | Speech Tokenizer | Huggingface, ModelScope |
| GLM-4-Voice-9B | Chat Model | Huggingface, ModelScope |
| GLM-4-Voice-Decoder | Speech Decoder | Huggingface, ModelScope |

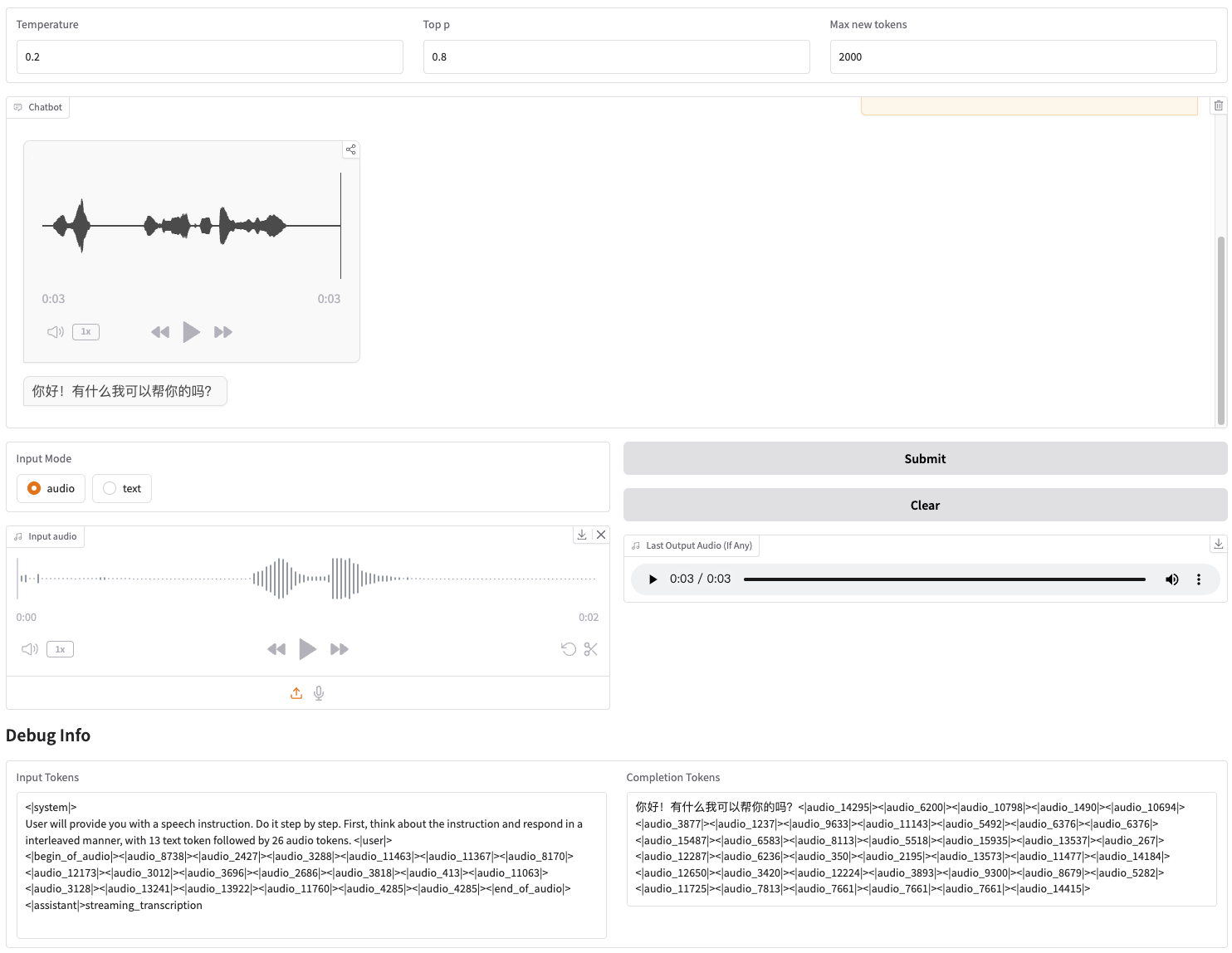

We provide a Web Demo that can be started directly. Users can enter voice or text, and the model will give both voice and text responses.

First download the repository

git clone --recurse-submodules https://github.com/THUDM/GLM-4-Voice

cd GLM-4-Voice

Then install the dependencies. You can also use the image zhipuai/glm-4-voice:0.1 we provide to skip this step.

pip install -r requirements.txt

Since the Decoder model does not support initialization through transformers, the checkpoint needs to be downloaded separately.

# git model download, please make sure git-lfs is installed

git lfs install

git clone https://huggingface.co/THUDM/glm-4-voice-decoder

Start model service

python model_server.py --host localhost --model-path THUDM/glm-4-voice-9b --port 10000 --dtype bfloat16 --device cuda:0

To launch with Int4 precision, run

python model_server.py --host localhost --model-path THUDM/glm-4-voice-9b --port 10000 --dtype int4 --device cuda:0

This command automatically downloads glm-4-voice-9b. If your network connection is poor, you can download the model manually and pass the local path through --model-path.
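Loading the model can take a while, so it may be convenient to wait until the model service accepts connections before moving on. The sketch below assumes the service listens on the `--host` and `--port` values from the command above (localhost and 10000); `wait_for_port` is a hypothetical helper, not part of this repository, and it only checks that the TCP port is open, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(
    host: str, port: int, timeout_s: float = 300.0, poll_interval_s: float = 1.0
) -> bool:
    """Return True once a TCP connection to (host, port) succeeds, False on timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=poll_interval_s):
                return True
        except OSError:
            # Nothing is listening yet (or the connect timed out); retry shortly.
            time.sleep(poll_interval_s)
    return False
```

For example, `wait_for_port("localhost", 10000)` would block for up to five minutes and report whether the port became reachable.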

Start web service

python web_demo.py --tokenizer-path THUDM/glm-4-voice-tokenizer --model-path THUDM/glm-4-voice-9b --flow-path ./glm-4-voice-decoder

You can access the web demo at http://127.0.0.1:8888.

This command automatically downloads glm-4-voice-tokenizer and glm-4-voice-9b. Note that glm-4-voice-decoder must be downloaded manually.

If your network connection is poor, you can download all three models manually and pass the local paths through --tokenizer-path, --flow-path, and --model-path.
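When all three models are stored locally, it can help to confirm that the directories exist before launching. This is a small sketch with hypothetical helper names; only the `web_demo.py` script and the three flags come from the commands above, and the example directory names are placeholders for wherever the models were actually downloaded.

```python
import sys
from pathlib import Path
from typing import Dict, List


def missing_model_dirs(paths: Dict[str, str]) -> List[str]:
    """Return the flags whose local path is not an existing directory."""
    return [flag for flag, path in paths.items() if not Path(path).is_dir()]


def build_web_demo_command(paths: Dict[str, str]) -> List[str]:
    """Build the argument list for launching web_demo.py with local model paths."""
    command = [sys.executable, "web_demo.py"]
    for flag, path in paths.items():
        command += [flag, path]
    return command


if __name__ == "__main__":
    # Placeholder locations; replace with the real download directories.
    local_paths = {
        "--tokenizer-path": "./glm-4-voice-tokenizer",
        "--model-path": "./glm-4-voice-9b",
        "--flow-path": "./glm-4-voice-decoder",
    }
    missing = missing_model_dirs(local_paths)
    if missing:
        print("Missing local directories for:", ", ".join(missing))
    else:
        print(" ".join(build_web_demo_command(local_paths)))
```

The resulting list can be passed to `subprocess.run` from the repository root, or printed and run by hand.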

Gradio's streaming audio playback is unstable. Audio quality is higher if you click the audio in the dialog box to play it after generation is complete.

We provide some conversation examples of GLM-4-Voice, including controlling emotions, changing speaking speed, generating dialects, etc.

Guide me to relax with a soft voice

Commentate on a football match in an excited voice

Tell a ghost story in a plaintive voice

Introduce how cold winter is in Northeastern dialect

Say "Eat grapes without spitting out grape skins" in Chongqing dialect

Say a tongue twister in Beijing dialect

speak faster

faster

Part of the code for this project comes from:

CosyVoice

transformers

GLM-4

Use of the GLM-4 model weights must comply with the model license.

The code in this open source repository is released under the Apache 2.0 license.