DdddOcr is a collaboration between the author and kerlomz. Its models are trained with deep networks on large batches of randomly generated data and are not tailored to any particular captcha vendor. How well the library works on a given captcha is largely a matter of luck: the captcha may or may not be recognized.

DdddOcr follows a minimal-dependency philosophy, keeping configuration and usage costs low in the hope of giving every tester a comfortable experience.

Project address: https://github.com/sml2h3/ddddocr

An easy-to-use, general-purpose captcha recognition library for Python


Sponsorship partners

Getting Started Guide

Environmental support

Installation steps

File directory description

Project underlying support

Use documentation

Basic OCR recognition ability

Target detection capability

Slider detection

OCR probability output

Custom OCR training model import

Version control

Related recommended articles or projects

Author

Donate

Star history

| Sponsorship partner | Reason for recommendation | |
|---|---|---|
| YesCaptcha | Commercial-grade recognition interface for Google reCaptcha, hCaptcha, and funCaptcha verification codes; click through to go directly to VIP4 | |
| Super Eagle | The world's leading intelligent image classification and recognition business: safe, accurate, efficient, stable, and open, with a strong technical and verification team that supports high concurrency and 24/7 job progress management | |
| Malenia | Malenia enterprise-level proxy IP gateway platform / proxy IP distribution software | |
| Nimbus VPS | 50% off the first month after registration | Zhejiang node, low price and large bandwidth: 100M for 30 yuan per month |

| System | CPU | GPU | Maximum supported Python version | Remark |
|---|---|---|---|---|
| Windows 64-bit | √ | √ | 3.12 | Some versions of Windows need the VC runtime library installed |
| Windows 32-bit | × | × | - | |
| Linux 64-bit / ARM64 | √ | √ | 3.12 | |
| Linux 32-bit | × | × | - | |
| macOS x64 | √ | √ | 3.12 | For M1/M2/M3... chips, see #67 |

i. Install from PyPI

```sh
pip install ddddocr
```

ii. Install from source

```sh
git clone https://github.com/sml2h3/ddddocr.git
cd ddddocr
python setup.py install
```

Please do not import ddddocr from inside the root directory of the ddddocr project, and make sure that your own development project directory is not named ddddocr. This is basic common sense.
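The reason is Python's import order: the directory of the running script (or the current directory) is searched before installed packages, so a local package or module with the same name is imported instead of the installed library. A minimal sketch of a pre-flight check follows; `shadows_package` is a hypothetical helper written for illustration and is not part of ddddocr.

```python
from pathlib import Path


def shadows_package(directory, package="ddddocr"):
    """Return True if `directory` contains a package or module named
    `package` that would be imported ahead of the installed one."""
    base = Path(directory)
    has_package = (base / package / "__init__.py").is_file()
    has_module = (base / f"{package}.py").is_file()
    return has_package or has_module


# Check the directory the script is being run from.
if shadows_package(Path.cwd()):
    print("Warning: a local 'ddddocr' would be imported instead of the installed package")
else:
    print("No local 'ddddocr' package or module found here")
```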

e.g.:

```
ddddocr
├── MANIFEST.in
├── LICENSE
├── README.md
├── /ddddocr/
│   │── __init__.py        main library code
│   │── common.onnx        new OCR model
│   │── common_det.onnx    target detection model
│   │── common_old.onnx    old OCR model
│   │── logo.png
│   │── README.md
│   │── requirements.txt
├── logo.png
└── setup.py
```

This project is built on models trained with dddd_trainer, whose underlying training framework is PyTorch. Inference in ddddocr relies on onnxruntime, so the platform compatibility and Python version support of this project depend mainly on onnxruntime.

This feature is mainly used to recognize a single line of text, where the text occupies most of the picture, such as common English and numeric verification codes. The project can recognize Chinese, English (in mixed case, or restricted to one case by setting the result range), digits, and some special characters.

```python
# example.py
import ddddocr

ocr = ddddocr.DdddOcr()

with open("example.jpg", "rb") as f:
    image = f.read()

result = ocr.classification(image)
print(result)
```

This library ships with two sets of OCR models. It does not switch between them automatically; you select the model with a parameter when initializing ddddocr.

```python
# example.py
import ddddocr

ocr = ddddocr.DdddOcr(beta=True)  # switch to the second OCR model

with open("example.jpg", "rb") as f:
    image = f.read()

result = ocr.classification(image)
print(result)
```

Tip: to recognize some PNG images with black content on a transparent background, pass the png_fix parameter to the classification method. It defaults to False.

```python
ocr.classification(image, png_fix=True)
```

Note

I have noticed that many people re-initialize ddddocr for every recognition, that is, they execute ocr = ddddocr.DdddOcr() each time. This is wrong. In general it only needs to be initialized once, because both initialization and the first recognition after initialization are slow.
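One way to follow this advice is to build the recognizer once and hand the same object to every caller. The sketch below is an illustration only: `get_recognizer` is a hypothetical helper, not part of ddddocr, and `FakeOcr` is a stand-in class so the pattern can be shown without loading a model.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def get_recognizer(factory):
    """Call `factory` the first time only; later calls return the cached object."""
    return factory()


class FakeOcr:
    """Stand-in for a slow-to-build recognizer, used only for this demo."""
    created = 0

    def __init__(self):
        FakeOcr.created += 1


first = get_recognizer(FakeOcr)
second = get_recognizer(FakeOcr)
print(first is second, FakeOcr.created)  # True 1
```

With the real library, the equivalent call would presumably be `get_recognizer(ddddocr.DdddOcr)`, made wherever a recognizer is needed.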

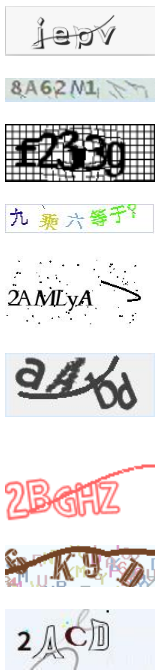

Reference example picture

Including but not limited to the following pictures

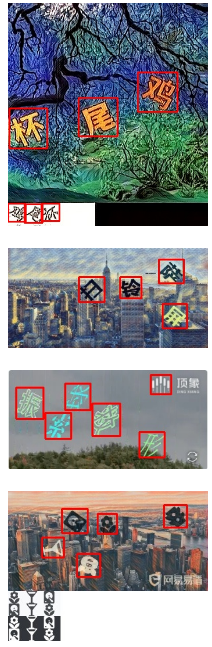

This feature quickly detects the positions of likely target objects in an image. Because a detected target is not necessarily text, it only returns each target's bbox. In target detection, a bbox (bounding box) describes a target's position as a rectangle, determined by the x and y coordinates of its upper-left corner and the x and y coordinates of its lower-right corner.

If you do not need OCR, you can turn it off by passing ocr=False during initialization. To enable target detection, pass det=True.
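As a small illustration of this corner-based representation, the hypothetical helpers below (not part of ddddocr) convert an `[x1, y1, x2, y2]` box into its size and center point, and sort boxes from left to right, which can be handy when targets must be handled in reading order. The coordinates are made up.

```python
def bbox_size(bbox):
    """Return (width, height) of a box given as [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = bbox
    return x2 - x1, y2 - y1


def bbox_center(bbox):
    """Return the (x, y) center of a box given as [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = bbox
    return (x1 + x2) / 2, (y1 + y2) / 2


def sort_left_to_right(bboxes):
    """Order boxes by the x coordinate of their upper-left corner."""
    return sorted(bboxes, key=lambda b: b[0])


boxes = [[120, 30, 160, 70], [10, 20, 50, 60]]  # made-up coordinates
for box in sort_left_to_right(boxes):
    print(box, bbox_size(box), bbox_center(box))
```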

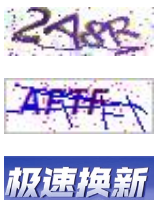

```python
import ddddocr
import cv2

det = ddddocr.DdddOcr(det=True)

with open("test.jpg", 'rb') as f:
    image = f.read()

bboxes = det.detection(image)
print(bboxes)

im = cv2.imread("test.jpg")

# Draw each detected bounding box on the image in red.
for bbox in bboxes:
    x1, y1, x2, y2 = bbox
    im = cv2.rectangle(im, (x1, y1), (x2, y2), color=(0, 0, 255), thickness=2)

cv2.imwrite("result.jpg", im)
```

Reference example picture

Including but not limited to the following pictures

The slider detection feature of this project is not implemented with AI recognition; it uses OpenCV's built-in algorithms. It may not work as well for users working from screenshots. If you do not need OCR or target detection, you can turn both off by passing ocr=False and det=False during initialization.

This function has two built-in algorithm implementations, which are suitable for two different situations. Please refer to the following instructions for details.

a. Algorithm 1

Algorithm 1 uses the edges of the slider image to compute the position of the matching notch (the "pit") in the background image. It applies when the slider image and the background image can be obtained separately and the slider image has a transparent background.

Slider image

Background image

```python
import ddddocr

det = ddddocr.DdddOcr(det=False, ocr=False)

with open('target.png', 'rb') as f:
    target_bytes = f.read()

with open('background.png', 'rb') as f:
    background_bytes = f.read()

res = det.slide_match(target_bytes, background_bytes)

print(res)
```

Because the slider image may have a transparent border, the computed result may not be exact. You need to estimate the width of the slider image's transparent border yourself and correct the bbox accordingly.
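A minimal sketch of such a correction, assuming the match is available as an `[x1, y1, x2, y2]` box and that the transparent border has the same width on every side. Both are assumptions, and `trim_border` is a hypothetical helper, not part of ddddocr.

```python
def trim_border(bbox, border):
    """Shrink an [x1, y1, x2, y2] box by `border` pixels on every side."""
    x1, y1, x2, y2 = bbox
    if border < 0 or 2 * border >= min(x2 - x1, y2 - y1):
        raise ValueError("border must be non-negative and smaller than half the box")
    return [x1 + border, y1 + border, x2 - border, y2 - border]


# Made-up match: a 60x60 box whose slider image has a 5-pixel transparent rim.
print(trim_border([100, 40, 160, 100], 5))  # [105, 45, 155, 95]
```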

Tip: if the slider image does not contain much background, you can add the simple_target parameter. Such images are usually in jpg or bmp format.

```python
import ddddocr

slide = ddddocr.DdddOcr(det=False, ocr=False)

with open('target.jpg', 'rb') as f:
    target_bytes = f.read()

with open('background.jpg', 'rb') as f:
    background_bytes = f.read()

res = slide.slide_match(target_bytes, background_bytes, simple_target=True)

print(res)
```

b. Algorithm 2

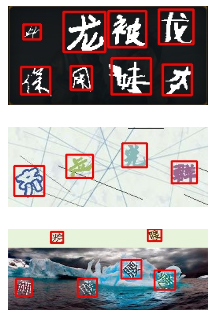

Algorithm 2 locates the slider's target notch (the "pit") by comparing the differences between two pictures.

Reference picture a: the full picture with the shadow of the target notch.

Reference picture b: the full picture.
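The idea of locating a region by image differencing can be shown on tiny grayscale grids. This is a pure-Python illustration under simplifying assumptions (equal-sized images, a fixed threshold), not the library's actual implementation, and `diff_bbox` is a hypothetical name.

```python
def diff_bbox(image_a, image_b, threshold=30):
    """Return [x1, y1, x2, y2] around pixels that differ by more than
    `threshold`, or None if the two grids match everywhere.

    Both images are equal-sized lists of rows of grayscale values (0-255).
    """
    xs, ys = [], []
    for y, (row_a, row_b) in enumerate(zip(image_a, image_b)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return [min(xs), min(ys), max(xs), max(ys)]


full = [[200] * 6 for _ in range(4)]   # full picture, no shadow
shadowed = [row[:] for row in full]    # copy that will receive the shadow
for y in (1, 2):
    for x in (3, 4):
        shadowed[y][x] = 80            # darker shadow of the target region
print(diff_bbox(shadowed, full))  # [3, 1, 4, 2]
```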

```python
import ddddocr

slide = ddddocr.DdddOcr(det=False, ocr=False)

with open('bg.jpg', 'rb') as f:
    target_bytes = f.read()

with open('fullpage.jpg', 'rb') as f:
    background_bytes = f.read()

res = slide.slide_comparison(target_bytes, background_bytes)

print(res)
```

To allow more flexible control over OCR results, the project supports restricting the range of characters that OCR can return.

You can pass probability=True when calling the classification method. The method then returns the probability of every character in the character table. You can also restrict the returned characters with the set_ranges method.
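To make the shape of this output concrete, the sketch below decodes a hand-written result. The dictionary layout (a `probability` list with one row per character position and a `charsets` list) follows the usage example in this section; the numbers are invented and `decode` is a hypothetical helper, not part of ddddocr.

```python
def decode(result):
    """Pick the most probable character at each position."""
    charsets = result["charsets"]
    chars = []
    for row in result["probability"]:
        best = max(range(len(row)), key=row.__getitem__)
        chars.append(charsets[best])
    return "".join(chars)


# Invented output for a two-character image over the range "0123456789".
fake_result = {
    "charsets": list("0123456789"),
    "probability": [
        [0.01, 0.02, 0.90, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01],
        [0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.05, 0.60, 0.03, 0.02],
    ],
}
print(decode(fake_result))  # 27
```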

Ⅰ. The set_ranges method restricts the returned characters

This method accepts one parameter. An int selects one of the built-in character set limits; a string defines a custom character set.

For int values, refer to the following table.

| Parameter value | Meaning |
|---|---|
| 0 | Digits 0-9 only |
| 1 | Lowercase English a-z only |
| 2 | Uppercase English A-Z only |
| 3 | Lowercase English a-z + uppercase English A-Z |
| 4 | Lowercase English a-z + digits 0-9 |
| 5 | Uppercase English A-Z + digits 0-9 |
| 6 | Lowercase English a-z + uppercase English A-Z + digits 0-9 |
| 7 | Default character set minus lowercase English a-z, uppercase English A-Z, and digits 0-9 |
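For reference, the built-in values 0 to 6 in the table correspond to the following character strings, written here with Python's `string` module. This mapping is a restatement of the table for illustration, not code taken from the library, and `BUILTIN_RANGES` is a hypothetical name. Value 7 is omitted because it depends on the model's default character set.

```python
import string

# Character strings for the built-in range values 0-6 from the table.
BUILTIN_RANGES = {
    0: string.digits,
    1: string.ascii_lowercase,
    2: string.ascii_uppercase,
    3: string.ascii_lowercase + string.ascii_uppercase,
    4: string.ascii_lowercase + string.digits,
    5: string.ascii_uppercase + string.digits,
    6: string.ascii_lowercase + string.ascii_uppercase + string.digits,
}

for value, chars in BUILTIN_RANGES.items():
    print(value, len(chars), chars)
```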

For a string, pass text that contains no spaces. Each character in it is a candidate character, for example: "0123456789+-x/="

```python
import ddddocr

ocr = ddddocr.DdddOcr()

with open("test.jpg", "rb") as f:
    image = f.read()

ocr.set_ranges("0123456789+-x/=")
result = ocr.classification(image, probability=True)

# Pick the most probable character at each position.
s = ""
for i in result['probability']:
    s += result['charsets'][i.index(max(i))]

print(s)
```

This project supports importing custom models trained with dddd_trainer. The reference import code is:

```python
import ddddocr

ocr = ddddocr.DdddOcr(det=False, ocr=False, import_onnx_path="myproject_0.984375_139_13000_2022-02-26-15-34-13.onnx", charsets_path="charsets.json")

with open('test.jpg', 'rb') as f:
    image_bytes = f.read()

res = ocr.classification(image_bytes)
print(res)
```

This project uses Git for version management. You can see the currently available versions in the repository.

Bring your younger brother OCR: a complete solution for obtaining and recognizing web verification codes locally using pure VBA

ddddocr Rust version

Modified version of captcha-killer

Training an alphanumeric CAPTCHA model and deploying it for recognition via ddddocr

...

Submissions of more excellent cases or tutorials are welcome. Create a new issue with a title starting with [Submission] and attach a link to the public tutorial. I will choose what to display in the README based on the article content, favoring articles that do not repeat existing material or that contain key content. Thank you, friends~

The author already has a large number of contacts, so friend requests may not be accepted. If you have any questions, you can discuss them in an issue.

This project is licensed under the MIT license; please see LICENSE for details.