Paper: On the Test-Time Zero-Shot Generalization of Vision-Language Models: Do We Really Need Prompt Learning?

Authors: Maxime Zanella, Ismail Ben Ayed.

This is the official GitHub repository for our paper accepted at CVPR '24. This work introduces the MeanShift Test-time Augmentation (MTA) method, which leverages vision-language models without requiring prompt learning. Our method randomly augments a single image into N views, then alternates between two key steps (see mta.py and the "Details on the code" section):

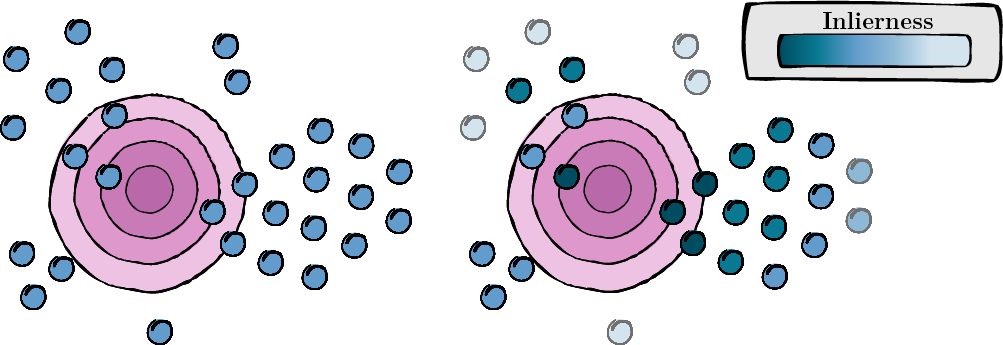

The first step computes an inlierness score for each augmented view, which assesses its relevance and quality.

Figure 1: Score computation for each augmented view.
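As a rough illustration of the scoring step, the sketch below assigns each view a score from a softmax over its Gaussian-kernel affinity to a current mode estimate. This is a simplified assumption, not the code in mta.py: the function names `gaussian_affinity` and `inlierness_scores`, the `bandwidth` and `temperature` parameters, and the toy data are all hypothetical, and the actual method may use additional terms.

```python
import numpy as np

def gaussian_affinity(features, mode, bandwidth):
    """Gaussian kernel value between each view embedding and the mode (hypothetical helper)."""
    sq_dist = np.sum((features - mode) ** 2, axis=1)
    return np.exp(-sq_dist / (2.0 * bandwidth ** 2))

def inlierness_scores(features, mode, bandwidth=1.0, temperature=0.1):
    """Softmax over kernel affinities: views close to the mode get higher scores."""
    logits = gaussian_affinity(features, mode, bandwidth) / temperature
    logits = logits - logits.max()  # subtract the max for numerical stability
    weights = np.exp(logits)
    return weights / weights.sum()

# Toy data (assumption): 7 views clustered around (1, 1, 1, 1) plus one outlier view.
rng = np.random.default_rng(0)
views = rng.normal(0.0, 0.05, size=(7, 4)) + 1.0
views = np.vstack([views, np.full((1, 4), -1.0)])

scores = inlierness_scores(views, mode=np.ones(4))
print(scores.round(3))  # the last (outlier) view receives the smallest score
```

The scores sum to one, so they can be used directly as weights when estimating the mode.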

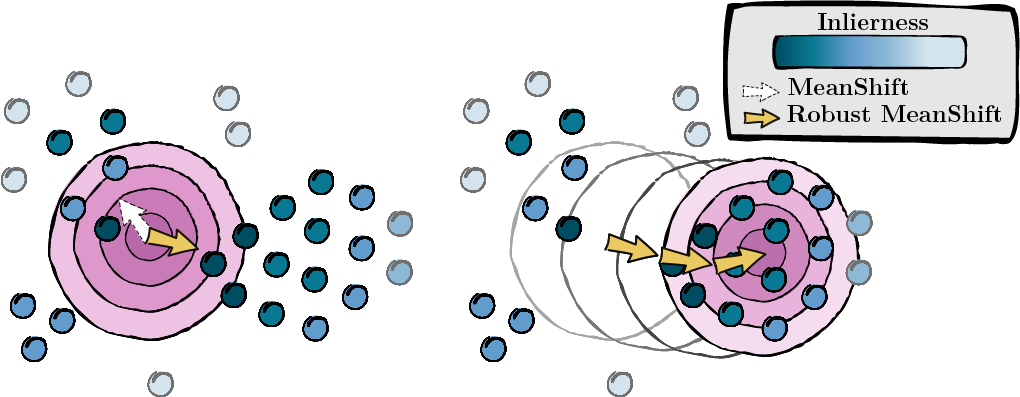

Based on the scores computed in the previous step, we seek the mode of the data points (MeanShift).

Figure 2: Seeking the mode, weighted by inlierness scores.
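The mode-seeking step can be sketched as a weighted mean-shift fixed-point iteration. The block below is a minimal illustration under stated assumptions, not the implementation in mta.py: `weighted_mean_shift`, its parameters, and the toy data are hypothetical, and the scores are held fixed here, whereas the method alternates between updating the scores and the mode.

```python
import numpy as np

def weighted_mean_shift(features, scores, bandwidth=1.0, n_iters=50, tol=1e-8):
    """Iterate towards a density mode, weighting each view by its inlierness score."""
    mode = np.average(features, axis=0, weights=scores)  # start from the weighted mean
    for _ in range(n_iters):
        sq_dist = np.sum((features - mode) ** 2, axis=1)
        kernel = np.exp(-sq_dist / (2.0 * bandwidth ** 2))
        weights = scores * kernel  # score-weighted Gaussian kernel
        new_mode = weights @ features / weights.sum()
        if np.linalg.norm(new_mode - mode) < tol:  # stop once the update is negligible
            return new_mode
        mode = new_mode
    return mode

# Toy data (assumption): 7 views clustered around (1, 1, 1, 1) plus one outlier view.
rng = np.random.default_rng(0)
views = rng.normal(0.0, 0.05, size=(7, 4)) + 1.0
views = np.vstack([views, np.full((1, 4), -1.0)])

uniform_scores = np.full(len(views), 1.0 / len(views))
mode = weighted_mean_shift(views, uniform_scores, bandwidth=0.5)
print(mode.round(2))  # stays close to the inlier cluster despite the outlier
```

Even with uniform scores, the kernel term sharply downweights the distant view, which is why the estimate settles on the dense cluster rather than the plain average.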

We follow the TPT installation and preprocessing steps, which ensure that your datasets are formatted appropriately. You can find their repository here. If more convenient, you can change the folder name of each dataset in the dictionary ID_to_DIRNAME in data/datautils.py (line 20).

Run MTA on the ImageNet dataset with a random seed of 1 and the 'a photo of a' prompt by entering the following command:

python main.py --data /path/to/your/data --mta --testsets I --seed 1

Or the 15 datasets at once:

python main.py --data /path/to/your/data --mta --testsets I/A/R/V/K/DTD/Flower102/Food101/Cars/SUN397/Aircraft/Pets/Caltech101/UCF101/eurosat --seed 1

More information on the procedure in mta.py.

gaussian_kernel

solve_mta

y) uniformly.

If you find this project useful, please cite it as follows:

@inproceedings{zanella2024test,
  title={On the test-time zero-shot generalization of vision-language models: Do we really need prompt learning?},
  author={Zanella, Maxime and Ben Ayed, Ismail},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={23783--23793},
  year={2024}
}

We express our gratitude to the TPT authors for their open-source contribution. You can find their repository here.