microGPT is a lightweight implementation of the Generative Pre-trained Transformer (GPT) model for natural language processing tasks. It is designed to be simple and easy to use, making it a great option for small-scale applications or for learning and experimenting with generative models.

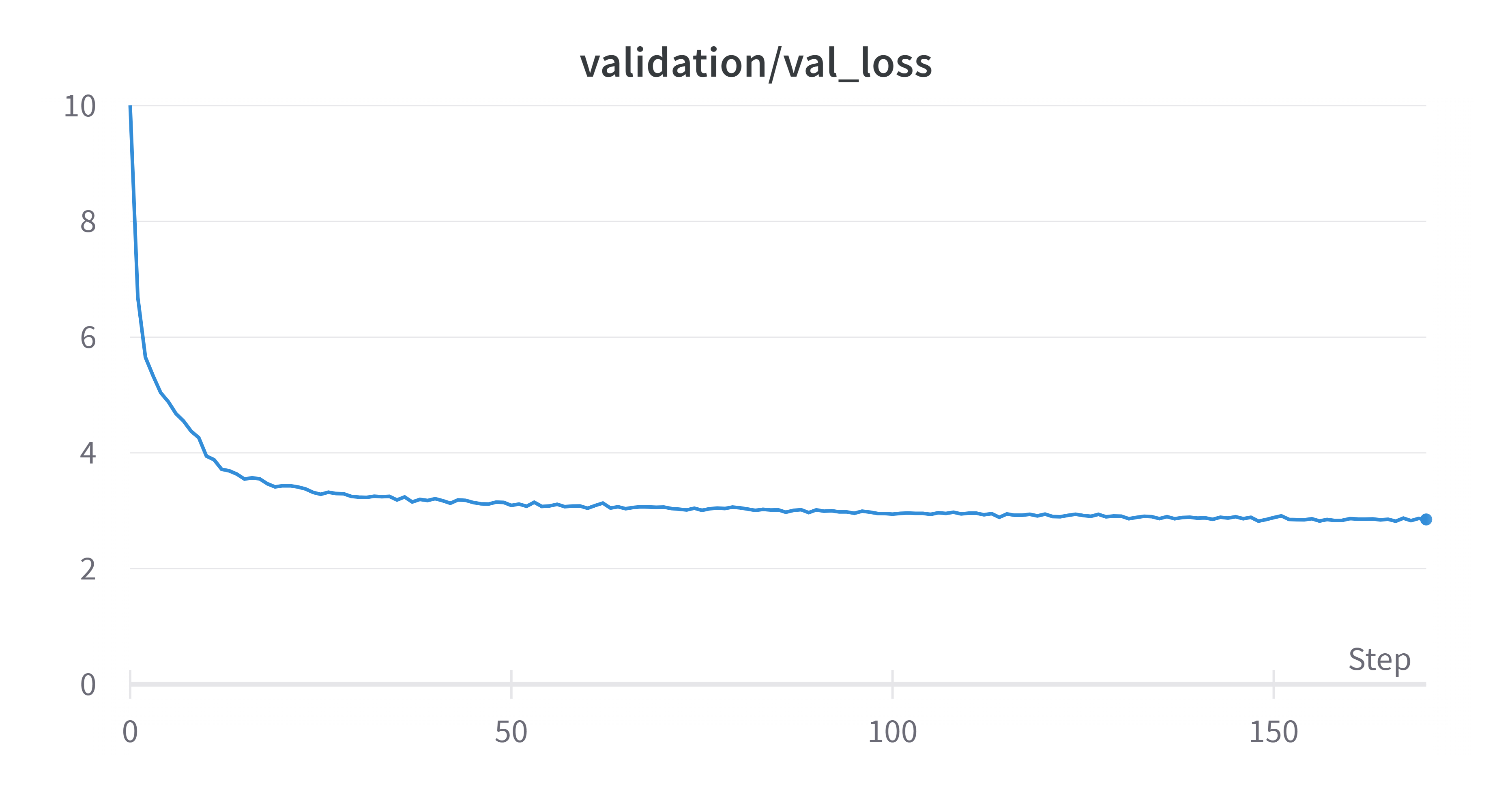

300k iterations of training


Install the dependencies:

```bash
pip install -r requirements.txt
```

1. Run `tokenizer/train_tokenizer.py` to generate the tokenizer file. The model tokenizes text based on it.
2. Run `datasets/prepare_dataset.py` to generate the dataset files.
3. Run `train.py` to start training.

Modify the files listed above if you wish to change their parameters.
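The first two steps turn raw text into token ids before training. As a rough illustration only, the sketch below uses a character-level vocabulary stored as JSON and a 90/10 train/validation split. These choices and all function names (`train_char_tokenizer`, `prepare_dataset`, etc.) are hypothetical; microGPT's own scripts may use a different tokenization scheme (for example, a subword tokenizer), file format, and split.

```python
import json

def train_char_tokenizer(text):
    """Hypothetical stand-in for tokenizer/train_tokenizer.py:
    map every distinct character in the corpus to an integer id."""
    chars = sorted(set(text))
    return {ch: i for i, ch in enumerate(chars)}

def tokenizer_to_json(stoi):
    # Serialize the vocabulary so it can be written to a tokenizer file.
    return json.dumps(stoi)

def tokenizer_from_json(s):
    # Load a vocabulary previously produced by tokenizer_to_json.
    return json.loads(s)

def encode(text, stoi):
    # Text -> list of token ids.
    return [stoi[ch] for ch in text]

def decode(ids, stoi):
    # List of token ids -> text (invert the vocabulary first).
    itos = {i: ch for ch, i in stoi.items()}
    return "".join(itos[i] for i in ids)

def prepare_dataset(text, stoi, val_fraction=0.1):
    """Hypothetical stand-in for datasets/prepare_dataset.py:
    encode the corpus and split it into train/validation id lists."""
    ids = encode(text, stoi)
    n_val = int(len(ids) * val_fraction)
    return ids[: len(ids) - n_val], ids[len(ids) - n_val :]

if __name__ == "__main__":
    corpus = "hello world"
    vocab = train_char_tokenizer(corpus)
    train_ids, val_ids = prepare_dataset(corpus, vocab)
    print(len(vocab), train_ids, val_ids)
    print(decode(train_ids + val_ids, vocab))
```

Because the vocabulary is just a character-to-id mapping, decoding the concatenated train and validation ids reproduces the original corpus exactly.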

To edit the model's generation parameters, head over to this section of `inference.py`:

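```python
# Parameters (Edit here):
n_tokens = 1000                     # number of tokens to generate
temperature = 0.8                   # lower values make sampling more deterministic
top_k = 0                           # top-k filtering (0 usually means disabled)
top_p = 0.9                         # nucleus (top-p) sampling threshold
model_path = 'models/microGPT.pth'  # trained model checkpoint

# Edit input here
context = "The magical wonderland of"
```

Interested in deploying it as a web app? Check out microGPT-deploy!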
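To make the effect of `temperature`, `top_k`, and `top_p` concrete, here is a minimal, self-contained sketch of the common temperature, top-k, and nucleus (top-p) sampling steps applied to one vector of logits. It assumes that `top_k = 0` disables top-k filtering and that `top_p = 1.0` disables nucleus filtering. The function name `sample_next_token` is hypothetical, and microGPT's `inference.py` may order or implement these steps differently (for example, on PyTorch tensors).

```python
import math
import random

def sample_next_token(logits, temperature=0.8, top_k=0, top_p=0.9, rng=random):
    """Pick one token id from raw logits (hypothetical helper).

    Assumption: top_k=0 disables top-k filtering and top_p=1.0
    disables nucleus filtering.
    """
    if temperature <= 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])

    # 1. Temperature: scale logits, then softmax (subtract max for stability).
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Token ids sorted from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # 2. Top-k: keep only the k most likely tokens.
    if top_k > 0:
        order = order[:top_k]

    # 3. Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    if top_p < 1.0:
        kept, cumulative = [], 0.0
        for i in order:
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        order = kept

    # 4. Draw from the surviving tokens; random.choices renormalizes the weights.
    weights = [probs[i] for i in order]
    return rng.choices(order, weights=weights, k=1)[0]

if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0]
    rng = random.Random(0)
    print([sample_next_token(toy_logits, 0.8, 0, 0.9, rng) for _ in range(10)])
```

With `temperature = 0.8` and `top_p = 0.9`, the least likely token in this toy example (id 3, about 1.6% probability after the softmax) falls outside the nucleus and is never sampled. Raising `temperature` flattens the distribution, while a small `top_k` restricts choices to the few highest-scoring tokens.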

- **From Scratch Efficiency:** Developed from the ground up, microGPT is a streamlined take on the GPT model. It favors efficiency at the cost of a slight drop in output quality.
- **Learning Playground:** Designed for people eager to explore AI, microGPT's architecture offers a hands-on way to understand the inner workings of generative models, build your skills, and deepen your understanding.
- **Small-Scale Powerhouse:** Beyond learning and experimentation, microGPT is suitable for small-scale applications, letting you add AI-powered language generation to projects where efficiency and performance matter most.
- **Customization Capabilities:** microGPT can be modified and fine-tuned to suit your specific goals, giving you a base for building AI solutions tailored to your requirements.
- **Learning Journey:** Use microGPT as a stepping stone to understanding the foundations of generative models. Its accessible design and documentation make it a good starting point for those new to AI.
- **Experimentation Lab:** Experiment by tweaking and testing microGPT's parameters. The model's simplicity and versatility make it a good base for trying out new ideas.

If you would like to contribute, please follow these guidelines:

By contributing to this repository, you agree to abide by our Code of Conduct and that your contributions will be released under the same License as the repository.

This model is inspired by Andrej Karpathy's "Let's build GPT from scratch" video and his nanoGPT repository, with modifications for this project.