Bahri Batuhan Bilecen, Ahmet Berke Gokmen, Furkan Guzelant, and Aysegul Dundar

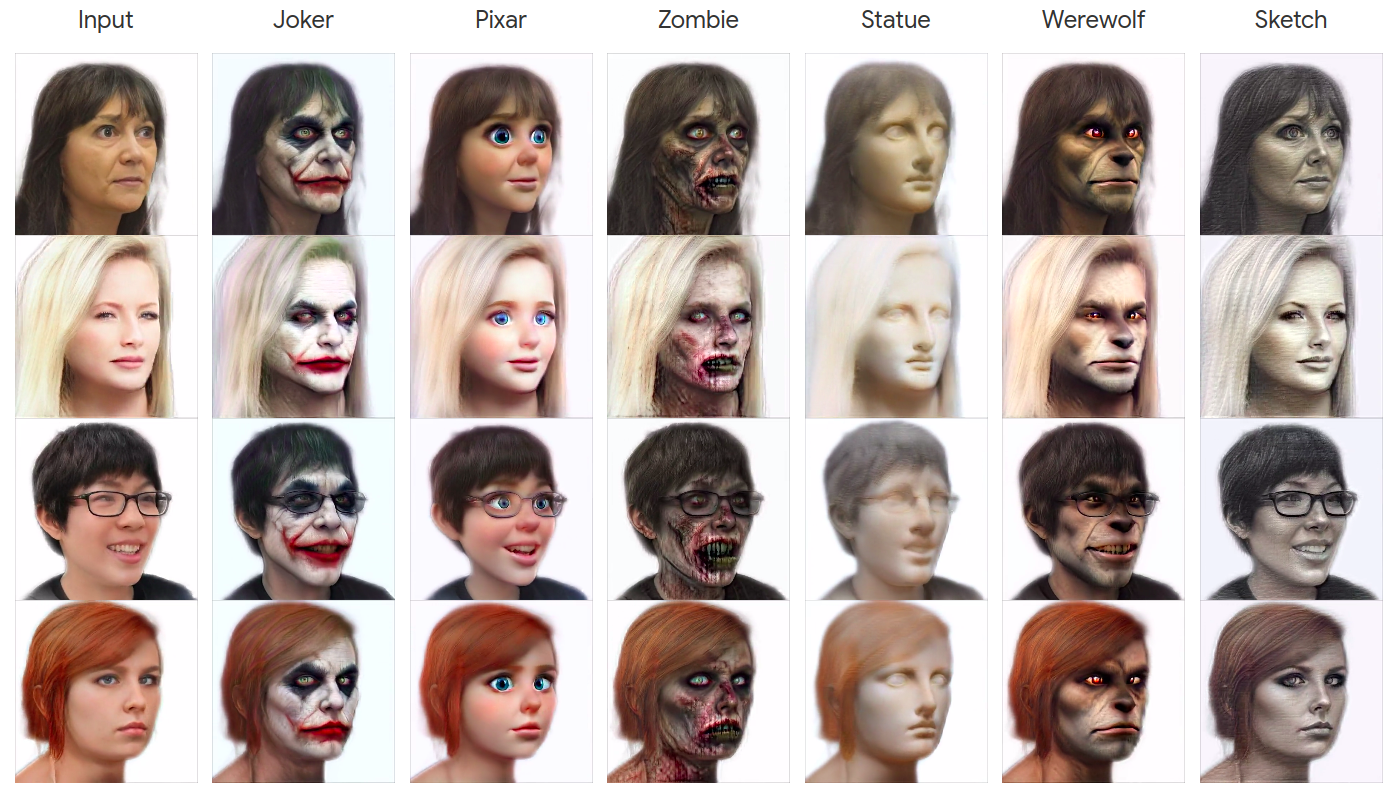

3D head stylization transforms realistic facial features into artistic representations, enhancing user engagement across gaming and virtual reality applications. While 3D-aware generators have made significant advancements, many 3D stylization methods primarily provide near-frontal views and struggle to preserve the unique identities of original subjects, often resulting in outputs that lack diversity and individuality. This paper addresses these challenges by leveraging the PanoHead model, synthesizing images from a comprehensive 360-degree perspective. We propose a novel framework that employs negative log-likelihood distillation (LD) to enhance identity preservation and improve stylization quality. By integrating multi-view grid score and mirror gradients within the 3D GAN architecture and introducing a score rank weighing technique, our approach achieves substantial qualitative and quantitative improvements. Our findings not only advance the state of 3D head stylization but also provide valuable insights into effective distillation processes between diffusion models and GANs, focusing on the critical issue of identity preservation.

git clone --recursive https://github.com/three-bee/3d_head_stylization.git

cd ./3d_head_stylization && pip install -r requirements.txt

We follow PanoHead's approach for pose extraction and face alignment. Follow PanoHead's setup procedure first, and do not skip the 3DDFA_V2 setup. Then run PanoHead/projector.py, omitting the project_pti stage so that only W+ encoding is performed.

For your convenience, we provide W+ latents of several real-life identities in the example folder.

Download all networks to your desired locations. We also provide stylized generator checkpoints for several prompts in this link.

| Network | Filename | Location |
|---|---|---|
| PanoHead | easy-khair-180-gpc0.8-trans10-025000.pkl | ${G_ckpt_path} |
| RealisticVision v5.1 | Realistic_Vision_V5.1_noVAE/ | ${diff_ckpt_path} |
| ControlNet edge | sd-controlnet-canny/ | ${controlnet_edge_path} |
| ControlNet depth | sd-controlnet-depth/ | ${controlnet_depth_path} |
| DepthAnythingV2 | depth_anything_v2_vitb.pth | ${depth_path} |
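Before launching training or inference, it can help to confirm that each downloaded file or folder is where you expect it. The following is a minimal sketch and not part of the repository: the function name `find_missing` and the `checkpoints` directory are assumptions made for illustration, and only the filenames are taken from the table above.

```python
from pathlib import Path

# Filenames come from the table above; the directory layout is hypothetical.
EXPECTED = {
    "G_ckpt_path": "easy-khair-180-gpc0.8-trans10-025000.pkl",
    "diff_ckpt_path": "Realistic_Vision_V5.1_noVAE",
    "controlnet_edge_path": "sd-controlnet-canny",
    "controlnet_depth_path": "sd-controlnet-depth",
    "depth_path": "depth_anything_v2_vitb.pth",
}


def find_missing(root):
    """Return the placeholder names whose file or folder is absent under root."""
    root = Path(root)
    return [name for name, rel in EXPECTED.items() if not (root / rel).exists()]


if __name__ == "__main__":
    # "checkpoints" is an assumed download directory, not one the repo prescribes.
    missing = find_missing("checkpoints")
    print("missing:", missing if missing else "none")
```

If you store the networks in separate locations, the same check can be applied per entry by testing each full path directly.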

Replace ${stylized_G_ckpt_path} with one of the checkpoint paths given in this link. The example folder provides several real-life W+ encoded heads. If latent_list_path is not a valid path, synth_sample_num synthetic samples are stylized instead.

python infer_LD.py \
  --save_path "work_dirs/demo" \
  --G_ckpt_path ${G_ckpt_path} \
  --stylized_G_ckpt_path ${stylized_G_ckpt_path} \
  --latent_list_path "example" \
  --synth_sample_num 10
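The fallback between real latents and synthetic samples can be pictured with the sketch below. It only illustrates the behaviour described above and is not the code in infer_LD.py: the function name `choose_inputs` is hypothetical, and treating "valid path" as "existing directory" is an assumption.

```python
import os


def choose_inputs(latent_list_path, synth_sample_num):
    """Pick real latents if the path is usable, else fall back to synthetic samples.

    Assumption: a "valid" path means an existing directory.
    """
    if latent_list_path and os.path.isdir(latent_list_path):
        # Stylize every entry found in the folder, in a stable order.
        return "latents", sorted(os.listdir(latent_list_path))
    # Otherwise only the number of synthetic samples to generate is known.
    return "synthetic", synth_sample_num


if __name__ == "__main__":
    mode, payload = choose_inputs("example", 10)
    print(mode, payload)
```

Under this reading, passing an empty or non-existent latent_list_path is a simple way to request purely synthetic heads.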

Change prompt and save_path as needed. Other hyperparameters can be adjusted in the training file.

python train_LD.py \
  --prompt "Portrait of a werewolf" \
  --save_path "work_dirs/demo" \
  --diff_ckpt_path ${diff_ckpt_path} \
  --depth_path ${depth_path} \
  --G_ckpt_path ${G_ckpt_path} \
  --controlnet_edge_path ${controlnet_edge_path} \
  --controlnet_depth_path ${controlnet_depth_path}
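To train one stylized generator per prompt, the command above can be assembled programmatically. This is a hedged sketch rather than a utility shipped with the repository: `train_command`, the placeholder paths, the second prompt, and the save-path naming scheme are all assumptions, and only the flags are taken from the command above.

```python
import shlex

# Flags in the order used by the training command above.
PATH_FLAGS = (
    "diff_ckpt_path",
    "depth_path",
    "G_ckpt_path",
    "controlnet_edge_path",
    "controlnet_depth_path",
)


def train_command(prompt, save_path, paths):
    """Build the argv list for one train_LD.py run."""
    argv = ["python", "train_LD.py", "--prompt", prompt, "--save_path", save_path]
    for flag in PATH_FLAGS:
        argv += [f"--{flag}", paths[flag]]
    return argv


if __name__ == "__main__":
    # Hypothetical locations; substitute the paths you downloaded the networks to.
    paths = {flag: f"/path/to/{flag}" for flag in PATH_FLAGS}
    # The second prompt is an illustrative example, not one from the paper.
    for prompt in ["Portrait of a werewolf", "Portrait of a zombie"]:
        slug = prompt.lower().replace(" ", "_")
        argv = train_command(prompt, f"work_dirs/{slug}", paths)
        print(shlex.join(argv))  # shell-quoted command, ready to copy
```

The script only prints the commands. To execute them, the same `argv` list could be passed to `subprocess.run(argv, check=True)` from the repository root.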

@misc{bilecen2024identitypreserving3dhead,
  title={Identity Preserving 3D Head Stylization with Multiview Score Distillation},
  author={Bahri Batuhan Bilecen and Ahmet Berke Gokmen and Furkan Guzelant and Aysegul Dundar},
  year={2024},
  url={https://arxiv.org/abs/2411.13536},
}

Copyright 2024 Bilkent DLR. Licensed under the Apache License, Version 2.0 (the "License").