To access the archived version of the tool, navigate to the Archive branch.

The Azure Cosmos DB Desktop Data Migration Tool is an open-source project containing a command-line application that provides import and export functionality for Azure Cosmos DB.

To use the tool, download the latest zip file for your platform (win-x64, mac-x64, or linux-x64) from Releases and extract all files to your desired install location. To begin a data transfer operation, first populate the migrationsettings.json file with appropriate settings for your data source and sink (see detailed instructions below or review examples), and then run the application from a command line: dmt.exe on Windows or dmt on other platforms.

Multiple extensions are provided in this repository. Find the documentation for the usage and configuration of each using the links provided:

Azure Cosmos DB

Azure Table API

JSON

MongoDB

SQL Server

Parquet

CSV

File Storage

Azure Blob Storage

AWS S3

Azure Cognitive Search

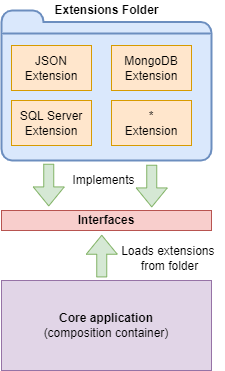

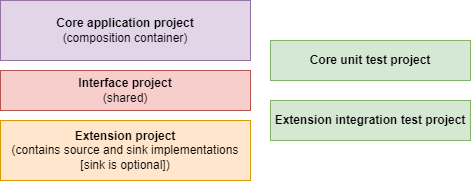

The Azure Cosmos DB Desktop Data Migration Tool is a lightweight executable that leverages the Managed Extensibility Framework (MEF). MEF enables decoupled implementation of the core project and its extensions. The core application is a command-line executable responsible for composing the required extensions at runtime by automatically loading them from the Extensions folder of the application. An Extension is a class library that includes the implementation of a System as a Source and (optionally) Sink for data transfer. The core application project does not contain direct references to any extension implementation. Instead, these projects share a common interface.

The Cosmos DB Data Migration Tool core project is a C# command-line executable. The core application serves as the composition container for the required Source and Sink extensions. Therefore, the application user needs to put only the desired Extension class library assembly into the Extensions folder before running the application. In addition, the core project has a unit test project to exercise the application's behavior, whereas extension projects contain concrete integration tests that rely on external systems.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

Clone the repository:

    git clone https://github.com/AzureCosmosDB/data-migration-desktop-tool.git

Using Visual Studio 2022, open CosmosDbDataMigrationTool.sln.

Build the project using the keyboard shortcut Ctrl+Shift+B (Cmd+Shift+B on a Mac). This builds all current extension projects as well as the command-line Core application. The assemblies built by the extension projects are written to the Extensions folder of the Core application build, so all extension options are available when the application runs.

This tutorial outlines how to use the Azure Cosmos DB Desktop Data Migration Tool to move JSON data to Azure Cosmos DB. This tutorial uses the Azure Cosmos DB Emulator.

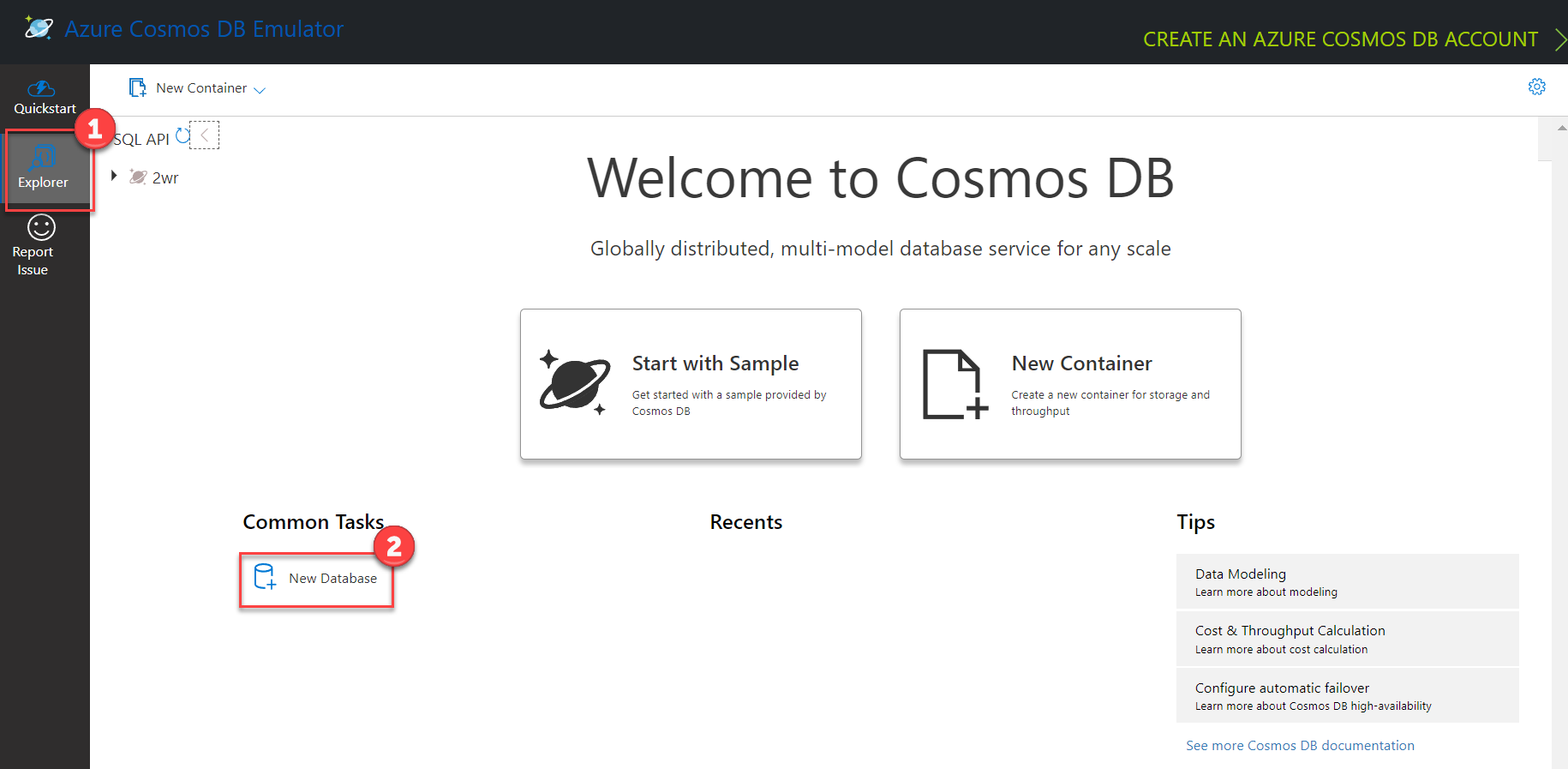

Launch the Azure Cosmos DB emulator application and open https://localhost:8081/_explorer/index.html in a browser.

Select the Explorer option from the left menu. Then choose the New Database link found beneath the Common Tasks heading.

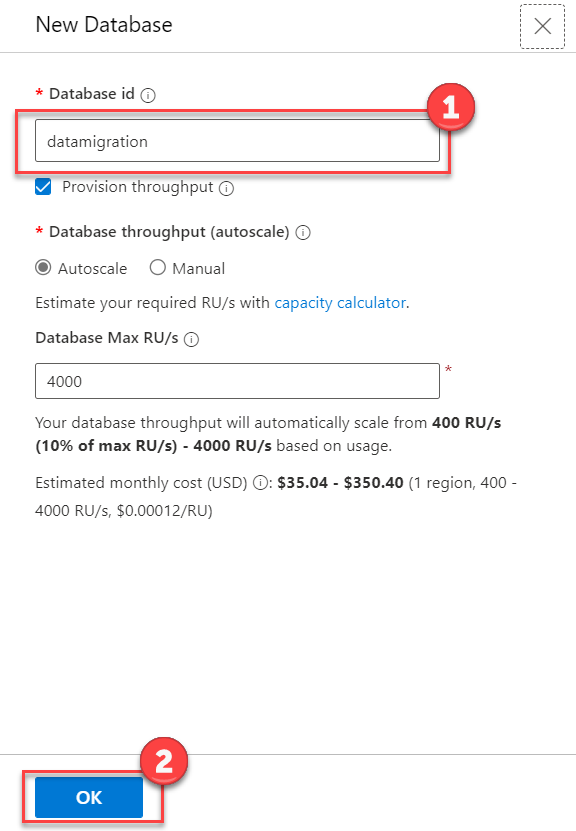

On the New Database blade, enter datamigration in the Database id field, then select OK.

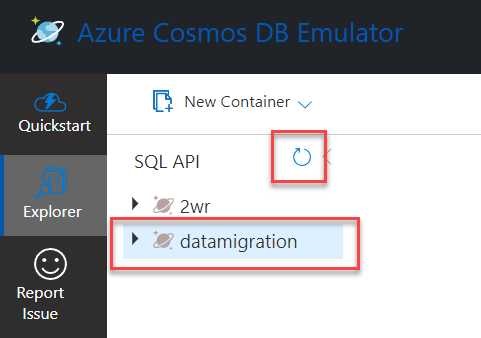

If the datamigration database doesn't appear in the list of databases, select the Refresh icon.

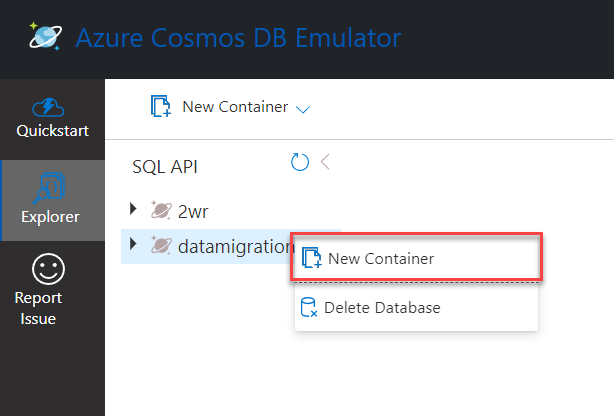

Expand the ellipsis menu next to the datamigration database and select New Container.

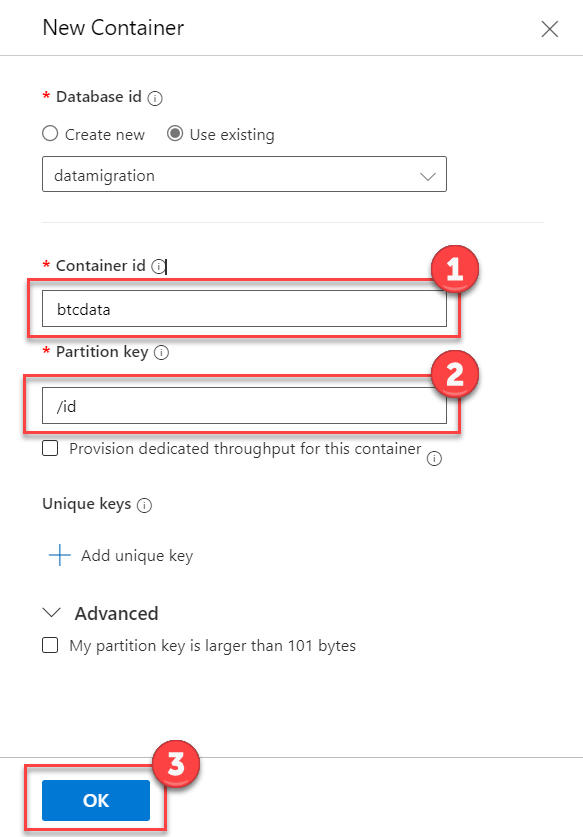

On the New Container blade, enter btcdata in the Container id field, and /id in the Partition key field. Select the OK button.

Note: When using the Cosmos DB Data Migration tool, the container doesn't have to exist beforehand; it is created automatically using the partition key specified in the sink configuration.

Each extension contains a README document that outlines configuration for the data migration. In this case, locate the configuration for JSON (Source) and Cosmos DB (Sink).

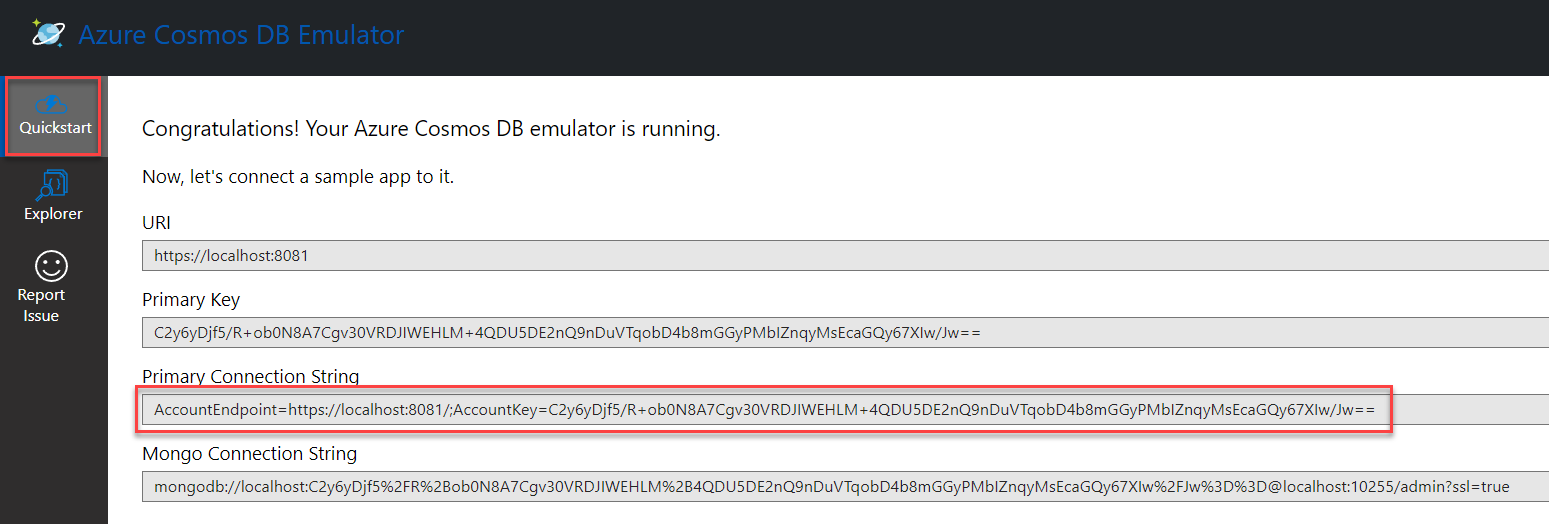

In the Visual Studio Solution Explorer, expand the Cosmos.DataTransfer.Core project, and open migrationsettings.json. This file provides an example outline of the settings file structure. Using the documentation linked above, configure the SourceSettings and SinkSettings sections. Ensure the FilePath setting points to the location where the sample data was extracted. The ConnectionString setting can be found on the Cosmos DB Emulator Quickstart screen as the Primary Connection String. Save the file.

Note: The alternate terms Target and Destination can be used in place of Sink in configuration files and command-line parameters. For example, "Target" and "TargetSettings" would also be valid in the example below.

    {
      "Source": "JSON",
      "Sink": "Cosmos-nosql",
      "SourceSettings": {
        "FilePath": "C:\\btcdata\\simple_json.json"
      },
      "SinkSettings": {
        "ConnectionString": "AccountEndpoint=https://localhost:8081/;AccountKey=C2y6yDj...",
        "Database": "datamigration",
        "Container": "btcdata",
        "PartitionKeyPath": "/id",
        "RecreateContainer": false,
        "IncludeMetadataFields": false
      }
    }
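Windows paths are easy to get wrong when editing JSON by hand, because every backslash in a JSON string must be escaped. As a minimal sketch (it is not part of the tool), the settings file can be generated with Python's standard json module, which handles the escaping. The helper names build_settings and write_settings are illustrative assumptions; the keys and values mirror the example above.

```python
import json
from pathlib import Path


def build_settings(file_path: str, connection_string: str) -> dict:
    """Return a settings dictionary mirroring the JSON-to-Cosmos example."""
    return {
        "Source": "JSON",
        "Sink": "Cosmos-nosql",
        "SourceSettings": {"FilePath": file_path},
        "SinkSettings": {
            "ConnectionString": connection_string,
            "Database": "datamigration",
            "Container": "btcdata",
            "PartitionKeyPath": "/id",
            "RecreateContainer": False,
            "IncludeMetadataFields": False,
        },
    }


def write_settings(settings: dict, destination: Path) -> None:
    """Serialize the settings; json.dumps escapes the backslashes in paths."""
    destination.write_text(json.dumps(settings, indent=2), encoding="utf-8")


if __name__ == "__main__":
    settings = build_settings(
        r"C:\btcdata\simple_json.json",
        # Placeholder: paste the emulator's Primary Connection String here.
        "AccountEndpoint=https://localhost:8081/;AccountKey=<your-key>",
    )
    write_settings(settings, Path("migrationsettings.json"))
```

The raw string keeps the path readable in Python, and the serialized file contains the doubled backslashes that JSON requires.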

Ensure the Cosmos.DataTransfer.Core project is set as the startup project then press F5 to run the application.

The application then performs the data migration. After a few moments, the process reports either "Data transfer complete." or "Data transfer failed."

Note: The Source and Sink properties should match the DisplayName set in the code for the extensions.
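A mismatched name can be caught before the tool is run. The following is a rough sketch of such a pre-flight check, written under several assumptions: the function names are hypothetical, the set of known display names is supplied by the caller (for example, collected from the extension READMEs), names are compared case-sensitively, and an alternate term such as Target is paired with the matching TargetSettings key. How the tool itself resolves these may differ.

```python
SINK_ALIASES = ("Sink", "Target", "Destination")


def resolve_sink(settings: dict) -> tuple:
    """Return (sink name, sink settings), accepting the alternate terms."""
    for alias in SINK_ALIASES:
        if alias in settings:
            # Assumption: "Target" pairs with "TargetSettings", and so on.
            return settings[alias], settings.get(f"{alias}Settings", {})
    raise KeyError(f"settings must define one of {SINK_ALIASES}")


def check_names(settings: dict, known_display_names: set) -> list:
    """List the Source/Sink values that are not known display names."""
    sink_name, _ = resolve_sink(settings)
    candidates = {"Source": settings.get("Source"), "Sink": sink_name}
    return [
        f"{key}={value!r}"
        for key, value in candidates.items()
        if value not in known_display_names
    ]


# Display names taken from the tutorial's example settings.
known = {"JSON", "Cosmos-nosql"}
problems = check_names({"Source": "JSON", "Target": "Cosmos-nosql"}, known)
print(problems or "Source and Sink names look valid")
```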

Download the latest release, or ensure the project is built.

The Extensions folder contains the plug-ins available for use in the migration. Each extension is located in a folder with the name of the data source. For example, the Cosmos DB extension is located in the folder Cosmos. Before running the application, you can open the Extensions folder and remove any folders for the extensions that are not required for the migration.

In the root of the build folder, locate the migrationsettings.json and update settings as documented in the Extension documentation. Example file (similar to tutorial above):

    {
      "Source": "JSON",
      "Sink": "Cosmos-nosql",
      "SourceSettings": {
        "FilePath": "C:\\btcdata\\simple_json.json"
      },
      "SinkSettings": {
        "ConnectionString": "AccountEndpoint=https://localhost:8081/;AccountKey=C2y6yDj...",
        "Database": "datamigration",
        "Container": "btcdata",
        "PartitionKeyPath": "/id",
        "RecreateContainer": false,
        "IncludeMetadataFields": false
      }
    }

Note: migrationsettings.json can also be configured to execute multiple data transfer operations with a single run command. To do this, include an Operations property consisting of an array of objects that include SourceSettings and SinkSettings properties using the same format as those shown above for single operations. Additional details and examples can be found in this blog post.
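As an illustration of the Operations layout described in the note, the sketch below builds a settings document with two operations and prints it as JSON. The helper names, file names, and container names are hypothetical, and each operation repeats its full sink settings because this sketch does not assume that settings are shared between operations; check the linked blog post for the exact schema.

```python
import json


def build_multi_operation_settings(source: str, sink: str, operations: list) -> dict:
    """Combine several source/sink setting pairs into one settings document."""
    required = {"SourceSettings", "SinkSettings"}
    for index, operation in enumerate(operations):
        missing = required - set(operation)
        if missing:
            raise ValueError(f"operation {index} is missing {sorted(missing)}")
    return {"Source": source, "Sink": sink, "Operations": list(operations)}


def sink_settings(container: str) -> dict:
    """Sink settings in the same format as the single-operation example."""
    return {
        "ConnectionString": "AccountEndpoint=https://localhost:8081/;AccountKey=<your-key>",
        "Database": "datamigration",
        "Container": container,
        "PartitionKeyPath": "/id",
    }


# Hypothetical file and container names, for illustration only.
settings = build_multi_operation_settings(
    "JSON",
    "Cosmos-nosql",
    [
        {"SourceSettings": {"FilePath": r"C:\btcdata\first.json"},
         "SinkSettings": sink_settings("first")},
        {"SourceSettings": {"FilePath": r"C:\btcdata\second.json"},
         "SinkSettings": sink_settings("second")},
    ],
)
print(json.dumps(settings, indent=2))
```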

Execute the program using the following command:

Using Windows

    dmt.exe

Note: Use the --settings option with a file path to specify a different settings file (overriding the default migrationsettings.json file). This makes it easier to automate different migration jobs in a programmatic loop.

Using macOS

    ./dmt

Note: Before you run the tool on macOS, you'll need to follow Apple's instructions on how to Open a Mac app from an unidentified developer.
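The programmatic loop mentioned for the --settings option could look roughly like the sketch below, which runs the tool once per settings file in a folder. The function names and the folder layout are assumptions. The sketch records each process exit code, but whether the tool signals a failed transfer through its exit code is not confirmed here, so check the console output as well.

```python
import subprocess
from pathlib import Path


def build_command(executable: str, settings_file: Path) -> list:
    """Build the command line that points the tool at one settings file."""
    return [executable, "--settings", str(settings_file)]


def run_all(executable: str, settings_dir: Path, runner=subprocess.run) -> dict:
    """Run the tool once per *.json file in settings_dir, in name order.

    Returns a mapping of settings file name to process exit code. The
    runner parameter exists so the loop can be exercised without the tool.
    """
    exit_codes = {}
    for settings_file in sorted(settings_dir.glob("*.json")):
        completed = runner(build_command(executable, settings_file), check=False)
        exit_codes[settings_file.name] = completed.returncode
    return exit_codes
```

For example, `run_all("dmt.exe", Path("jobs"))` on Windows or `run_all("./dmt", Path("jobs"))` on other platforms would process every settings file in a hypothetical jobs folder.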

Decide what type of extension you want to create. There are 3 different types of extensions and each of those can be implemented to read data, write data, or both.

Add a new folder in the Extensions folder with the name of your extension.

Create the extension project and an accompanying test project.

Name the extension project using the pattern Cosmos.DataTransfer.<Name>Extension.

Binary File Storage extensions are only used in combination with other extensions, so they should be placed in a .NET 6 Class Library without the additional debugging configuration needed below.

Add the new projects to the CosmosDbDataMigrationTool solution.

To facilitate local debugging, the extension build output, along with any dependencies, needs to be copied into the `Core\Cosmos.DataTransfer.Core\bin\Debug\net6.0\Extensions` folder. To set up the project to copy these files automatically, make the following changes.

Add a publish profile named LocalDebugFolder with a Target Location of `..\..\..\Core\Cosmos.DataTransfer.Core\bin\Debug\net6.0\Extensions`

Add a post-build target to the project file so that every Debug build publishes to that profile:

    <Target Name="PublishDebug" AfterTargets="Build" Condition=" '$(Configuration)' == 'Debug' ">
      <Exec Command="dotnet publish --no-build -p:PublishProfile=LocalDebugFolder" />
    </Target>

Add references to the System.ComponentModel.Composition NuGet package and the Cosmos.DataTransfer.Interfaces project.

Extensions can implement either IDataSourceExtension to read data or IDataSinkExtension to write data. Classes implementing these interfaces should include a class-level System.ComponentModel.Composition.ExportAttribute with the implemented interface type as a parameter. This allows the main application to discover the plugin.

Binary File Storage extensions implement the IComposableDataSource or IComposableDataSink interfaces. To be used with different file formats, the projects containing the formatters should reference the storage extension's project and add a new CompositeSourceExtension or CompositeSinkExtension referencing the storage and formatter extensions.

File format extensions implement the IFormattedDataReader or IFormattedDataWriter interfaces. To be usable, each should also declare one or more CompositeSourceExtension or CompositeSinkExtension classes to define the available storage locations for the format. This requires adding references to the Storage extension projects and adding a declaration for each file format/storage combination. Example:

    // Combines Azure Blob storage with the JSON format writer as a single sink.
    [Export(typeof(IDataSinkExtension))]
    public class JsonAzureBlobSink : CompositeSinkExtension<AzureBlobDataSink, JsonFormatWriter>
    {
        // The name that the Sink property in migrationsettings.json must match.
        public override string DisplayName => "JSON-AzureBlob";
    }

Extension settings are available through the IConfiguration instance passed into the ReadAsync and WriteAsync methods. Settings under SourceSettings/SinkSettings are included, as well as any settings in JSON files specified by SourceSettingsPath/SinkSettingsPath.

Implement your extension to read and/or write using the generic IDataItem interface, which exposes object properties as a list of key-value pairs. Depending on the specific structure of the data storage type being implemented, you can choose to support nested objects and arrays or only flat top-level properties.

Binary File Storage extensions are only concerned with generic storage, so they work only with Stream instances representing whole files rather than individual IDataItem objects.