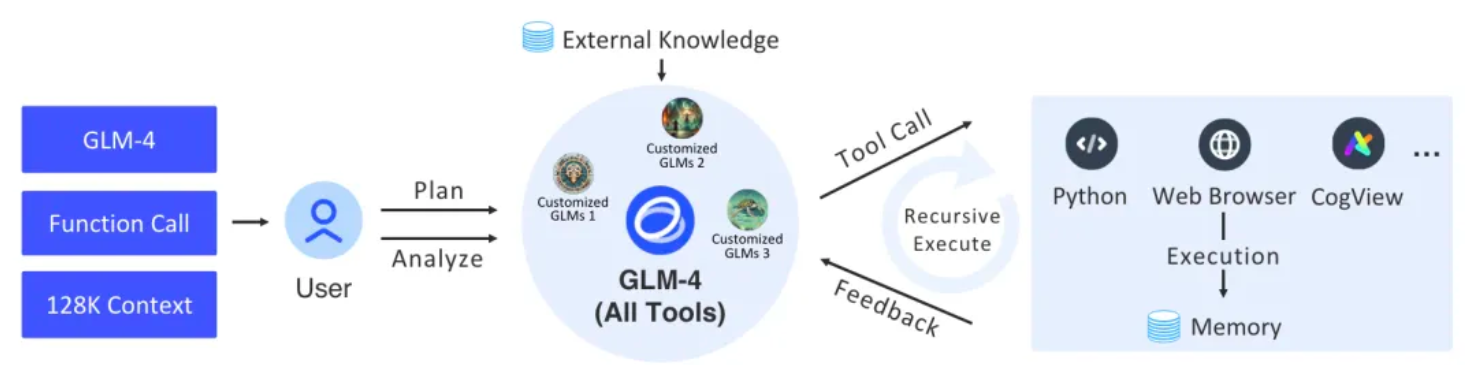

This project builds a complete agent and its supporting task architecture from LangChain's basic components. Under the hood it uses Zhipu AI's latest model, GLM-4 All Tools. Through the Zhipu AI API, the model can independently understand user intent, plan complex instructions, and call one or more tools (such as a web browser, a Python interpreter, and a text-to-image model) to accomplish complex tasks.

Figure: The overall workflow of GLM-4 All Tools and custom GLMs (agents).
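The tool-calling cycle described above (understand the request, plan tool calls, run the tools, then answer) can be illustrated offline with a minimal sketch. Everything here is hypothetical: `fake_model` stands in for GLM-4 with a scripted reply, and `TOOLS` and `run_agent` are illustrative names, not part of `langchain_glm` or the Zhipu AI API.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: plain functions keyed by tool name.
TOOLS: Dict[str, Callable[..., int]] = {
    "multiply": lambda first_int, second_int: first_int * second_int,
    "add": lambda first_int, second_int: first_int + second_int,
}


def fake_model(history: List[Dict]) -> Dict:
    """Stand-in for the LLM: plans tool calls first, then answers from their results."""
    if not any(m["role"] == "tool" for m in history):
        return {
            "role": "assistant",
            "tool_calls": [
                {"id": "call_1", "name": "multiply", "args": {"first_int": 23, "second_int": 7}},
                {"id": "call_2", "name": "add", "args": {"first_int": 1, "second_int": 2}},
            ],
        }
    results = [m["content"] for m in history if m["role"] == "tool"]
    return {"role": "assistant", "content": f"Results: {results}", "tool_calls": []}


def run_agent(user_input: str, max_steps: int = 5) -> str:
    """Alternate between the model and the tools until the model stops calling tools."""
    history: List[Dict] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = fake_model(history)
        history.append(reply)
        if not reply["tool_calls"]:
            return reply["content"]
        # Execute each requested tool and feed its output back to the model.
        for call in reply["tool_calls"]:
            output = TOOLS[call["name"]](**call["args"])
            history.append({"role": "tool", "tool_call_id": call["id"], "content": output})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What's 23 times 7, and 1 plus 2?"))  # → Results: [161, 3]
```

The `max_steps` cap is a design choice of this sketch that keeps a misbehaving model from looping forever.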

| Package path | Description |
|---|---|
| `agent_toolkits` | Platform tool adapters. `AdapterAllTool` provides a unified interface for the various platform tools so they can be integrated and executed seamlessly on different platforms; it adapts to each platform's specific parameters to ensure compatibility and consistent output. |
| `agents` | Encapsulates the `AgentExecutor` input and output, the agent session, tool parameters, and the tool execution strategy. |
| `callbacks` | Abstracts interaction events in the `AgentExecutor` process and surfaces information through these events. |
| `chat_models` | Wrapper around the `zhipuai` SDK that provides LangChain `BaseChatModel` integration and formats input and output as message objects. |
| `embeddings` | Wrapper around the `zhipuai` SDK that provides LangChain `Embeddings` integration. |
| `utils` | Assorted conversation utilities. |
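The `agent_toolkits` row describes an adapter that gives different platform tools one uniform interface. The sketch below illustrates that general adapter pattern in plain Python; `PlatformToolAdapter`, `PlatformA`, `PlatformB`, `build_params`, `parse_output`, and the fake backends are all hypothetical names, not the `langchain_glm` API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ToolOutput:
    """Uniform result shape returned for every platform."""
    platform: str
    text: str


class PlatformToolAdapter(ABC):
    """Hypothetical base class: each subclass adapts one platform's parameters and output."""

    platform: str = ""

    @abstractmethod
    def build_params(self, query: str) -> Dict[str, Any]:
        """Translate a generic query into platform-specific request parameters."""

    @abstractmethod
    def parse_output(self, raw: Dict[str, Any]) -> str:
        """Extract plain text from a platform-specific response."""

    def run(self, query: str, call_platform: Callable[[Dict[str, Any]], Dict[str, Any]]) -> ToolOutput:
        # Same entry point for every platform: adapt input, call, normalize output.
        raw = call_platform(self.build_params(query))
        return ToolOutput(platform=self.platform, text=self.parse_output(raw))


class PlatformA(PlatformToolAdapter):
    platform = "a"

    def build_params(self, query: str) -> Dict[str, Any]:
        return {"q": query}

    def parse_output(self, raw: Dict[str, Any]) -> str:
        return raw["result"]


class PlatformB(PlatformToolAdapter):
    platform = "b"

    def build_params(self, query: str) -> Dict[str, Any]:
        return {"input": {"text": query}}

    def parse_output(self, raw: Dict[str, Any]) -> str:
        return raw["choices"][0]["text"]


# Fake backends with different response shapes, so the sketch runs offline.
def fake_a(params: Dict[str, Any]) -> Dict[str, Any]:
    return {"result": params["q"].upper()}


def fake_b(params: Dict[str, Any]) -> Dict[str, Any]:
    return {"choices": [{"text": params["input"]["text"].upper()}]}


print(PlatformA().run("hello", fake_a))  # → ToolOutput(platform='a', text='HELLO')
print(PlatformB().run("hello", fake_b))  # → ToolOutput(platform='b', text='HELLO')
```

Callers depend only on `run()` and `ToolOutput`, so adding a platform means adding one subclass.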

Supported Python versions (official releases): 3.8, 3.9, 3.10, 3.11, and 3.12.

Before use, set the environment variable `ZHIPUAI_API_KEY` to your Zhipu AI API key.
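Instead of prompting for the key interactively with `getpass`, scripts and CI jobs can read it from the environment and fail fast when it is missing. A minimal sketch; `require_api_key` is a hypothetical helper, not part of `langchain_glm`.

```python
import os


def require_api_key(var_name: str = "ZHIPUAI_API_KEY") -> str:
    """Return the API key from the environment, raising a clear error if it is unset or empty."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it before running, "
            f"e.g. `export {var_name}=<your key>`"
        )
    return key
```

Calling `require_api_key()` before constructing the chat model surfaces a missing key immediately rather than at the first API request.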

```python
import getpass
import os

# Prompt for the Zhipu AI API key and store it in the environment
os.environ["ZHIPUAI_API_KEY"] = getpass.getpass()
```

```python
from langchain_glm import ChatZhipuAI

llm = ChatZhipuAI(model="glm-4")
```

```python
from langchain_core.tools import tool


@tool
def multiply(first_int: int, second_int: int) -> int:
    """Multiply two integers together."""
    return first_int * second_int


@tool
def add(first_int: int, second_int: int) -> int:
    """Add two integers."""
    return first_int + second_int


@tool
def exponentiate(base: int, exponent: int) -> int:
    """Exponentiate the base to the exponent power."""
    return base**exponent
```

```python
from typing import Dict, List

from langchain_core.messages import AIMessage

tools = [multiply, exponentiate, add]
# Bind the tool schemas so the model can emit structured tool calls
llm_with_tools = llm.bind_tools(tools)
# Look up each tool by name when the model requests it
tool_map = {tool.name: tool for tool in tools}


def call_tools(msg: AIMessage) -> List[Dict]:
    """Simple sequential tool calling helper."""
    # Copy each tool call so the original message is not mutated
    tool_calls = [dict(tool_call) for tool_call in msg.tool_calls]
    for tool_call in tool_calls:
        # Run the requested tool and attach its result to the tool call
        tool_call["output"] = tool_map[tool_call["name"]].invoke(tool_call["args"])
    return tool_calls


# A plain function in a pipe is wrapped as a RunnableLambda
chain = llm_with_tools | call_tools
```

```python
chain.invoke(
    "What's 23 times 7, and what's five times 18 and add a million plus a billion and cube thirty-seven"
)
```

Built-in tool options for GLM-4 All Tools:

- `code_interpreter`: use `sandbox` to specify the code sandbox environment. The default, `auto`, automatically invokes the sandbox environment to execute code; set `sandbox` to `none` to disable it.

- `web_browser`: specifies the web browser tool.
- `drawing_tool`: specifies the drawing tool.
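The built-in tools are passed to the executor as plain dictionaries such as `{"type": "web_browser"}`, so they can be assembled and validated before the executor is created. A minimal sketch, assuming only the two `sandbox` values described above (`auto` and `none`); `build_all_tools` is a hypothetical helper, not part of `langchain_glm`.

```python
from typing import Any, Dict, List

# Assumption: only the two sandbox modes documented above are accepted.
VALID_SANDBOX_MODES = {"auto", "none"}


def build_all_tools(
    sandbox: str = "auto",
    web_browser: bool = True,
    drawing_tool: bool = True,
) -> List[Dict[str, Any]]:
    """Assemble built-in tool entries in the dict shape used by the All Tools example."""
    if sandbox not in VALID_SANDBOX_MODES:
        raise ValueError(
            f"sandbox must be one of {sorted(VALID_SANDBOX_MODES)}, got {sandbox!r}"
        )
    tools: List[Dict[str, Any]] = [
        {"type": "code_interpreter", "code_interpreter": {"sandbox": sandbox}}
    ]
    if web_browser:
        tools.append({"type": "web_browser"})
    if drawing_tool:
        tools.append({"type": "drawing_tool"})
    return tools


print(build_all_tools(sandbox="none"))
```

The returned list can be extended with `@tool` functions, for example `build_all_tools(sandbox="none") + [multiply, exponentiate, add]`, and passed as `tools=`.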

```python
from langchain_glm.agents.zhipuai_all_tools import ZhipuAIAllToolsRunnable

agent_executor = ZhipuAIAllToolsRunnable.create_agent_executor(
    model_name="glm-4-alltools",
    tools=[
        # Built-in platform tools; the code sandbox is disabled here
        {"type": "code_interpreter", "code_interpreter": {"sandbox": "none"}},
        {"type": "web_browser"},
        {"type": "drawing_tool"},
        # Custom LangChain tools defined above
        multiply,
        exponentiate,
        add,
    ],
)
```

```python
import asyncio

from langchain_glm.agents.zhipuai_all_tools.base import (
    AllToolsAction,
    AllToolsActionToolEnd,
    AllToolsActionToolStart,
    AllToolsFinish,
    AllToolsLLMStatus,
)
from langchain_glm.callbacks.agent_callback_handler import AgentStatus


async def main() -> None:
    # invoke() returns an async iterator of agent events
    chat_iterator = agent_executor.invoke(
        chat_input="List the local files, tell me which files you are using, and check the current directory"
    )
    async for item in chat_iterator:
        if isinstance(item, AllToolsAction):
            print("AllToolsAction:" + str(item.to_json()))
        elif isinstance(item, AllToolsFinish):
            print("AllToolsFinish:" + str(item.to_json()))
        elif isinstance(item, AllToolsActionToolStart):
            print("AllToolsActionToolStart:" + str(item.to_json()))
        elif isinstance(item, AllToolsActionToolEnd):
            print("AllToolsActionToolEnd:" + str(item.to_json()))
        elif isinstance(item, AllToolsLLMStatus):
            # Print the model's text once the LLM step has finished
            if item.status == AgentStatus.llm_end:
                print("llm_end:" + item.text)


# In a Jupyter notebook, the body of main() can run directly with top-level `async for`
asyncio.run(main())
```

We also provide an integrated demo that you can run directly to see the results. It uses the following dependencies:

```toml
fastapi = "~0.109.2"
sse_starlette = "~1.8.2"
uvicorn = ">=0.27.0.post1"

# webui
streamlit = "1.34.0"
streamlit-option-menu = "0.3.12"
streamlit-antd-components = "0.3.1"
streamlit-chatbox = "1.1.12.post4"
streamlit-modal = "0.1.0"
streamlit-aggrid = "1.0.5"
streamlit-extras = "0.4.2"
```

Start the demo server, then the chat client:

```shell
python tests/assistant/server/server.py
python tests/assistant/start_chat.py
```

Showcase