Welcome to join the Firefly large model technology exchange group, follow our official account, and click the join group button.

Welcome to follow our Zhihu for communication and discussion: Red Rain is Pouring

Firefly is an open source large model training project that supports pre-training, instruction fine-tuning and DPO for mainstream large models, including but not limited to Qwen2, Yi-1.5, Llama3, Gemma, Qwen1.5, MiniCPM, MiniCPM3, Lla ma, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. This project supports full parameter training, LoRA, QLoRA efficient training , and supports pre-training, SFT, and DPO . If your training resources are limited, we highly recommend using QLoRA for instruction fine-tuning, because we have verified the effectiveness of this method on the Open LLM Leaderboard and achieved very good results.

?The main contents of this project are as follows:

The current version has been adapted to the templates of different chat models, and there are major updates to the code. If you prefer the previous version, you can download the code v0.0.1-alpha

The evaluation results come from Hugging Face’s Open LLM Leaderboard. Our models are trained using QLoRA scripts, and only 1 to 2 V100s are used for training.

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA |

|---|---|---|---|---|---|

| firefly-mixtral-8x7b | 70.16 | 68.09 | 85.76 | 71.49 | 55.31 |

| Yi-34B-Chat | 69.97 | 65.44 | 84.16 | 74.9 | 55.37 |

| firefly-llama-30b | 64.83 | 64.25 | 83.64 | 58.23 | 53.2 |

| falcon-40b-instruct | 63.47 | 61.6 | 84.31 | 55.45 | 52.52 |

| guanaco-33b | 62.98 | 62.46 | 84.48 | 53.78 | 51.22 |

| firefly-llama2-13b-v1.2 | 62.17 | 60.67 | 80.46 | 56.51 | 51.03 |

| firefly-llama2-13b | 62.04 | 59.13 | 81.99 | 55.49 | 51.57 |

| vicuna-13b-v1.5 | 61.63 | 56.57 | 81.24 | 56.67 | 51.51 |

| mpt-30b-chat | 61.21 | 58.7 | 82.54 | 51.16 | 52.42 |

| wizardlm-13b-v1.2 | 60.79 | 59.04 | 82.21 | 54.64 | 47.27 |

| vicuna-13b-v1.3 | 60.01 | 54.61 | 80.41 | 52.88 | 52.14 |

| llama-2-13b-chat | 59.93 | 59.04 | 81.94 | 54.64 | 44.12 |

| vicuna-13b-v1.1 | 59.21 | 52.73 | 80.14 | 51.9 | 52.08 |

| guanaco-13b | 59.18 | 57.85 | 83.84 | 48.28 | 46.73 |

? Using the training code of this project, and the above training data, we trained and open sourced the following model weights.

Chinese model:

| Model | base model | training length |

|---|---|---|

| firefly-baichuan2-13b | baichuan-inc/Baichuan2-13B-Base | 1024 |

| firefly-baichuan-13b | baichuan-inc/Baichuan-13B-Base | 1024 |

| firefly-qwen-7b | Qwen/Qwen-7B | 1024 |

| firefly-chatglm2-6b | THUDM/chatglm2-6b | 1024 |

| firefly-internlm-7b | internlm/internlm-7b | 1024 |

| firefly-baichuan-7b | baichuan-inc/baichuan-7B | 1024 |

| firefly-ziya-13b | YeungNLP/Ziya-LLaMA-13B-Pretrain-v1 | 1024 |

| firefly-bloom-7b1 | bigscience/bloom-7b1 | 1024 |

| firefly-bloom-2b6-v2 | YeungNLP/bloom-2b6-zh | 512 |

| firefly-bloom-2b6 | YeungNLP/bloom-2b6-zh | 512 |

| firefly-bloom-1b4 | YeungNLP/bloom-1b4-zh | 512 |

English model:

| Model | base model | training length |

|---|---|---|

| firefly-mixtral-8x7b | mistralai/Mixtral-8x7B-v0.1 | 1024 |

| firefly-llama-30b | huggyllama/llama-30b | 1024 |

| firefly-llama-13-v1.2 | NousResearch/Llama-2-13b-hf | 1024 |

| firefly-llama2-13b | NousResearch/Llama-2-13b-hf | 1024 |

| firefly-llama-13b-v1.2 | huggyllama/llama-13b | 1024 |

| firefly-llama-13b | huggyllama/llama-13b | 1024 |

? At present, this project mainly organizes the following instruction data sets and organizes them into a unified data format:

| Dataset | introduce |

|---|---|

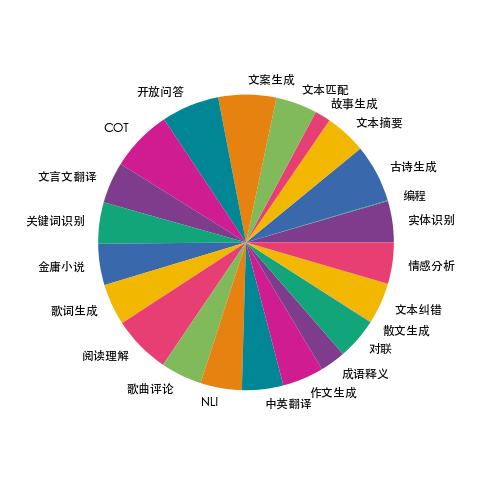

| firefly-train-1.1M | We collected data on 23 common Chinese NLP tasks and constructed many data related to Chinese culture, such as couplets, poetry, classical Chinese translation, prose, Jin Yong novels, etc. For each task, several instruction templates are manually written to ensure the high quality and richness of the data. The amount of data is 1.15 million. |

| moss-003-sft-data | Chinese and English multi-round dialogue data open sourced by the MOSS team of Fudan University, containing 1 million+ data |

| ultrachat | English multi-turn conversation data open sourced by Tsinghua University, containing 1.4 million+ data |

| WizardLM_evol_instruct_V2_143k | The English instruction fine-tuning data set open sourced by the WizardLM project uses the Evol-Instruct method to evolve the instructions and enhance the complexity of the instructions to improve the model's ability to follow complex instructions. Contains 143k pieces of data. |

| school_math_0.25M | The mathematical operation instruction data open sourced by the BELLE project team contains 250,000 pieces of data. |

| shareAI/CodeChat | It mainly includes corpus samples related to logical reasoning, code question and answer, and code generation. |

| shareAI/ShareGPT-Chinese-English-90k | Chinese and English parallel bilingual high-quality human-machine question and answer data set, covering user questions in real complex scenarios. |

| ultrachat_200k | The English command fine-tuning data open sourced by the Zephyr project is cleaned based on ultrachat data. |

| ultrafeedback_binarized | English preference data set, can be used for DPO training |

The above data can be selected, sampled or combined as needed. If you are training a Chinese model, it is recommended to try using moss data. In English, you can try ultrachat and WizardLM data.

The training data is in jsonl format. The data format of each row is as follows. The conversation field is required and other fields can be added or deleted according to actual needs. You can refer to the data/dummy_data.jsonl file in the project.

{

"conversation_id" : 44 ,

"category" : " Brainstorming " ,

"conversation" :[

{

"human" : "怎么能够有效地提升个人能力? " ,

"assistant" : "提升个人能力可以通过以下几个方面实现: nn 1. 学习新知识和技能:阅读相关书籍、参加课程和工作坊、观看教育视频等学习方式,可以帮助你掌握新的知识和技能,从而提升个人能力。 nn 2. 持续练习和实践:不断地练习和实践所学的知识和技能,可以帮助你更好地掌握和应用这些技能,提高你的表现和效率。 nn 3. 寻求反馈和改进:寻求他人的反馈和建议可以帮助你发现自己的不足之处,进一步完善和改进自己的能力。 nn 4. 建立良好的工作和生活习惯:建立良好的工作和生活习惯,如合理安排时间、注意健康、保持积极态度等,可以提高你的工作效率和生活品质,从而提升个人能力。 nn 5. 社交和交流:与他人交流和合作,可以帮助你更好地了解他人的观点和经验,从而提升自己的能力和见识。 "

},

{

"human" : "对于初学者来说,如何快速掌握编程技能? " ,

"assistant":"以下是几个建议,可以帮助初学者快速掌握编程技能:nn1. 学习基础知识:建议先学习编程语言的基础知识和语法,例如变量、数据类型、控制结构、函数等。可以通过在线课程、编程书籍、视频教程等方式学习。nn2. 实践编程:进行实际的编程练习是非常重要的,可以通过参加编程挑战、编写小项目等方式来进行实践。nn3. 寻求反馈:可以寻求他人的反馈和建议,例如在线编程社区、编程导师等,他们可以帮助你发现问题并及时纠正。nn4. 阅读优秀代码:阅读和分析优秀的代码可以帮助你学习其他程序员的编程思路和技巧,从而提高自己的编程能力。nn5. 不断学习和更新:编程技术和工具在不断更新和发展,因此需要不断学习新的知识和技能,例如学习新的编程语言、框架、工具等,以跟上技术的发展和变化。nn总之,学习编程需要耐心和坚持,并且需要不断地实践和学习。通过以上建议,可以帮助初学者快速掌握编程技能。"

},

{

"human" : "除了参加编程挑战和阅读代码,还有哪些方式可以提升自己的编程能力呢? " ,

"assistant":"除了参加编程挑战和阅读代码,还有以下几种方式可以提升自己的编程能力:nn1. 参与开源项目:加入一个开源项目,可以让你了解到实际的软件开发流程和团队合作方式,并且可以与其他开发人员合作,学习和分享编程技巧和经验。nn2. 进行代码重构:对已经编写的代码进行重构,可以帮助你了解更好的编程实践和设计模式,从而提高代码的可读性和可维护性。nn3. 练习算法和数据结构:算法和数据结构是编程中的重要基础,练习算法和数据结构可以帮助你了解不同的数据结构和算法,从而更好地解决实际问题。nn4. 学习软件工程和架构:学习软件工程和架构的知识,可以帮助你了解如何设计和构建可靠、可扩展和可维护的软件系统。nn5. 参加编程社区和论坛:参加编程社区和论坛可以让你与其他开发人员分享和讨论编程经验和技巧,了解最新的技术趋势和解决方案。nn总之,提升编程能力需要不断地学习和实践,并与其他开发人员和编程专家进行交流和合作,以获取更多的经验和知识。"

},

{

"human" : "你有没有推荐的编程挑战网站? " ,

"assistant":"当然,以下是一些值得推荐的编程挑战网站:nn1. HackerRank:HackerRank 是一个全球领先的技术招聘平台,提供数百种编程挑战和比赛,包括算法、数据结构、数学、SQL、AI 等多个技术领域。nn2. LeetCode:LeetCode 是一个在线编程学习和实践平台,提供数千道编程题目,主要涵盖算法和数据结构。nn3. Codeforces:Codeforces 是一个全球知名的编程竞赛网站,提供数百个比赛和练习题目,主要涵盖算法和数据结构。nn4. Codewars:Codewars 是一个在线编程学习和实践平台,提供数千道编程题目,主要涵盖算法、数据结构、面向对象编程等多个技术领域。nn5. Project Euler:Project Euler 是一个非营利性的在线编程挑战平台,提供一系列数学和计算机科学问题,需要用编程语言进行解答。nn以上这些编程挑战网站可以帮助你提高编程能力,并且可以与其他编程爱好者进行交流和竞争。"

},

{

"human" : "在学习编程的过程中,如何避免陷入困境和放弃呢? " ,

"assistant":"学习编程是一个需要长期坚持和不断学习的过程,以下是一些避免陷入困境和放弃的方法:nn1. 制定合理的学习计划:制定合理的学习计划,包括学习时间、学习内容、目标等,可以帮助你更好地管理时间和精力,避免学习过程中的松懈和分心。nn2. 寻找合适的学习资源:选择适合自己的学习资源,例如在线课程、编程书籍、视频教程等,可以让你更好地了解和掌握编程知识和技能。nn3. 寻求帮助和支持:在学习过程中,遇到问题和困难是很正常的,可以寻求他人的帮助和支持,例如参加编程社区、找到编程导师等。nn4. 进行实践和项目:实践和项目是学习编程的重要组成部分,可以帮助你更好地了解和掌握编程技能,同时也可以提高学习的兴趣和动力。nn5. 坚持并保持兴趣:坚持学习和保持兴趣是学习编程的关键。可以通过参加编程社区、参加编程竞赛、与其他编程爱好者交流等方式来保持兴趣和动力。nn总之,学习编程需要耐心和坚持,并需要不断学习和实践。通过以上方法可以帮助你避免陷入困境和放弃。"

}

],

}The data distribution of firefly-train-1.1M is shown in the figure below:

For the data format, please refer to the data/pretrain/dummy_pretrain.jsonl file in the project.

For the data format, please refer to the data/dummy_dpo.jsonl file in the project.

If an error is reported during training, you can check the FAQ first.

We extract various components used in training for subsequent expansion and optimization. For details, see the implementation in the component directory. Parameter configuration during training is stored in the train_args directory to facilitate unified management and changes. You can view the training configurations of different models in the train_args directory, and modify or add them as needed.

The versions of several major python packages are fixed under requirements.txt. Just execute the following script. Notice:

pip install requirements.txtIf you need to enable Unsloth, it is recommended to install or update the following Python packages:

pip install git+https://github.com/unslothai/unsloth.git

pip install bitsandbytes==0.43.1

pip install peft==0.10.0

pip install torch==2.2.2

pip install xformers==0.0.25.post1If you need to use Unsloth to train Qwen1.5, install the following packages:

pip install git+https://github.com/yangjianxin1/unsloth.gitDuring pre-training, we use the classic autoregressive loss, that is, the token at each position will participate in the loss calculation.

When fine-tuning the instruction, we only calculate the loss of the assistant's recovery part.

The train_args directory stores configuration files for different models using different training methods. The main parameters are described as follows:

The following parameters need to be set when using QLoRA training:

Regarding the parameter configuration of deepspeed, you can modify it as needed.

Full parameter pre-training, replace {num_gpus} with the number of graphics cards:

deepspeed --num_gpus={num_gpus} train.py --train_args_file train_args/pretrain/full/bloom-1b1-pretrain-full.jsonFine-tuning of all parameter instructions, replacing {num_gpus} with the number of graphics cards:

deepspeed --num_gpus={num_gpus} train.py --train_args_file train_args/sft/full/bloom-1b1-sft-full.jsonSingle card QLoRA pre-training:

python train.py --train_args_file train_args/pretrain/qlora/yi-6b-pretrain-qlora.jsonSingle card QLoRA instruction fine-tuning:

python train.py --train_args_file train_args/sft/qlora/yi-6b-sft-qlora.jsonDoka QLoRA pre-training:

torchrun --nproc_per_node={num_gpus} train.py --train_args_file train_args/pretrain/qlora/yi-6b-pretrain-qlora.jsonDoka QLoRA instruction fine-tuning:

torchrun --nproc_per_node={num_gpus} train.py --train_args_file train_args/sft/qlora/yi-6b-sft-qlora.jsonSingle card QLoRA for DPO training:

python train.py --train_args_file train_args/sft/qlora/minicpm-2b-dpo-qlora.jsonIf you use LoRA or QLoRA for training, this project only saves the weights and configuration files of the adapter, and you need to merge the adapter weights with the base model. For the script, see script/merge_lora.py

We provide an interactive script for multiple rounds of dialogue. Please see the script/chat directory for details. This script is compatible with all models trained in this project for inference. The template_name set in the script needs to be consistent with the template_name during model training.

cd script/chat

python chat.pyThe top_p, temperature, repetition_penalty, do_sample and other parameters in the generation script have a great impact on the generation effect of the model, and can be debugged and modified according to your own usage scenarios.

The inference script supports the use of base model and adapter for inference. The disadvantage is that each time the script is started, the weights need to be merged, which takes a long time.

Supports the use of 4bit for inference, low memory requirements, and the effect will be slightly reduced.

If OOM occurs, parameters such as per_device_train_batch_size and max_seq_length can be reduced to alleviate it. You can also set gradient_checkpointing=true, which can greatly reduce the memory usage, but the training speed will be slower.

There are versions of each python package in requirements.txt

pip install -r requirements.txtYou can specify the use of cards No. 0 and No. 1 for training in the following ways:

CUDA_VISIBLE_DEVICES=0,1 torchrun --nproc_per_node={num_gpus} train_qlora.py --train_args_file train_args/qlora/baichuan-7b-sft-qlora.jsonTraining Baichuan2 requires installing torch==2.0 and uninstalling xformers and apex, otherwise an error will be reported

RuntimeError: No such operator xformers::efficient_attention_forward_generic - did you forget to build xformers with `python setup.py develop`?

Qwen needs to uninstall flash-attn for QLoRA training, otherwise an error will be reported:

assert all((i.dtype in [torch.float16, torch.bfloat16] for i in (q, k, v)))

After inquiry, this problem widely exists in issues in the Qwen official code base. If you train Qwen-Base and Yi-Base, it is recommended to set template_name="default" to avoid this problem. If you perform SFT on the Qwen-Chat and Yi-Chat models, this problem will not occur. You can set template_name to "qwen" and "yi" respectively.

Note: This problem does not exist in Qwen1.5

Due to factors such as the limitation of model parameters and the degree of cleaning of training data, the open source model of this project may have the following limitations:

Based on the limitations of the above model, we require that the code, data, and models of this project must not be used for purposes that cause harm to society, and must comply with the commercial license of the base model.

If you use data, code or models from this project, please cite this project.

@misc{Firefly,

author = {Jianxin Yang},

title = {Firefly(流萤): 中文对话式大语言模型},

year = {2023},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {url{https://github.com/yangjianxin1/Firefly}},

}