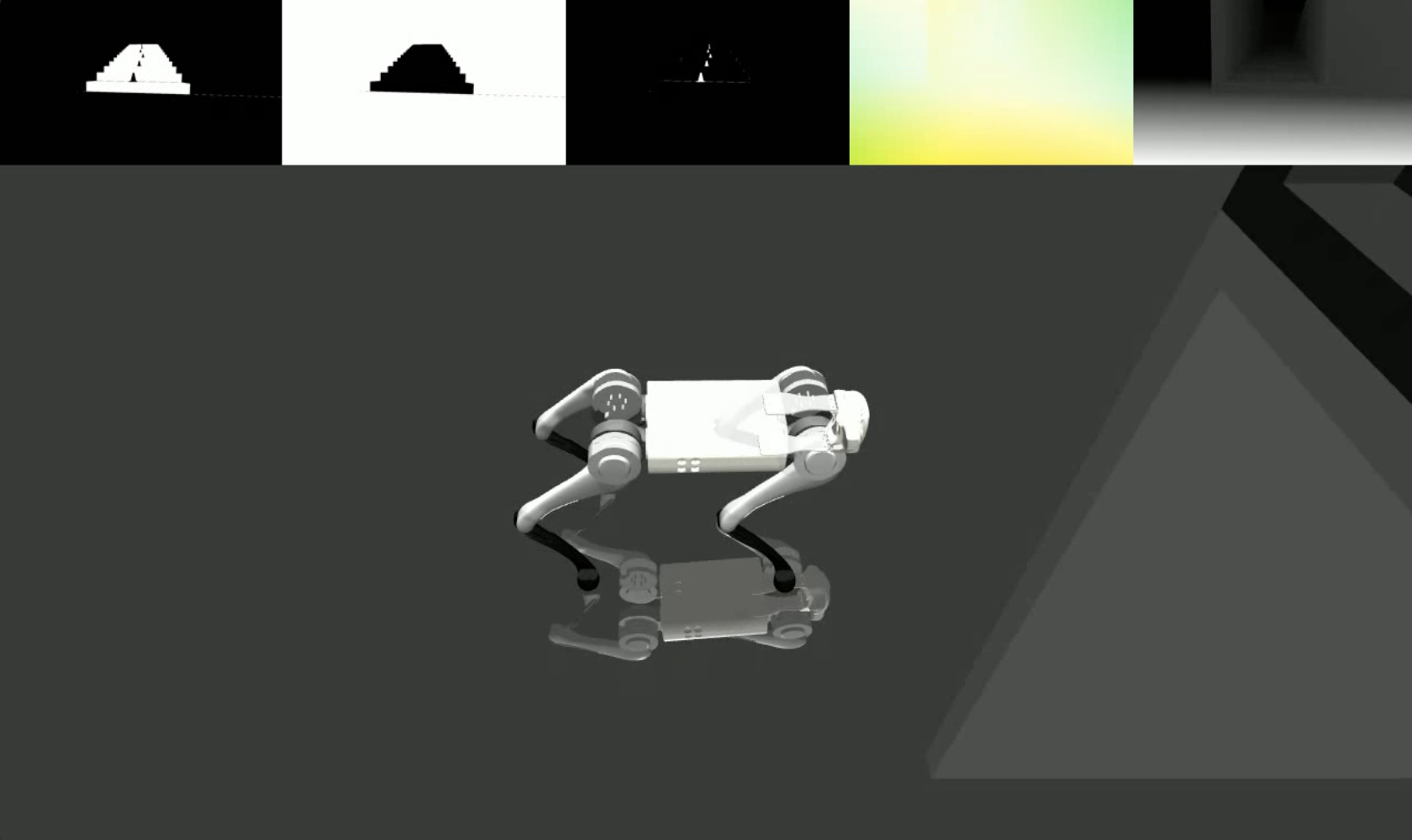

We bring realistic and diverse visual data from generative models to classical physics simulators, enabling robots to learn highly dynamic tasks like parkour without requiring depth.

lucidsim contains our simulated environments built using MuJoCo. We provide the environments and tools for running the LucidSim rendering pipeline for quadruped parkour. Training code is not yet included.

If you're looking for the generative augmentation code (required for running the full rendering pipeline), please check out the weaver repo!

Alan Yu<sup>*1</sup>, Ge Yang<sup>*1,2</sup>, Ran Choi<sup>1</sup>, Yajvan Ravan<sup>1</sup>, John Leonard<sup>1</sup>, Phillip Isola<sup>1</sup>

<sup>1</sup> MIT CSAIL, <sup>2</sup> Institute of AI and Fundamental Interactions (IAIFI)

* Indicates equal contribution

CoRL 2024


If you followed the setup instructions from weaver, feel free to install on top of that environment.

```bash
conda create -n lucidsim python=3.10
conda activate lucidsim

# Choose the CUDA version that your GPU supports. We will use CUDA 12.1
pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --extra-index-url https://download.pytorch.org/whl/cu121

# Install lucidsim with more dependencies
git clone https://github.com/lucidsim/lucidsim
cd lucidsim
pip install -e .
```

The last few dependencies require downgraded versions of setuptools and wheel. Downgrade before installing them, then revert afterwards:

```bash
pip install setuptools==65.5.0 wheel==0.38.4 pip==23
pip install gym==0.21.0
pip install gym-dmc==0.2.9
pip install -U setuptools wheel pip
```

Note: On Linux, make sure to set the environment variable `MUJOCO_GL=egl`.
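If you launch the scripts from your own Python entry point rather than a shell, the same variable can be set programmatically. The following is a minimal sketch, assuming it runs before `mujoco` (or any module that imports it) is first imported. `ensure_mujoco_gl` is a hypothetical helper and is not part of lucidsim.

```python
import os
import sys


def ensure_mujoco_gl(backend: str = "egl") -> str:
    """Set MUJOCO_GL on Linux unless the user has already chosen a backend.

    Returns the value in effect afterwards ("" if the variable is unset).
    """
    if sys.platform.startswith("linux"):
        # setdefault keeps any value that was exported explicitly.
        os.environ.setdefault("MUJOCO_GL", backend)
    return os.environ.get("MUJOCO_GL", "")


if __name__ == "__main__":
    print("MUJOCO_GL =", ensure_mujoco_gl() or "(unset)")
```

Because the helper uses `setdefault`, a value you exported in the shell takes precedence over the default chosen here.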

LucidSim generates photorealistic images by using a generative model to augment the simulator's rendering, using conditioning images to maintain control over the scene geometry.

We have provided an expert policy checkpoint under `checkpoints/expert.pt`. This policy was derived from that of Extreme Parkour. You can use it to sample an environment and visualize the conditioning images with:

```bash
# env-name: one of ['parkour', 'hurdle', 'gaps', 'stairs_v1', 'stairs_v2']
python play.py --save-path <save_path> [--env-name] [--num-steps] [--seed]
```

where `save_path` is the path at which the resulting video is saved.
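To render every environment in one go, the command above can be wrapped in a small driver script. This is a sketch under stated assumptions: it is run from the directory containing `play.py`, and `--save-path` accepts a file name ending in `.mp4` (the extension is a guess, not something the README states). `build_play_command` and `render_all` are hypothetical helpers, not part of lucidsim.

```python
import subprocess
import sys
from pathlib import Path

# Environment names listed in the usage comment above.
ENV_NAMES = ("parkour", "hurdle", "gaps", "stairs_v1", "stairs_v2")


def build_play_command(save_path, env_name=None, num_steps=None, seed=None):
    """Return the argv list for play.py, validating the environment name."""
    if env_name is not None and env_name not in ENV_NAMES:
        raise ValueError(f"unknown env-name {env_name!r}; expected one of {ENV_NAMES}")
    cmd = [sys.executable, "play.py", "--save-path", str(save_path)]
    # Optional flags are added only when a value is given,
    # so play.py's own defaults apply otherwise.
    for flag, value in (("--env-name", env_name), ("--num-steps", num_steps), ("--seed", seed)):
        if value is not None:
            cmd += [flag, str(value)]
    return cmd


def render_all(out_dir="videos", seed=0):
    """Run play.py once per environment, stopping at the first failure."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name in ENV_NAMES:
        # ".mp4" is an assumed extension; adjust to whatever play.py writes.
        subprocess.run(build_play_command(out / f"{name}.mp4", env_name=name, seed=seed), check=True)


if __name__ == "__main__":
    render_all()
```

Building the command as a list rather than a shell string avoids quoting problems when the save path contains spaces.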

To run the full generative augmentation pipeline, you will need to install the weaver package from the weaver repo. Once that is done, make sure the following environment variables are still set correctly:

```bash
COMFYUI_CONFIG_PATH=/path/to/extra_model_paths.yaml
PYTHONPATH=/path/to/ComfyUI:$PYTHONPATH
```

You can then run the full pipeline with:

```bash
python play_three_mask_workflow.py --save-path <save_path> --prompt-collection <prompt_collection> [--env-name] [--num-steps] [--seed]
```

where `save_path` and `env_name` are the same as before. `prompt_collection` should be a path to a `.jsonl` file with correctly formatted prompts, as in the `weaver/examples` folder.
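Before starting a long rendering run, it can help to check the two prerequisites described above: the environment variables and a parseable prompt file. The sketch below is illustrative only. `check_environment` and `load_prompt_collection` are hypothetical helpers, the `PYTHONPATH` check is a heuristic that assumes your checkout directory is named `ComfyUI`, and the loader only confirms that each line is valid JSON. It does not validate the prompt fields, which are defined by weaver.

```python
import json
import os
from pathlib import Path


def check_environment(env=os.environ):
    """Return a list of problems with the variables described above (empty if none)."""
    problems = []
    config = env.get("COMFYUI_CONFIG_PATH")
    if not config:
        problems.append("COMFYUI_CONFIG_PATH is not set")
    elif not Path(config).is_file():
        problems.append(f"COMFYUI_CONFIG_PATH does not point to a file: {config}")
    # Heuristic: look for a PYTHONPATH entry whose last component is "ComfyUI".
    entries = [p for p in env.get("PYTHONPATH", "").split(os.pathsep) if p]
    if not any(Path(p).name == "ComfyUI" for p in entries):
        problems.append("no PYTHONPATH entry ends in 'ComfyUI'")
    return problems


def load_prompt_collection(path):
    """Parse a .jsonl file, one JSON value per non-empty line.

    Raises ValueError naming the first line that is not valid JSON.
    """
    records = []
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            try:
                records.append(json.loads(line))
            except json.JSONDecodeError as exc:
                raise ValueError(f"{path}:{lineno}: invalid JSON ({exc.msg})") from exc
    return records
```

A typical use is to call `check_environment()` first, print any problems it returns, and then call `load_prompt_collection` on the file you intend to pass to `--prompt-collection`.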

We thank the authors of Extreme Parkour for their open-source codebase, which we used as a starting point for our expert policy (`lucidsim.model`).

If you find our work useful, please consider citing:

```bibtex
@inproceedings{yu2024learning,
  title={Learning Visual Parkour from Generated Images},
  author={Alan Yu and Ge Yang and Ran Choi and Yajvan Ravan and John Leonard and Phillip Isola},
  booktitle={8th Annual Conference on Robot Learning},
  year={2024},
}
```