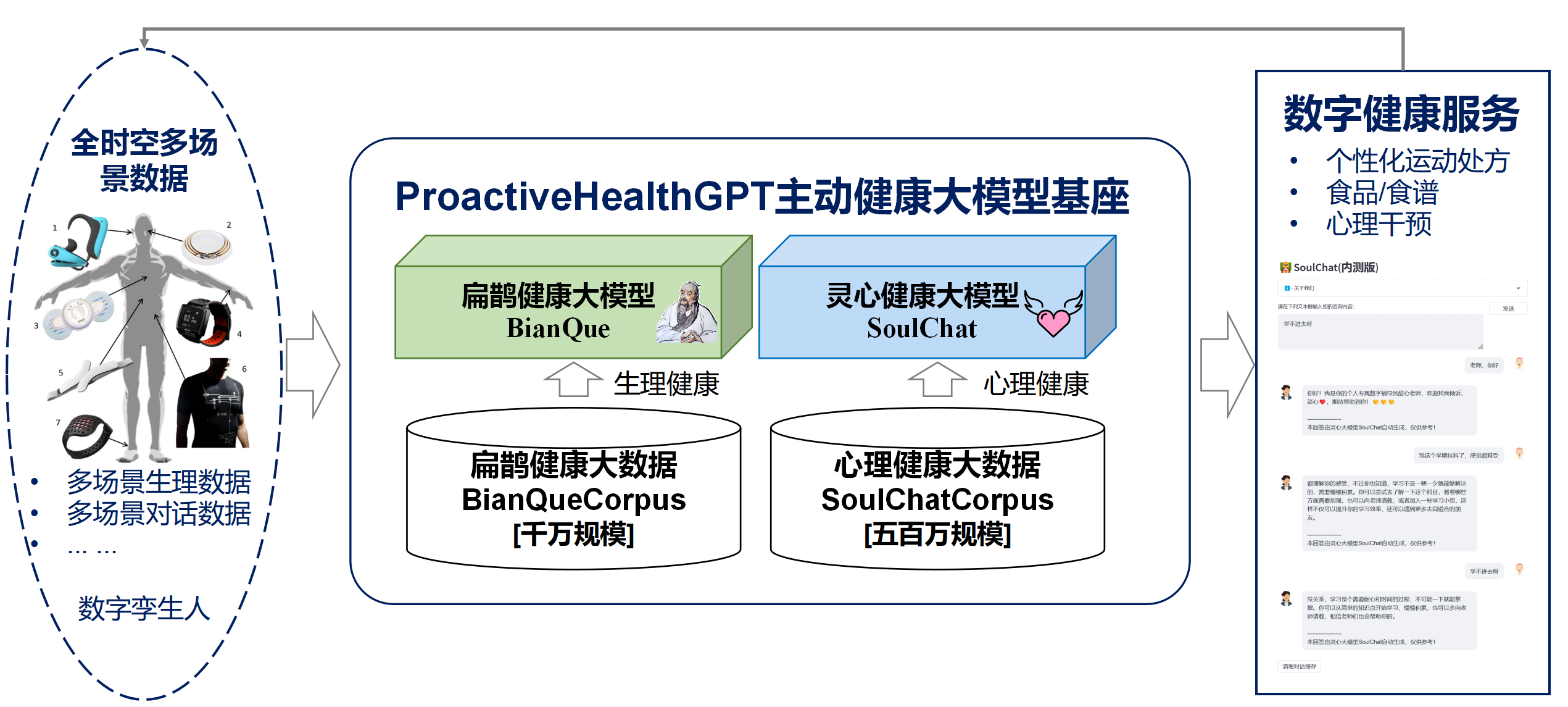

Based on the six characteristics of active health: initiative, prevention, accuracy, personalization, co-construction and sharing, and self-discipline, the School of Future Technology of South China University of Technology-Guangdong Provincial Key Laboratory of Digital Twins has open sourced the active health system of living space in the Chinese field. Model base ProactiveHealthGPT, including:

We hope that the living space active health large model base ProactiveHealthGPT can help the academic community accelerate the research and application of large models in active health fields such as chronic diseases and psychological counseling. This project is BianQue, a large model of living space health .

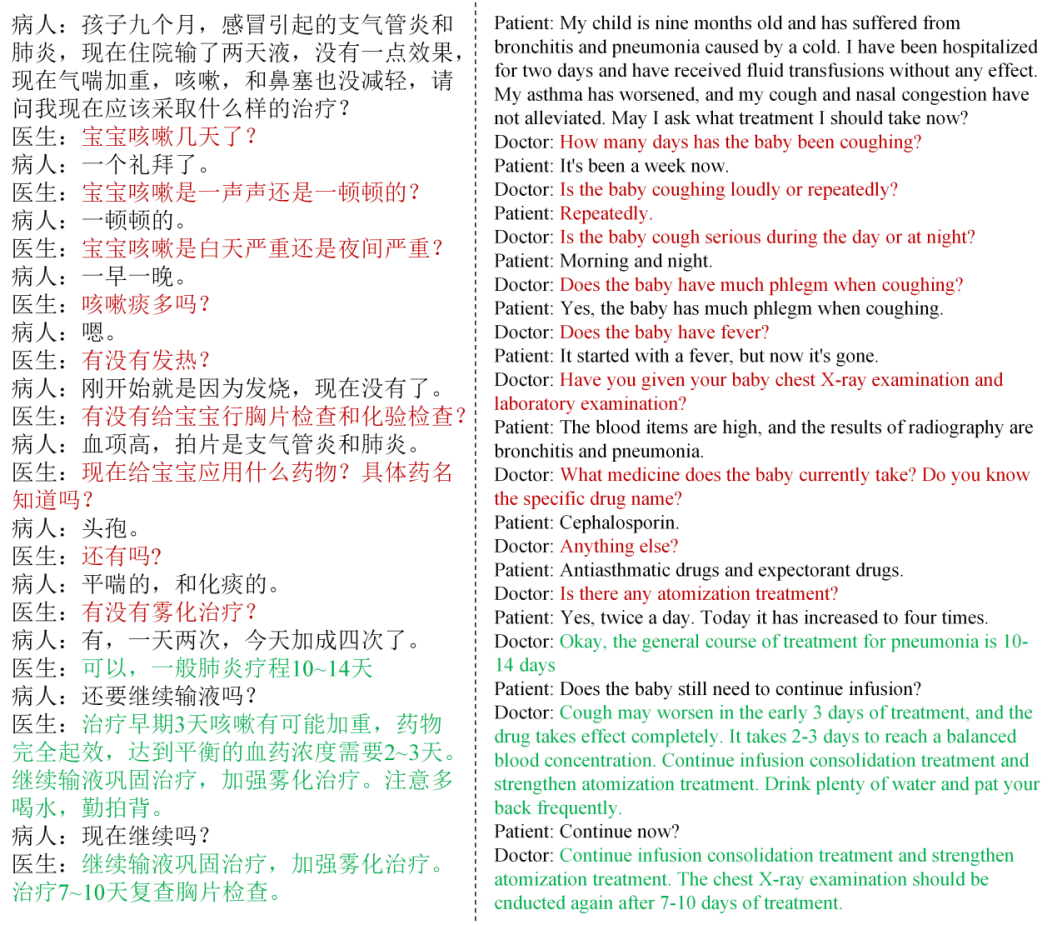

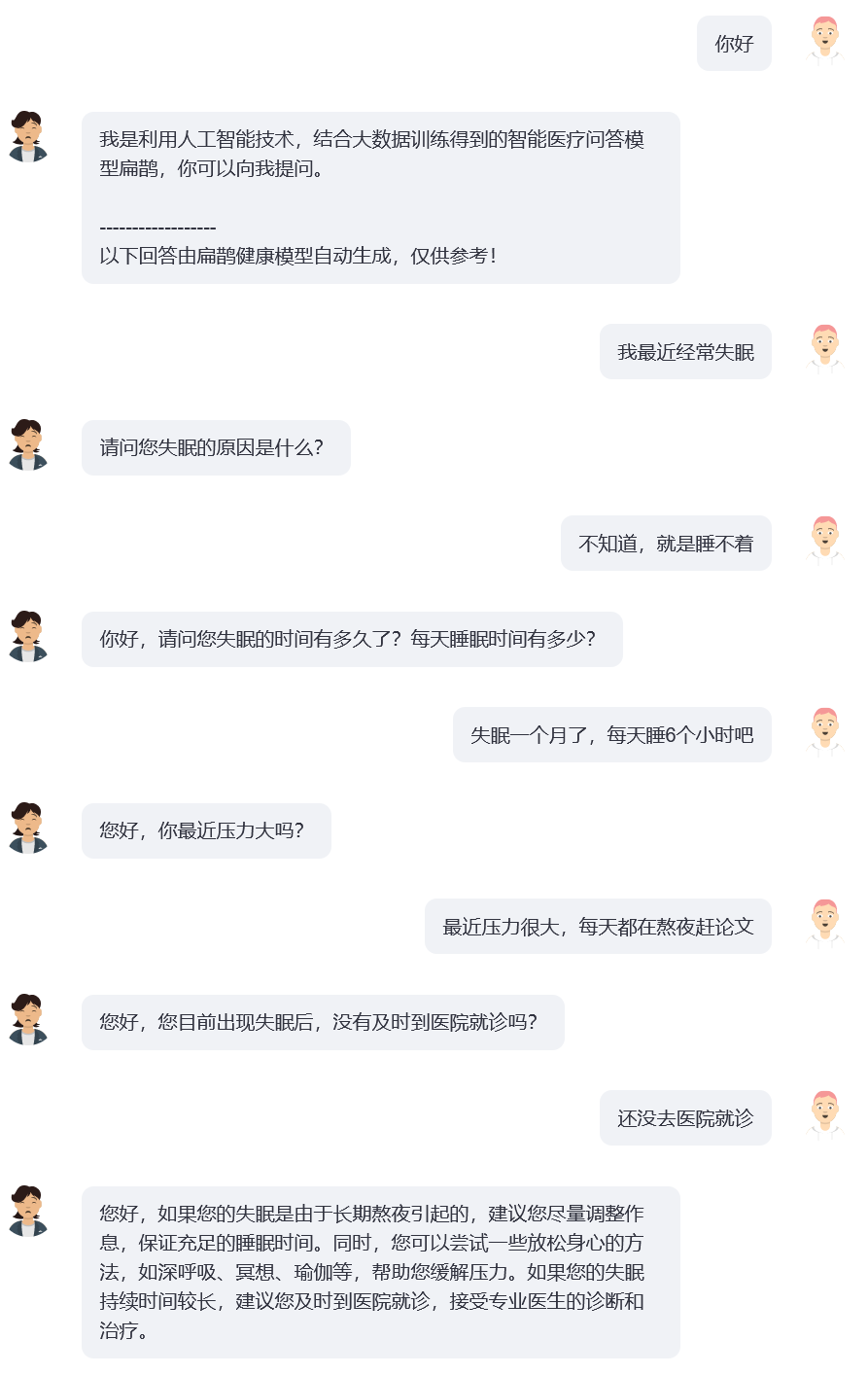

Through research, we found that in the health field, users usually do not clearly describe their problems in a round of interaction, and currently common open source medical question and answer models (such as: ChatDoctor, HuaTuo (formerly known as HuaTuo), DoctorGLM, MedicalGPT -zh) focuses on solving the problem of a single round of user descriptions, while ignoring the situation where "user descriptions may be insufficient". Even the currently popular ChatGPT will have similar problems: if the user does not force ChatGPT to adopt a question-and-answer format through text description, ChatGPT will also prefer the user's description and quickly provide suggestions and solutions it deems appropriate. However, actual conversations between doctors and users often involve "the doctor conducting multiple rounds of questioning based on the user's current description." And the doctor finally gives comprehensive suggestions based on the information provided by the user, as shown in the figure below. We define the process of the doctor's continuous questioning as a chain of questioning (CoQ, Chain of Questioning) . When the model is in the questioning chain stage, its next question is usually determined by the conversation context history.

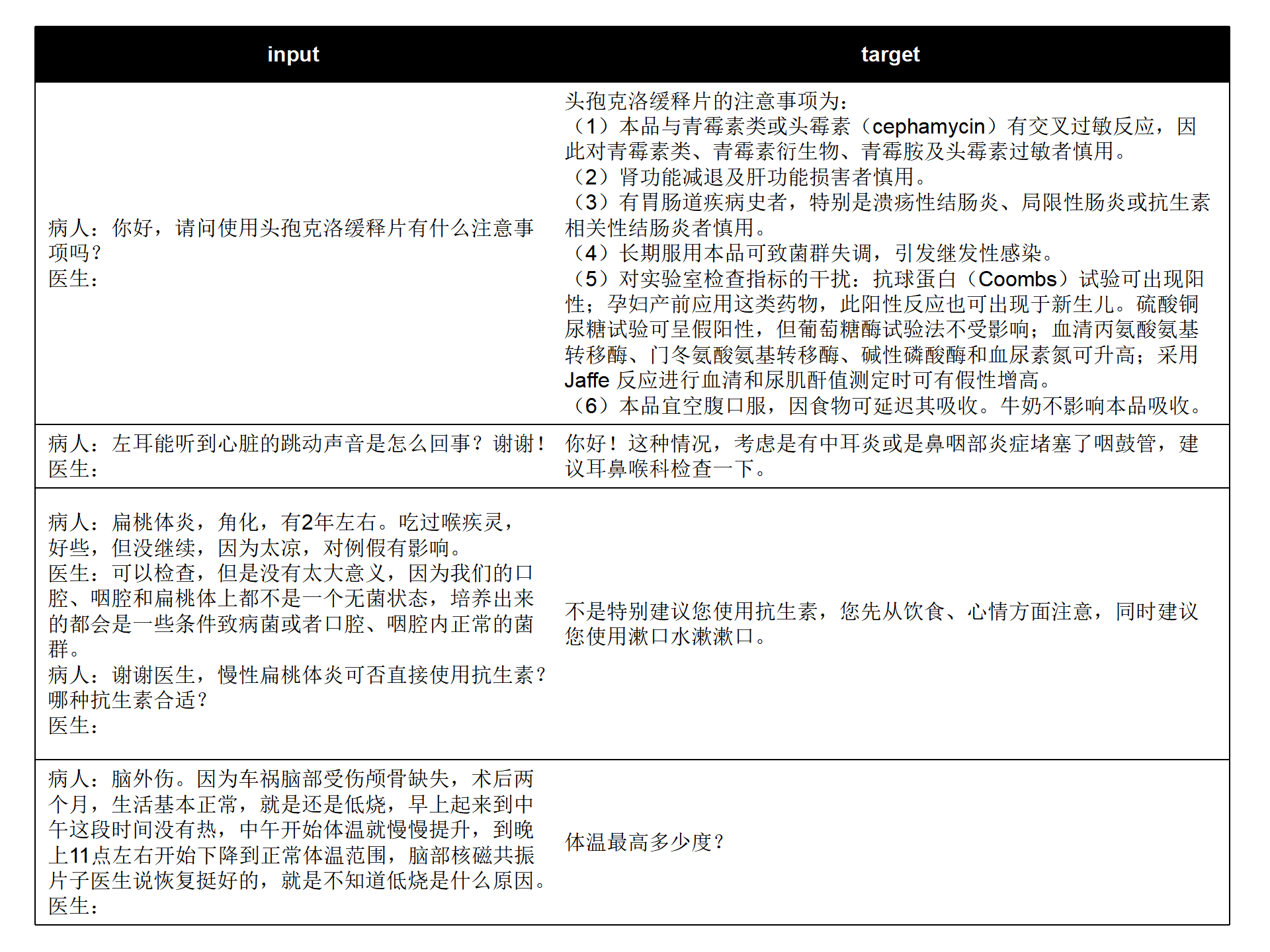

We combine the current open source Chinese medical question and answer data sets (MedDialog-CN, IMCS-V2, CHIP-MDCFNPC, MedDG, cMedQA2, Chinese-medical-dialogue-data) to analyze the single-round/multi-round characteristics and doctor inquiry characteristics. , combined with the long-term self-built living space health conversation big data of the laboratory, BianQueCorpus, a Bianque health big data with a scale of tens of millions, was constructed. The conversation data is unified into an instruction format in the form of "Patient: xxxnDoctor: xxxnPatient: xxxnDoctor:", as shown in the figure below.

input: "病人:六岁宝宝拉大便都是一个礼拜或者10天才一次正常吗,要去医院检查什么项目n医生:您好n病人:六岁宝宝拉大便都是一个礼拜或者10天才一次正常吗,要去医院检查什么项目n医生:宝宝之前大便什么样呢?多久一次呢n病人:一般都是一个礼拜,最近这几个月都是10多天n医生:大便干吗?n病人:每次10多天拉的很多n医生:"

target: "成形还是不成形呢?孩子吃饭怎么样呢?"

The training data contains a large amount of target text that is the content of the doctor's inquiry rather than direct advice, which will help improve the inquiry ability of the AI model.

cd ~

git clone https://github.com/scutcyr/BianQue.git cd BianQue

conda env create -n proactivehealthgpt_py38 --file proactivehealthgpt_py38.yml

conda activate proactivehealthgpt_py38

pip install cpm_kernels

pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116 cd BianQue

conda create -n proactivehealthgpt_py38 python=3.8

conda activate proactivehealthgpt_py38

pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116

pip install -r requirements.txt

pip install rouge_chinese nltk jieba datasets

# 以下安装为了运行demo

pip install streamlit

pip install streamlit_chat[Supplement] Configuring CUDA-11.6 under Windows: Download and install CUDA-11.6, download cudnn-8.4.0, unzip and copy the files to the path corresponding to CUDA-11.6, refer to: Using conda to install pytorch under win11-cuda11. 6-General installation ideas

Call the BianQue-2.0 model in Python:

import torch

from transformers import AutoModel , AutoTokenizer

# GPU设置

device = torch . device ( "cuda" if torch . cuda . is_available () else "cpu" )

# 加载模型与tokenizer

model_name_or_path = 'scutcyr/BianQue-2'

model = AutoModel . from_pretrained ( model_name_or_path , trust_remote_code = True ). half ()

model . to ( device )

tokenizer = AutoTokenizer . from_pretrained ( model_name_or_path , trust_remote_code = True )

# 单轮对话调用模型的chat函数

user_input = "我的宝宝发烧了,怎么办?"

input_text = "病人:" + user_input + " n医生:"

response , history = model . chat ( tokenizer , query = input_text , history = None , max_length = 2048 , num_beams = 1 , do_sample = True , top_p = 0.75 , temperature = 0.95 , logits_processor = None )

# 多轮对话调用模型的chat函数

# 注意:本项目使用"n病人:"和"n医生:"划分不同轮次的对话历史

# 注意:user_history比bot_history的长度多1

user_history = [ '你好' , '我最近失眠了' ]

bot_history = [ '我是利用人工智能技术,结合大数据训练得到的智能医疗问答模型扁鹊,你可以向我提问。' ]

# 拼接对话历史

context = " n " . join ([ f"病人: { user_history [ i ] } n医生: { bot_history [ i ] } " for i in range ( len ( bot_history ))])

input_text = context + " n病人:" + user_history [ - 1 ] + " n医生:"

response , history = model . chat ( tokenizer , query = input_text , history = None , max_length = 2048 , num_beams = 1 , do_sample = True , top_p = 0.75 , temperature = 0.95 , logits_processor = None )This project provides bianque_v2_app.py as an example of using the BianQue-2.0 model. You can start the service through the following command, and then access it through http://<your_ip>:9005.

streamlit run bianque_v2_app.py --server.port 9005In particular, in bianque_v2_app.py, you can modify the following code to replace the specified graphics card:

os . environ [ 'CUDA_VISIBLE_DEVICES' ] = '1' For Windows single graphics card users, you need to modify it to: os.environ['CUDA_VISIBLE_DEVICES'] = '0' , otherwise an error will be reported!

You can specify the model path to be a local path by changing the following code:

model_name_or_path = "scutcyr/BianQue-2"We also provide bianque_v1_app.py as a usage example of the BianQue-1.0 model, and bianque_v1_v2_app.py as a joint usage example of the BianQue-1.0 model and the BianQue-2.0 model.

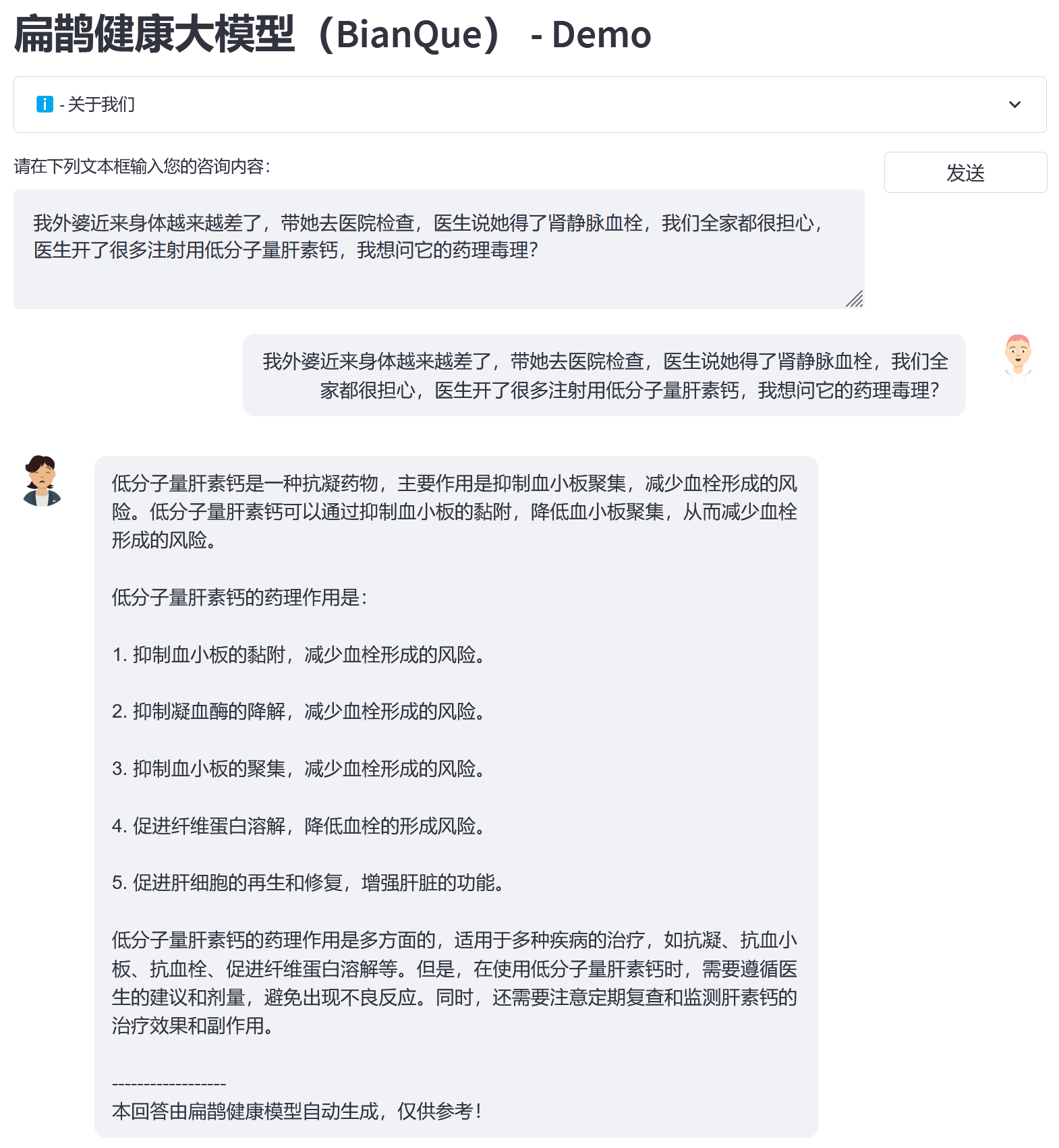

Based on the BianQue Health big data BianQueCorpus, we chose ChatGLM-6B as the initialization model, and obtained the new generation of BianQue [BianQue-2.0] after fine-tuning training of all parameters. Different from the Bianque-1.0 model, Bianque-2.0 has expanded data such as drug instruction instructions, medical encyclopedia knowledge instructions, and ChatGPT distillation instructions, strengthening the model's suggestion and knowledge query capabilities. Below are two test samples.

Use the following command to jointly use Bianque-2.0 and Bianque-1.0 to build active health services:

streamlit run bianque_v1_v2_app.py --server.port 9005The following is an application example: the first few rounds are the process of inquiry through the Bianque-1.0 model, and the last round of responses is the answer through the Bianque-2.0 model.

BianQue-1.0 (BianQue-1.0) is a large medical dialogue model that is fine-tuned through instructions and multiple rounds of inquiry dialogues. After research, we found that in the medical field, doctors often need to go through multiple rounds of inquiries before making decisions. This is not a simple "instruction-reply" model. When users consult a doctor, they often do not inform the doctor of the complete situation at the beginning, so the doctor needs to keep asking questions before he can finally make a diagnosis and give reasonable suggestions. Based on this, we built BianQue-1.0 (BianQue-1.0) , aiming to strengthen the interrogation capabilities of the AI system to simulate the process of doctor interrogation. We define this ability as the “asking” part of “looking, hearing, asking, and knowing”. Taking into account the current Chinese language model architecture, number of parameters, and required computing power, we used ClueAI/ChatYuan-large-v2 as the baseline model, and fine-tuned 1 epoch on 8 NVIDIA RTX 4090 graphics cards to obtain Bianque-1.0 ( BianQue-1.0) , the mixed data set of Chinese medical question and answer instructions and multi-round inquiry dialogues used for training contains more than 9 million samples, which took about 16 days to complete one epoch of training. We will plan to conduct multiple versions of model iterative research based on the "see, hear, ask, and feel" capabilities of the Bian Que model, combined with medical expert knowledge, multi-modal technology, and multi-physiological signal calculations. BianQue model welcomes your contributions! We encourage you to report issues, contribute PRs, and participate in discussions on the BianQue GitHub page. We look forward to cooperating with more universities, hospitals, research laboratories, companies, etc. to jointly carry out next-generation Bianque model research. For such requests (and other requests not appropriate to make on GitHub), please email directly to [email protected].

The four diagnostic methods of "looking, smelling, asking, and feeling" were invented by Bian Que. The "Four Diagnostic Methods" (inspection, smell, questioning, and palpation) are the basic methods of diagnosis in traditional Chinese medicine. Among them, the ability to "ask" can be modeled into the language model. We define the “asking” ability of the AI model as:

"Ask" ability : The AI model can conduct appropriate inquiries based on the information input by the patient and combined with medical knowledge, and give "appropriate diagnosis or advice" based on the patient's information obtained from further inquiries. The process of "asking" is usually a multi-round dialogue process.

Here are 2 simple examples:

Example 1

User: I feel completely exhausted recently. Bian Que-1.0: Is it because of tiredness or staying up late?

User: I have been staying up late this week to catch up on my paper. Bian Que-1.0: Well, staying up late can easily cause various discomforts, such as dizziness, fatigue, insomnia, dreaminess, etc. Get more rest. You can take oryzanol, vitamin B1 and Anshen Bu Nao Liquid to avoid stay up.

Example 2

User: I have been suffering from insomnia recently. Bianque-1.0: How long have you been suffering from insomnia?

User: It’s been two weeks Bianque-1.0: How is your sleep?

User: How many hours do you need to go to bed before you can sleep? Bian Que-1.0: You can take some medicines orally. User: Do you have any specific recommendations? Bian Que-1.0: I need to check first. User: What tests do I need?

Bian Que-1.0: Electrocardiogram

import os

from transformers import T5Tokenizer , T5ForConditionalGeneration , AutoTokenizer

import torch

device = torch . device ( "cuda" if torch . cuda . is_available () else "cpu" )

tokenizer = T5Tokenizer . from_pretrained ( "scutcyr/BianQue-1.0" )

model = T5ForConditionalGeneration . from_pretrained ( "scutcyr/BianQue-1.0" )

model = model . to ( device )

def preprocess ( text ):

text = text . replace ( " n " , " \ n" ). replace ( " t " , " \ t" )

return text

def postprocess ( text ):

return text . replace ( " \ n" , " n " ). replace ( " \ t" , " t " )

def answer ( user_history , bot_history , sample = True , top_p = 1 , temperature = 0.7 ):

'''sample:是否抽样。生成任务,可以设置为True;

top_p:0-1之间,生成的内容越多样

max_new_tokens=512 lost...'''

if len ( bot_history ) > 0 :

context = " n " . join ([ f"病人: { user_history [ i ] } n医生: { bot_history [ i ] } " for i in range ( len ( bot_history ))])

input_text = context + " n病人:" + user_history [ - 1 ] + " n医生:"

else :

input_text = "病人:" + user_history [ - 1 ] + " n医生:"

return "我是利用人工智能技术,结合大数据训练得到的智能医疗问答模型扁鹊,你可以向我提问。"

input_text = preprocess ( input_text )

print ( input_text )

encoding = tokenizer ( text = input_text , truncation = True , padding = True , max_length = 768 , return_tensors = "pt" ). to ( device )

if not sample :

out = model . generate ( ** encoding , return_dict_in_generate = True , output_scores = False , max_new_tokens = 512 , num_beams = 1 , length_penalty = 0.6 )

else :

out = model . generate ( ** encoding , return_dict_in_generate = True , output_scores = False , max_new_tokens = 512 , do_sample = True , top_p = top_p , temperature = temperature , no_repeat_ngram_size = 3 )

out_text = tokenizer . batch_decode ( out [ "sequences" ], skip_special_tokens = True )

print ( '医生: ' + postprocess ( out_text [ 0 ]))

return postprocess ( out_text [ 0 ])

answer_text = answer ( user_history = [ "你好!" ,

"我最近经常失眠" ,

"两周了" ,

"上床几小时才睡得着" ],

bot_history = [ "我是利用人工智能技术,结合大数据训练得到的智能医疗问答模型扁鹊,你可以向我提问。" ,

"失眠多久了?" ,

"睡眠怎么样?" ])conda env create -n bianque_py38 --file py38_conda_env.yml

conda activate bianque_py38

pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116 cd scripts

bash run_train_model_bianque.shBianQue-1.0 (BianQue-1.0) has only been trained for 1 epoch. Although the model has certain medical inquiry capabilities, it still has the following limitations:

**BianQue-2.0(BianQue-2.0)** uses the weights of the ChatGLM-6B model and needs to follow its MODEL_LICENSE. Therefore, this project can only be used for your non-commercial research purposes .

This project was initiated by the Guangdong Provincial Key Laboratory of Digital Twins, School of Future Technology, South China University of Technology. It is supported by the Information Network Engineering Research Center, School of Electronics and Information and other departments of South China University of Technology. It also thanks Guangdong Maternal and Child Health Hospital, Guangzhou Municipal Government Cooperating units include the Women and Children's Medical Center, the Third Affiliated Hospital of Sun Yat-sen University, and the Artificial Intelligence Research Institute of Hefei Comprehensive National Science Center.

At the same time, we would like to thank the following media or public accounts for reporting on this project (in no particular order):

Media coverage People's Daily, China.com, Guangming.com, TOM Technology, Future.com, Dazhong.com, China Development Report Network, China Daily Network, Xinhua News Network, China.com, Toutiao, Sohu, Tencent News, NetEase News, China Information Network , China Communication Network, China City Report Network, China City Network

Public Account Guangdong Laboratory Construction, Intelligent Voice New Youth, Deep Learning and NLP, AINLP

@misc { chen2023bianque ,

title = { BianQue: Balancing the Questioning and Suggestion Ability of Health LLMs with Multi-turn Health Conversations Polished by ChatGPT } ,

author = { Yirong Chen and Zhenyu Wang and Xiaofen Xing and huimin zheng and Zhipei Xu and Kai Fang and Junhong Wang and Sihang Li and Jieling Wu and Qi Liu and Xiangmin Xu } ,

year = { 2023 } ,

eprint = { 2310.15896 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CL }

}