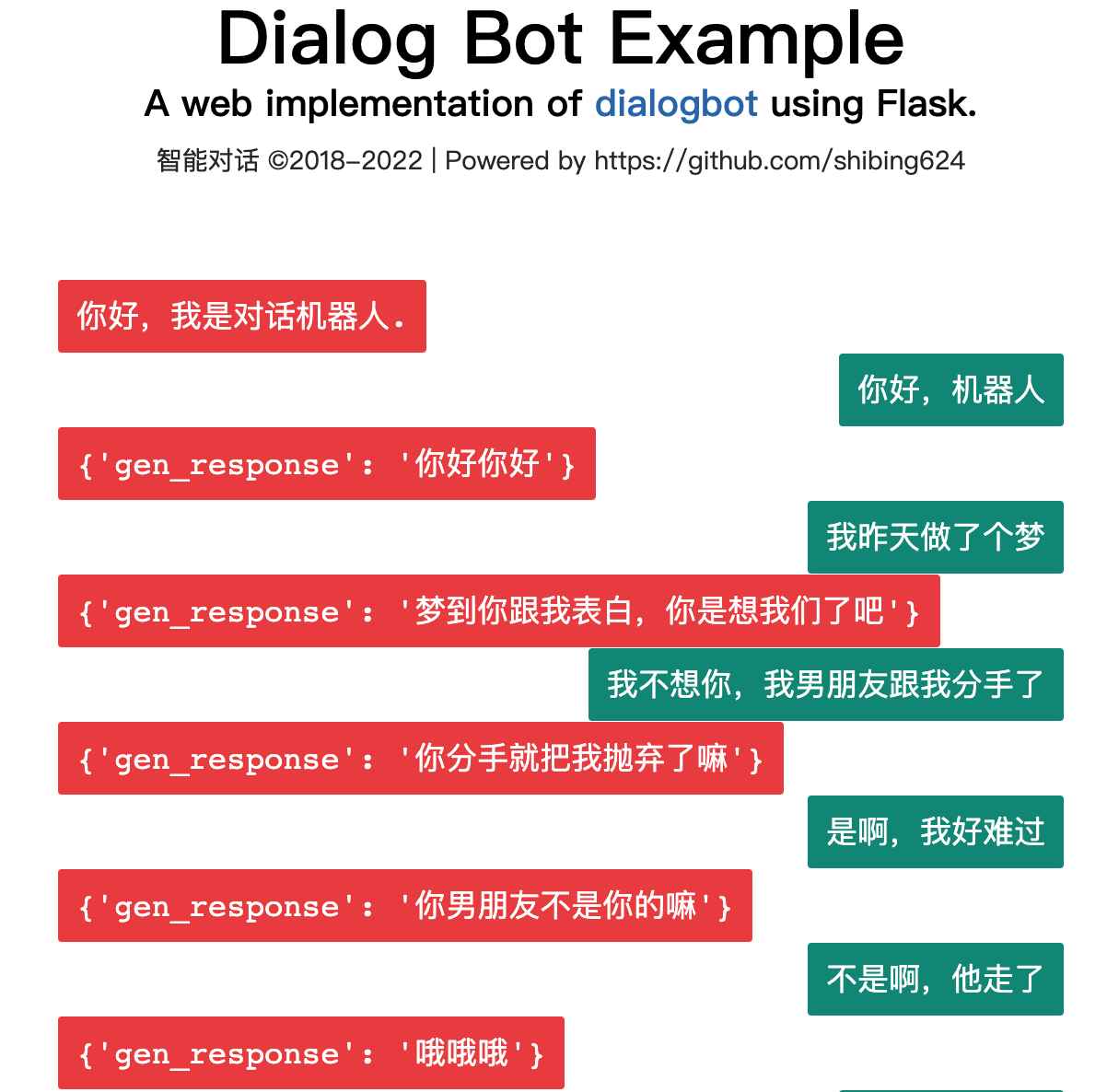

Dialogbot provides complete dialogue model technology. It combines a search-based dialogue model, a task-based dialogue model, and a generative dialogue model to output the optimal dialogue response.

Dialogbot implements a variety of chatbot solutions, including question-answering dialogue, task-based dialogue, and chitchat dialogue. It supports web retrieval Q&A, domain knowledge Q&A, task-guided Q&A, and chitchat Q&A out of the box.

Guide

Human-machine dialogue systems have long been an important research direction in AI. The Turing test, for example, uses dialogue to judge whether a machine has a high degree of intelligence.

How can one build a human-computer dialogue system, or chatbot?

The dialogue system has evolved through three generations:

Dialogue systems are divided into three categories:

The search-based model computes the similarity between the user's question and each question in the Q&A database, selects the most similar question, and returns its corresponding answer.
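To illustrate the retrieval step, here is a minimal sketch that scores stored questions with character-level cosine similarity and returns the answer paired with the best match. The similarity measure, the `char_cosine` and `retrieve_answer` helpers, and the toy Q&A database are all hypothetical. Dialogbot's own similarity methods may differ.

```python
import math
from collections import Counter
from typing import Dict

# Illustrative sketch, not Dialogbot's API: character-level cosine similarity.


def char_cosine(a: str, b: str) -> float:
    """Cosine similarity between the character-count vectors of two strings."""
    va, vb = Counter(a), Counter(b)
    dot = sum(va[ch] * vb[ch] for ch in va)
    norm = math.sqrt(sum(v * v for v in va.values())) * math.sqrt(sum(v * v for v in vb.values()))
    return dot / norm if norm else 0.0


def retrieve_answer(query: str, qa_db: Dict[str, str]) -> str:
    """Return the answer paired with the stored question most similar to `query`."""
    best_question = max(qa_db, key=lambda q: char_cosine(query, q))
    return qa_db[best_question]


if __name__ == "__main__":
    # Hypothetical toy Q&A database (question -> answer).
    qa_db = {
        "姚明身高多少": "226cm",        # "How tall is Yao Ming?"
        "北京是哪个国家的首都": "中国",  # "Beijing is the capital of which country?"
    }
    print(retrieve_answer("姚明多高呀?", qa_db))  # → 226cm
```

Character counts work without a word segmenter, which suits short Chinese questions. Embedding-based similarity would catch paraphrases that share few characters.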

Sentence similarity calculation includes the following methods:

Answers can also be retrieved from the summaries of Baidu and Bing search results.

Official Demo: https://www.mulanai.com/product/dialogbot/

The project requires transformers 4.4.2+, torch 1.6.0+, and Python 3.6+. Install it with:

```shell
pip3 install torch  # or: conda install pytorch
pip3 install -U dialogbot
```

or from source:

```shell
pip3 install torch  # or: conda install pytorch
git clone https://github.com/shibing624/dialogbot.git
cd dialogbot
python3 setup.py install
```

Example: examples/bot_demo.py

```python
from dialogbot import Bot

bot = Bot()
response = bot.answer('姚明多高呀?')  # "How tall is Yao Ming?"
print(response)
```

Output:

```text
query: "姚明多高呀?"
answer: "226cm"
```

Example: examples/taskbot_demo.py

A chitchat dialogue model trained on the GPT-2 generative model.

The model has been released on Hugging Face Models: shibing624/gpt2-dialogbot-base-chinese

Example: examples/genbot_demo.py

```python
from dialogbot import GPTBot

bot = GPTBot()
r = bot.answer('亲 你吃了吗?', use_history=False)  # "Hey, have you eaten?"
print('gpt2', r)
```

Output:

```text
query: "亲 吃了吗?"
answer: "吃了"
```

Create a data folder in the project root directory and save the raw training corpus there as train.txt. In train.txt, each utterance is on its own line, and conversations are separated by one blank line, as follows:

```text
真想找你一起去看电影
突然很想你
我也很想你

想看你的美照
亲我一口就给你看
我亲两口
讨厌人家拿小拳拳捶你胸口

今天好点了吗?
一天比一天严重
吃药不管用,去打一针。别拖着
```
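To check that a corpus file follows this layout, you can split it back into conversations. The sketch below does that. `parse_corpus` is a hypothetical helper, not part of Dialogbot, and it treats any blank or whitespace-only line as a conversation boundary.

```python
from typing import List

# Illustrative sketch, not Dialogbot's API.


def parse_corpus(text: str) -> List[List[str]]:
    """Split train.txt-style text into conversations (lists of utterances)."""
    conversations: List[List[str]] = []
    current: List[str] = []
    for line in text.splitlines():
        line = line.strip()
        if line:
            current.append(line)            # utterance belongs to the current conversation
        elif current:
            conversations.append(current)   # a blank line closes the conversation
            current = []
    if current:                             # the file may not end with a blank line
        conversations.append(current)
    return conversations


if __name__ == "__main__":
    sample = "真想找你一起去看电影\n突然很想你\n\n今天好点了吗?\n一天比一天严重\n"
    print(len(parse_corpus(sample)))  # → 2
```

To check a real file, read data/train.txt with `encoding="utf-8"` and pass its contents to `parse_corpus`.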

Run preprocess.py to tokenize the dialogue corpus in data/train.txt, then serialize the result and save it to data/train.pkl. The object serialized in train.pkl has type List[List]. It holds one list per conversation, recording the tokens that conversation contains.
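The sketch below makes the List[List] layout concrete by walking through the serialization step. It rests on two assumptions:

* Each conversation is flattened into one token-id list of the form `[CLS] u1 [SEP] u2 [SEP] ...`. This layout is common in GPT-2 chitchat training scripts but is not confirmed here.
* A toy character-level vocabulary stands in for the real tokenizer.

`build_vocab`, `encode_conversation`, and `save_dataset` are hypothetical names.

```python
import pickle
from typing import Dict, List

# Illustrative sketch, not preprocess.py: toy character vocabulary and an
# assumed [CLS] u1 [SEP] u2 [SEP] ... layout per conversation.
CLS, SEP = "[CLS]", "[SEP]"


def build_vocab(conversations: List[List[str]]) -> Dict[str, int]:
    """Assign an id to each special token and to every character in the corpus."""
    vocab = {CLS: 0, SEP: 1}
    for conv in conversations:
        for utterance in conv:
            for ch in utterance:
                vocab.setdefault(ch, len(vocab))  # new characters get the next free id
    return vocab


def encode_conversation(conv: List[str], vocab: Dict[str, int]) -> List[int]:
    """Flatten one conversation into a single list of token ids."""
    ids = [vocab[CLS]]
    for utterance in conv:
        ids.extend(vocab[ch] for ch in utterance)
        ids.append(vocab[SEP])  # mark the end of each utterance
    return ids


def save_dataset(dataset: List[List[int]], path: str) -> None:
    """Serialize the List[List[int]] dataset with pickle."""
    with open(path, "wb") as f:
        pickle.dump(dataset, f)


if __name__ == "__main__":
    convs = [["你好", "你好呀"], ["在吗", "在"]]
    vocab = build_vocab(convs)
    dataset = [encode_conversation(c, vocab) for c in convs]
    assert pickle.loads(pickle.dumps(dataset)) == dataset  # round-trips unchanged
    print(dataset)
```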

```shell
cd dialogbot/gpt/
python preprocess.py --train_path data/train.txt --save_path data/train.pkl
```

Run train.py to train the model autoregressively on the preprocessed data. The model is saved to the model folder in the project root directory.
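Autoregressive training teaches the model to predict each token from the tokens before it. The sketch below computes that objective, the mean next-token negative log-likelihood, with plain lists standing in for the model's predicted distributions. `autoregressive_nll` is a hypothetical name, and the sketch illustrates the loss only; it is not the code in train.py.

```python
import math
from typing import List, Sequence

# Illustrative sketch of the next-token objective, not train.py.


def autoregressive_nll(ids: Sequence[int], probs: Sequence[Sequence[float]]) -> float:
    """Mean negative log-likelihood of next-token prediction.

    probs[t] is the model's distribution over the vocabulary after reading
    ids[0..t]; it is scored against the next token ids[t + 1].
    """
    targets = ids[1:]
    if len(targets) == 0 or len(probs) < len(targets):
        raise ValueError("need at least two tokens and one distribution per predicted position")
    losses: List[float] = [-math.log(probs[t][target]) for t, target in enumerate(targets)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    ids = [0, 1, 2]
    probs = [
        [0.1, 0.9, 0.0],  # after reading id 0, the model gives id 1 probability 0.9
        [0.2, 0.3, 0.5],  # after reading ids 0..1, it gives id 2 probability 0.5
    ]
    print(autoregressive_nll(ids, probs))
```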

During training, you can enable early stopping with the patience parameter. With patience=n, training stops early if the model's loss on the validation set does not decrease for n consecutive epochs. With patience=0, early stopping is disabled.

Early stopping is disabled by default in the code because, in practice, the model obtained with early stopping does not necessarily produce better results.
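The patience rule can be sketched as follows. `early_stop_epoch` is a hypothetical helper that replays a list of per-epoch validation losses. It assumes "does not decrease" is measured against the best loss seen so far, which is the usual convention but an assumption here.

```python
from typing import Iterable, Optional

# Illustrative sketch of patience-based early stopping, not train.py.


def early_stop_epoch(val_losses: Iterable[float], patience: int) -> Optional[int]:
    """Return the 0-based epoch at which training would stop, or None.

    patience=n: stop once the validation loss has not improved on the best
    value so far for n consecutive epochs. patience=0 disables early stopping.
    """
    if patience == 0:
        return None
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss        # new best: reset the counter
            bad_epochs = 0
        else:
            bad_epochs += 1    # no improvement this epoch
            if bad_epochs >= patience:
                return epoch
    return None


if __name__ == "__main__":
    print(early_stop_epoch([3.0, 2.5, 2.6, 2.7, 2.4], patience=2))  # → 3
```

The example shows the trade-off the paragraph describes: with patience=2, training stops at epoch 3 and never reaches the better loss of 2.4 at epoch 4.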

```shell
python train.py --epochs 40 --batch_size 8 --device 0,1 --train_path data/train.pkl
```

For descriptions of the other training parameters, see the set_args() function in train.py.

Run interact.py to chat with the trained model. Enter q to end the conversation; the chat record is then saved to the sample.txt file.

```shell
python interact.py --no_cuda --model_dir path_to_your_model
```
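The loop below sketches the bookkeeping that this description implies:

* It alternates user and bot turns.
* It stops when the user enters q.
* It writes the record to sample.txt.

The following are all assumptions for illustration:

* the `chat` and `save_transcript` helpers,
* the `user:`/`chatbot:` line format,
* the stub reply function.

interact.py itself generates replies with the trained GPT-2 model.

```python
from typing import Callable, Iterable, List

# Illustrative sketch, not interact.py; `reply` stands in for the model.


def chat(user_inputs: Iterable[str], reply: Callable[[List[str]], str]) -> List[str]:
    """Run a chat session and return its transcript lines.

    `reply` receives the history so far (alternating user/bot utterances)
    and returns the bot's next utterance. Entering "q" ends the session.
    """
    history: List[str] = []
    transcript: List[str] = []
    for text in user_inputs:
        if text.strip() == "q":
            break
        history.append(text)
        response = reply(history)
        history.append(response)
        transcript.append(f"user:{text}")
        transcript.append(f"chatbot:{response}")
    return transcript


def save_transcript(lines: List[str], path: str = "sample.txt") -> None:
    """Write the chat record to a UTF-8 text file, one turn per line."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")


if __name__ == "__main__":
    echo = lambda history: "收到: " + history[-1]  # stub in place of the trained model
    print("\n".join(chat(["在干嘛", "q", "ignored"], echo)))
```

For an interactive session, `chat(iter(input, "q"), reply)` reads lines from standard input until the user types q.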

When running interact.py, you can tune the generated responses by adjusting parameters such as topk, topp, repetition_penalty, and max_history_len. For descriptions of the other parameters, see the set_args() function in interact.py. To generate on the GPU, omit the --no_cuda flag and select the GPU with --device gpu_id.
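The sketch below shows what these parameters commonly mean in GPT-2 decoding:

* repetition_penalty lowers the scores of tokens that have already been generated.
* topk keeps only the k most likely tokens.
* topp keeps the smallest set of tokens whose probability mass reaches p.
* max_history_len limits how many previous utterances are fed back as context.

The function names are hypothetical. The repetition penalty follows the CTRL-style rule (divide positive logits, multiply negative ones), and interact.py may implement these steps differently.

```python
import math
import random
from typing import Dict, List, Sequence

# Illustrative sketch of common decoding filters, not interact.py.


def filter_next_token_probs(
    logits: Sequence[float],
    generated: Sequence[int],
    top_k: int = 0,
    top_p: float = 1.0,
    repetition_penalty: float = 1.0,
) -> Dict[int, float]:
    """Map raw next-token logits to a filtered, renormalized {token_id: prob}."""
    scores = list(logits)
    # Repetition penalty (CTRL-style): for penalty > 1, lower the logits of
    # tokens that were already generated.
    for tok in set(generated):
        s = scores[tok]
        scores[tok] = s / repetition_penalty if s > 0 else s * repetition_penalty
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Top-k: keep only the k highest-scoring tokens (top_k=0 disables this).
    if top_k > 0:
        ranked = ranked[:top_k]
    # Softmax over the surviving tokens (subtract the max for stability).
    best = scores[ranked[0]]
    exps = {i: math.exp(scores[i] - best) for i in ranked}
    total = sum(exps.values())
    # Top-p (nucleus): keep the smallest prefix whose mass reaches top_p.
    kept: List[int] = []
    cumulative = 0.0
    for i in ranked:
        kept.append(i)
        cumulative += exps[i] / total
        if cumulative >= top_p:
            break
    mass = sum(exps[i] for i in kept)
    return {i: exps[i] / mass for i in kept}


def sample_token(dist: Dict[int, float], rng: random.Random) -> int:
    """Draw one token id from a {token_id: prob} distribution."""
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


def truncate_history(history: List[List[int]], max_history_len: int) -> List[List[int]]:
    """Keep only the most recent max_history_len utterances as context."""
    return history[-max_history_len:] if max_history_len > 0 else []
```

The filters run on plain Python lists, so they work without a GPU. A real decoder would apply the same steps to the logits tensor at every generation step.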

Sample chat:

```text
user:在干嘛
chatbot:睡觉
user:这么早就睡觉啦
chatbot:嗯啊你也早点睡啊
user:我再玩一会儿手机
chatbot:早点休息晚安
user:拜拜啦
chatbot:晚安晚安
user:多喝热水
chatbot:哈哈哈
user:多喝热水
chatbot:好的咯
user:在吗
chatbot:在
user:我不开心
chatbot:怎么啦
user:最近好累啊
chatbot:是挺累的
user:作业好多啊
chatbot:还好还好
user:我觉得我快要挂科了
chatbot:我也是啊,但是我觉得我快熬出头了
user:不说了,我还有好多作业没写
chatbot:我也是啊好多啊
user:那先这样,下次再聊,再见了
chatbot:好哒
```

| Chinese chat corpus | Download | Description |
|---|---|---|
| Common Chinese Chat | chinese_chatbot_corpus | Includes the Xiaohuangji, Douban, TV drama dialogue, Tieba forum reply, Weibo, PTT gossip, and Qingyun corpora, among others |
| 500,000 Chinese chat corpus | Baidu Netdisk (extraction code: 4g5e) or GoogleDrive | Raw corpus and preprocessed data for 500,000 multi-turn conversations |
| 1 million Chinese chat corpus | Baidu Netdisk (extraction code: s908) or GoogleDrive | Raw corpus and preprocessed data for 1 million multi-turn conversations |

A sample of the Chinese chat corpus:

```text
谢谢你所做的一切
你开心就好
开心
嗯因为你的心里只有学习
某某某,还有你
这个某某某用的好

你们宿舍都是这么厉害的人吗
眼睛特别搞笑这土也不好捏但就是觉得挺可爱
特别可爱啊

今天好点了吗?
一天比一天严重
吃药不管用,去打一针。别拖着
```

| Model | Download | Description |
|---|---|---|
| model_epoch40_50w | shibing624/gpt2-dialogbot-base-chinese, Baidu Cloud Disk (extraction code: taqh), or GoogleDrive | Trained for 40 epochs on 500,000 multi-turn conversations; the loss dropped to about 2.0 |
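A rough reading of that loss figure: assume it is the mean per-token cross-entropy in natural-log units, which is the default for PyTorch's cross-entropy loss. It then corresponds to a perplexity of about 7.4. On average, the model is about as uncertain as a uniform choice among roughly seven tokens at each step:

$$
\mathrm{PPL} = \exp(\mathcal{L}) \approx e^{2.0} \approx 7.39
$$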

If you use Dialogbot in your research, please cite it in the following format:

```bibtex
@misc{dialogbot,
  title={dialogbot: Dialogue Model Technology Tool},
  author={Xu Ming},
  year={2021},
  howpublished={\url{https://github.com/shibing624/dialogbot}},
}
```

This project is licensed under the Apache License 2.0 and is free for commercial use. Please include a link to dialogbot and the license agreement in your product description.

The project code is still rough. Improvements to the code are welcome; please submit them back to this project. Before submitting, please note the following two points:

1. Add the corresponding unit tests under `tests`.
2. Run `python -m pytest` to execute all unit tests and make sure they all pass.

You can then submit a PR.