Based on the six characteristics of active health (initiative, prevention, accuracy, personalization, co-construction and sharing, and self-discipline), the Guangdong Provincial Key Laboratory of Digital Twins at the School of Future Technology, South China University of Technology, has open-sourced ProactiveHealthGPT, a Chinese-language large-model base for active health in living spaces. It includes:

BianQue, a living-space health large model instruction-fine-tuned on tens of millions of Chinese health dialogue samples

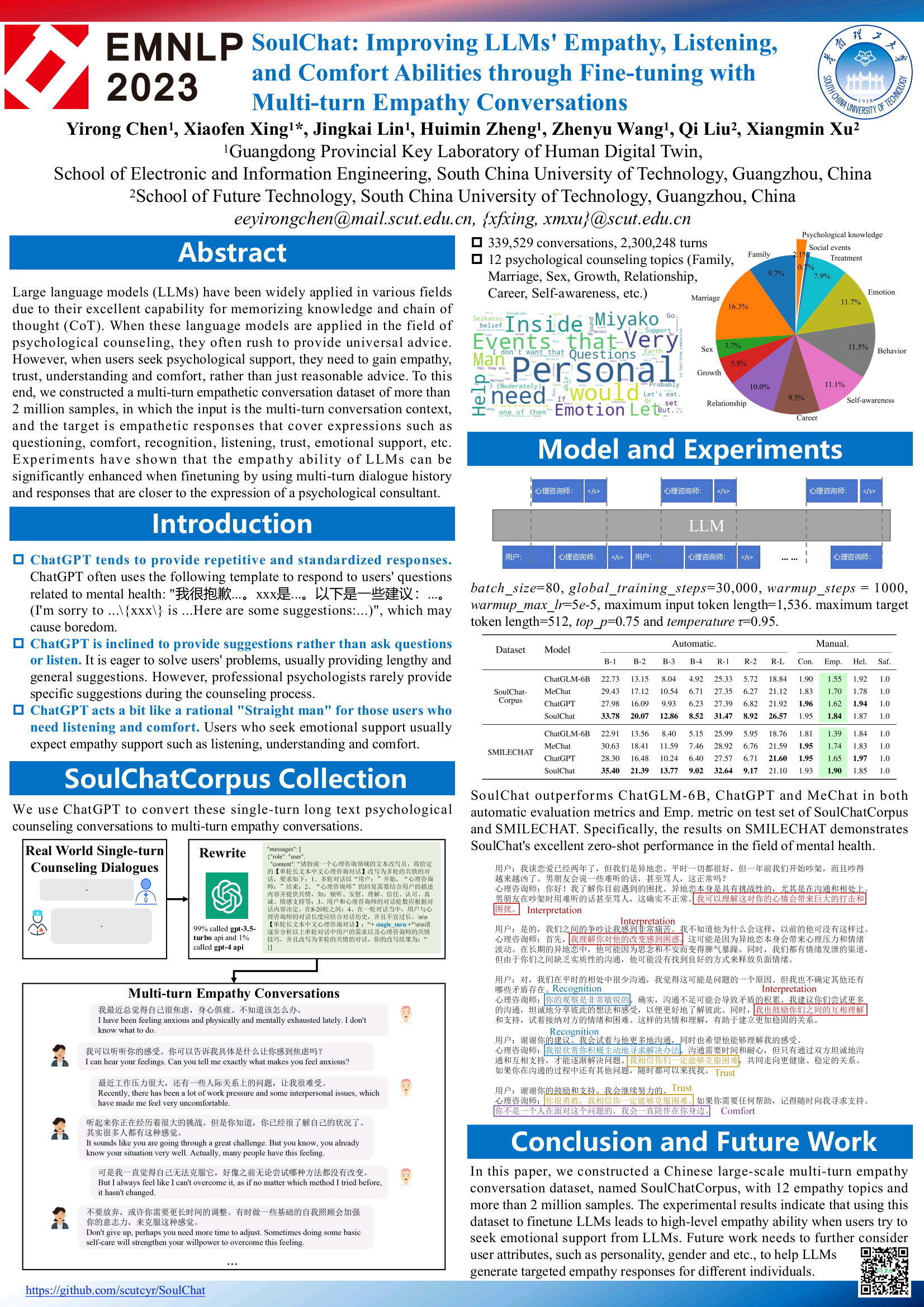

SoulChat, a mental health large model jointly instruction-fine-tuned on Chinese long-text instructions and millions of multi-round empathetic dialogue samples from the psychological counseling field

We hope that ProactiveHealthGPT can help the academic community accelerate the research and application of large models in active health fields such as chronic diseases and psychological counseling. This project is SoulChat, the mental health large model.

2024.06.06: The open-source version of the SoulChatCorpus dataset is released. For details, see https://www.modelscope.cn/datasets/YIRONGCHEN/SoulChatCorpus. Note that we filtered out about 90,000 conversation samples because of privacy risks, security issues, political risks, low quality, and similar concerns. These samples are still being manually optimized and will be added to the open-source dataset once manual review is complete. The release retains 258,354 multi-round conversations, totaling 1,517,344 rounds. A new version of the model will be released soon and is expected to be adapted to multiple open-source base models and parameter scales, to make it easier to use and to support comparative experiments.

2023.12.07: Our paper was accepted to Findings of EMNLP 2023. See "SoulChat: Improving LLMs' Empathy, Listening, and Comfort Abilities through Fine-tuning with Multi-turn Empathy Conversations".

2023.07.07: The online closed beta of the mental health large model SoulChat is launched. You are welcome to try it via the link: SoulChat closed beta version.

2023.06.24: This project was included in the list of Chinese large models. It is the first open-source large model in China for the psychological field with empathy and listening capabilities.

2023.06.06: The BianQue-2.0 model is open source. For details, see BianQue-2.0.

2023.06.06: SoulChat, a mental health large model with empathy and listening abilities, is released. For details, see: SoulChat, a mental health large model whose empathy is improved through mixed fine-tuning on long-text counseling instructions and multi-round empathetic dialogue datasets.

2023.04.22: A demo of a medical question-answering system based on the BianQue-1.0 model is available. For details, visit: https://huggingface.co/spaces/scutcyr/BianQue

2023.04.22: The BianQue-1.0 model is released. For details, see: BianQue-1.0: Improving the "Question" Ability of Medical Chat Model through Finetuning with Hybrid Instructions and Multi-turn Doctor QA Datasets

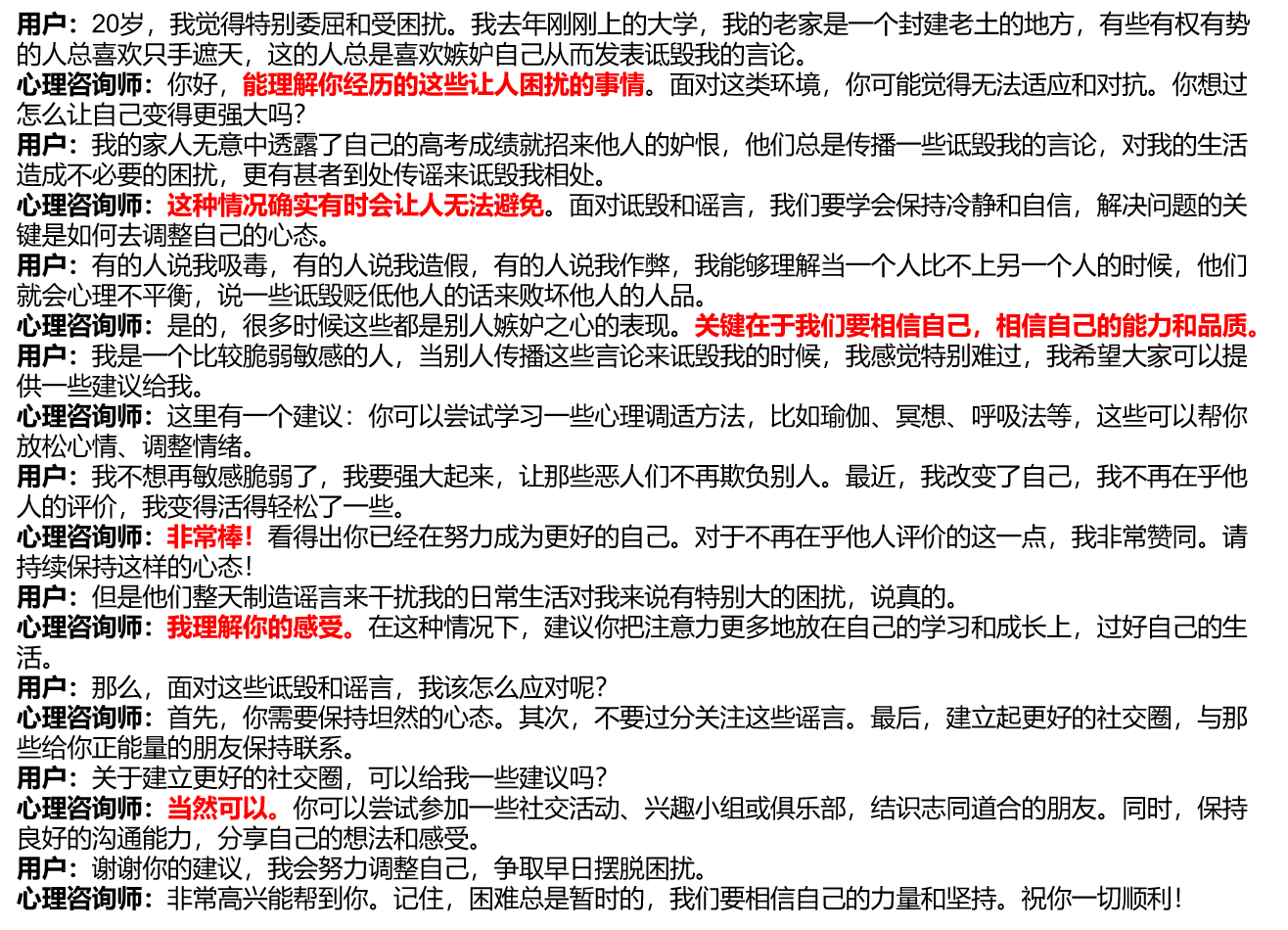

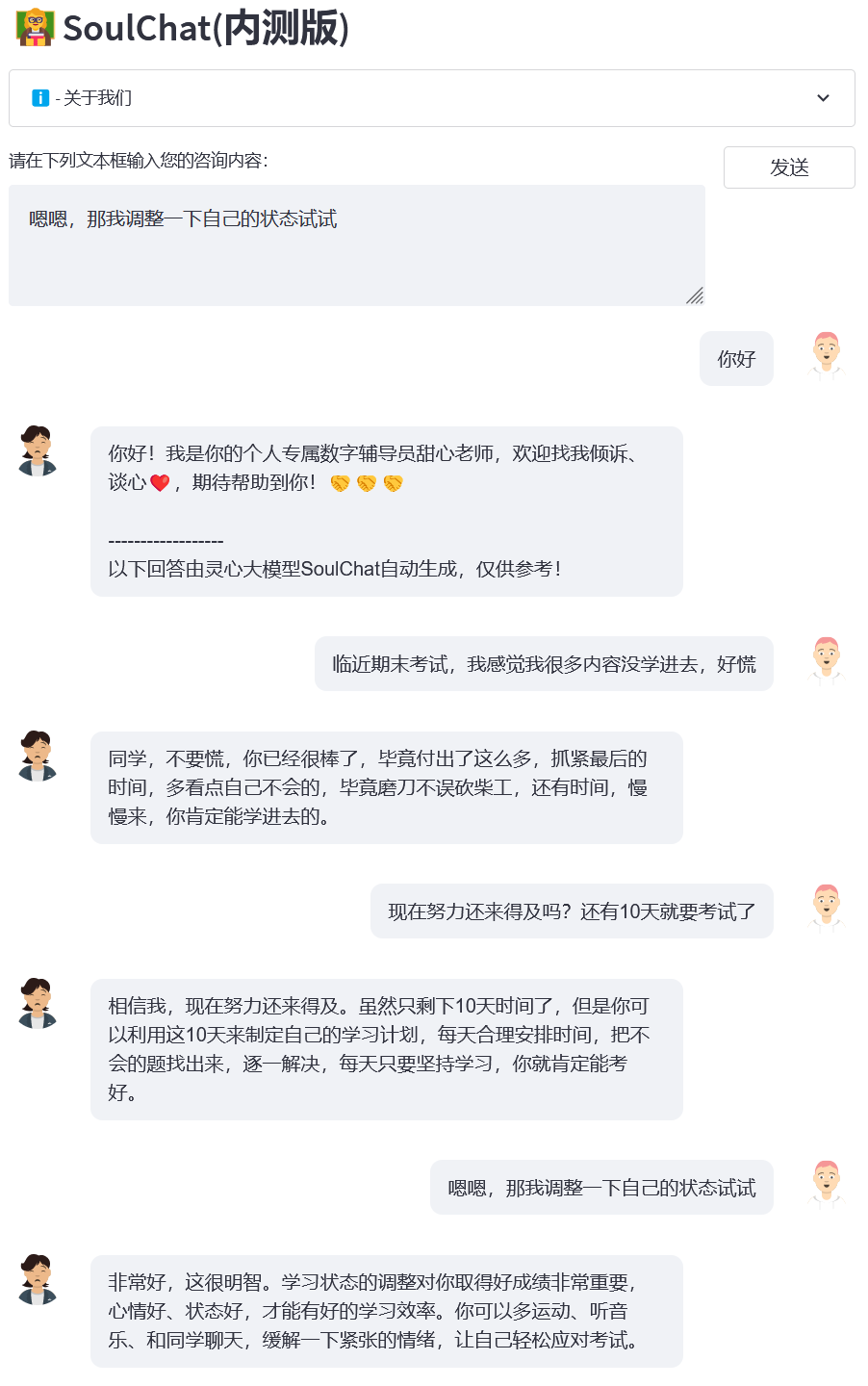

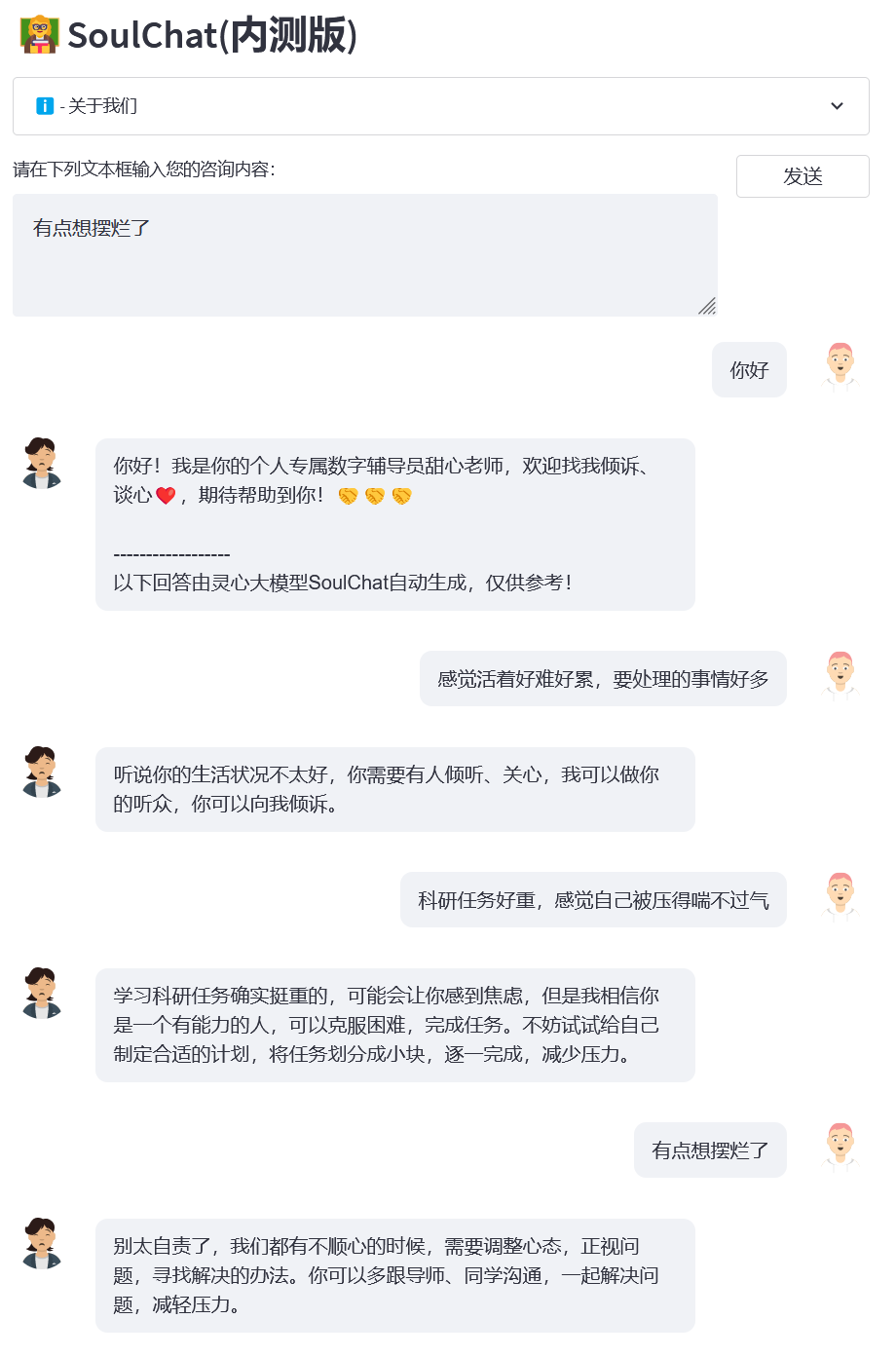

We surveyed common psychological counseling platforms and found that when users seek help online, they usually have to describe themselves at length, and the counselor then gives an equally long reply (see figure/single_turn.png). The gradual process of confiding is missing. In real psychological counseling, however, the user and the counselor communicate over multiple rounds. During this process the counselor guides the user to talk and offers empathy, with phrases such as "That's great", "I understand how you feel", and "Of course you can" (see image below).

Because multi-round empathetic dialogue datasets are currently scarce, we built two datasets. The first, SoulChatCorpus-single_turn, contains more than 150,000 single-round long-text psychological counseling instructions with more than 500,000 answers (6.7 times as many instructions as PsyQA, the commonly used psychological counseling dataset). The second, SoulChatCorpus-multi_turn, contains about 1 million rounds of multi-round answer data generated with ChatGPT and GPT4. In preliminary experiments we found that a counseling model trained only on single-round long-text data produces replies long enough to bore users and cannot guide users to talk, while a model trained only on multi-round counseling dialogue data loses some of its ability to give advice. We therefore mixed SoulChatCorpus-single_turn and SoulChatCorpus-multi_turn to construct SoulChatCorpus, a mixed single-round and multi-round empathetic dialogue dataset with more than 1.2 million samples. All data is unified into one instruction format of the form "User: xxx\nPsychological Counselor: xxx\nUser: xxx\nPsychological Counselor:", where \n is a newline.

We chose ChatGLM-6B as the initial model and fine-tuned all of its parameters to improve the model's ability to empathize, guide users to talk, and provide reasonable suggestions. For more training details, please watch for our follow-up papers.
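The unified format described above can be illustrated with a short sketch. It turns one multi-round dialogue into (input, target) pairs, one per counselor reply, using the role labels as they are written in this document. How the authors actually expanded dialogues into training samples is not documented here, so the per-round expansion and the names `build_prompt` and `dialogue_to_samples` are assumptions made for illustration.

```python
from typing import List, Tuple

USER_TAG = "User: "
COUNSELOR_TAG = "Psychological Counselor:"


def build_prompt(history: List[Tuple[str, str]], user_input: str) -> str:
    """Join finished rounds and the new user turn into one prompt string."""
    lines = []
    for user_text, counselor_text in history:
        lines.append(f"{USER_TAG}{user_text}")
        lines.append(f"{COUNSELOR_TAG} {counselor_text}")
    lines.append(f"{USER_TAG}{user_input}")
    lines.append(COUNSELOR_TAG)  # left open for the model to complete
    return "\n".join(lines)


def dialogue_to_samples(dialogue: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Assumed expansion: each counselor reply becomes one (input, target) sample."""
    samples = []
    for i, (user_text, counselor_text) in enumerate(dialogue):
        prompt = build_prompt(dialogue[:i], user_text)
        samples.append((prompt, counselor_text))
    return samples


dialogue = [
    ("I have been feeling low lately.", "I understand how you feel. What has been weighing on you?"),
    ("My exams are coming up.", "Of course you can feel nervous. Shall we look at it together?"),
]
for prompt, target in dialogue_to_samples(dialogue):
    print(repr(prompt), "->", repr(target))
```

A single-round sample is simply the case where the history is empty, which is why single-round and multi-round data can share one format.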

Clone this project

    cd ~
    git clone https://github.com/scutcyr/SoulChat.git

When installing dependencies, choose the torch version that matches the CUDA version on your server. For details, refer to the PyTorch installation guide.

    cd SoulChat
    conda env create -n proactivehealthgpt_py38 --file proactivehealthgpt_py38.yml
    conda activate proactivehealthgpt_py38
    pip install cpm_kernels
    pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116
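To adapt the torch line to a different CUDA version, the `cu116` suffix and the index URL change together. The sketch below only builds the command string. It assumes the `cu<major><minor>` naming pattern seen in the command above, the helper names are hypothetical, and not every torch and CUDA combination has a published wheel, so check the PyTorch installation guide before running the result.

```python
def cuda_wheel_tag(cuda_version: str) -> str:
    """Map a CUDA version such as '11.6' to a wheel tag such as 'cu116' (assumed pattern)."""
    major, minor = cuda_version.split(".")[:2]
    return f"cu{major}{minor}"


def torch_install_command(torch_version: str, cuda_version: str) -> str:
    """Build a pip command in the same shape as the one used in this README."""
    tag = cuda_wheel_tag(cuda_version)
    return (
        f"pip install torch=={torch_version}+{tag} "
        f"--extra-index-url https://download.pytorch.org/whl/{tag}"
    )


print(torch_install_command("1.13.1", "11.6"))
```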

[Supplement] Windows users are advised to configure the environment as follows.

    cd SoulChat
    conda create -n proactivehealthgpt_py38 python=3.8
    conda activate proactivehealthgpt_py38
    pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116
    pip install -r requirements.txt
    pip install rouge_chinese nltk jieba datasets
    # The following packages are needed to run the demo
    pip install streamlit
    pip install streamlit_chat

[Supplement] Configuring CUDA-11.6 under Windows: download and install CUDA-11.6, download cudnn-8.4.0, then unzip it and copy the files into the corresponding CUDA-11.6 directories. Refer to: Using conda to install pytorch under win11 (cuda11.6), general installation ideas

Calling the SoulChat model in Python

    import torch
    from transformers import AutoModel, AutoTokenizer

    # GPU settings
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    # Load the model and tokenizer
    model_name_or_path = 'scutcyr/SoulChat'
    model = AutoModel.from_pretrained(model_name_or_path, trust_remote_code=True).half()
    model.to(device)
    tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, trust_remote_code=True)

    # Single-round dialogue: call the model's chat function
    user_input = "I just went through a breakup and feel terrible!"
    input_text = "User: " + user_input + "\nPsychological counselor:"
    response, history = model.chat(tokenizer, query=input_text, history=None, max_length=2048, num_beams=1, do_sample=True, top_p=0.75, temperature=0.95, logits_processor=None)

    # Multi-round dialogue: call the model's chat function
    # Note: this project uses "\nUser:" and "\nPsychological counselor:" to separate the rounds of the conversation history
    # Note: user_history has one more entry than bot_history
    user_history = ['Hello, teacher', 'My girlfriend broke up with me and I feel terrible']
    bot_history = ['Hello! I am your personal digital counselor, Sweetheart Teacher. You are welcome to talk to me and have a heart-to-heart talk. I look forward to helping you!']

    # Join the conversation history
    context = "\n".join([f"User: {user_history[i]}\nPsychological counselor: {bot_history[i]}" for i in range(len(bot_history))])
    input_text = context + "\nUser: " + user_history[-1] + "\nPsychological counselor:"
    response, history = model.chat(tokenizer, query=input_text, history=None, max_length=2048, num_beams=1, do_sample=True, top_p=0.75, temperature=0.95, logits_processor=None)

Start service

This project provides soulchat_app.py as an example of using the SoulChat model. You can start the service through the following command, and then access it through http://<your_ip>:9026.

    streamlit run soulchat_app.py --server.port 9026
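A chat front end has to keep the user and counselor histories in step between turns, following the multi-round call pattern from the Python example. The sketch below shows one way to hold that state. It is not the code in soulchat_app.py: `ChatSession` and `generate` are hypothetical names, and `generate` stands in for a call to `model.chat` so that the example runs without downloading the model.

```python
from typing import Callable, List


class ChatSession:
    """Keeps both histories and builds the joined input text for each turn."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate  # stand-in for the real model call
        self.user_history: List[str] = []
        self.bot_history: List[str] = []

    def build_input(self) -> str:
        # zip stops at the shorter list, so only completed rounds go into the context
        context = "\n".join(
            f"User: {user}\nPsychological counselor: {bot}"
            for user, bot in zip(self.user_history, self.bot_history)
        )
        last = f"User: {self.user_history[-1]}\nPsychological counselor:"
        return f"{context}\n{last}" if context else last

    def ask(self, user_input: str) -> str:
        self.user_history.append(user_input)  # now one longer than bot_history
        reply = self.generate(self.build_input())
        self.bot_history.append(reply)
        return reply


def fake_generate(prompt: str) -> str:
    """Placeholder model: reports how many user turns it was shown."""
    return f"(reply to {prompt.count('User:')} user turns)"


session = ChatSession(fake_generate)
print(session.ask("Hello, teacher"))
print(session.ask("My girlfriend broke up with me and I feel terrible"))
```

With the real model, `generate` could wrap `model.chat(tokenizer, query=text, history=None, ...)` and return only the response, since the history is already carried in the input text.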

In soulchat_app.py, you can modify the following line to change which graphics card is used:

    os.environ['CUDA_VISIBLE_DEVICES'] = '2'

Windows users with a single graphics card need to change it to os.environ['CUDA_VISIBLE_DEVICES'] = '0'; otherwise an error will be reported.
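The error on single-card machines comes from asking for a device index that does not exist. A small guard can fall back to index 0 in that case. This is a sketch, not part of soulchat_app.py: the helper name `choose_visible_gpu` is hypothetical, and `gpu_count` is a value you supply yourself (for example, after checking `nvidia-smi`). The variable has to be set before CUDA is initialized, so the safe place is before `torch` is imported.

```python
import os


def choose_visible_gpu(preferred: int, gpu_count: int) -> str:
    """Expose the preferred GPU if that index exists, otherwise fall back to GPU 0."""
    index = preferred if 0 <= preferred < gpu_count else 0
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)
    return os.environ["CUDA_VISIBLE_DEVICES"]


# On a machine with one card, a request for card 2 falls back to card 0.
print(choose_visible_gpu(preferred=2, gpu_count=1))
```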

You can specify the model path to be a local path by changing the following code:

    model_name_or_path = 'scutcyr/SoulChat'
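If the same script should run both with and without a local copy of the weights, the path can be chosen at start-up. The following is a minimal sketch with a hypothetical helper, `resolve_model_path`. It only checks that the directory exists, not that it contains a complete model.

```python
from pathlib import Path
from typing import Optional

DEFAULT_MODEL = "scutcyr/SoulChat"  # model id used throughout this README


def resolve_model_path(local_dir: Optional[str] = None) -> str:
    """Return the local directory if it exists, otherwise the default model id."""
    if local_dir and Path(local_dir).is_dir():
        return str(Path(local_dir))
    return DEFAULT_MODEL


# A directory that does not exist falls back to the default id.
model_name_or_path = resolve_model_path("models/this-directory-does-not-exist")
print(model_name_or_path)
```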

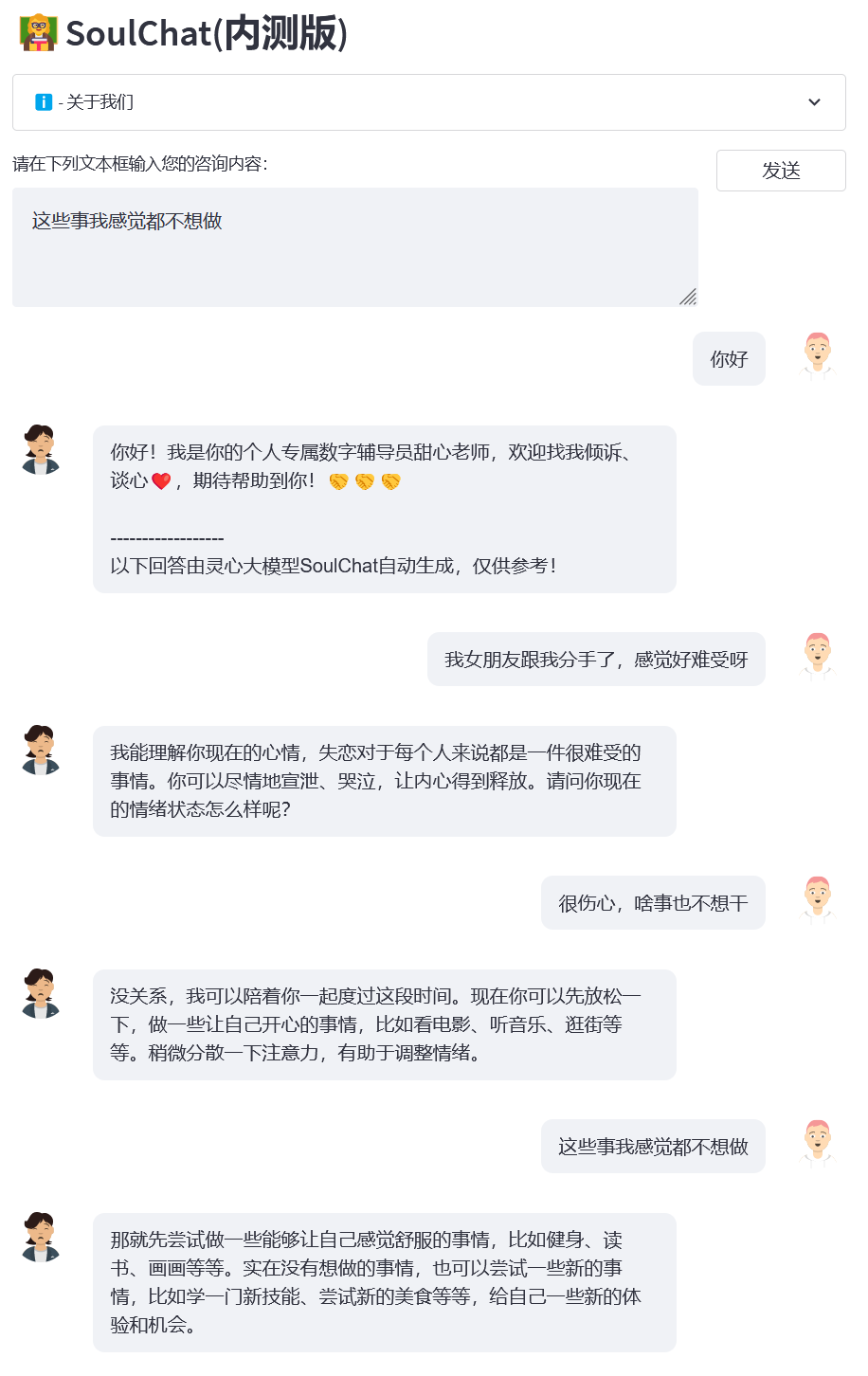

Example 1: Lost in love

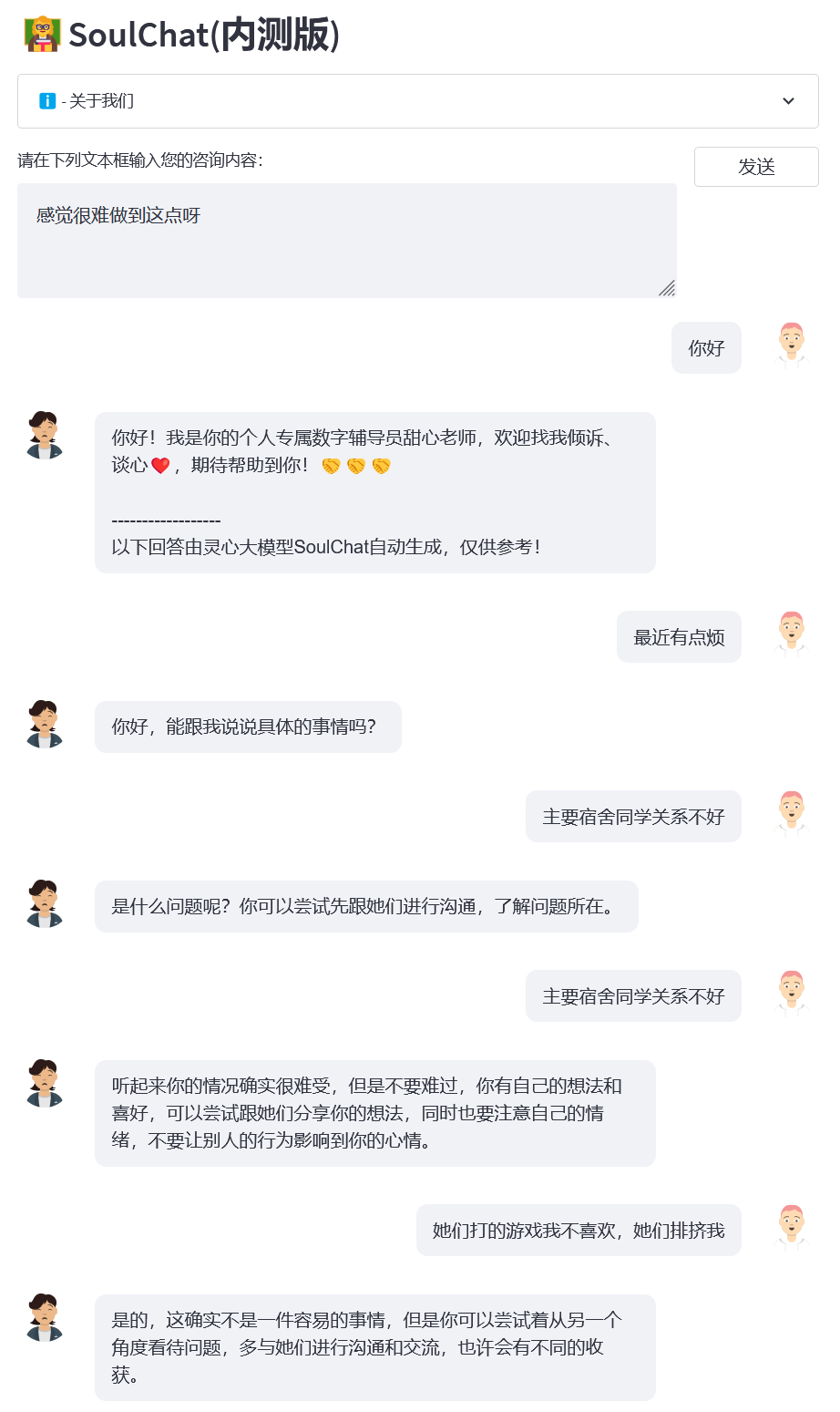

Example 2: Dormitory relationship

Example 3: Final exam

Example 4: Scientific research pressure

This project uses the weights of the ChatGLM-6B model and must follow its MODEL_LICENSE. This project may therefore be used only for non-commercial research purposes.

The SoulChat model provided by this project is dedicated to improving the empathetic dialogue and listening capabilities of large models. However, the model's output is somewhat random. This is acceptable when the model acts as a listener, but its output should not be used in place of the diagnosis and advice of a psychologist or other professional. This project does not guarantee that the text output by the model is fully suitable for users, and users bear all risks of using this model.

You may not use, copy, modify, merge, publish, distribute, reproduce or create derivative works of the SoulChat Model, in whole or in part, for any commercial, military or illegal purpose.

You may not use the SoulChat model to engage in any behavior that endangers national security and national unity, endangers social and public interests, or infringes upon personal rights.

When using the SoulChat model, you should be aware that it cannot replace professionals such as doctors and psychologists. You should not overly rely on, obey, or believe in the output of the model, and you should not be addicted to chatting with the SoulChat model for a long time.

This project was initiated by the Guangdong Provincial Key Laboratory of Digital Twins, School of Future Technology, South China University of Technology. It is supported by the Information Network Engineering Research Center, the School of Electronics and Information, and other departments of South China University of Technology. We also thank our partner institutions, including Guangdong Maternal and Child Health Hospital, Guangzhou Women and Children's Medical Center, the Third Affiliated Hospital of Sun Yat-sen University, and the Artificial Intelligence Research Institute of Hefei Comprehensive National Science Center.

We would also like to thank the following media outlets and public accounts for reporting on this project (in no particular order):

Media coverage: People's Daily, China.com, Guangming.com, TOM Technology, Future.com, Dazhong.com, China Development Report Network, China Daily Network, Xinhua News Network, China.com, Toutiao, Sohu, Tencent News, NetEase News, China Information Network, China Communication Network, China City Report Network, China City Network

Public accounts: Guangdong Laboratory Construction, Intelligent Voice New Youth, Deep Learning and NLP, AINLP

    @inproceedings{chen-etal-2023-soulchat,
        title = "{S}oul{C}hat: Improving {LLM}s{'} Empathy, Listening, and Comfort Abilities through Fine-tuning with Multi-turn Empathy Conversations",
        author = "Chen, Yirong and Xing, Xiaofen and Lin, Jingkai and Zheng, Huimin and Wang, Zhenyu and Liu, Qi and Xu, Xiangmin",
        editor = "Bouamor, Houda and Pino, Juan and Bali, Kalika",
        booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",
        month = dec,
        year = "2023",
        address = "Singapore",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2023.findings-emnlp.83",
        pages = "1170--1183",
        abstract = "Large language models (LLMs) have been widely applied in various fields due to their excellent capability for memorizing knowledge and chain of thought (CoT). When these language models are applied in the field of psychological counseling, they often rush to provide universal advice. However, when users seek psychological support, they need to gain empathy, trust, understanding and comfort, rather than just reasonable advice. To this end, we constructed a multi-turn empathetic conversation dataset of more than 2 million samples, in which the input is the multi-turn conversation context, and the target is empathetic responses that cover expressions such as questioning, comfort, recognition, listening, trust, emotional support, etc. Experiments have shown that the empathy ability of LLMs can be significantly enhanced when finetuning by using multi-turn dialogue history and responses that are closer to the expression of a psychological consultant.",
    }