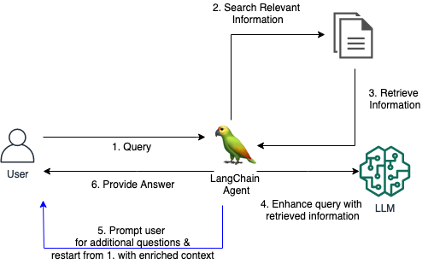

Traditional RAG systems often struggle to give satisfactory answers when users ask vague or ambiguous questions without enough context. The result is either an unhelpful response such as "I don't know" or an incorrect, made-up answer from the LLM. This repo contains code that improves traditional RAG Agents in these situations.

We introduce a custom LangChain Tool for a RAG Agent that lets the Agent hold a dialogue with the user when the initial question is unclear or too vague. By asking clarifying questions, prompting the user for more details, and incorporating contextual information, the Agent can gather the context it needs to provide an accurate, helpful answer, even from an ambiguous initial query.
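The clarification loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the repo's implementation: in a LangChain agent the LLM typically decides when to call a tool based on its description, whereas this sketch replaces that decision with a hard-coded keyword and regex heuristic so it runs without an LLM or LangChain installed. The `Tool` dataclass (which only mimics the name/description/func shape of a LangChain Tool), `missing_context`, `make_ask_human_tool`, `refine_question`, and the instance-type regex are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical stand-in for a LangChain Tool: same name/description/func shape,
# but a plain dataclass so the sketch runs without LangChain installed.
@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]


# Rough, assumed pattern for EC2 instance type names such as "p3.2xlarge" or "t3.micro".
INSTANCE_TYPE = re.compile(
    r"\b[a-z][a-z0-9-]*\.(?:nano|micro|small|medium|large|\d*xlarge|metal)\b"
)


def missing_context(question: str) -> Optional[str]:
    """Return a clarifying question if the user's question lacks needed context, else None."""
    q = question.lower()
    # Hypothetical heuristic: an EC2 question without an instance type is ambiguous.
    if "ec2" in q and "instance" in q and not INSTANCE_TYPE.search(q):
        return "Which EC2 instance type are you using (for example, p3.2xlarge)?"
    return None


def make_ask_human_tool(ask: Callable[[str], str]) -> Tool:
    # `ask` is injected so the same tool can wrap input(), a UI widget,
    # or a scripted reply in tests.
    return Tool(
        name="AskHuman",
        description="Ask the user a clarifying question when the request is ambiguous.",
        func=ask,
    )


def refine_question(question: str, ask_human: Tool, max_turns: int = 3) -> str:
    """Ask clarifying questions until the question is specific enough or max_turns is reached."""
    for _ in range(max_turns):
        clarification = missing_context(question)
        if clarification is None:
            break
        answer = ask_human.func(clarification)
        # Fold the user's reply back into the query that would go to the retriever.
        question = f"{question} (Context from user: {answer})"
    return question


if __name__ == "__main__":
    # Scripted user reply standing in for a real conversation.
    replies = iter(["p3.2xlarge"])
    tool = make_ask_human_tool(lambda prompt: next(replies))
    print(refine_question("How many GPUs does my EC2 instance have?", tool))
    # → How many GPUs does my EC2 instance have? (Context from user: p3.2xlarge)
```

Injecting the `ask` callable keeps the tool independent of the interface that collects the user's reply, and `max_turns` bounds the dialogue if the user never supplies the missing detail.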

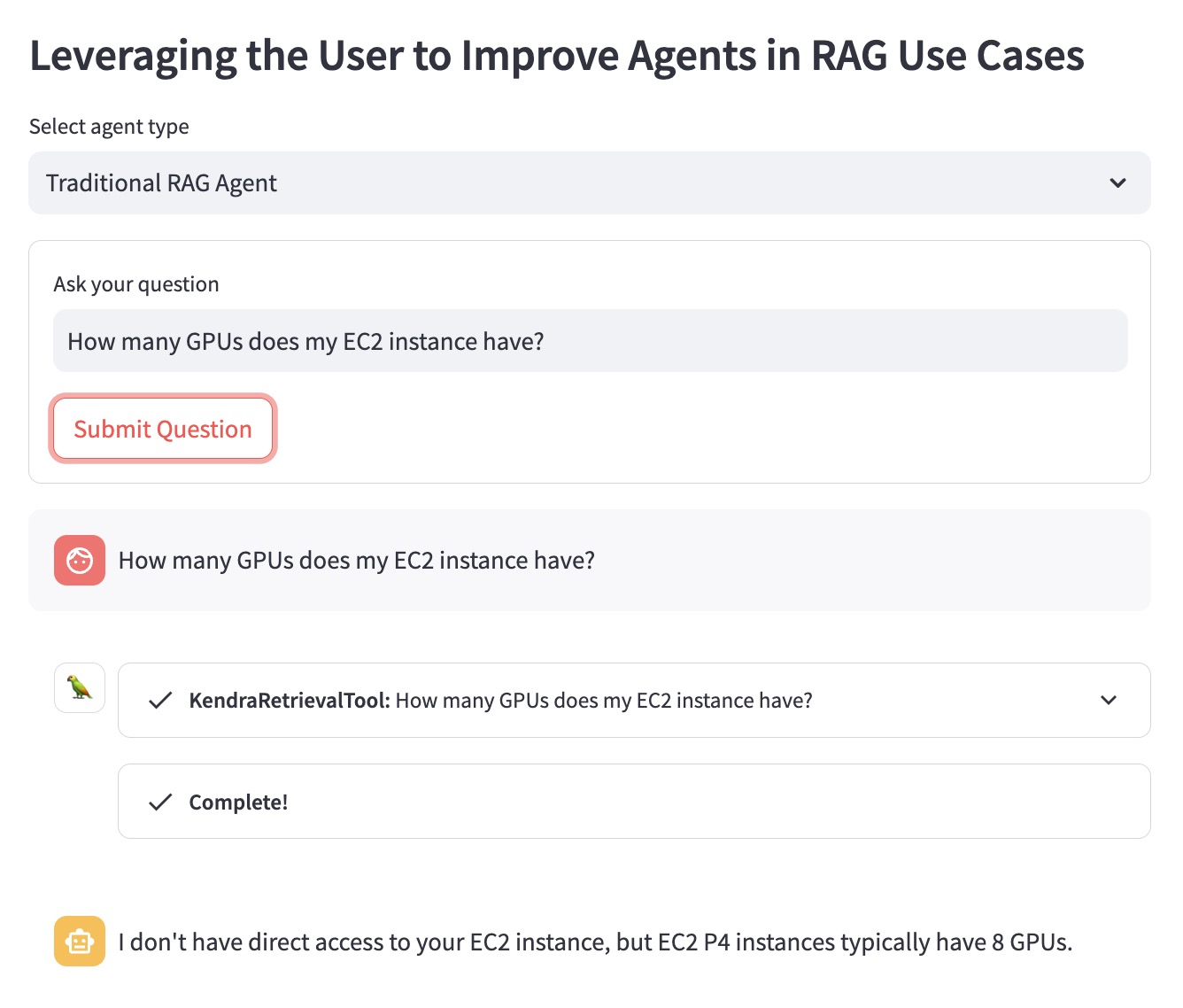

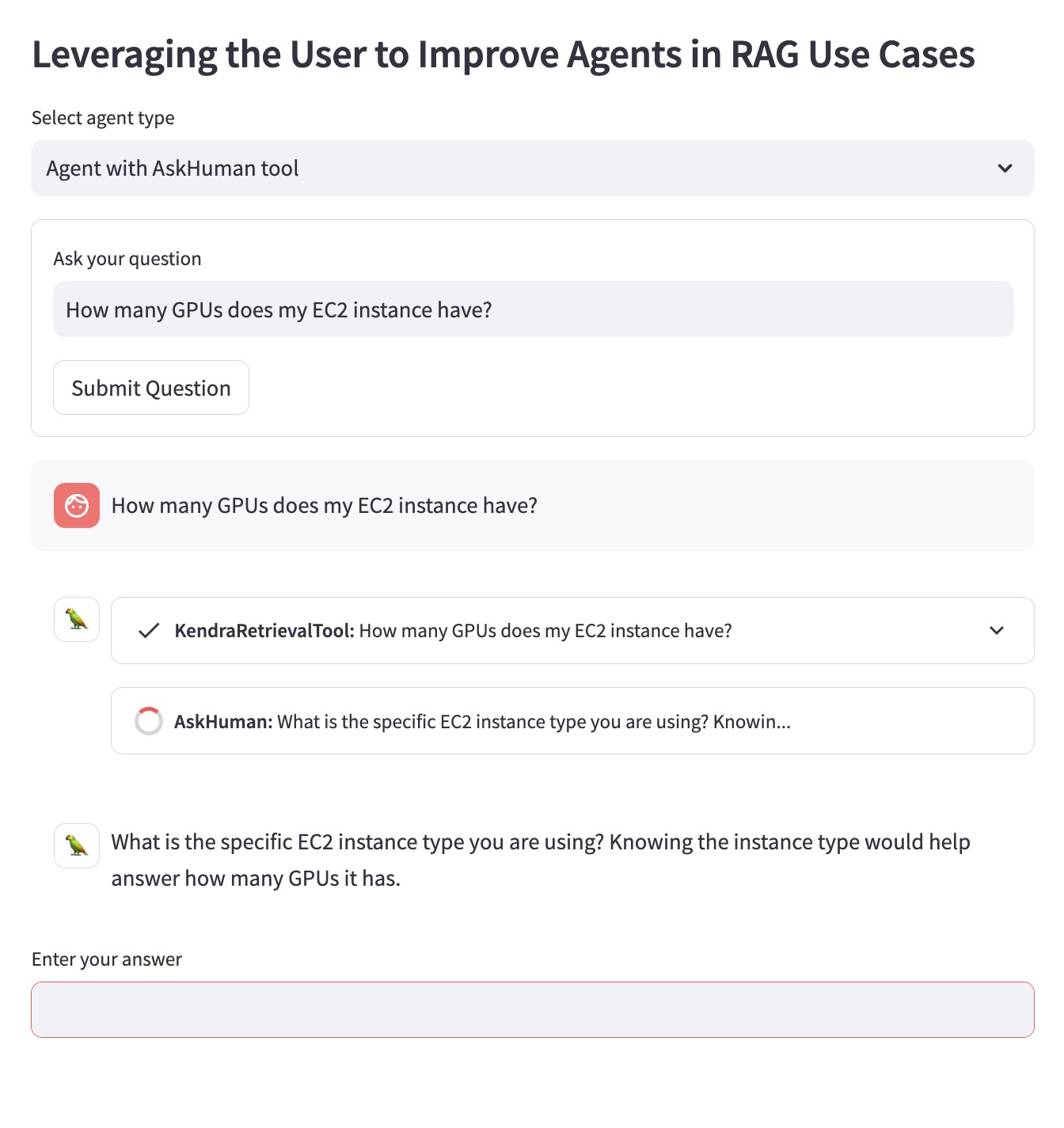

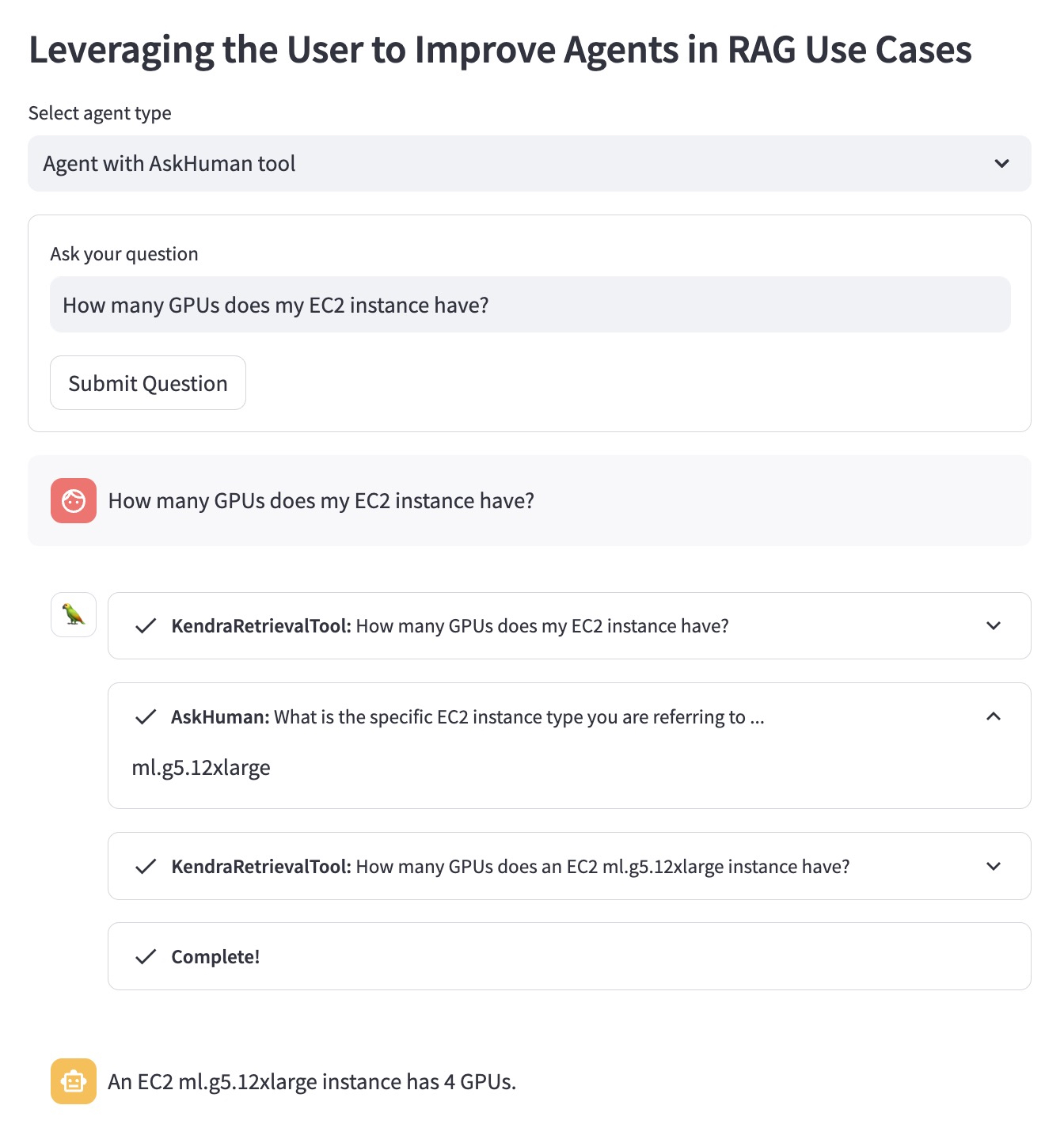

Let's illustrate the advantage using the following question example: "How many GPUs does my EC2 instance have?".

A traditional RAG Agent does not know which EC2 instance the user has in mind, so its answer is not very helpful:

The improved RAG Agent with the `AskHuman` tool performs two additional steps:

These steps help the improved Agent provide a specific, helpful answer:

To run this demo in your AWS account, follow these steps:

1. Replace the `llm` used in the LangChain Agent in `demo.py` with an LLM supported by LangChain.
2. Run `sh dependencies.sh` in the Terminal.
3. Replace `KENDRA_INDEX_ID` in the retriever parameters in `demo.py` with the ID of your Amazon Kendra index.
4. Run `streamlit run demo.py` in the Terminal.

Note that deploying a new Kendra index and running the demo might add charges to your bill. To avoid unnecessary costs, delete the Amazon Kendra index when you no longer need it, and shut down the SageMaker Studio instance if you used one to run the demo.