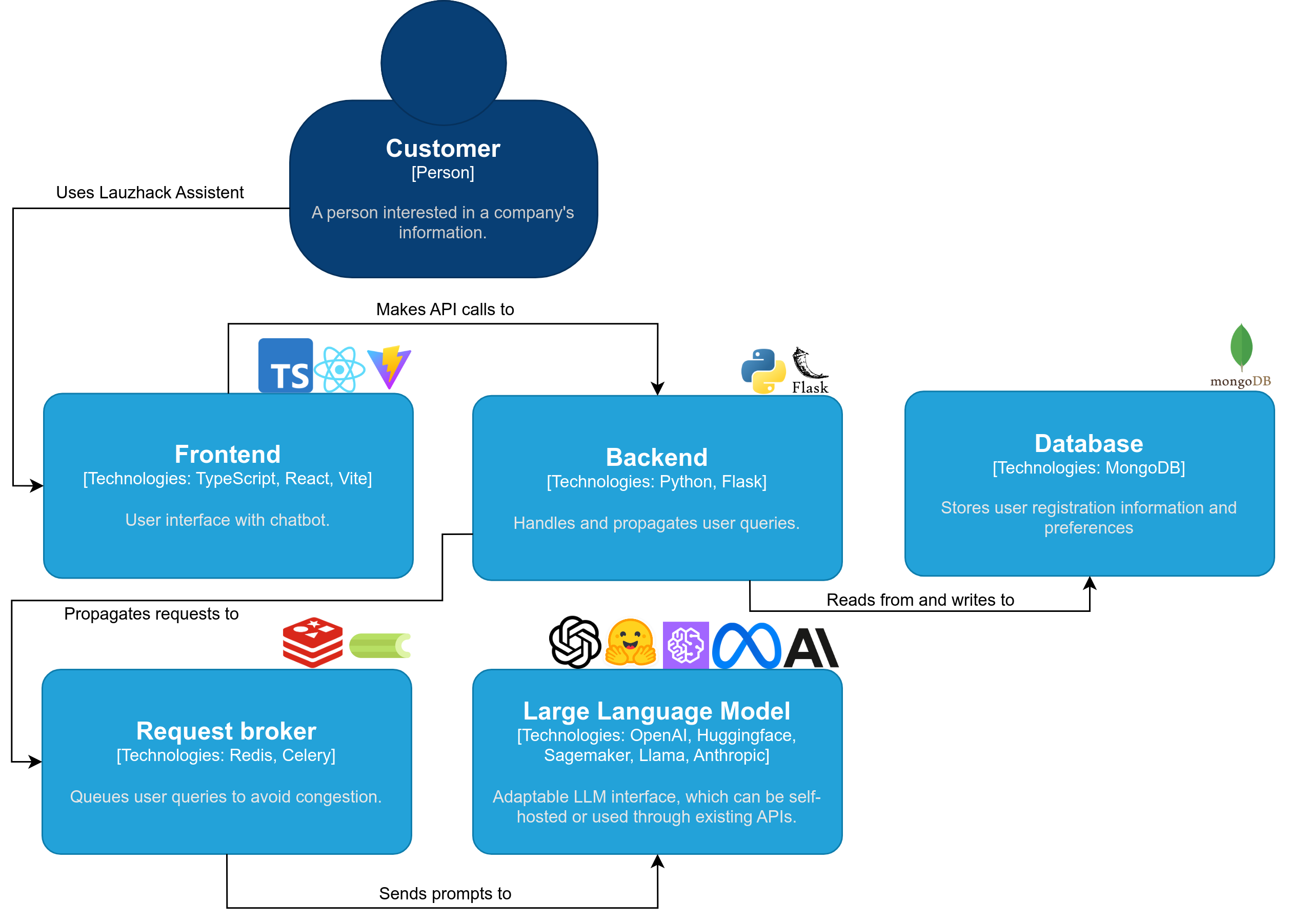

Lauzcom Assistant is an interactive, user-friendly tool that gives customers seamless access to critical Swisscom data. It integrates GPT models so that customers can ask questions about public Swisscom data and quickly receive accurate answers.

Say goodbye to time-consuming manual searches and let Lauzcom Assistant revolutionise your customer interactions.

The Lauzcom Assistant project was created by:

Demo video

Note

Make sure you have Docker installed.

On macOS or Linux, run:

./setup.sh

It installs all the dependencies and lets you either download a model to run locally or use OpenAI. Lauzcom Assistant then runs at http://localhost:5173.

Otherwise, follow these steps:

Clone this repository and open it:

git clone git@github.com:cern-lauzhack-2023/Lauzcom-Assistant.git

Create a .env file in the root directory of the repository. Set API_KEY to your OpenAI API key and VITE_API_STREAMING to true or false, depending on whether you want streamed answers.

API_KEY=<YourOpenAIKey>
VITE_API_STREAMING=true

See the /.env-template and /application/.env_sample files for optional environment variables.
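If you prefer to script this step, the following minimal sketch writes the two variables above into a .env file. The helper names `render_env` and `write_env` are hypothetical (not part of the repository), and `write_env` simply overwrites any existing file.

```python
from pathlib import Path


def render_env(api_key: str, streaming: bool) -> str:
    """Build the contents of a minimal root .env file."""
    lines = [
        f"API_KEY={api_key}",
        # Written as lowercase true/false, matching the example above.
        f"VITE_API_STREAMING={'true' if streaming else 'false'}",
    ]
    return "\n".join(lines) + "\n"


def write_env(path: Path, api_key: str, streaming: bool) -> None:
    """Write the .env file, overwriting any existing file at `path`."""
    path.write_text(render_env(api_key, streaming), encoding="utf-8")
```

Run from the repository root, `write_env(Path(".env"), api_key="<YourOpenAIKey>", streaming=True)` produces the same two lines as the example above.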

Run ./run-with-docker-compose.sh.

Lauzcom Assistant now runs at http://localhost:5173.

To stop, press Ctrl + C.

For development, only two of the containers defined in docker-compose.yaml are used, Redis and Mongo; all other services are removed. See the docker-compose-dev.yaml file.
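After starting these two services with the commands below, a plain TCP probe is enough to check that both accept connections. This sketch assumes the compose file publishes Redis and MongoDB on their default ports (6379 and 27017) on localhost; check docker-compose-dev.yaml if yours differ. The helpers are hypothetical, not part of the repository.

```python
import socket

# Assumed default ports; adjust to match docker-compose-dev.yaml.
SERVICES = {"redis": 6379, "mongo": 27017}


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


def check_services(host: str = "localhost") -> dict[str, bool]:
    """Probe each development service and report whether it is reachable."""
    return {name: port_is_open(host, port) for name, port in SERVICES.items()}
```

For example, `print(check_services())` should report `True` for both entries once the containers are up.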

Run:

docker compose -f docker-compose-dev.yaml build

docker compose -f docker-compose-dev.yaml up -d

Note

Make sure you have Python 3.10 or 3.11 installed.
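A setup script can fail fast on an unsupported interpreter. This minimal sketch only compares the major and minor version against the two versions named above; the function name `python_supported` is hypothetical.

```python
import sys

# Versions listed as supported in this README.
SUPPORTED = {(3, 10), (3, 11)}


def python_supported(version_info=sys.version_info) -> bool:
    """Return True if the major.minor version is 3.10 or 3.11."""
    return tuple(version_info[:2]) in SUPPORTED
```

Calling `python_supported()` with no argument checks the interpreter that is running the script.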

Prepare a .env file in the /application folder.

Copy .env_sample to .env and fill in your OpenAI API token for the API_KEY and EMBEDDINGS_KEY fields. (Check out application/core/settings.py if you want to see more configuration options.)
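Before starting the backend, you can check that application/.env actually sets both keys. This is a minimal sketch with hypothetical helper names; its parser only understands plain KEY=VALUE lines and does not handle quoting, escaping, or other dotenv features that the backend's own loader may support.

```python
from pathlib import Path

REQUIRED_KEYS = ("API_KEY", "EMBEDDINGS_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only, so values may contain "=".
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(env_path: Path) -> list[str]:
    """Return the required keys that are absent or empty in env_path."""
    values = parse_env(env_path.read_text(encoding="utf-8"))
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

An empty list from `missing_keys(Path("application/.env"))` means both fields are filled in.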

(Optional) Create a Python virtual environment, following the official Python documentation on virtual environments.

a) On Linux and macOS:

python -m venv venv

. venv/bin/activate

b) On Windows:

python -m venv venv

venv\Scripts\activate

Install dependencies for the backend:

pip install -r application/requirements.txt

Run the app:

flask --app application/app.py run --host=0.0.0.0 --port=7091

The backend API now runs at http://localhost:7091.
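Once Flask is running, you can confirm that the API answers on port 7091. This sketch only checks that some HTTP response comes back (any status code counts); it assumes nothing about the backend's routes, and the helper name `backend_responds` is hypothetical. It uses `http.client` directly, so proxy environment variables are not applied to the local request.

```python
import http.client
from urllib.parse import urlsplit


def backend_responds(url: str = "http://localhost:7091/", timeout: float = 2.0) -> bool:
    """Return True if the server at `url` sends back any HTTP response.

    Any status code counts (even 404), because it proves the server is up.
    """
    parts = urlsplit(url)
    conn = http.client.HTTPConnection(parts.hostname, parts.port or 80, timeout=timeout)
    try:
        conn.request("GET", parts.path or "/")
        conn.getresponse()
        return True
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, or a malformed/empty reply.
        return False
    finally:
        conn.close()
```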

Start the Celery worker:

celery -A application.app.celery worker -l INFO

Note

Make sure you have Node version 16 or higher.
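One way to check this requirement from a script is to parse the output of `node --version`, which prints a string such as v18.17.0. This sketch assumes that output format; the helper names `node_major` and `installed_node_ok` are hypothetical.

```python
import re
import shutil
import subprocess

MIN_NODE_MAJOR = 16  # minimum version stated in this README


def node_major(version_output: str) -> int:
    """Extract the major version from output like 'v18.17.0'."""
    match = re.match(r"v?(\d+)\.", version_output.strip())
    if match is None:
        raise ValueError(f"unexpected version string: {version_output!r}")
    return int(match.group(1))


def installed_node_ok() -> bool:
    """Return True if a `node` binary on PATH reports version 16 or higher."""
    if shutil.which("node") is None:
        return False
    result = subprocess.run(
        ["node", "--version"], capture_output=True, text=True, check=True
    )
    return node_major(result.stdout) >= MIN_NODE_MAJOR
```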

Install the required packages husky and vite (skip this if they are already installed):

npm install husky -g

npm install vite -g

Install the dependencies:

npm install --include=dev

Run the app:

npm run dev

The frontend now runs at http://localhost:5173.

The source code license is MIT, as described in the LICENSE file.

Built with LangChain