MMDialog

1.0.0

This repository is the official site for the ACL 2023 paper "MMDialog: A Large-scale Multi-turn Dialogue Dataset Towards Multi-modal Open-domain Conversation".

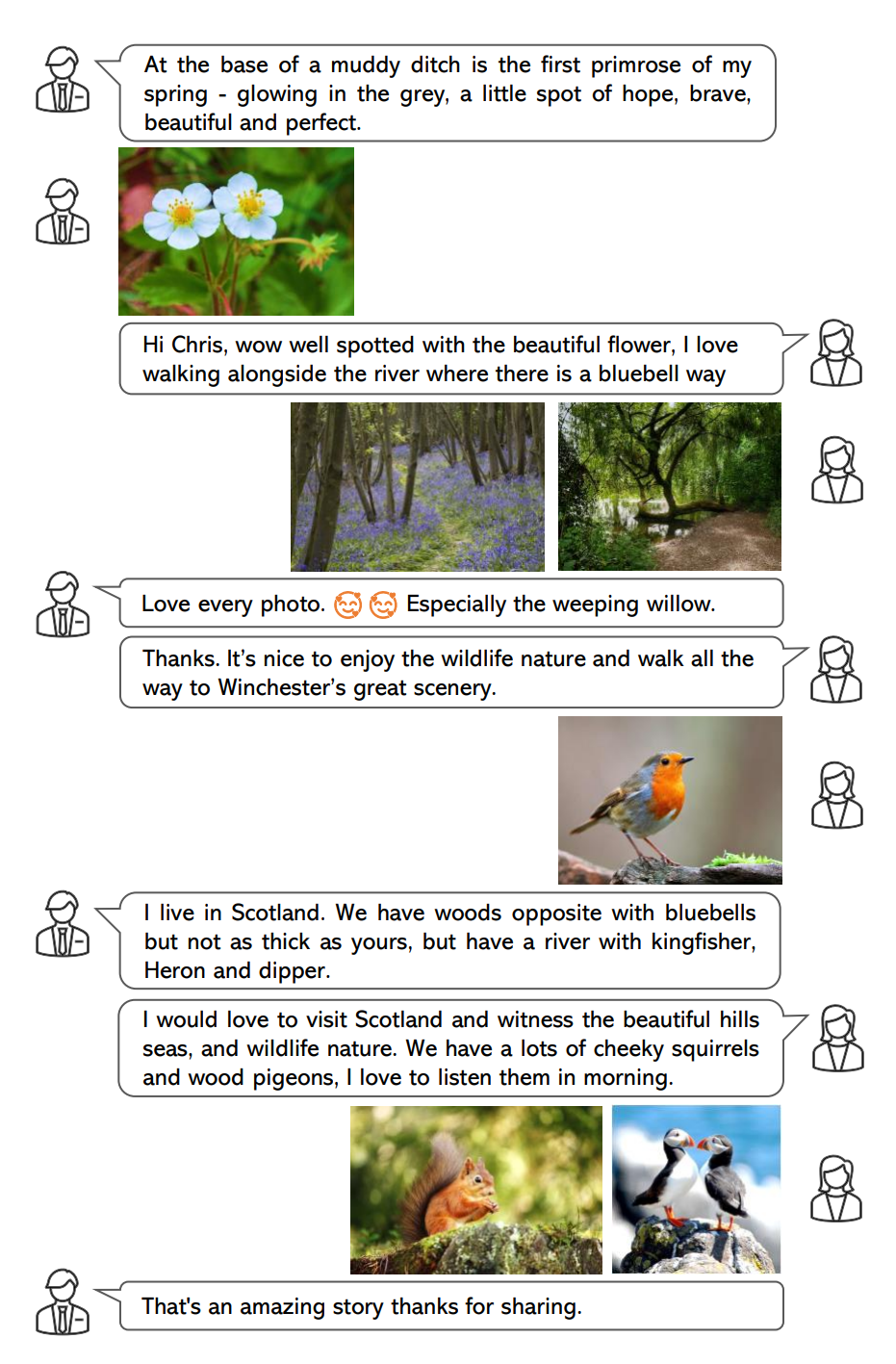

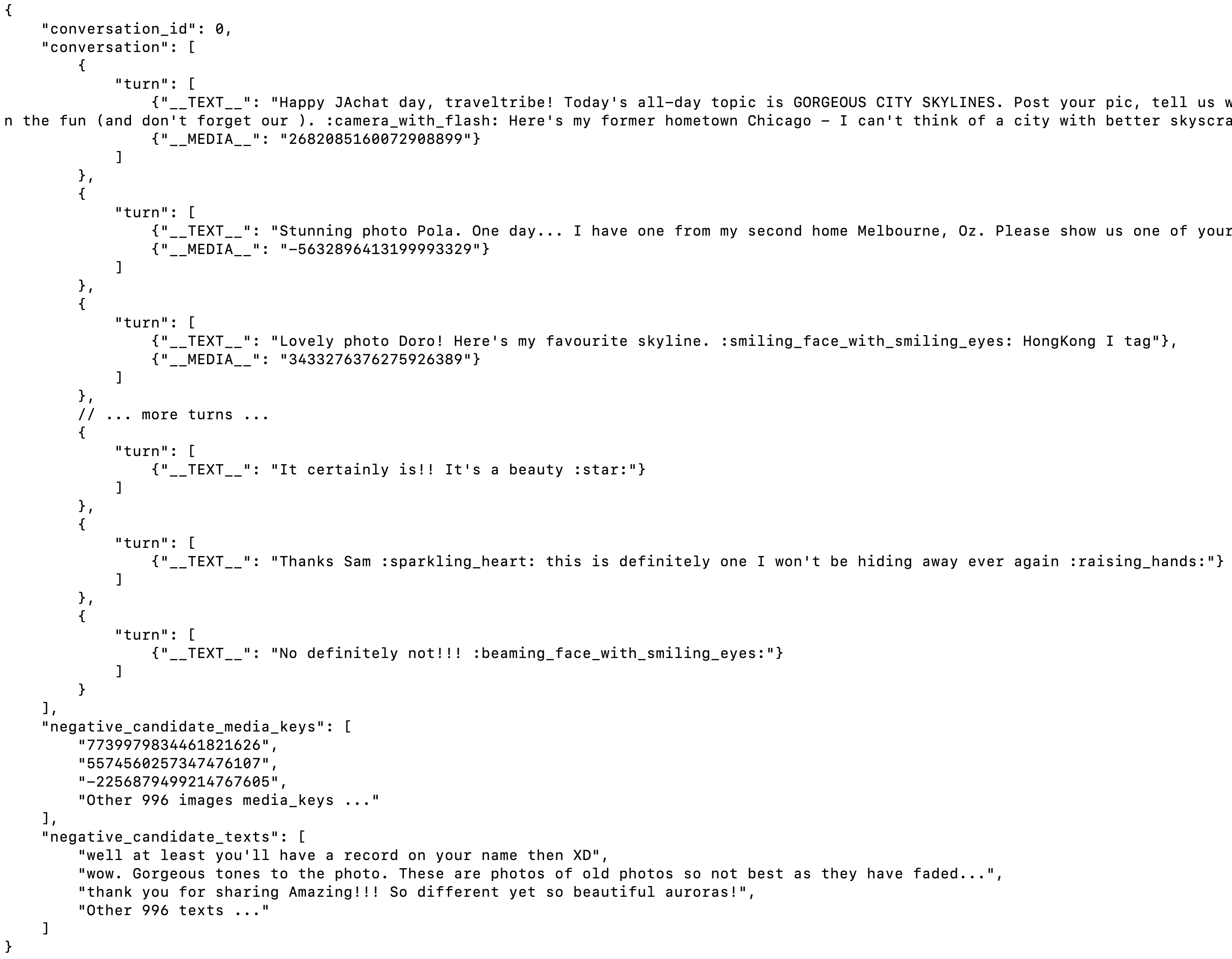

A Dialogue Case of MMDialog:

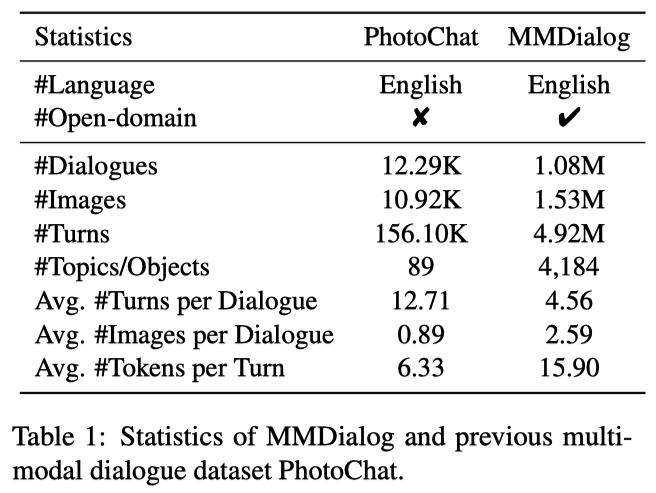

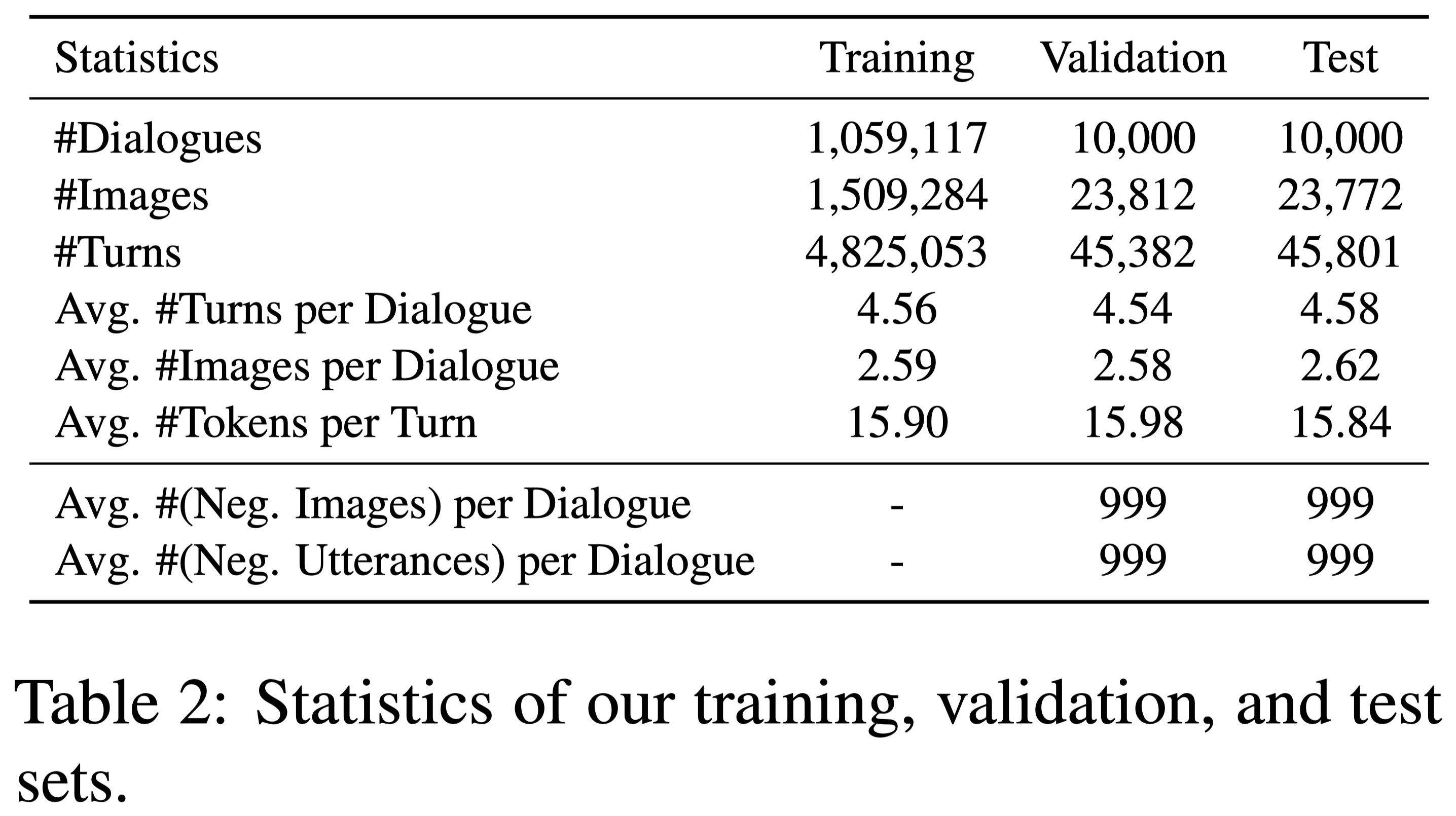

Statistics:

If you use MMDialog in your work, please cite our paper:

@inproceedings{feng-etal-2023-MMDialog,

title = "{MMD}ialog: A Large-scale Multi-turn Dialogue Dataset Towards Multi-modal Open-domain Conversation",

author = "Feng, Jiazhan and Sun, Qingfeng and Xu, Can and Zhao, Pu and Yang, Yaming and Tao, Chongyang and Zhao, Dongyan and Lin, Qingwei",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.405",

doi = "10.18653/v1/2023.acl-long.405",

pages = "7348--7363"

}

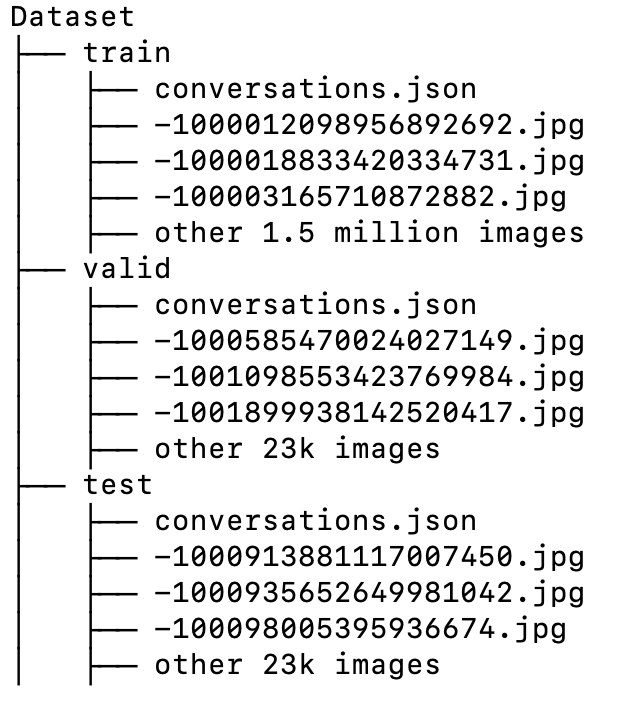

Dataset Folder Format:

File: conversations.json
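Once you have access to the dataset, a quick first check is to look at the top-level shape of `conversations.json`. The snippet below is a minimal sketch and not part of the official tooling: the helper name `summarize_json` is hypothetical, and it assumes nothing about the file's schema, which this README does not describe. It only reports the top-level JSON type, its length, and (for an object) the first few keys.

```python
import json
from pathlib import Path


def summarize_json(path):
    """Load a JSON file and describe its top-level structure.

    Hypothetical helper: it makes no assumption about the MMDialog schema.
    """
    with Path(path).open(encoding="utf-8") as f:
        data = json.load(f)

    summary = {"type": type(data).__name__}
    if isinstance(data, (list, dict)):
        summary["length"] = len(data)
    if isinstance(data, dict):
        # JSON object keys are always strings, so sorting is safe.
        summary["keys"] = sorted(data)[:10]
    return summary


if __name__ == "__main__":
    # The path is an assumption; point it at your local copy of the file.
    target = Path("conversations.json")
    if target.exists():
        print(summarize_json(target))
    else:
        print(f"{target} not found")
```

Note that `json.load` reads the whole file into memory, so for a very large file a streaming parser may be a better fit.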

Note:

If you do not meet all of the requirements above, we will not share the dataset with you.

| Item | Description |
|---|---|
| Your Name | [Your name here] |
| Your Role | [master’s student / doctoral candidate / post-doc / faculty / research-focused employee / others] |
| Your Study or Work Organization | e.g. Microsoft Research, DeepMind, Cornell University, ... |
| Your Personal Academic Homepage With Publications | Your [Google Scholar] or [Homepage_URL running on your organization website (e.g. yourname.people.xxx.edu / yourname.xxx.people.msr.microsoft.com)] with publications. |
| Non-commercial Use | I [promise / cannot promise] that I will not apply this MMDialog dataset to commercial scenarios or products. |
| Sharing Limitation | I [promise / cannot promise] I would not share this MMDialog dataset without your qualification review and permission. |
| Your Plan | (Describe your research plan and how you intend to use and analyze this data from your research. >= 50 words) |

Then send the completed form from your edu or research email account to [[email protected]] for review. If you meet all the requirements, we will share a cloud folder containing the pre-processed dataset within a week.