Anthony Chen1,2 · Jianjin Xu3 · Wenzhao Zheng4 · Gaole Dai1 · Yida Wang5 · Renrui Zhang6 · Haofan Wang2 · Shanghang Zhang1*

1Peking University · 2InstantX Team · 3Carnegie Mellon University · 4UC Berkeley · 5Li Auto Inc. · 6CUHK

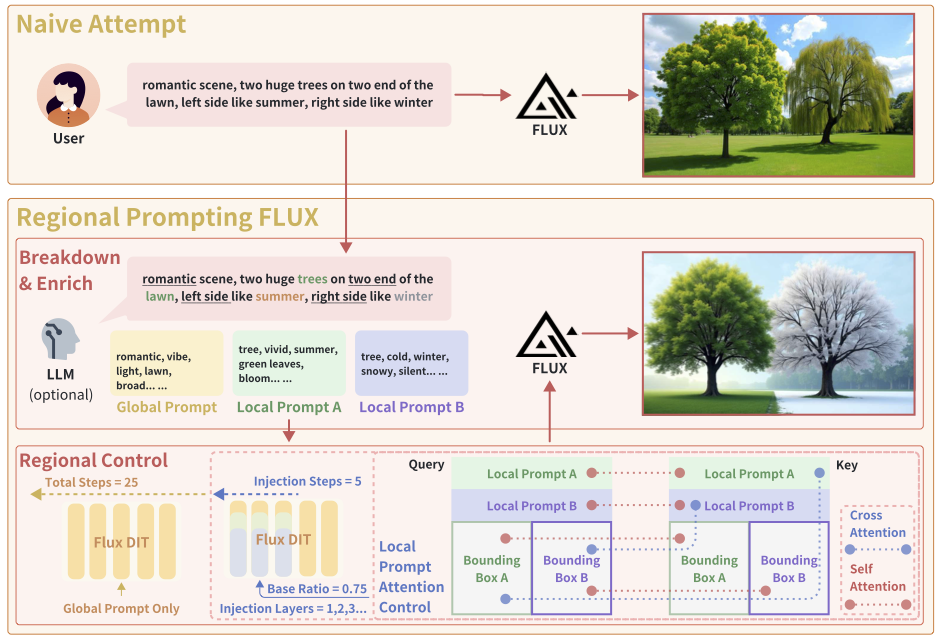

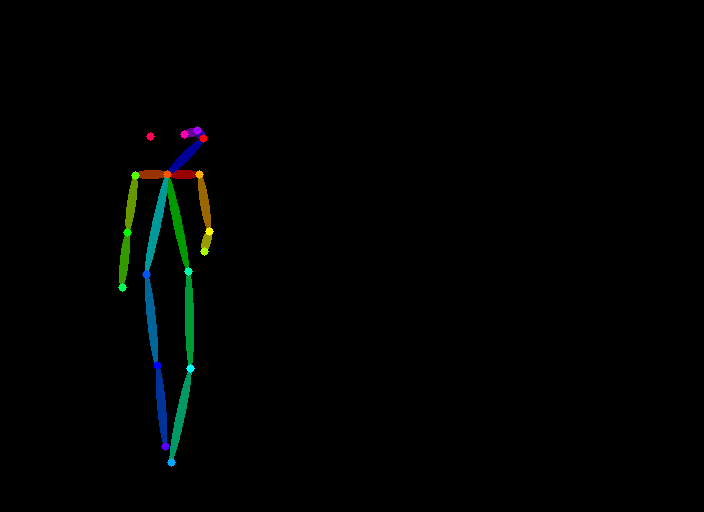

Training-free Regional Prompting for Diffusion Transformers (Regional-Prompting-FLUX) equips Diffusion Transformers (i.e., FLUX) with fine-grained compositional text-to-image generation capability in a training-free manner. Empirically, we show that our method is highly effective and compatible with LoRA and ControlNet.

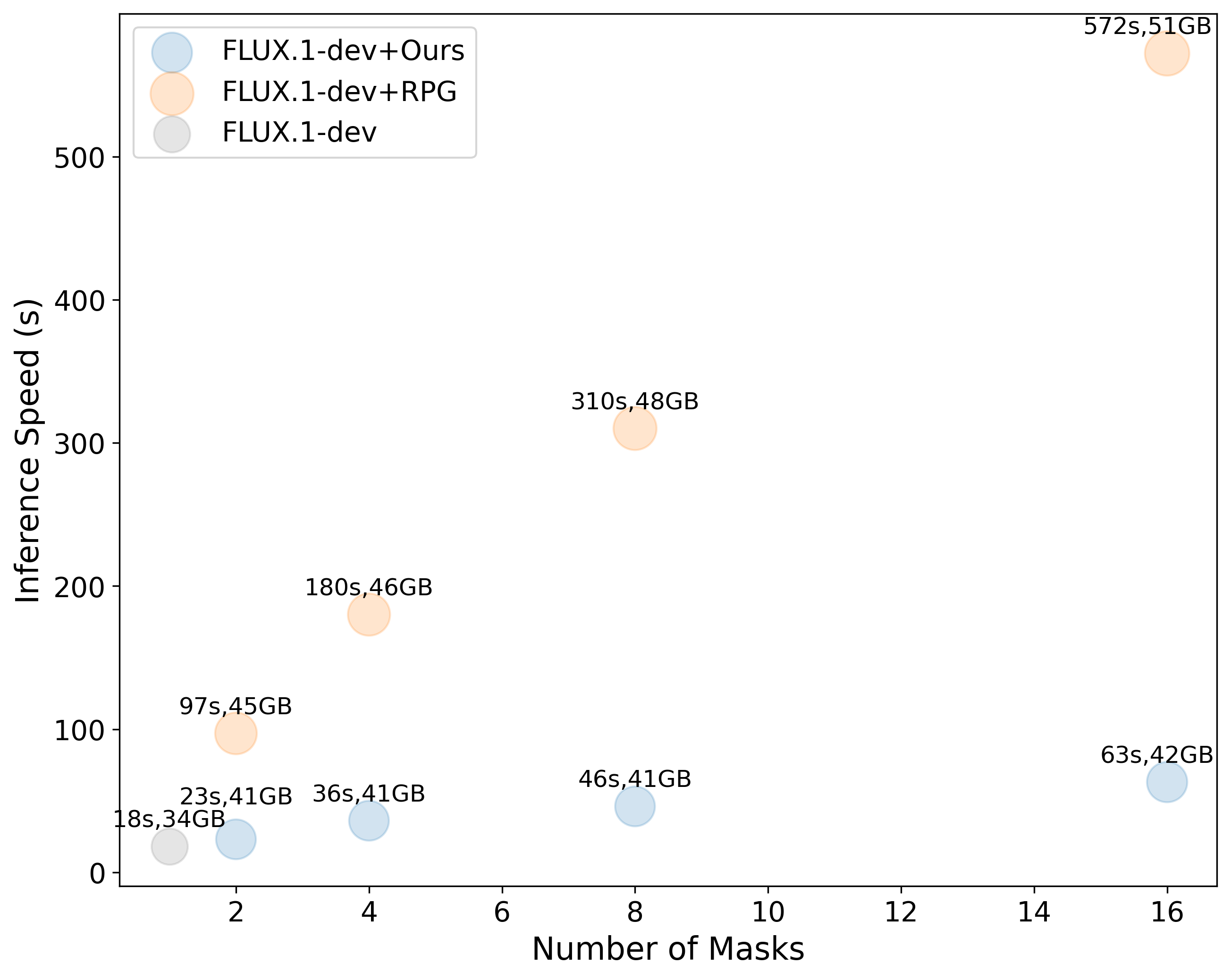

Inference with our method is much faster than with the RPG-based implementation and uses less GPU memory.

[2024/11/05] We release the code. Feel free to try it out!

[2024/11/05] We release the technical report!

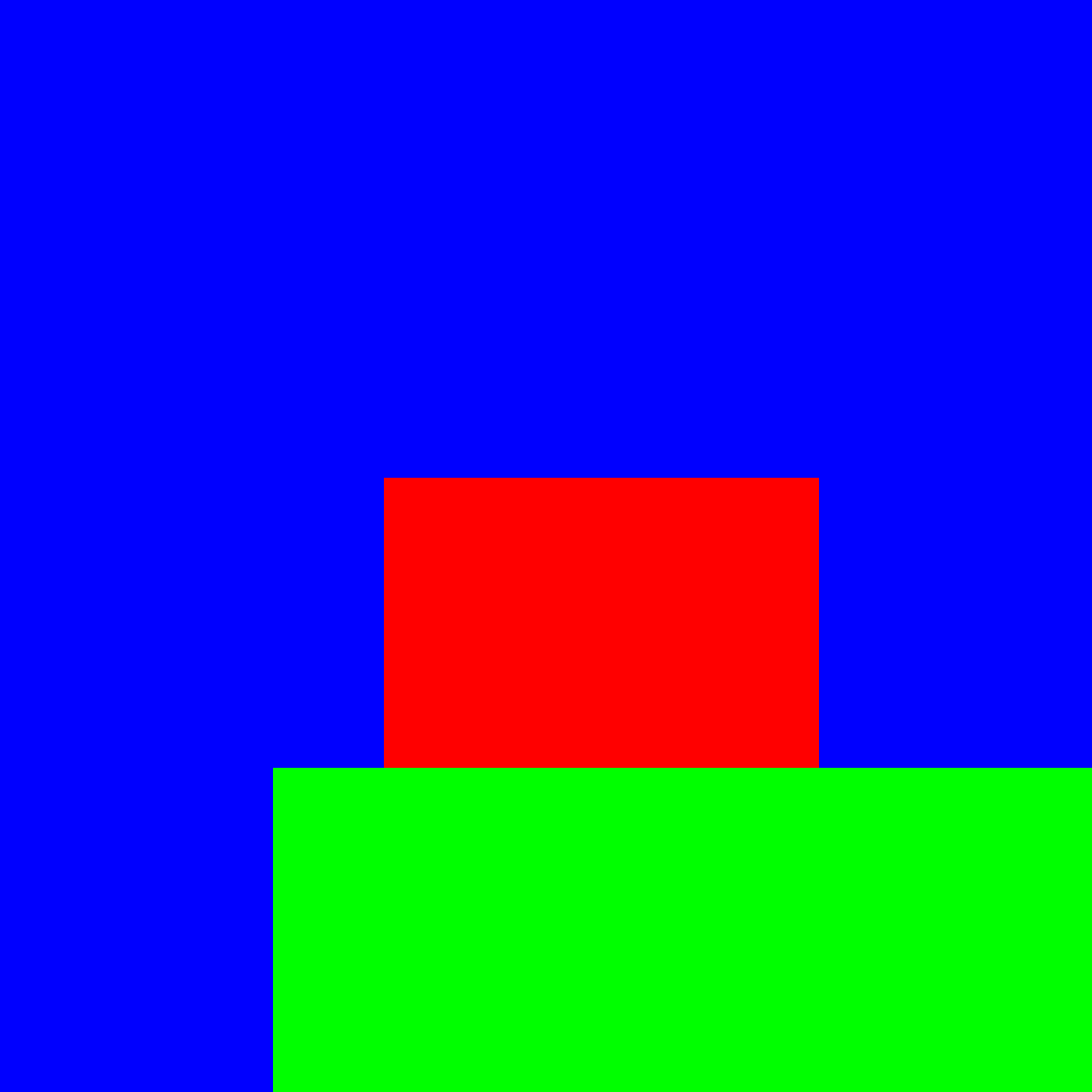

| Regional Masks | Configuration | Generated Result |
|---|---|---|
| Red: Cocktail region (xyxy: [450, 560, 960, 900]); Green: Table region (xyxy: [320, 900, 1280, 1280]); Blue: Background | Base Prompt: "A tropical cocktail on a wooden table at a beach during sunset." Background Prompt: "A photo" Regional Prompts: |  |
| Red: Rainbow region (xyxy: [0, 0, 1280, 256]); Green: Ship region (xyxy: [0, 256, 1280, 520]); Yellow: Fish region (xyxy: [0, 520, 640, 768]); Blue: Treasure region (xyxy: [640, 520, 1280, 768]) | Base Prompt: "A majestic ship sails under a rainbow as vibrant marine creatures glide through crystal waters below, embodying nature's wonder, while an ancient, rusty treasure chest lies hidden on the ocean floor." Regional Prompts: |  |
| Red: Woman with torch region (xyxy: [128, 128, 640, 768]); Green: Background | Base Prompt: "An ancient woman stands solemnly holding a blazing torch, while a fierce battle rages in the background, capturing both strength and tragedy in a historical war scene." Background Prompt: "A photo." Regional Prompts: |  |
| Red: Dog region (assets/demo_custom_0_mask_0.png); Green: Cat region (assets/demo_custom_0_mask_1.png); Blue: Background | Base Prompt: "dog and cat sitting on lush green grass, in a sunny outdoor setting." Background Prompt: "A photo" Regional Prompts: | Note: Generation with segmentation masks is an experimental feature. The generated image is not perfectly constrained by the regions; we assume this is because the mask degrades during downsampling. |

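
The downsampling effect mentioned in the note can be illustrated with a toy round trip: shrink a binary mask to a coarse grid, expand it again, and compare it with the original. This is only a sketch under stated assumptions. The factor of 16 (8x VAE compression followed by 2x2 patch packing) and the use of nearest-neighbour sampling are assumptions about how a mask reaches token resolution, and all helper names are hypothetical rather than taken from this repository.

```python
def downsample_nearest(mask, factor):
    """Keep the top-left pixel of every factor x factor cell."""
    return [row[::factor] for row in mask[::factor]]


def upsample_nearest(mask, factor):
    """Expand every coarse cell back to factor x factor pixels."""
    out = []
    for row in mask:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def iou(a, b):
    """Intersection over union of two same-sized 0/1 masks."""
    pairs = [(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    inter = sum(1 for x, y in pairs if x and y)
    union = sum(1 for x, y in pairs if x or y)
    return inter / union if union else 1.0


def box_mask(size, x1, y1, x2, y2):
    """Square canvas with an axis-aligned xyxy box set to 1."""
    return [[1 if x1 <= x < x2 and y1 <= y < y2 else 0 for x in range(size)]
            for y in range(size)]


def disk_mask(size, cx, cy, r):
    """Square canvas with a filled disk, standing in for a segmentation mask."""
    return [[1 if (x - cx) ** 2 + (y - cy) ** 2 <= r * r else 0 for x in range(size)]
            for y in range(size)]


FACTOR = 16  # assumption: pixels per token along each axis


def roundtrip_iou(mask, factor=FACTOR):
    """How much of the mask survives a downsample/upsample round trip."""
    coarse = downsample_nearest(mask, factor)
    return iou(mask, upsample_nearest(coarse, factor))


aligned_box = box_mask(64, 16, 16, 48, 48)  # edges sit on multiples of 16
disk = disk_mask(64, 32, 32, 20)            # curved boundary, not grid-aligned

print("aligned box IoU:", roundtrip_iou(aligned_box))
print("disk IoU:", roundtrip_iou(disk))
```

In this toy setting a box whose edges fall on the coarse grid survives the round trip unchanged, while the curved shape does not, which is consistent with free-form masks being constrained less precisely than grid-aligned boxes.
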
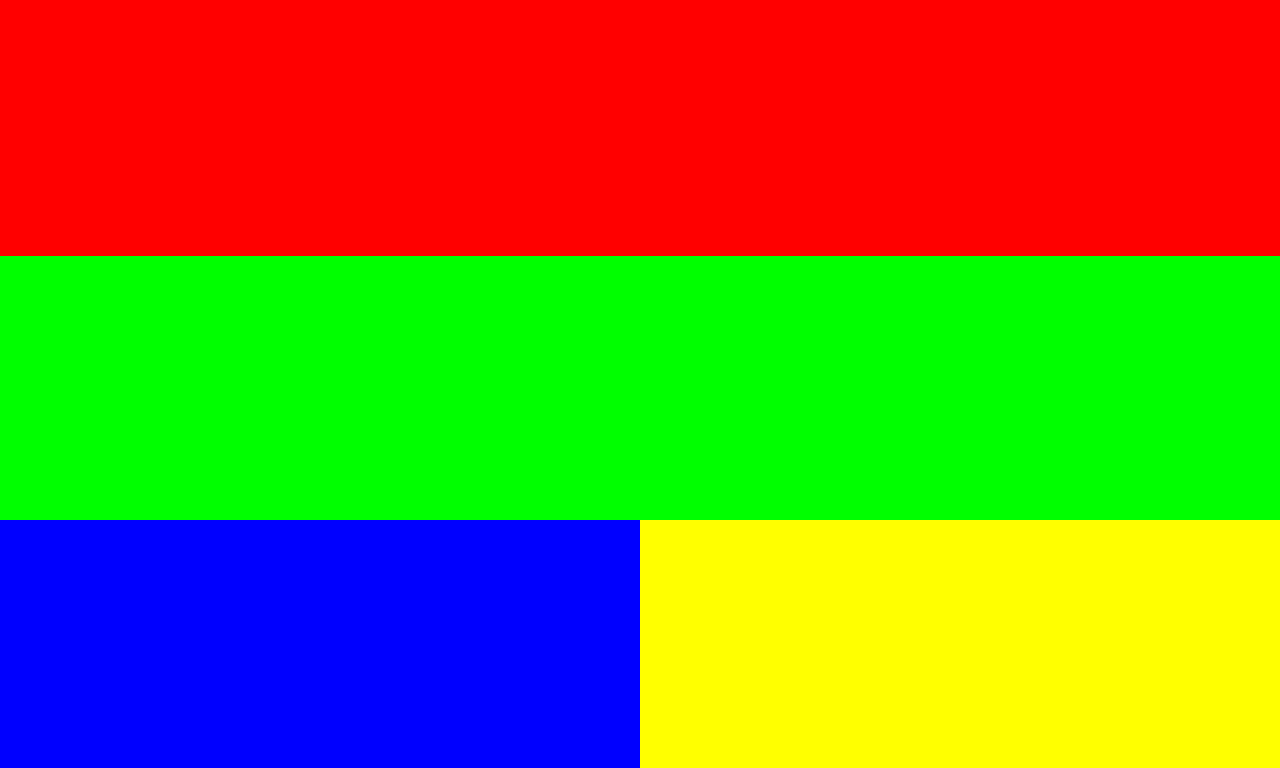

| Regional Masks | Configuration | Generated Result |
|---|---|---|
| Red: Dinosaur region (xyxy: [0, 0, 640, 1280]); Blue: City region (xyxy: [640, 0, 1280, 1280]) | Base Prompt: "Sketched style: A cute dinosaur playfully blowing tiny fire puffs over a cartoon city in a cheerful scene." Regional Prompts: |  |
| Red: UFO region (xyxy: [320, 320, 640, 640]) | Base Prompt: "A cute cartoon-style UFO floating above a sunny city street, artistic style blends reality and illustration elements" Background Prompt: "A photo" Regional Prompts: |  |

| Regional Masks | Configuration | Generated Result |
|---|---|---|
| Red: First car region (xyxy: [0, 0, 426, 968]); Green: Second car region (xyxy: [426, 0, 853, 968]); Blue: Third car region (xyxy: [853, 0, 1280, 968]) | Base Prompt: "Three high-performance sports cars, red, blue, and yellow, are racing side by side on a city street" Regional Prompts: |  |
| Red: Woman region (xyxy: [0, 0, 640, 968]); Green: Beach region (xyxy: [640, 0, 1280, 968]) | Base Prompt: "A woman walking along a beautiful beach with a scenic coastal view." Regional Prompts: |  |

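
Some rows in the tables carry a background prompt and others do not. One way to decide whether a background prompt is needed is to check whether the xyxy boxes leave any part of the canvas uncovered. The sketch below does this without allocating a pixel grid. `uncovered_area` is a hypothetical helper, and the 1280x968 canvas for the three-car row is an assumption inferred from the box extents.

```python
def uncovered_area(boxes, width, height):
    """Area of the canvas not covered by any xyxy box.

    x2 and y2 are treated as exclusive, matching slice semantics
    such as mask[y1:y2, x1:x2].
    """
    # Split the canvas into cells along every box edge (clamped to the canvas).
    xs = sorted({0, width, *(min(max(v, 0), width) for b in boxes for v in (b[0], b[2]))})
    ys = sorted({0, height, *(min(max(v, 0), height) for b in boxes for v in (b[1], b[3]))})

    area = 0
    for x_lo, x_hi in zip(xs, xs[1:]):
        for y_lo, y_hi in zip(ys, ys[1:]):
            # A cell is covered if it lies entirely inside at least one box.
            covered = any(
                b[0] <= x_lo and x_hi <= b[2] and b[1] <= y_lo and y_hi <= b[3]
                for b in boxes
            )
            if not covered:
                area += (x_hi - x_lo) * (y_hi - y_lo)
    return area


# Three-car row: the boxes tile the assumed 1280x968 canvas completely.
cars = [[0, 0, 426, 968], [426, 0, 853, 968], [853, 0, 1280, 968]]
print(uncovered_area(cars, 1280, 968))

# Woman-with-torch row on a 1280x768 canvas: most of the image is background.
torch_woman = [[128, 128, 640, 768]]
print(uncovered_area(torch_woman, 1280, 768))
```

A result of zero means the regions tile the image and no background prompt is required. Any positive value is the number of pixels a background prompt would have to describe.
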
We pin diffusers to an earlier commit to ensure reproducibility, because we found that newer diffusers versions may produce different results.

```bash
# install diffusers locally
git clone https://github.com/huggingface/diffusers.git
cd diffusers

# reset diffusers to version 0.31.dev, on which we developed Regional-Prompting-FLUX;
# other versions may produce different results
git reset --hard d13b0d63c0208f2c4c078c4261caf8bf587beb3b
pip install -e ".[torch]"
cd ..

# install other dependencies
pip install -U transformers sentencepiece protobuf PEFT

# clone this repo
git clone https://github.com/antonioo-c/Regional-Prompting-FLUX.git

# replace file in diffusers
cd Regional-Prompting-FLUX
cp transformer_flux.py ../diffusers/src/diffusers/models/transformers/transformer_flux.py
```
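
After installation, a quick sanity check can confirm that the Python environment picks up a diffusers build from the expected release line. This is a minimal sketch: it assumes the pinned commit reports a version string starting with `0.31`, the helper names are hypothetical, and it verifies neither the exact commit nor that `transformer_flux.py` was replaced.

```python
from importlib import metadata

EXPECTED_PREFIX = "0.31"  # assumption: the pinned commit reports a 0.31 dev version


def matches_expected(version, prefix=EXPECTED_PREFIX):
    """True if a version string belongs to the expected release line."""
    return version == prefix or version.startswith(prefix + ".")


def installed_diffusers_version():
    """Return the installed diffusers version string, or None if it is absent."""
    try:
        return metadata.version("diffusers")
    except metadata.PackageNotFoundError:
        return None


found = installed_diffusers_version()
if found is None:
    print("diffusers is not installed in this environment")
elif matches_expected(found):
    print(f"diffusers {found} matches the expected {EXPECTED_PREFIX} line")
else:
    print(f"diffusers {found} differs from the expected {EXPECTED_PREFIX} line; results may differ")
```
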

See `infer_flux_regional.py` for detailed examples (including LoRAs and ControlNets). Below is a quick-start example.

```python
import torch
from pipeline_flux_regional import RegionalFluxPipeline, RegionalFluxAttnProcessor2_0

pipeline = RegionalFluxPipeline.from_pretrained("black-forest-labs/FLUX.1-dev", torch_dtype=torch.bfloat16).to("cuda")

attn_procs = {}
for name in pipeline.transformer.attn_processors.keys():
    if 'transformer_blocks' in name and name.endswith("attn.processor"):
        attn_procs[name] = RegionalFluxAttnProcessor2_0()
    else:
        attn_procs[name] = pipeline.transformer.attn_processors[name]
pipeline.transformer.set_attn_processor(attn_procs)

## general settings
image_width = 1280
image_height = 768
num_inference_steps = 24
seed = 124
base_prompt = "An ancient woman stands solemnly holding a blazing torch, while a fierce battle rages in the background, capturing both strength and tragedy in a historical war scene."
background_prompt = "a photo"  # set by default, but if you want to enrich background, you can set it to a more descriptive prompt
regional_prompt_mask_pairs = {
    "0": {
        "description": "A dignified woman in ancient robes stands in the foreground, her face illuminated by the torch she holds high. Her expression is one of determination and sorrow, her clothing and appearance reflecting the historical period. The torch casts dramatic shadows across her features, its flames dancing vibrantly against the darkness.",
        "mask": [128, 128, 640, 768]
    }
}

## region control factor settings
mask_inject_steps = 10  # larger means stronger control, recommended between 5-10
double_inject_blocks_interval = 1  # 1 means strongest control
single_inject_blocks_interval = 1  # 1 means strongest control
base_ratio = 0.2  # smaller means stronger control

regional_prompts = []
regional_masks = []
background_mask = torch.ones((image_height, image_width))
for region_idx, region in regional_prompt_mask_pairs.items():
    description = region['description']
    mask = region['mask']
    x1, y1, x2, y2 = mask
    mask = torch.zeros((image_height, image_width))
    mask[y1:y2, x1:x2] = 1.0
    background_mask -= mask
    regional_prompts.append(description)
    regional_masks.append(mask)

# if regional masks don't cover the whole image, append background prompt and mask
if background_mask.sum() > 0:
    regional_prompts.append(background_prompt)
    regional_masks.append(background_mask)

image = pipeline(
    prompt=base_prompt,
    width=image_width, height=image_height,
    mask_inject_steps=mask_inject_steps,
    num_inference_steps=num_inference_steps,
    generator=torch.Generator("cuda").manual_seed(seed),
    joint_attention_kwargs={
        "regional_prompts": regional_prompts,
        "regional_masks": regional_masks,
        "double_inject_blocks_interval": double_inject_blocks_interval,
        "single_inject_blocks_interval": single_inject_blocks_interval,
        "base_ratio": base_ratio
    },
).images[0]

image.save("output.jpg")
```

Our work is sponsored by HuggingFace and fal.ai. Thanks!
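
The region-control settings in the quick start (`mask_inject_steps`, the two `*_inject_blocks_interval` values, and `base_ratio`) can be read as a schedule plus a blend. The sketch below is one plausible reading that matches the inline comments, not the repository's implementation. It assumes regional attention is active only during the first `mask_inject_steps` denoising steps, only in every `interval`-th transformer block, and that `base_ratio` linearly mixes the base-prompt output with the regional output. Both helper names and the toy block count are hypothetical.

```python
def uses_regional_attention(step, block_idx, mask_inject_steps, interval):
    """Assumed schedule: early steps only, and every interval-th block."""
    return step < mask_inject_steps and block_idx % interval == 0


def blend(base_out, regional_out, base_ratio):
    """Assumed linear mix: a smaller base_ratio gives the regional output more weight."""
    return [base_ratio * b + (1.0 - base_ratio) * r
            for b, r in zip(base_out, regional_out)]


# Toy schedule: 24 steps as in the quick start, with a hypothetical 4 blocks.
num_steps, num_blocks = 24, 4
for interval in (1, 2):
    active = sum(
        uses_regional_attention(step, block, mask_inject_steps=10, interval=interval)
        for step in range(num_steps)
        for block in range(num_blocks)
    )
    print(f"interval={interval}: regional attention in {active} of {num_steps * num_blocks} block calls")

# With base_ratio = 0.2, the regional output contributes 80% of the result.
print(blend([1.0, 1.0], [0.0, 0.0], base_ratio=0.2))
```

Under these assumptions, raising `mask_inject_steps`, lowering the intervals toward 1, or lowering `base_ratio` each increases the share of the computation driven by the regional prompts, which is the direction the comments in the quick start describe as stronger control.
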

If you find Regional-Prompting-FLUX useful for your research and applications, please cite us using this BibTeX:

```bibtex
@article{chen2024training,
  title={Training-free Regional Prompting for Diffusion Transformers},
  author={Chen, Anthony and Xu, Jianjin and Zheng, Wenzhao and Dai, Gaole and Wang, Yida and Zhang, Renrui and Wang, Haofan and Zhang, Shanghang},
  journal={arXiv preprint arXiv:2411.02395},
  year={2024}
}
```

For any questions, feel free to contact us via [email protected].