- Ümit Yılmaz @ylmz-dev

- Büşra Gökmen @newsteps8

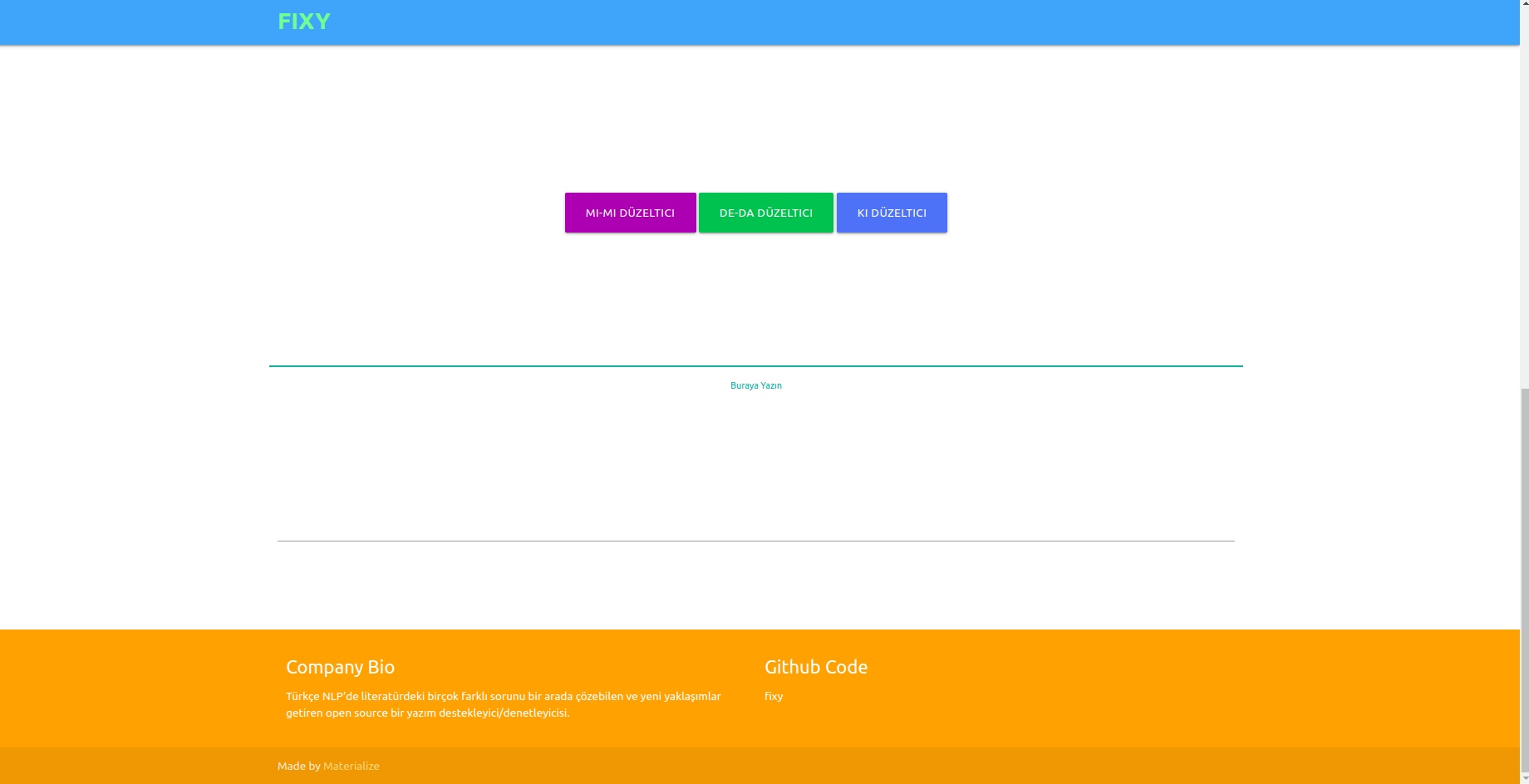

Our aim is to create an open source spelling assistant/checker that addresses several open problems in Turkish NLP, puts forward original approaches, and fills gaps left by earlier studies. It uses a deep learning approach to fix spelling errors in user-written text, and it performs semantic analysis to detect and correct errors that depend on context.

Although many libraries correct spelling errors, none of them could correct errors that depend on semantic context. Examples of such errors are -da/-de, -ki, and -mi, which must be written separately as conjunctions and adjacently as suffixes. Our approach is original and, in the comparison below, performs better than the other tools tested. 1D CNN, GRU, and LSTM RNN models were tested; a 2-layer bidirectional LSTM performed best, and its hyperparameters were tuned with Bayesian search optimization.

Three datasets were combined so that the model generalizes and performs well on both formal and informal language: the OPUS Subtitle dataset, the TSCORPUS Wikipedia dataset, and the TSCORPUS newspaper dataset.

More than 85 million rows of data were processed in our study. During pre-processing, adjacent suffixes were split from their words and replaced with "X"; separately written suffixes were also replaced with "X". The aim is for the model to give accurate results even when a sentence contains more than one suffix. After pre-processing, sentences containing separate suffixes were labeled 0, and sentences containing adjacent suffixes were labeled 1. Whichever label was in excess was then reduced by random undersampling so that class imbalance would not bias the model. As a result, the model learned how the suffixes are written correctly rather than learning the mistakes. 20% of each dataset was used as test data and 10% as validation data. All datasets are available in CSV format in the data folder or via the Drive links under the headings below.
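The masking, labeling, and undersampling steps described above can be sketched roughly as follows. This is only an illustration for the -de/-da case: the function names, the regular expression, and the rule that the first candidate in a sentence decides the label are assumptions, not the project's actual pre-processing code.

```python
import random
import re

# Assumed heuristic: a word of 4+ letters ending in de/da/te/ta carries an attached suffix.
ATTACHED = re.compile(r"^(\w{2,})(de|da|te|ta)$")
SEPARATE = {"de", "da"}


def mask_and_label(sentence):
    """Return (masked_sentence, label), or None when no candidate suffix is found.

    label 0: the suffix was written separately; label 1: it was written adjacently.
    Every candidate is replaced with "x"; the first one decides the label.
    """
    masked, label = [], None
    for tok in sentence.lower().split():
        match = ATTACHED.match(tok)
        if tok in SEPARATE:
            masked.append("x")
            label = 0 if label is None else label
        elif match:
            masked.extend([match.group(1), "x"])
            label = 1 if label is None else label
        else:
            masked.append(tok)
    return (" ".join(masked), label) if label is not None else None


def undersample(rows, seed=0):
    """Randomly drop rows of the larger class so both labels are equally frequent."""
    rng = random.Random(seed)
    by_label = {0: [], 1: []}
    for text, label in rows:
        by_label[label].append((text, label))
    n = min(len(by_label[0]), len(by_label[1]))
    balanced = rng.sample(by_label[0], n) + rng.sample(by_label[1], n)
    rng.shuffle(balanced)
    return balanced


print(mask_and_label("bu yaz bizimkiler de tatile gelecek"))
print(mask_and_label("kitap evde kaldı"))
```

A real pipeline would need morphological analysis to avoid masking words that merely end in these letters; the regular expression here only stands in for that step.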

You can load and test the models directly with the weights in the pre_trained_weights folder. Alternatively, you can use the train notebooks to train your own corrector, with your own data or the data we share, and with models you define yourself.

You can find performance results, comparisons, examples of sentences distinguished by the models, and drive links of the datasets under the following headings.

Python 3.6+

git clone https://github.com/Fixy-TR/fixy.git

To install the library:

pip install DeepChecker

Functions you can use and their uses:

The correct functions return the corrected version of the sentence. The check functions return the sigmoid output for the sentence; a value close to 0 indicates that the suffix should be written separately.

from DeepChecker import correct_de, correct_ki, correct_mi, check_de, check_ki, check_mi
print(correct_de("bu yaz bizimkiler de tatile gelecek"))  # prints the corrected sentence
print(check_de("bu yaz bizimkiler de tatile gelecek"))  # prints the sigmoid value

| Study | Accuracy Rate |
|---|---|
| fixy | 87% |
| Boğaziçi | 78% |
| Google Docs | 34% |
| Microsoft Office | 29% |
| ITU | 0% |
| LibreOffice | 0% |

It was tested with 100 difficult sentences created by Boğaziçi University. Related article

The methodology is original and takes a different approach from other studies in the literature. The difference in performance results supports the soundness of the approach.

from google.colab import drive
import pandas as pd
import keras
import pickle
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from sklearn.model_selection import train_test_split
import tensorflow as tf
from keras.layers import Dense, LSTM, Flatten, Embedding, Dropout, Activation, GRU, Input, Bidirectional, GlobalMaxPool1D, Convolution1D, TimeDistributed
from keras.models import Model, Sequential
from keras import initializers, regularizers, constraints, optimizers, layers

After installing the libraries, we load the model and test it.

# model and tokenizer are assumed to be the ones built in the training notebook
model.load_weights("/content/Model_deda.h5")
pred = tokenizer.texts_to_sequences(["olsun demek x zor artık"])
maxlen = 7
padded_pred = pad_sequences(pred, maxlen=maxlen)
model.predict(padded_pred)  # a value close to 0 indicates that the suffix should be written separately

array([[0.04085088]], dtype=float32)
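To make the tokenize-and-pad step concrete, here is a pure-Python sketch of what `texts_to_sequences` followed by `pad_sequences` with default settings does: words are mapped to integer ids, unknown words are skipped, and sequences are left-padded with zeros (or truncated from the front) to `maxlen`. The helper names and the toy vocabulary are hypothetical; the real ids come from the tokenizer fitted during training.

```python
def texts_to_ids(texts, word_index):
    """Map each whitespace-separated word to its integer id, skipping unknown words."""
    return [[word_index[w] for w in text.split() if w in word_index] for text in texts]


def pad_pre(sequences, maxlen, value=0):
    """Left-pad with `value`; keep only the last `maxlen` ids of longer sequences."""
    padded = []
    for seq in sequences:
        seq = seq[-maxlen:]
        padded.append([value] * (maxlen - len(seq)) + seq)
    return padded


# Hypothetical toy vocabulary, for illustration only.
word_index = {"olsun": 1, "demek": 2, "x": 3, "zor": 4, "artık": 5}
ids = texts_to_ids(["olsun demek x zor artık"], word_index)
print(pad_pre(ids, maxlen=7))  # [[0, 0, 1, 2, 3, 4, 5]]
```

Because padding happens on the left, the masked suffix "x" and its neighbours stay at the end of the sequence, closest to the last LSTM steps.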

- Accuracy on Test Data: 92.13%

- ROC AUC on Test Data: 0.921

Confusion Matrix:

[[336706  20522]
 [ 36227 327591]]

| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.9049 | 0.9397 | 0.9219 | 357228 |
| 1 | 0.9384 | 0.9030 | 0.9204 | 363818 |

Link to the labeled dataset containing 3605229 rows of data: Data

After installing the libraries, we load the model and test it.

# model and tokenizer are assumed to be the ones built in the training notebook
model.load_weights("/content/Model_ki.h5")
pred = tokenizer.texts_to_sequences(["desem x böyle böyle oldu"])
maxlen = 7
padded_pred = pad_sequences(pred, maxlen=maxlen)
model.predict(padded_pred)  # a value close to 0 indicates that the suffix should be written separately

array([[0.00843348]], dtype=float32)
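A sigmoid output like the one above still has to be turned into a decision. The sketch below assumes a 0.5 cut-off, which this README only states for the sentiment and formality classifiers, and a hypothetical helper name `interpret`; the library's own correct functions may decide differently.

```python
def interpret(score, threshold=0.5):
    """Map a sigmoid score to a decision (label 0 = separate, label 1 = adjacent)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    decision = "adjacent" if score >= threshold else "separate"
    # Confidence is the probability mass on the chosen side.
    confidence = score if decision == "adjacent" else 1.0 - score
    return decision, confidence


decision, confidence = interpret(0.00843348)
print(decision, round(confidence, 3))
```

Scores near the threshold carry little confidence, so an application could choose to leave such sentences unchanged instead of correcting them.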

- Accuracy on Test Data: 91.32%

- ROC AUC on Test Data: 0.913

Confusion Matrix:

[[27113  3311]
 [ 1968 28457]]

| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.9323 | 0.8912 | 0.9113 | 30424 |
| 1 | 0.8958 | 0.9353 | 0.9151 | 30425 |

Link to the labeled dataset containing 304244 rows of data: Data

After installing the libraries, we load the model and test it.

# model and tokenizer are assumed to be the ones built in the training notebook
model.load_weights("/content/Model_mi.h5")
pred = tokenizer.texts_to_sequences(["olsun demek x zor artık"])
maxlen = 7
padded_pred = pad_sequences(pred, maxlen=maxlen)
model.predict(padded_pred)  # a value close to 0 indicates that the suffix should be written separately

array([[0.04085088]], dtype=float32)

Link to the labeled -mi dataset containing 9507636 rows of data: Data

- Accuracy on Test Data: 95.41%

- ROC AUC on Test Data: 0.954

Confusion Matrix:

[[910361  40403]
 [ 46972 903792]]

| class | precision | recall | f1-score | support |
|---|---|---|---|---|
| 0 | 0.9509 | 0.9575 | 0.9542 | 950764 |
| 1 | 0.9572 | 0.9506 | 0.9539 | 950764 |
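For readers who want to check how the report follows from the confusion matrix, the short sketch below recomputes accuracy, precision, recall, and F1 from the -mi counts. It assumes the scikit-learn convention that rows are true classes and columns are predicted classes; under that assumption the results agree with the table to four decimals.

```python
def report(cm):
    """cm[i][j] = number of samples of true class i that were predicted as class j."""
    total = sum(sum(row) for row in cm)
    accuracy = (cm[0][0] + cm[1][1]) / total
    per_class = {}
    for c in (0, 1):
        tp = cm[c][c]
        precision = tp / (cm[0][c] + cm[1][c])  # column sum: everything predicted as c
        recall = tp / sum(cm[c])                # row sum: everything that truly is c
        f1 = 2 * precision * recall / (precision + recall)
        per_class[c] = (precision, recall, f1)
    return accuracy, per_class


accuracy, per_class = report([[910361, 40403], [46972, 903792]])
print(f"accuracy = {accuracy:.4f}")
for c, (precision, recall, f1) in per_class.items():
    print(f"class {c}: precision={precision:.4f} recall={recall:.4f} f1={f1:.4f}")
```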

The fact that there is no study on the -ki and -mi suffixes in the literature increases the originality of the project.

We trained a three-layer LSTM neural network on approximately 260000 sentences labeled as positive or negative. The embedding layer was initialized with randomly generated word vectors. After 10 epochs of training, the model reached an accuracy of 94.57%.

import numpy as np
import pandas as pd
from keras.preprocessing import sequence
from keras.models import Sequential
from keras.layers import Dense, Embedding, LSTM, Dropout
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences

After installing the libraries, we load the model with Keras.

from keras.models import load_model
model = load_model('hack_model.h5')  # load the model

We create test inputs.

# test reviews (inputs)

text1 = "böyle bir şeyi kabul edemem"

text2 = "tasarımı güzel ancak ürün açılmış tavsiye etmem"

text3 = "bu işten çok sıkıldım artık"

text4 = "kötü yorumlar gözümü korkutmuştu ancak hiçbir sorun yaşamadım teşekkürler"

text5 = "yaptığın işleri hiç beğenmiyorum"

text6 = "tam bir fiyat performans ürünü beğendim"

text7 = "Bu ürünü beğenmedim"

texts = [text1, text2, text3, text4, text5, text6, text7]

We tokenize and pad the test inputs.

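# tokenize (turkish_tokenizer is assumed to be the Tokenizer fitted during training)
tokens = turkish_tokenizer.texts_to_sequences(texts)
# pad (max_tokens is assumed to be the sequence length used during training)
tokens_pad = pad_sequences(tokens, maxlen=max_tokens)

The model predicts the sentiment of each input.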

for i in model.predict(tokens_pad):
    if i < 0.5:
        print("negative")  # negative review
    else:
        print("positive")  # positive review

negative
negative
negative
positive
negative
positive
positive

Link to the labeled positive-negative dataset containing 260000 rows of data: Data

We trained a three-layer LSTM neural network on approximately 2504900 rows of data collected from Twitter, newspapers, and Wikipedia and labeled as formal (well-formed) or informal (not well-formed). The embedding layer was initialized with randomly generated word vectors. After 10 epochs of training, the model reached an accuracy of 95.37%.

import numpy as np
import pandas as pd
from keras.preprocessing import sequence
from keras.models import Sequential
from keras.layers import Dense, Embedding, LSTM, Dropout
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences

After installing the libraries, we load the model with Keras.

from keras.models import load_model
model = load_model('MODEL_FORMAL.h5')  # load the model

We create test inputs.

# create the test inputs

text1 = "atatürk, bu görevi en uzun süre yürüten kişi olmuştur."

text2 = "bdjfhdjfhdjkhj"

text3 = "hiç resimde gösterildiği gibi değil..."

text4 = "bir yirminci yüzyıl popüler kültür ikonu haline gelen ressam, resimlerinin yanı sıra inişli çıkışlı özel yaşamı ve politik görüşleri ile tanınır. "

text5 = "fransız halkı önceki döneme göre büyük bir evrim geçirmektedir. halk bilinçlenmektedir ve sarayın, kralın, seçkinlerin denetiminden çıkmaya başlamıştır. şehirlerde yaşayan pek çok burjuva, büyük bir atılım içindedir. kitaplar yaygınlaşmakta, aileler çocuklarını üniversitelere göndererek sağlam bir gelecek kurma yolunu tutarak kültürel seviyeyi yükseltmektedir. bağımsız yayıncıların çıkardıkları gazete, bildiri ve broşürler, kitlesel bilinçlenmeye yol açmaktadır. bu koşullar da toplumsal değişim taleplerinin olgunlaşmasına yol açmıştır."

text6 = "bunu çıkardım söylediklerinden"

text7 = "Bu koşullar da toplumsal değişim taleplerinin olgunlaşmasına yol açmıştır."

text8 = "bu çok saçma yaa"

text9 = "bana böyle bir yetki verilmedi."

text10 = "napıcaz bu işi böyle"

text11 = "Öncelikle Mercedes-Benz’e olan ilgin için teşekkür ederiz."

text12 = "Ekibimizle çalışma isteğin için teşekkür ediyor, sağlıklı günler ve kariyerinde başarılar diliyoruz. Farklı etkinlik ve programlarda tekrar bir araya gelmek dileğiyle."

text13 = "Ben de öyle olduğunu düşünmüyordum ama gittik yine de jzns"

texts = [text1, text2, text3, text4, text5, text6, text7, text8, text9, text10, text11, text12, text13]

We tokenize and pad the test inputs.

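# tokenize (tokenizer is assumed to be the Tokenizer fitted during training)
tokens = tokenizer.texts_to_sequences(texts)
# pad (max_tokens is assumed to be the sequence length used during training)
tokens_pad = pad_sequences(tokens, maxlen=max_tokens)

The model predicts whether each input is formal or informal.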

# predict on the test data
for i in model.predict(tokens_pad):
    if i < 0.5:
        print("informal")
    else:
        print("formal")

formal
informal
informal
formal
formal
informal
formal
informal
informal
informal
formal
informal
informal

Link to the labeled formal dataset containing 1204900 rows of data: Data

Link to the labeled informal dataset containing 3934628 rows of data: Data

We trained LinearSVC (SVM), MultinomialNB, LogisticRegression, and RandomForestClassifier models on a dataset of 27350 samples labeled with 6 emotions (Fear, Happy, Sadness, Disgust, Anger, Suprise). Before modeling, we vectorized the words in the data with a TF-IDF vectorizer and a Turkish stopword list. Among these models, LinearSVC achieved the highest accuracy.

| Model | Accuracy |
|---|---|
| LinearSVC | 0.80 |
| LogisticRegression | 0.79 |
| MultinomialNB | 0.78 |
| RandomForestClassifier | 0.60 |
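As a rough illustration of the TF-IDF weighting that feeds these classifiers, the sketch below computes sublinear term frequency, smoothed inverse document frequency, and L2 normalisation in pure Python. It mirrors the formulas scikit-learn documents for `sublinear_tf=True` with default smoothing, but it is simplified to unigrams with no stopword list or `min_df` filter, and the function name is hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document, L2-normalised."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Sublinear tf: 1 + ln(count) instead of the raw count.
        weights = {t: (1 + math.log(c)) * idf[t] for t, c in counts.items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({t: w / norm for t, w in weights.items()})
    return vectors


vectors = tfidf_vectors(["çok mutluyum", "sana çok kızgınım"])
print(vectors[0])
```

In the first document "mutluyum" receives a higher weight than "çok" because "çok" occurs in both documents and therefore has a lower inverse document frequency.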

import pickle
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import MultinomialNB
from sklearn.svm import LinearSVC
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

After loading the libraries, we load the pickled model and test it.

# load the model and test it
# myList (the Turkish stopword list) and df (the training DataFrame) are assumed to be defined as in the training notebook
tfidf = TfidfVectorizer(sublinear_tf=True, min_df=5, norm='l2', encoding='latin-1', ngram_range=(1, 2), stop_words=myList)
with open("emotion_model.pickle", 'rb') as f:
    loaded_model = pickle.load(f)
corpus = [
    "İşlerin ters gitmesinden endişe ediyorum",
    "çok mutluyum",
    "sana çok kızgınım",
    "beni şaşırttın",
]
tfidf.fit(df.Entry)  # fit the vocabulary on the training text
features = tfidf.transform(corpus).toarray()
result = loaded_model.predict(features)
print(result)

['Fear' 'Happy' 'Anger' 'Suprise']

We requested the dataset used for this model from TREMODATA. The dataset is also available at this Drive link: Data

You can display the models in an interface through the Flask API, run in a virtual environment. The libraries required for this are:

from flask_wtf import FlaskForm
from flask import Flask, request, render_template, redirect
import pickle
import re
from wtforms.validators import DataRequired
import pandas as pd
from os.path import join

You can connect your models to the frontend by running the app.py file.

In the backend, we used Zemberek's Normalization module for the rule-based corrector. Additionally, we used Zemberek's Informal Word Analysis module to see the more formal versions of the texts in the backend.

For deep learning based spell-checker models to learn spelling errors, the training data may need to pair correct sentences with incorrect versions of them. We identified common spelling mistakes in Turkish and wrote noise functions that use them to distort correct words, which yields noisy data for modeling. You can find the functions in the file Noice_Adder_Functions.ipynb.
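The idea of generating noisy training data can be sketched as below. The two error types shown (typing Turkish letters as their ASCII look-alikes, and dropping a character) are assumptions chosen for illustration, and the function names are hypothetical; the notebook's own functions may cover different mistakes.

```python
import random

# Assumed error type 1: Turkish-specific letters typed as ASCII look-alikes.
ASCII_MAP = str.maketrans("çğıöşü", "cgiosu")


def strip_diacritics(sentence):
    """Replace Turkish-specific lowercase letters with their ASCII look-alikes."""
    return sentence.translate(ASCII_MAP)


def drop_random_char(word, rng):
    """Assumed error type 2: delete one character from words longer than three letters."""
    if len(word) <= 3:
        return word
    i = rng.randrange(len(word))
    return word[:i] + word[i + 1:]


def add_noise(sentence, p=0.3, seed=0):
    """Distort each word with probability p; the seed makes the result reproducible."""
    rng = random.Random(seed)
    words = [drop_random_char(w, rng) if rng.random() < p else w for w in sentence.split()]
    return " ".join(words)


clean = "sana çok kızgınım"
print((strip_diacritics(clean), clean))  # ('sana cok kizginim', 'sana çok kızgınım')
print((add_noise(clean, p=1.0), clean))
```

Each (noisy, clean) pair can then serve as one training example for a corrector.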

You can use the cleaned Turkish Wikipedia dataset of 2364897 lines in any Turkish NLP study :) Wikipedia Dataset

- https://acl-bg.org/proceedings/2019/RANLP%202019/pdf/RANLP009.pdf

- https://tscorpus.com

- https://github.com/ozturkberkay/Zemberek-Python-Examples

- https://www.kaggle.com/mustfkeskin/turkish-wikipedia-dump

- http://demir.cs.deu.edu.tr/tremo-dataset/