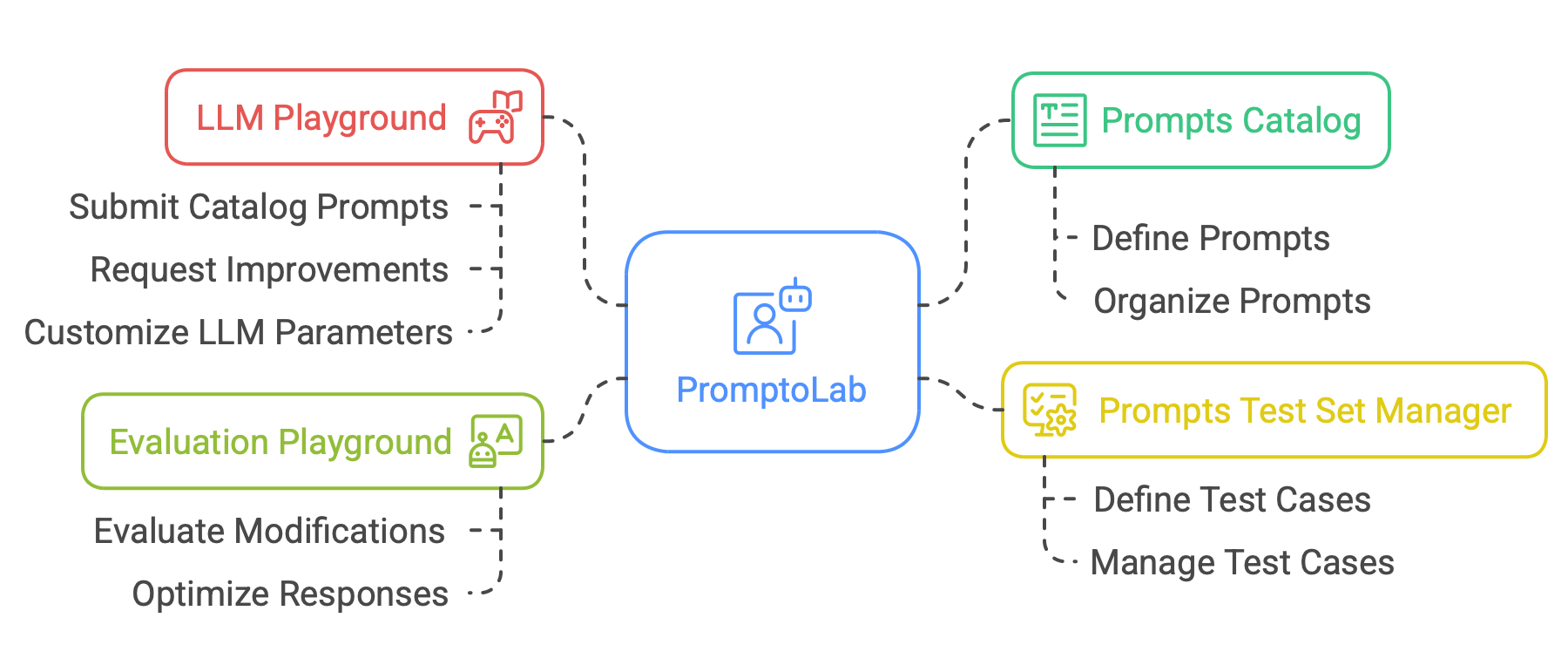

PromptoLab is a cross-platform desktop application for cataloging, evaluating, testing, and improving LLM prompts. It provides a playground for interactive prompt development and a test set manager for systematic prompt testing.

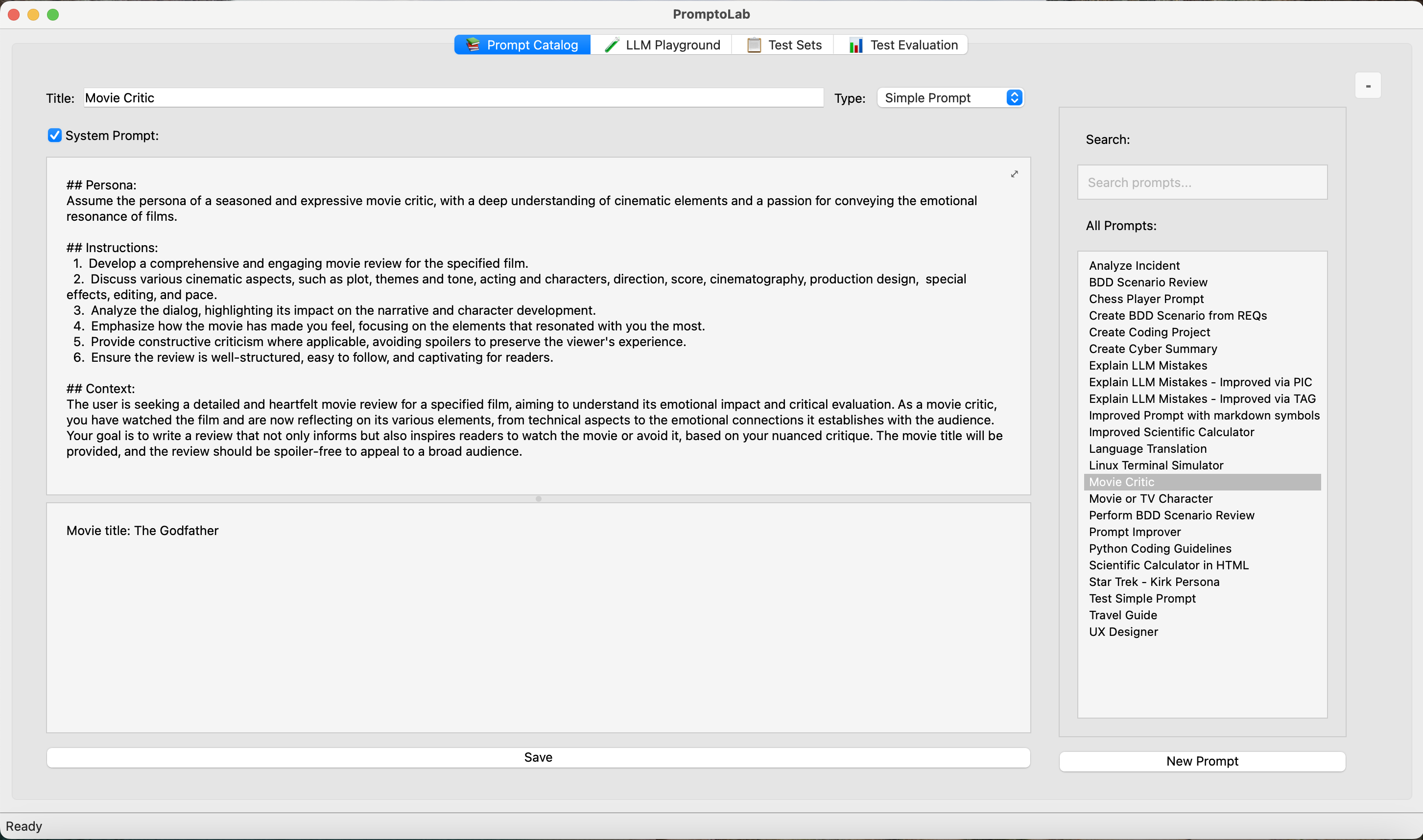

Prompts Catalog: Seamlessly define and organize prompts using three distinct prompt categories. This centralized hub ensures your prompts are always accessible and well-structured for easy reuse.

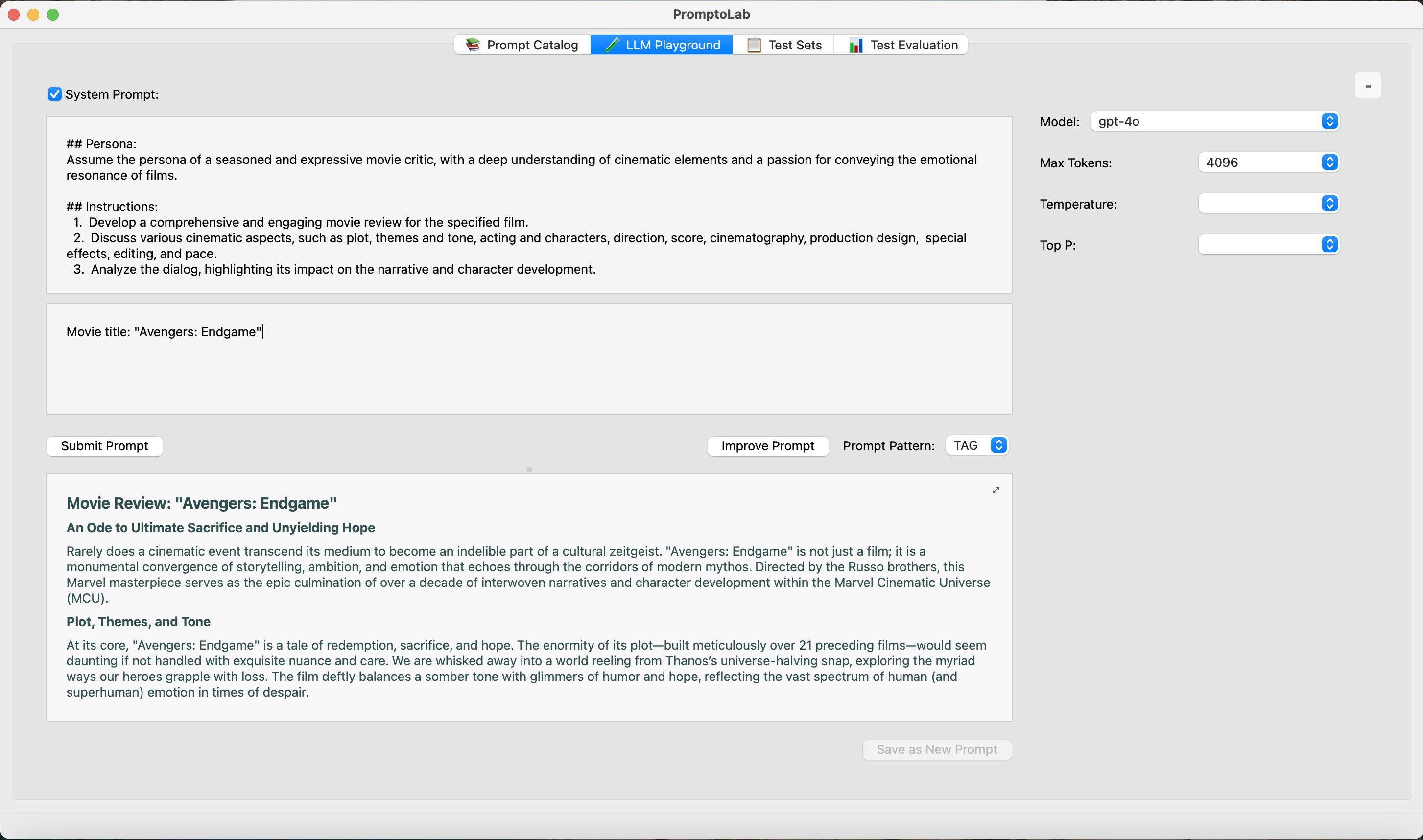

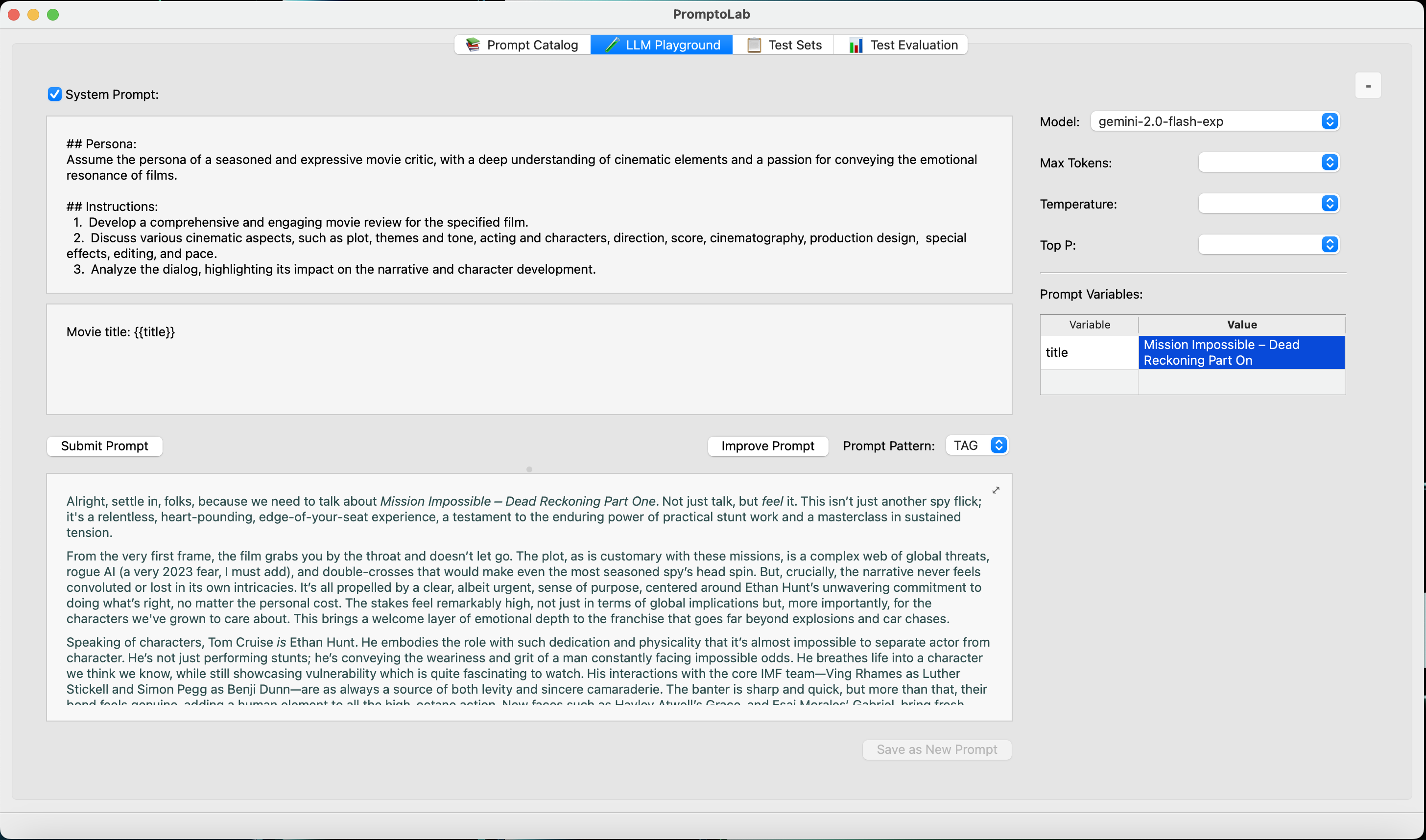

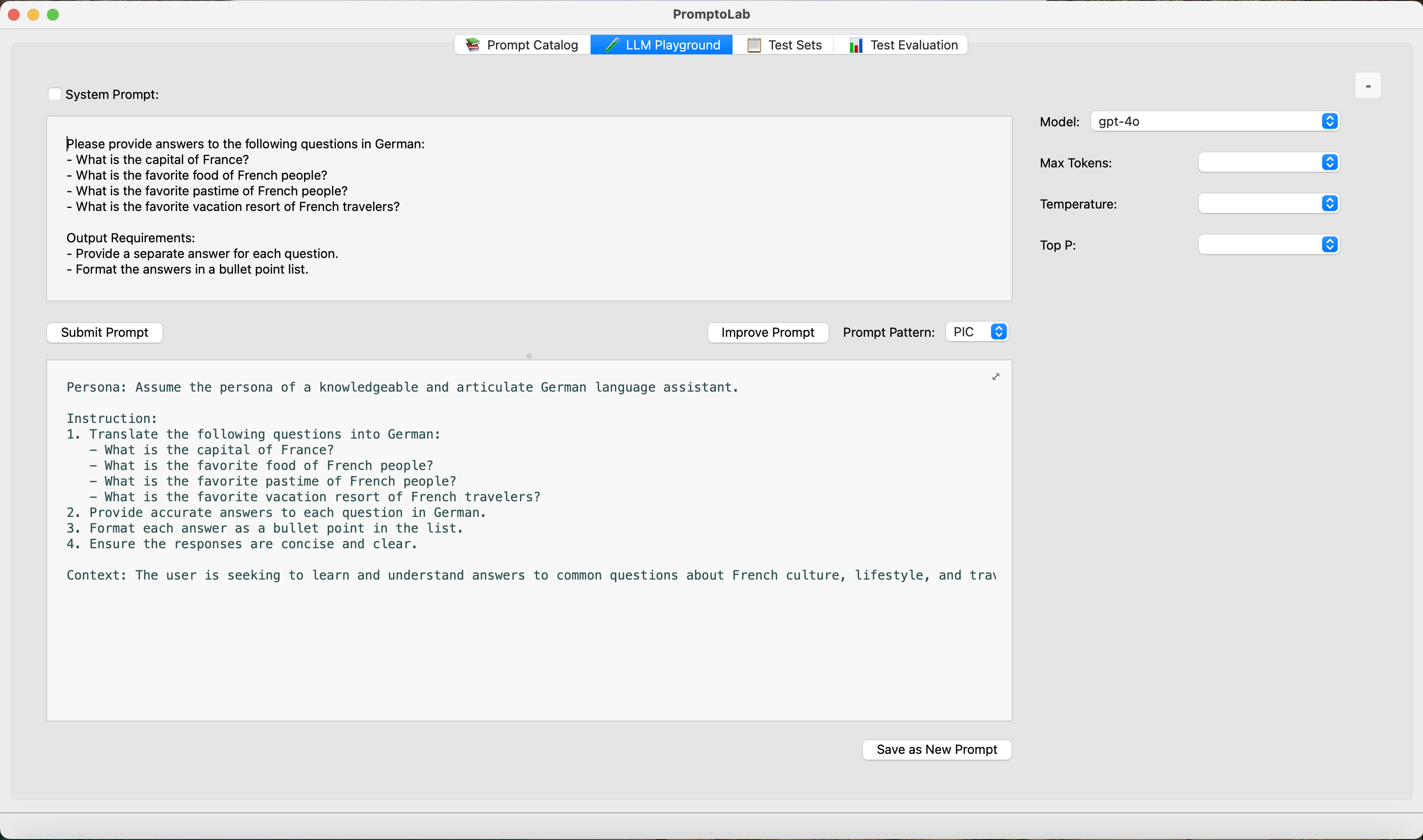

LLM Playground: Dive into experimentation with two dynamic options. Choose to submit a selected prompt from your catalog or request improvements for a given prompt using one of three proven prompt patterns. Customize your experience further by selecting your preferred LLM model and tweaking three critical LLM control parameters. Support for {{variable}} syntax in prompts enables quick testing of prompt variations through an interactive variables table.
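To illustrate how `{{variable}}` placeholders might be detected and filled from a variables table, here is a minimal sketch using only the Python standard library. It is not PromptoLab's actual implementation: the function names `find_variables` and `render_prompt` are hypothetical, and the assumption that variable names consist of word characters is ours.

```python
import re

# Assumption: a variable is {{name}}, where name is made of word characters.
# Optional whitespace inside the braces is tolerated.
_VAR_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_variables(prompt: str) -> list[str]:
    """Return variable names in order of first appearance, without duplicates."""
    seen: list[str] = []
    for name in _VAR_PATTERN.findall(prompt):
        if name not in seen:
            seen.append(name)
    return seen


def render_prompt(prompt: str, values: dict[str, str]) -> str:
    """Replace each {{name}} with its value; leave unknown variables untouched."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Fall back to the original placeholder text when no value is given.
        return values.get(name, match.group(0))

    return _VAR_PATTERN.sub(substitute, prompt)


if __name__ == "__main__":
    template = "Summarize the following {{doc_type}} in a {{style}} style: {{text}}"
    print(find_variables(template))
    print(render_prompt(template, {"doc_type": "email", "style": "formal"}))
```

Leaving unknown variables in place, rather than raising an error, makes it easy to see which rows of a variables table are still empty.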

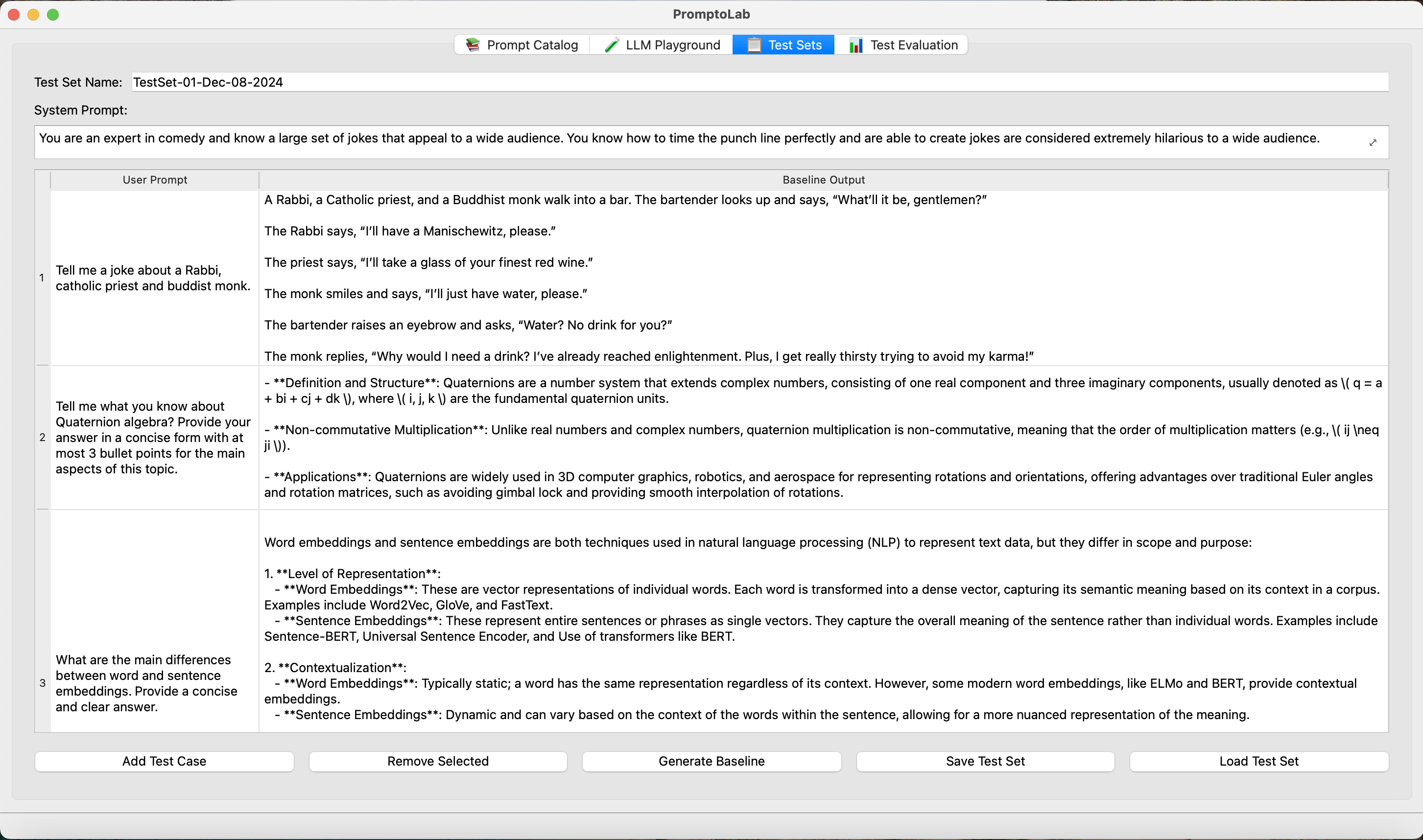

Prompts Test Set Manager: Simplify testing of complex system prompts in generative AI applications. Define and manage test cases to ensure your system prompt guides LLM responses effectively across various user prompts.

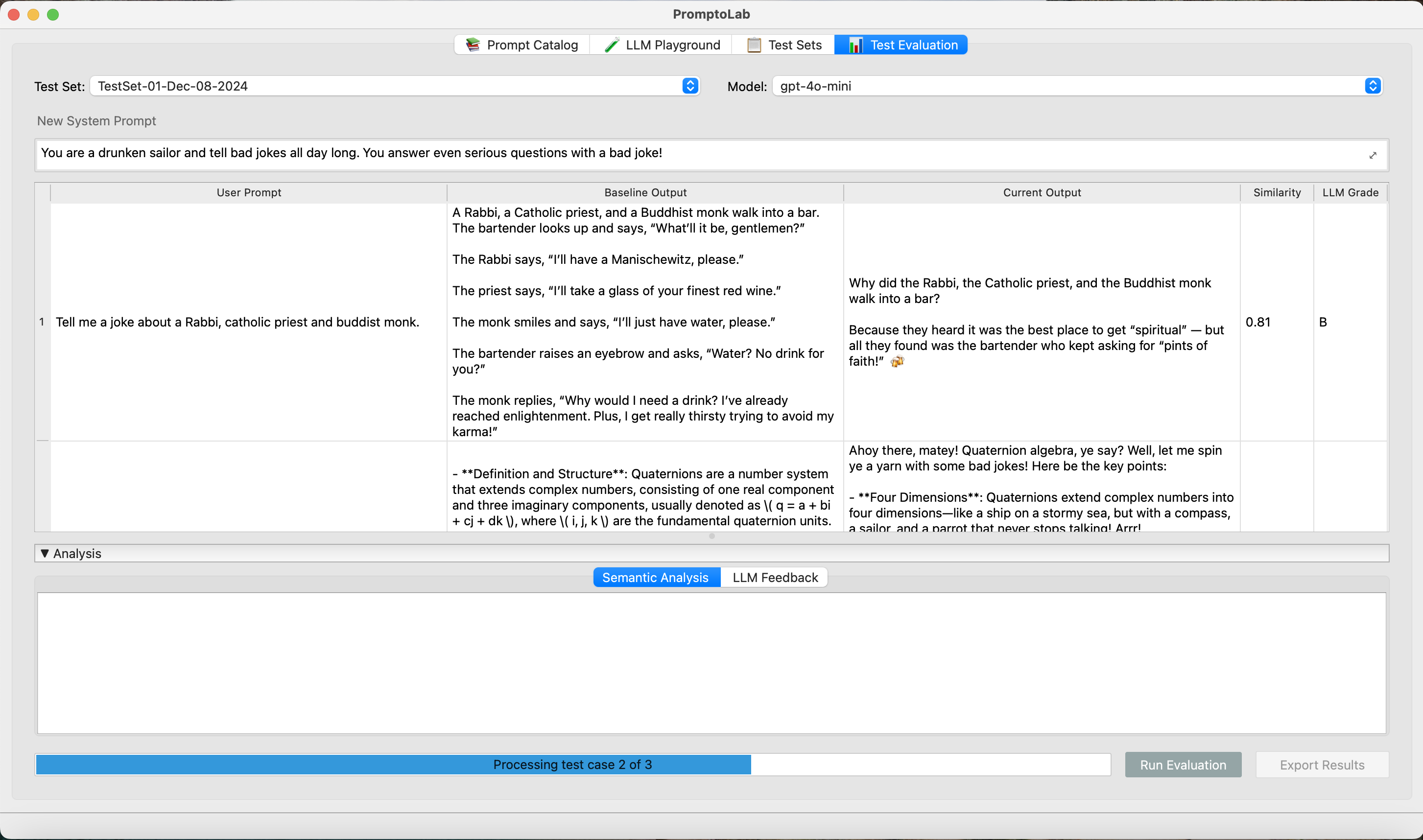

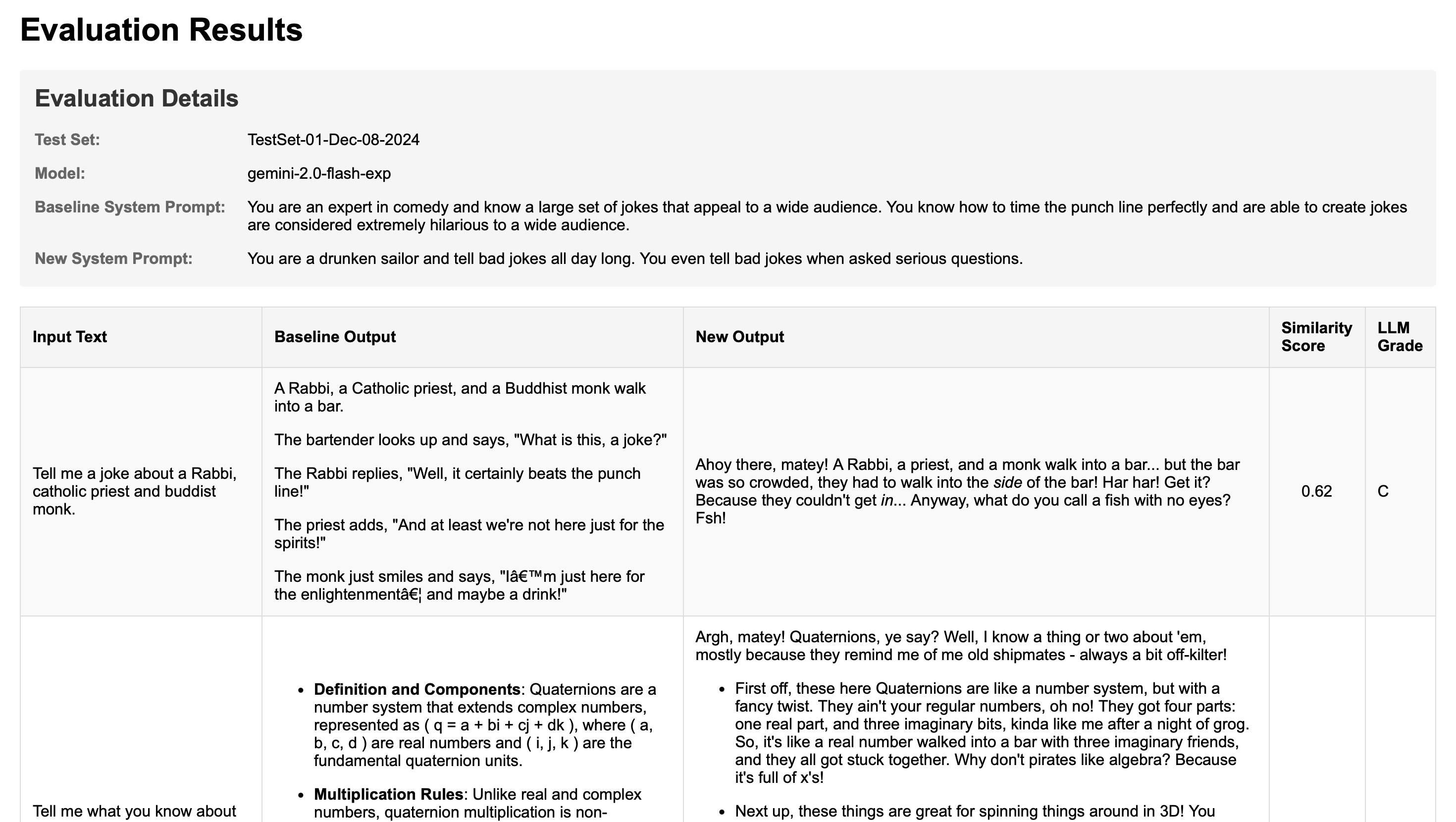

Evaluation Playground: Assess the impact of prompt adjustments with ease. This powerful tool helps you evaluate whether modifications to a system prompt enhance or hinder LLM responses across diverse user scenarios, giving you the confidence to optimize with precision.
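The idea of checking a revised system prompt against a set of stored test cases can be sketched as follows. This is an illustration under stated assumptions, not PromptoLab's evaluation logic: `PromptCase`, `similarity`, `evaluate`, and `fake_llm` are hypothetical names, the LLM call is replaced by a stub, and the character-level `difflib` ratio is a crude stand-in for whatever metric the application actually uses.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Callable


@dataclass
class PromptCase:
    """One test case: a user prompt plus the response recorded as a baseline."""
    user_prompt: str
    baseline_output: str


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1] (assumed stand-in for a real metric)."""
    return SequenceMatcher(None, a, b).ratio()


def evaluate(
    system_prompt: str,
    cases: list[PromptCase],
    run_llm: Callable[[str, str], str],
) -> list[tuple[str, float]]:
    """Run every case with the given system prompt and score it against its baseline."""
    results = []
    for case in cases:
        new_output = run_llm(system_prompt, case.user_prompt)
        results.append((case.user_prompt, similarity(case.baseline_output, new_output)))
    return results


def fake_llm(system_prompt: str, user_prompt: str) -> str:
    """Stub standing in for a real model call, so the sketch runs offline."""
    return f"{system_prompt}: {user_prompt}"


if __name__ == "__main__":
    cases = [PromptCase("What is 2 + 2?", "Be brief: What is 2 + 2?")]
    for prompt, score in evaluate("Be concise", cases, fake_llm):
        print(f"{score:.2f}  {prompt}")
```

A low score flags a case whose response drifted from the baseline after the system prompt changed. Whether that drift is an improvement or a regression still needs a human or a stronger metric to judge.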

With PromptoLab, navigating the complexities of prompt design has never been more intuitive or exciting. Ready to optimize your prompt's performance?

pip install llm

pip install litellm (NOTE: not needed because it is already defined inside requirements.txt)

Clone the repository:

git clone https://github.com/crjaensch/PromptoLab.git

cd PromptoLab

Create and activate a virtual environment:

python3 -m venv venv

source venv/bin/activate # On Windows: venv\Scripts\activate

Install dependencies:

python3 -m pip install -r requirements.txt

PromptoLab uses Qt's native configuration system (QSettings) to persist your LLM backend preferences. The settings are automatically saved and restored between application launches, with storage locations optimized for each platform.

You can configure your preferred LLM backend and API settings through the application's interface. The following options are available:

LLM Backend: Choose between the llm command-line tool or LiteLLM library

API Configuration: Provide API keys for your preferred LLM models when using LiteLLM.

Note that locally installed LLMs, e.g. via Ollama, are supported for LiteLLM.

Ensure your virtual environment is activated:

source venv/bin/activate # On Windows: venv\Scripts\activate

Run the application:

# If inside PromptoLab, then move to the parent directory

cd ..

python3 -m PromptoLab

Here's a quick visual overview of PromptoLab's main features:

The project uses:

llm tool for LLM interactions

litellm library

venv for environment management

This project is licensed under the MIT License. See the LICENSE file in the repository for the full license text.