electra pytorch

0.1.2

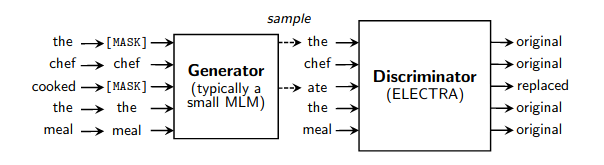

이 문서에 자세히 설명된 대로 언어 모델의 빠른 사전 학습을 위한 간단한 작업 래퍼입니다. 일반 마스크 언어 모델링에 비해 훈련 속도가 4배 빨라지고, 더 오랫동안 훈련하면 결국 더 나은 성능에 도달합니다. GLUE 결과를 재현하는 데 시간을 내어 주신 Erik Nijkamp에게 특별히 감사드립니다.

$ pip install electra-pytorch 다음 예에서는 pip 설치가 가능한 reformer-pytorch 사용합니다.

import torch

from torch import nn

from reformer_pytorch import ReformerLM

from electra_pytorch import Electra

# (1) instantiate the generator and discriminator, making sure that the generator is roughly a quarter to a half of the size of the discriminator

generator = ReformerLM (

num_tokens = 20000 ,

emb_dim = 128 ,

dim = 256 , # smaller hidden dimension

heads = 4 , # less heads

ff_mult = 2 , # smaller feed forward intermediate dimension

dim_head = 64 ,

depth = 12 ,

max_seq_len = 1024

)

discriminator = ReformerLM (

num_tokens = 20000 ,

emb_dim = 128 ,

dim = 1024 ,

dim_head = 64 ,

heads = 16 ,

depth = 12 ,

ff_mult = 4 ,

max_seq_len = 1024

)

# (2) weight tie the token and positional embeddings of generator and discriminator

generator . token_emb = discriminator . token_emb

generator . pos_emb = discriminator . pos_emb

# weight tie any other embeddings if available, token type embeddings, etc.

# (3) instantiate electra

trainer = Electra (

generator ,

discriminator ,

discr_dim = 1024 , # the embedding dimension of the discriminator

discr_layer = 'reformer' , # the layer name in the discriminator, whose output would be used for predicting token is still the same or replaced

mask_token_id = 2 , # the token id reserved for masking

pad_token_id = 0 , # the token id for padding

mask_prob = 0.15 , # masking probability for masked language modeling

mask_ignore_token_ids = [] # ids of tokens to ignore for mask modeling ex. (cls, sep)

)

# (4) train

data = torch . randint ( 0 , 20000 , ( 1 , 1024 ))

results = trainer ( data )

results . loss . backward ()

# after much training, the discriminator should have improved

torch . save ( discriminator , f'./pretrained-model.pt' )프레임워크가 자동으로 판별기의 숨겨진 출력을 가로채지 않도록 하려면 다음을 사용하여 판별기(추가 선형 [dim x 1] 사용)를 직접 전달할 수 있습니다.

import torch

from torch import nn

from reformer_pytorch import ReformerLM

from electra_pytorch import Electra

# (1) instantiate the generator and discriminator, making sure that the generator is roughly a quarter to a half of the size of the discriminator

generator = ReformerLM (

num_tokens = 20000 ,

emb_dim = 128 ,

dim = 256 , # smaller hidden dimension

heads = 4 , # less heads

ff_mult = 2 , # smaller feed forward intermediate dimension

dim_head = 64 ,

depth = 12 ,

max_seq_len = 1024

)

discriminator = ReformerLM (

num_tokens = 20000 ,

emb_dim = 128 ,

dim = 1024 ,

dim_head = 64 ,

heads = 16 ,

depth = 12 ,

ff_mult = 4 ,

max_seq_len = 1024 ,

return_embeddings = True

)

# (2) weight tie the token and positional embeddings of generator and discriminator

generator . token_emb = discriminator . token_emb

generator . pos_emb = discriminator . pos_emb

# weight tie any other embeddings if available, token type embeddings, etc.

# (3) instantiate electra

discriminator_with_adapter = nn . Sequential ( discriminator , nn . Linear ( 1024 , 1 ))

trainer = Electra (

generator ,

discriminator_with_adapter ,

mask_token_id = 2 , # the token id reserved for masking

pad_token_id = 0 , # the token id for padding

mask_prob = 0.15 , # masking probability for masked language modeling

mask_ignore_token_ids = [] # ids of tokens to ignore for mask modeling ex. (cls, sep)

)

# (4) train

data = torch . randint ( 0 , 20000 , ( 1 , 1024 ))

results = trainer ( data )

results . loss . backward ()

# after much training, the discriminator should have improved

torch . save ( discriminator , f'./pretrained-model.pt' )효과적인 훈련을 위해 생성기는 판별기 크기의 대략 1/4에서 최대 1/2이어야 합니다. 더 크면 생성기가 너무 좋아지고 적대적인 게임이 무너집니다. 이는 숨겨진 차원, 피드포워드 숨겨진 차원 및 논문의 관심 헤드 수를 줄임으로써 수행되었습니다.

$ python setup.py test $ mkdir data

$ cd data

$ pip3 install gdown

$ gdown --id 1EA5V0oetDCOke7afsktL_JDQ-ETtNOvx

$ tar -xf openwebtext.tar.xz

$ wget https://storage.googleapis.com/electra-data/vocab.txt

$ cd ..$ python pretraining/openwebtext/preprocess.py$ python pretraining/openwebtext/pretrain.py$ python examples/glue/download.py $ python examples/glue/run.py --model_name_or_path output/yyyy-mm-dd-hh-mm-ss/ckpt/200000 @misc { clark2020electra ,

title = { ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators } ,

author = { Kevin Clark and Minh-Thang Luong and Quoc V. Le and Christopher D. Manning } ,

year = { 2020 } ,

eprint = { 2003.10555 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CL }

}