A Java library to use the OpenAI API in the simplest possible way.

Simple-OpenAI is a Java HTTP client library for sending requests to and receiving responses from the OpenAI API. It exposes a consistent interface across all the services, yet as simple as you can find in other languages like Python or NodeJs. It's an unofficial library.

Simple-OpenAI uses the CleverClient library for HTTP communication, Jackson for JSON parsing, and Lombok to minimize boilerplate code, among other libraries.

Simple-OpenAI seeks to stay up to date with the most recent changes in OpenAI. Currently, it supports most of the existing features and will continue updating with future changes.

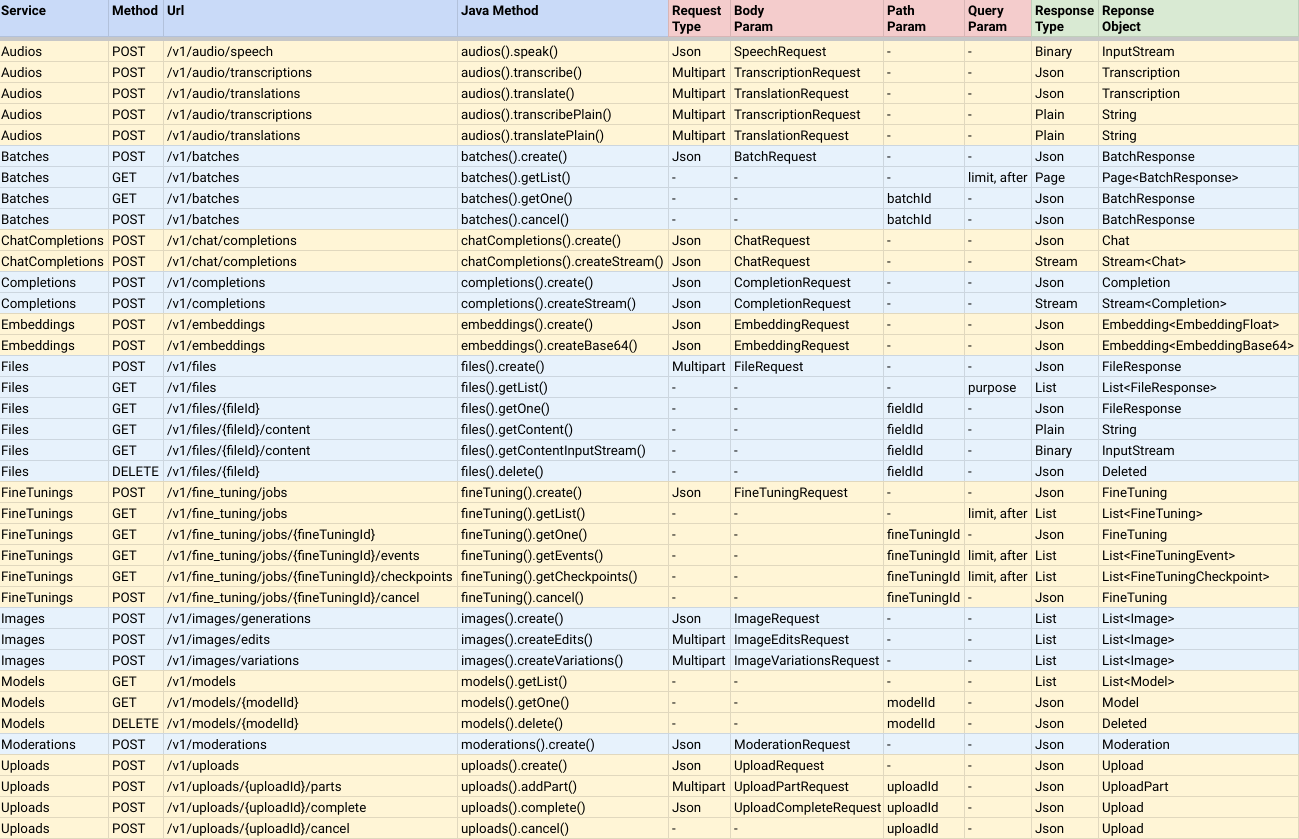

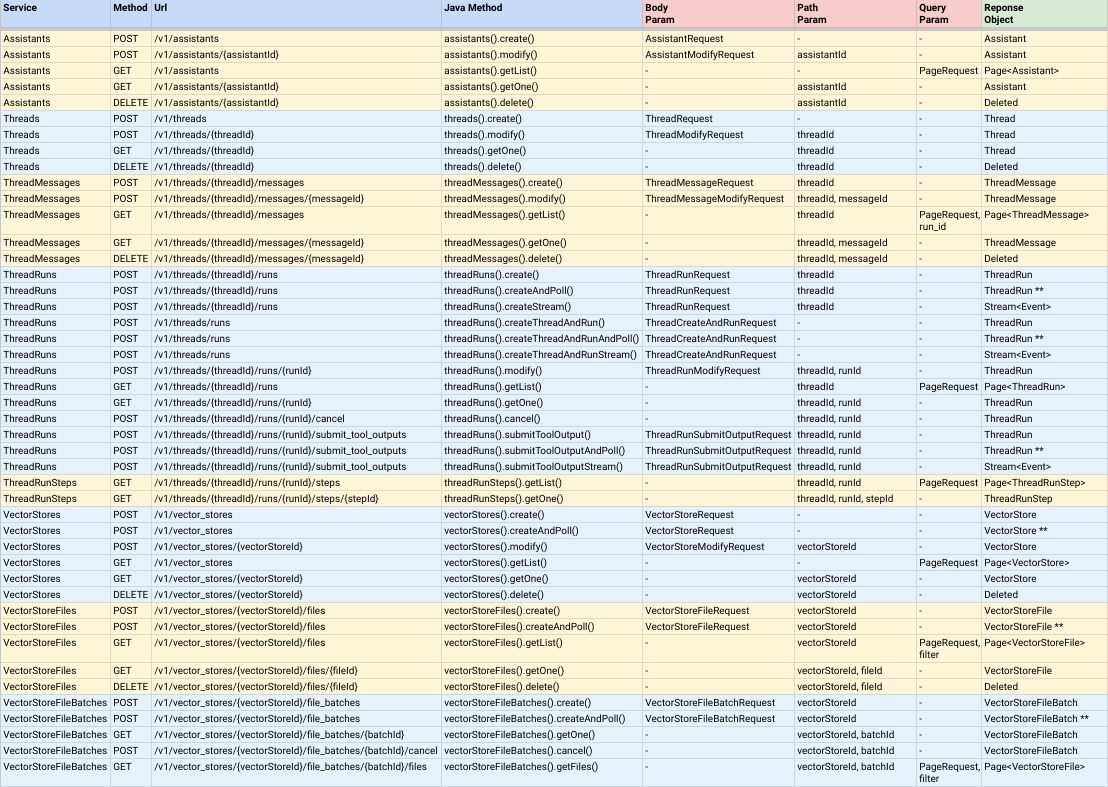

Full support for most of the OpenAI services.

Notes:

- Service method responses are `CompletableFuture<ResponseObject>`, which means they are asynchronous, but you can call the `join()` method to return the result value when it is complete.
- Methods whose names end with the suffix `AndPoll()` are synchronous instead: they block until a predicate function that you provide returns false.

You can install Simple-OpenAI by adding the following dependency to your Maven project:

```xml
<dependency>
    <groupId>io.github.sashirestela</groupId>
    <artifactId>simple-openai</artifactId>
    <version>[latest version]</version>
</dependency>
```

Or alternatively using Gradle:

```groovy
dependencies {
    implementation 'io.github.sashirestela:simple-openai:[latest version]'
}
```

This is the first step you need to take before using the services. You must provide at least your OpenAI API key (see the OpenAI documentation for more details). In the following example, we are getting the API key from an environment variable called `OPENAI_API_KEY`, which we created to hold it:

```java
var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .build();
```

Optionally, you can pass your OpenAI Organization ID, in case you have multiple organizations and want to identify usage by organization, and/or your OpenAI Project ID, in case you want to provide access to a single project. In the following example, we are using environment variables for those IDs:

```java
var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .organizationId(System.getenv("OPENAI_ORGANIZATION_ID"))
        .projectId(System.getenv("OPENAI_PROJECT_ID"))
        .build();
```

Optionally, as well, you can provide a custom Java `HttpClient` object if you want more options for the HTTP connection, such as executors, proxy, timeout, cookies, etc. (see the Java `HttpClient` documentation for more details). In the following example, we are providing a custom `HttpClient`:

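```java
import java.net.InetSocketAddress;
import java.net.ProxySelector;
import java.net.http.HttpClient;
import java.net.http.HttpClient.Redirect;
import java.net.http.HttpClient.Version;
import java.time.Duration;
import java.util.concurrent.Executors;

// Custom JDK HttpClient: HTTP/1.1, normal redirects, 20-second connect timeout,
// a 3-thread executor and an HTTP proxy.
var httpClient = HttpClient.newBuilder()
        .version(Version.HTTP_1_1)
        .followRedirects(Redirect.NORMAL)
        .connectTimeout(Duration.ofSeconds(20))
        .executor(Executors.newFixedThreadPool(3))
        .proxy(ProxySelector.of(new InetSocketAddress("proxy.example.com", 80)))
        .build();

// Hand the custom client to the SimpleOpenAI builder.
var openAI = SimpleOpenAI.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .httpClient(httpClient)
        .build();
```

After you have created a SimpleOpenAI object, you are ready to call its services to communicate with the OpenAI API. Let's see some examples.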
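As noted earlier, the service methods return a `CompletableFuture`, and the `...AndPoll()` methods block until a predicate you provide returns false. The following JDK-only sketch illustrates both ideas outside the library. `fakeServiceCall` and `pollWhile` are hypothetical helpers written for this illustration, not part of the Simple-OpenAI API, and the real `...AndPoll()` methods may wait between checks differently.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Predicate;
import java.util.function.Supplier;

// JDK-only sketch; fakeServiceCall and pollWhile are hypothetical helpers,
// not part of the Simple-OpenAI API.
final class AsyncPatternsSketch {

    // Stands in for a service method returning CompletableFuture<ResponseObject>.
    static CompletableFuture<String> fakeServiceCall(String input, ExecutorService executor) {
        return CompletableFuture.supplyAsync(() -> "echo: " + input, executor);
    }

    // Blocks the calling thread, re-fetching the current state while
    // keepPolling returns true, i.e. until the predicate returns false.
    static <T> T pollWhile(Supplier<T> fetch, Predicate<T> keepPolling, long intervalMillis) {
        T current = fetch.get();
        while (keepPolling.test(current)) {
            try {
                Thread.sleep(intervalMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                throw new IllegalStateException("Polling was interrupted", e);
            }
            current = fetch.get();
        }
        return current;
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            // Asynchronous call; join() waits for the result (a failure is
            // rethrown wrapped in a CompletionException).
            String result = fakeServiceCall("hello", executor).join();
            System.out.println(result);

            // Simulated job whose status advances on every poll.
            String[] statuses = {"queued", "in_progress", "completed"};
            int[] calls = {0};
            String finalStatus = pollWhile(
                    () -> statuses[Math.min(calls[0]++, statuses.length - 1)],
                    status -> !status.equals("completed"),
                    10);
            System.out.println(finalStatus);
        } finally {
            executor.shutdown();
        }
    }
}
```

Calling `join()` immediately, as the examples in this README do, trades concurrency for simplicity; when you don't want to block, you can chain `thenApply` or `thenAccept` on the returned future instead.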

Example of calling the Audio service to transform text into audio. We are requesting to receive the audio in binary format (`InputStream`):

```java
var speechRequest = SpeechRequest.builder()
        .model("tts-1")
        .input("Hello world, welcome to the AI universe!")
        .voice(Voice.ALLOY)
        .responseFormat(SpeechResponseFormat.MP3)
        .speed(1.0)
        .build();
var futureSpeech = openAI.audios().speak(speechRequest);
var speechResponse = futureSpeech.join(); // InputStream with the MP3 bytes
var speechFileName = "hello_world.mp3";   // example output path
// try-with-resources closes the audio stream and the file even if writing fails.
try (speechResponse; var audioFile = new FileOutputStream(speechFileName)) {
    audioFile.write(speechResponse.readAllBytes());
    System.out.println(audioFile.getChannel().size() + " bytes");
} catch (IOException e) {
    e.printStackTrace();
}
```

Example of calling the Audio service to transcribe audio into text. We are requesting the transcription in verbose JSON format, with timestamps at both word and segment granularity:

```java
var audioRequest = TranscriptionRequest.builder()
        .file(Paths.get("hello_audio.mp3"))
        .model("whisper-1")
        .responseFormat(AudioResponseFormat.VERBOSE_JSON)
        .temperature(0.2)
        .timestampGranularity(TimestampGranularity.WORD)
        .timestampGranularity(TimestampGranularity.SEGMENT)
        .build();
var futureAudio = openAI.audios().transcribe(audioRequest);
var audioResponse = futureAudio.join();
System.out.println(audioResponse);
```

Example of calling the Image service to generate two images in response to our prompt. We are requesting to receive the images' URLs, and we are printing them to the console:

```java
var imageRequest = ImageRequest.builder()
        .prompt("A cartoon of a hummingbird that is flying around a flower.")
        .n(2)
        .size(Size.X256)
        .responseFormat(ImageResponseFormat.URL)
        .model("dall-e-2")
        .build();
var futureImage = openAI.images().create(imageRequest);
var imageResponse = futureImage.join();
imageResponse.stream().forEach(img -> System.out.println("\n" + img.getUrl()));
```

Example of calling the Chat Completion service to ask a question and wait for a full answer. We are printing it to the console:

```java
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .message(SystemMessage.of("You are an expert in AI."))
        .message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))
        .temperature(0.0)
        .maxCompletionTokens(300)
        .build();
var futureChat = openAI.chatCompletions().create(chatRequest);
var chatResponse = futureChat.join();
System.out.println(chatResponse.firstContent());
```

Example of calling the Chat Completion service to ask a question and wait for an answer in partial message deltas. We are printing each delta to the console as soon as it arrives:

```java
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .message(SystemMessage.of("You are an expert in AI."))
        .message(UserMessage.of("Write a technical article about ChatGPT, no more than 100 words."))
        .temperature(0.0)
        .maxCompletionTokens(300)
        .build();
var futureChat = openAI.chatCompletions().createStream(chatRequest);
var chatResponse = futureChat.join();
// Skip chunks without choices or content, then print each text delta as it arrives.
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();
```

This functionality empowers the Chat Completion service to solve specific problems in our context. In this example, we are defining three functions, and we are entering a prompt that will require calling one of them (the `product` function). To define functions, we are using additional classes that implement the `Functional` interface. These classes define one field for each function argument, annotated to describe it, and each class must override the `execute` method with the function's logic. Note that we are using the `FunctionExecutor` utility class to enroll the functions and to execute the function selected by the `openai.chatCompletions()` call:

```java
public void demoCallChatWithFunctions() {
    // Enroll the functions that the model is allowed to call.
    var functionExecutor = new FunctionExecutor();
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("get_weather")
                    .description("Get the current weather of a location")
                    .functionalClass(Weather.class)
                    .strict(Boolean.TRUE)
                    .build());
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("product")
                    .description("Get the product of two numbers")
                    .functionalClass(Product.class)
                    .strict(Boolean.TRUE)
                    .build());
    functionExecutor.enrollFunction(
            FunctionDef.builder()
                    .name("run_alarm")
                    .description("Run an alarm")
                    .functionalClass(RunAlarm.class)
                    .strict(Boolean.TRUE)
                    .build());

    // First request: the model is expected to answer with a tool call to "product".
    var messages = new ArrayList<ChatMessage>();
    messages.add(UserMessage.of("What is the product of 123 and 456?"));
    var chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .messages(messages)
            .tools(functionExecutor.getToolFunctions())
            .build();
    var futureChat = openAI.chatCompletions().create(chatRequest);
    var chatResponse = futureChat.join();

    // Execute the selected function locally and append its result to the conversation.
    var chatMessage = chatResponse.firstMessage();
    var chatToolCall = chatMessage.getToolCalls().get(0);
    var result = functionExecutor.execute(chatToolCall.getFunction());
    messages.add(chatMessage);
    messages.add(ToolMessage.of(result.toString(), chatToolCall.getId()));

    // Second request: the model turns the function result into the final answer.
    chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .messages(messages)
            .tools(functionExecutor.getToolFunctions())
            .build();
    futureChat = openAI.chatCompletions().create(chatRequest);
    chatResponse = futureChat.join();
    System.out.println(chatResponse.firstContent());
}

public static class Weather implements Functional {
    @JsonPropertyDescription("City and state, for example: León, Guanajuato")
    @JsonProperty(required = true)
    public String location;

    @JsonPropertyDescription("The temperature unit, can be 'celsius' or 'fahrenheit'")
    @JsonProperty(required = true)
    public String unit;

    @Override
    public Object execute() {
        return Math.random() * 45; // dummy temperature for the demo
    }
}

public static class Product implements Functional {
    @JsonPropertyDescription("The multiplicand part of a product")
    @JsonProperty(required = true)
    public double multiplicand;

    @JsonPropertyDescription("The multiplier part of a product")
    @JsonProperty(required = true)
    public double multiplier;

    @Override
    public Object execute() {
        return multiplicand * multiplier;
    }
}

public static class RunAlarm implements Functional {
    @Override
    public Object execute() {
        return "DONE";
    }
}
```

Example of calling the Chat Completion service to allow the model to take in external images and answer questions about them:

```java
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .messages(List.of(
                UserMessage.of(List.of(
                        ContentPartText.of(
                                "What do you see in the image? Give in details in no more than 100 words."),
                        ContentPartImageUrl.of(ImageUrl.of(
                                "https://upload.wikimedia.org/wikipedia/commons/e/eb/Machu_Picchu%2C_Peru.jpg"))))))
        .temperature(0.0)
        .maxCompletionTokens(500)
        .build();
var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();
```

Example of calling the Chat Completion service to allow the model to take in local images and answer questions about them (check the `Base64Util` code in this repository):

```java
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-mini")
        .messages(List.of(
                UserMessage.of(List.of(
                        ContentPartText.of(
                                "What do you see in the image? Give in details in no more than 100 words."),
                        ContentPartImageUrl.of(ImageUrl.of(
                                Base64Util.encode("src/demo/resources/machupicchu.jpg", MediaType.IMAGE)))))))
        .temperature(0.0)
        .maxCompletionTokens(500)
        .build();
var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
        .map(Chat::firstContent)
        .forEach(System.out::print);
System.out.println();
```

Example of calling the Chat Completion service to generate a spoken audio response to a prompt, and to use audio inputs to prompt the model (check the `Base64Util` code in this repository):

```java
var messages = new ArrayList<ChatMessage>();
messages.add(SystemMessage.of("Respond in a short and concise way."));
messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(
        Base64Util.encode("src/demo/resources/question1.mp3", null), InputAudioFormat.MP3)))));
var chatRequest = ChatRequest.builder()
        .model("gpt-4o-audio-preview")
        .modality(Modality.TEXT)
        .modality(Modality.AUDIO)
        .audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))
        .messages(messages)
        .build();
var chatResponse = openAI.chatCompletions().create(chatRequest).join();
var audio = chatResponse.firstMessage().getAudio();
Base64Util.decode(audio.getData(), "src/demo/resources/answer1.mp3");
System.out.println("Answer 1: " + audio.getTranscript());

// Refer to the previous audio answer by its id, then ask a follow-up question.
messages.add(AssistantMessage.builder().audioId(audio.getId()).build());
messages.add(UserMessage.of(List.of(ContentPartInputAudio.of(InputAudio.of(
        Base64Util.encode("src/demo/resources/question2.mp3", null), InputAudioFormat.MP3)))));
chatRequest = ChatRequest.builder()
        .model("gpt-4o-audio-preview")
        .modality(Modality.TEXT)
        .modality(Modality.AUDIO)
        .audio(Audio.of(Voice.ALLOY, AudioFormat.MP3))
        .messages(messages)
        .build();
chatResponse = openAI.chatCompletions().create(chatRequest).join();
audio = chatResponse.firstMessage().getAudio();
Base64Util.decode(audio.getData(), "src/demo/resources/answer2.mp3");
System.out.println("Answer 2: " + audio.getTranscript());
```

Example of calling the Chat Completion service to ensure the model will always generate responses that adhere to a JSON Schema defined through Java classes:

```java
public void demoCallChatWithStructuredOutputs() {
    var chatRequest = ChatRequest.builder()
            .model("gpt-4o-mini")
            .message(SystemMessage
                    .of("You are a helpful math tutor. Guide the user through the solution step by step."))
            .message(UserMessage.of("How can I solve 8x + 7 = -23"))
            // The JSON Schema is derived from the MathReasoning class below.
            .responseFormat(ResponseFormat.jsonSchema(JsonSchema.builder()
                    .name("MathReasoning")
                    .schemaClass(MathReasoning.class)
                    .build()))
            .build();
    var chatResponse = openAI.chatCompletions().createStream(chatRequest).join();
    chatResponse.filter(chatResp -> chatResp.getChoices().size() > 0 && chatResp.firstContent() != null)
            .map(Chat::firstContent)
            .forEach(System.out::print);
    System.out.println();
}

public static class MathReasoning {
    public List<Step> steps;
    public String finalAnswer;

    public static class Step {
        public String explanation;
        public String output;
    }
}
```

This example simulates a conversation chat through the command console and demonstrates the use of Chat Completion with streaming and function calling.
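The trickiest part of the demo that follows is `getResponse`, which rebuilds tool calls whose JSON arguments arrive split across several streamed chunks. The JDK-only sketch below shows the same accumulation idea, keyed by tool-call index instead of watching for index changes. It assumes, as the demo does, that every fragment carries the index of the tool call it belongs to and that only the first fragment of a call carries its id and function name. `ToolCallDelta` and `ToolCall` are hypothetical stand-ins, not Simple-OpenAI classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// JDK-only sketch; ToolCallDelta and ToolCall are hypothetical stand-ins,
// not Simple-OpenAI classes.
final class ToolCallAccumulatorSketch {

    // One streamed fragment of a tool call.
    static final class ToolCallDelta {
        final int index;        // position of the tool call within the response
        final String id;        // assumed present only in the first fragment
        final String name;      // assumed present only in the first fragment
        final String arguments; // a piece of the JSON arguments (may be empty)

        ToolCallDelta(int index, String id, String name, String arguments) {
            this.index = index;
            this.id = id;
            this.name = name;
            this.arguments = arguments;
        }
    }

    // A tool call rebuilt from all of its fragments.
    static final class ToolCall {
        final String id;
        final String name;
        final StringBuilder arguments = new StringBuilder();

        ToolCall(String id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Groups fragments by index (TreeMap keeps the calls in index order) and
    // concatenates their argument pieces in arrival order.
    static List<ToolCall> accumulate(List<ToolCallDelta> deltas) {
        Map<Integer, ToolCall> byIndex = new TreeMap<>();
        for (ToolCallDelta delta : deltas) {
            ToolCall call = byIndex.computeIfAbsent(delta.index, i -> new ToolCall(delta.id, delta.name));
            if (delta.arguments != null) {
                call.arguments.append(delta.arguments);
            }
        }
        return new ArrayList<>(byIndex.values());
    }

    public static void main(String[] args) {
        List<ToolCallDelta> chunks = List.of(
                new ToolCallDelta(0, "call_1", "getCurrentTemperature", ""),
                new ToolCallDelta(0, null, null, "{\"location\":\"Lima, Peru\","),
                new ToolCallDelta(0, null, null, "\"unit\":\"celsius\"}"),
                new ToolCallDelta(1, "call_2", "getRainProbability", ""),
                new ToolCallDelta(1, null, null, "{\"location\":\"Lima, Peru\"}"));
        for (ToolCall call : accumulate(chunks)) {
            System.out.println(call.id + " " + call.name + " " + call.arguments);
        }
    }
}
```

Because fragments are grouped by index, this variant would also tolerate fragments of different calls arriving interleaved, whereas the demo's approach assumes they arrive one call after another.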

You can see the full demo code as well as the results from running the demo code:

```java
package io.github.sashirestela.openai.demo;

import com.fasterxml.jackson.annotation.JsonProperty;
import com.fasterxml.jackson.annotation.JsonPropertyDescription;
import io.github.sashirestela.openai.SimpleOpenAI;
import io.github.sashirestela.openai.common.function.FunctionDef;
import io.github.sashirestela.openai.common.function.FunctionExecutor;
import io.github.sashirestela.openai.common.function.Functional;
import io.github.sashirestela.openai.common.tool.ToolCall;
import io.github.sashirestela.openai.domain.chat.Chat;
import io.github.sashirestela.openai.domain.chat.Chat.Choice;
import io.github.sashirestela.openai.domain.chat.ChatMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.AssistantMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.ResponseMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.ToolMessage;
import io.github.sashirestela.openai.domain.chat.ChatMessage.UserMessage;
import io.github.sashirestela.openai.domain.chat.ChatRequest;

import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

public class ConversationDemo {

    private SimpleOpenAI openAI;
    private FunctionExecutor functionExecutor;

    private int indexTool;
    private StringBuilder content;
    private StringBuilder functionArgs;

    public ConversationDemo() {
        openAI = SimpleOpenAI.builder().apiKey(System.getenv("OPENAI_API_KEY")).build();
    }

    public void prepareConversation() {
        List<FunctionDef> functionList = new ArrayList<>();
        functionList.add(FunctionDef.builder()
                .name("getCurrentTemperature")
                .description("Get the current temperature for a specific location")
                .functionalClass(CurrentTemperature.class)
                .strict(Boolean.TRUE)
                .build());
        functionList.add(FunctionDef.builder()
                .name("getRainProbability")
                .description("Get the probability of rain for a specific location")
                .functionalClass(RainProbability.class)
                .strict(Boolean.TRUE)
                .build());
        functionExecutor = new FunctionExecutor(functionList);
    }

    public void runConversation() {
        List<ChatMessage> messages = new ArrayList<>();
        var myMessage = System.console().readLine("\nWelcome! Write any message: ");
        messages.add(UserMessage.of(myMessage));
        while (!myMessage.toLowerCase().equals("exit")) {
            var chatStream = openAI.chatCompletions()
                    .createStream(ChatRequest.builder()
                            .model("gpt-4o-mini")
                            .messages(messages)
                            .tools(functionExecutor.getToolFunctions())
                            .temperature(0.2)
                            .stream(true)
                            .build())
                    .join();

            // Reset the per-response accumulators used by getResponse().
            indexTool = -1;
            content = new StringBuilder();
            functionArgs = new StringBuilder();

            var response = getResponse(chatStream);
            if (response.getMessage().getContent() != null) {
                messages.add(AssistantMessage.of(response.getMessage().getContent()));
            }
            if (response.getFinishReason().equals("tool_calls")) {
                // The model requested tools: run them and loop again without asking the user.
                messages.add(response.getMessage());
                var toolCalls = response.getMessage().getToolCalls();
                var toolMessages = functionExecutor.executeAll(toolCalls,
                        (toolCallId, result) -> ToolMessage.of(result, toolCallId));
                messages.addAll(toolMessages);
            } else {
                myMessage = System.console().readLine("\n\nWrite any message (or write 'exit' to finish): ");
                messages.add(UserMessage.of(myMessage));
            }
        }
    }

    // Rebuilds a complete Choice (text and/or tool calls) from the streamed chunks,
    // printing text deltas as they arrive.
    private Choice getResponse(Stream<Chat> chatStream) {
        var choice = new Choice();
        choice.setIndex(0);
        var chatMsgResponse = new ResponseMessage();
        List<ToolCall> toolCalls = new ArrayList<>();

        chatStream.forEach(responseChunk -> {
            var choices = responseChunk.getChoices();
            if (choices.size() > 0) {
                var innerChoice = choices.get(0);
                var delta = innerChoice.getMessage();
                if (delta.getRole() != null) {
                    chatMsgResponse.setRole(delta.getRole());
                }
                if (delta.getContent() != null && !delta.getContent().isEmpty()) {
                    content.append(delta.getContent());
                    System.out.print(delta.getContent());
                }
                // A new index starts a new tool call; otherwise the chunk carries more arguments.
                if (delta.getToolCalls() != null) {
                    var toolCall = delta.getToolCalls().get(0);
                    if (toolCall.getIndex() != indexTool) {
                        if (toolCalls.size() > 0) {
                            toolCalls.get(toolCalls.size() - 1).getFunction().setArguments(functionArgs.toString());
                            functionArgs = new StringBuilder();
                        }
                        toolCalls.add(toolCall);
                        indexTool++;
                    } else {
                        functionArgs.append(toolCall.getFunction().getArguments());
                    }
                }
                // The last chunk carries the finish reason: assemble the final message.
                if (innerChoice.getFinishReason() != null) {
                    if (content.length() > 0) {
                        chatMsgResponse.setContent(content.toString());
                    }
                    if (toolCalls.size() > 0) {
                        toolCalls.get(toolCalls.size() - 1).getFunction().setArguments(functionArgs.toString());
                        chatMsgResponse.setToolCalls(toolCalls);
                    }
                    choice.setMessage(chatMsgResponse);
                    choice.setFinishReason(innerChoice.getFinishReason());
                }
            }
        });
        return choice;
    }

    public static void main(String[] args) {
        var demo = new ConversationDemo();
        demo.prepareConversation();
        demo.runConversation();
    }

    public static class CurrentTemperature implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @JsonPropertyDescription("The temperature unit to use. Infer this from the user's location.")
        @JsonProperty(required = true)
        public String unit;

        @Override
        public Object execute() {
            // Dummy value between 10 and 40 degrees Celsius, converted if needed.
            double centigrades = Math.random() * (40.0 - 10.0) + 10.0;
            double fahrenheit = centigrades * 9.0 / 5.0 + 32.0;
            String shortUnit = unit.substring(0, 1).toUpperCase();
            return shortUnit.equals("C") ? centigrades : (shortUnit.equals("F") ? fahrenheit : 0.0);
        }
    }

    public static class RainProbability implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @Override
        public Object execute() {
            return Math.random() * 100; // dummy percentage
        }
    }

}
```

Welcome! Write any message: Hi, can you help me with some quetions about Lima, Peru?

Of course! What would you like to know about Lima, Peru?

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

### Brief About Lima, Peru

Lima, the capital city of Peru, is a bustling metropolis that blends modernity with rich historical heritage. Founded by Spanish conquistador Francisco Pizarro in 1535, Lima is known for its colonial architecture, vibrant culture, and delicious cuisine, particularly its world-renowned ceviche. The city is also a gateway to exploring Peru's diverse landscapes, from the coastal deserts to the Andean highlands and the Amazon rainforest.

### Current Weather in Lima, Peru

I'll check the current temperature and the probability of rain in Lima for you.

### Current Weather in Lima, Peru

- **Temperature:** Approximately 11.8°C

- **Probability of Rain:** Approximately 97.8%

### Three Tips for Traveling to Lima, Peru

1. **Explore the Historic Center:**

   - Visit the Plaza Mayor, the Government Palace, and the Cathedral of Lima. These landmarks offer a glimpse into Lima's colonial past and are UNESCO World Heritage Sites.

2. **Savor the Local Cuisine:**

   - Don't miss out on trying ceviche, a traditional Peruvian dish made from fresh raw fish marinated in citrus juices. Also, explore the local markets and try other Peruvian delicacies.

3. **Visit the Coastal Districts:**

   - Head to Miraflores and Barranco for stunning ocean views, vibrant nightlife, and cultural experiences. These districts are known for their beautiful parks, cliffs, and bohemian atmosphere.

Enjoy your trip to Lima! If you have any more questions, feel free to ask.

Write any message (or write 'exit' to finish): exit

This example simulates a conversation chat through the command console and demonstrates the use of the latest features of the Assistants API V2.
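The demo that follows reacts to each streamed run event by name, and when a run reaches `requires_action` it submits tool outputs and handles the resulting stream recursively. The JDK-only sketch below reproduces that control flow with plain strings. `SimpleEvent` and the `submitToolOutputs` function are hypothetical stand-ins (not CleverClient's `Event` nor the library's `submitToolOutputStream`), and the event names are assumed to follow the OpenAI Assistants streaming names such as `thread.message.delta`.

```java
import java.util.List;
import java.util.function.Function;

// JDK-only sketch; SimpleEvent and the submitToolOutputs function are
// hypothetical stand-ins, not CleverClient or Simple-OpenAI types.
final class RunEventDispatchSketch {

    // A streamed event: its name plus a simplified string payload.
    static final class SimpleEvent {
        final String name;
        final String data;

        SimpleEvent(String name, String data) {
            this.name = name;
            this.data = data;
        }
    }

    // Handles one event stream, writing what a console would show into out.
    // On "thread.run.requires_action" it asks submitToolOutputs (given the run id
    // carried in event.data) for the follow-up stream and handles it recursively.
    static void handle(List<SimpleEvent> events,
                       Function<String, List<SimpleEvent>> submitToolOutputs,
                       StringBuilder out) {
        for (SimpleEvent event : events) {
            switch (event.name) {
                case "thread.run.created":
                case "thread.run.completed":
                    out.append('[').append(event.name).append("]\n");
                    break;
                case "thread.run.requires_action":
                    out.append('[').append(event.name).append("]\n");
                    handle(submitToolOutputs.apply(event.data), submitToolOutputs, out);
                    break;
                case "thread.message.delta":
                    out.append(event.data); // partial assistant text
                    break;
                case "thread.message.completed":
                    out.append('\n');
                    break;
                default:
                    break; // other events are ignored in this sketch
            }
        }
    }

    public static void main(String[] args) {
        List<SimpleEvent> firstStream = List.of(
                new SimpleEvent("thread.run.created", ""),
                new SimpleEvent("thread.message.delta", "Let me check the weather. "),
                new SimpleEvent("thread.run.requires_action", "run_1"));
        // Simulated follow-up stream returned after the tool outputs are submitted.
        Function<String, List<SimpleEvent>> submit = runId -> List.of(
                new SimpleEvent("thread.message.delta", "It is mild and cloudy."),
                new SimpleEvent("thread.message.completed", ""),
                new SimpleEvent("thread.run.completed", ""));
        StringBuilder out = new StringBuilder();
        handle(firstStream, submit, out);
        System.out.print(out);
    }
}
```

Recursing on the follow-up stream keeps the handler small: a run that requires action several times in a row simply nests one level deeper each time.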

You can see the full demo code as well as the results from running the demo code:

```java
package io.github.sashirestela.openai.demo;

import com.fasterxml.jackson.annotation.JsonProperty;
import com.fasterxml.jackson.annotation.JsonPropertyDescription;
import io.github.sashirestela.cleverclient.Event;
import io.github.sashirestela.openai.SimpleOpenAI;
import io.github.sashirestela.openai.common.content.ContentPart.ContentPartTextAnnotation;
import io.github.sashirestela.openai.common.function.FunctionDef;
import io.github.sashirestela.openai.common.function.FunctionExecutor;
import io.github.sashirestela.openai.common.function.Functional;
import io.github.sashirestela.openai.domain.assistant.AssistantRequest;
import io.github.sashirestela.openai.domain.assistant.AssistantTool;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageDelta;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadMessageRole;
import io.github.sashirestela.openai.domain.assistant.ThreadRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRun;
import io.github.sashirestela.openai.domain.assistant.ThreadRun.RunStatus;
import io.github.sashirestela.openai.domain.assistant.ThreadRunRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRunSubmitOutputRequest;
import io.github.sashirestela.openai.domain.assistant.ThreadRunSubmitOutputRequest.ToolOutput;
import io.github.sashirestela.openai.domain.assistant.ToolResourceFull;
import io.github.sashirestela.openai.domain.assistant.ToolResourceFull.FileSearch;
import io.github.sashirestela.openai.domain.assistant.VectorStoreRequest;
import io.github.sashirestela.openai.domain.assistant.events.EventName;
import io.github.sashirestela.openai.domain.file.FileRequest;
import io.github.sashirestela.openai.domain.file.FileRequest.PurposeType;

import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

public class ConversationV2Demo {

    private SimpleOpenAI openAI;
    private String fileId;
    private String vectorStoreId;
    private FunctionExecutor functionExecutor;
    private String assistantId;
    private String threadId;

    public ConversationV2Demo() {
        openAI = SimpleOpenAI.builder().apiKey(System.getenv("OPENAI_API_KEY")).build();
    }

    public void prepareConversation() {
        // Local functions the assistant is allowed to call.
        List<FunctionDef> functionList = new ArrayList<>();
        functionList.add(FunctionDef.builder()
                .name("getCurrentTemperature")
                .description("Get the current temperature for a specific location")
                .functionalClass(CurrentTemperature.class)
                .strict(Boolean.TRUE)
                .build());
        functionList.add(FunctionDef.builder()
                .name("getRainProbability")
                .description("Get the probability of rain for a specific location")
                .functionalClass(RainProbability.class)
                .strict(Boolean.TRUE)
                .build());
        functionExecutor = new FunctionExecutor(functionList);

        // Upload a file and index it in a vector store for the file search tool.
        var file = openAI.files()
                .create(FileRequest.builder()
                        .file(Paths.get("src/demo/resources/mistral-ai.txt"))
                        .purpose(PurposeType.ASSISTANTS)
                        .build())
                .join();
        fileId = file.getId();
        System.out.println("File was created with id: " + fileId);

        var vectorStore = openAI.vectorStores()
                .createAndPoll(VectorStoreRequest.builder()
                        .fileId(fileId)
                        .build());
        vectorStoreId = vectorStore.getId();
        System.out.println("Vector Store was created with id: " + vectorStoreId);

        // Create the assistant with both the functions and file search enabled.
        var assistant = openAI.assistants()
                .create(AssistantRequest.builder()
                        .name("World Assistant")
                        .model("gpt-4o")
                        .instructions("You are a skilled tutor on geo-politic topics.")
                        .tools(functionExecutor.getToolFunctions())
                        .tool(AssistantTool.fileSearch())
                        .toolResources(ToolResourceFull.builder()
                                .fileSearch(FileSearch.builder()
                                        .vectorStoreId(vectorStoreId)
                                        .build())
                                .build())
                        .temperature(0.2)
                        .build())
                .join();
        assistantId = assistant.getId();
        System.out.println("Assistant was created with id: " + assistantId);

        var thread = openAI.threads().create(ThreadRequest.builder().build()).join();
        threadId = thread.getId();
        System.out.println("Thread was created with id: " + threadId);
        System.out.println();
    }

    public void runConversation() {
        var myMessage = System.console().readLine("\nWelcome! Write any message: ");
        while (!myMessage.toLowerCase().equals("exit")) {
            openAI.threadMessages()
                    .create(threadId, ThreadMessageRequest.builder()
                            .role(ThreadMessageRole.USER)
                            .content(myMessage)
                            .build())
                    .join();
            var runStream = openAI.threadRuns()
                    .createStream(threadId, ThreadRunRequest.builder()
                            .assistantId(assistantId)
                            .parallelToolCalls(Boolean.FALSE)
                            .build())
                    .join();
            handleRunEvents(runStream);
            myMessage = System.console().readLine("\nWrite any message (or write 'exit' to finish): ");
        }
    }

    private void handleRunEvents(Stream<Event> runStream) {
        runStream.forEach(event -> {
            switch (event.getName()) {
                case EventName.THREAD_RUN_CREATED:
                case EventName.THREAD_RUN_COMPLETED:
                case EventName.THREAD_RUN_REQUIRES_ACTION:
                    var run = (ThreadRun) event.getData();
                    System.out.println("=====>> Thread Run: id=" + run.getId() + ", status=" + run.getStatus());
                    if (run.getStatus().equals(RunStatus.REQUIRES_ACTION)) {
                        // Run the requested functions, submit their outputs and follow the new stream.
                        var toolCalls = run.getRequiredAction().getSubmitToolOutputs().getToolCalls();
                        var toolOutputs = functionExecutor.executeAll(toolCalls,
                                (toolCallId, result) -> ToolOutput.builder()
                                        .toolCallId(toolCallId)
                                        .output(result)
                                        .build());
                        var runSubmitToolStream = openAI.threadRuns()
                                .submitToolOutputStream(threadId, run.getId(), ThreadRunSubmitOutputRequest.builder()
                                        .toolOutputs(toolOutputs)
                                        .stream(true)
                                        .build())
                                .join();
                        handleRunEvents(runSubmitToolStream);
                    }
                    break;
                case EventName.THREAD_MESSAGE_DELTA:
                    // Print each text fragment as soon as it arrives.
                    var msgDelta = (ThreadMessageDelta) event.getData();
                    var content = msgDelta.getDelta().getContent().get(0);
                    if (content instanceof ContentPartTextAnnotation) {
                        var textContent = (ContentPartTextAnnotation) content;
                        System.out.print(textContent.getText().getValue());
                    }
                    break;
                case EventName.THREAD_MESSAGE_COMPLETED:
                    System.out.println();
                    break;
                default:
                    break;
            }
        });
    }

    public void cleanConversation() {
        var deletedFile = openAI.files().delete(fileId).join();
        var deletedVectorStore = openAI.vectorStores().delete(vectorStoreId).join();
        var deletedAssistant = openAI.assistants().delete(assistantId).join();
        var deletedThread = openAI.threads().delete(threadId).join();
        System.out.println("File was deleted: " + deletedFile.getDeleted());
        System.out.println("Vector Store was deleted: " + deletedVectorStore.getDeleted());
        System.out.println("Assistant was deleted: " + deletedAssistant.getDeleted());
        System.out.println("Thread was deleted: " + deletedThread.getDeleted());
    }

    public static void main(String[] args) {
        var demo = new ConversationV2Demo();
        demo.prepareConversation();
        demo.runConversation();
        demo.cleanConversation();
    }

    public static class CurrentTemperature implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @JsonPropertyDescription("The temperature unit to use. Infer this from the user's location.")
        @JsonProperty(required = true)
        public String unit;

        @Override
        public Object execute() {
            // Dummy value between 10 and 40 degrees Celsius, converted if needed.
            double centigrades = Math.random() * (40.0 - 10.0) + 10.0;
            double fahrenheit = centigrades * 9.0 / 5.0 + 32.0;
            String shortUnit = unit.substring(0, 1).toUpperCase();
            return shortUnit.equals("C") ? centigrades : (shortUnit.equals("F") ? fahrenheit : 0.0);
        }
    }

    public static class RainProbability implements Functional {
        @JsonPropertyDescription("The city and state, e.g., San Francisco, CA")
        @JsonProperty(required = true)
        public String location;

        @Override
        public Object execute() {
            return Math.random() * 100; // dummy percentage
        }
    }

}
```

File was created with id: file-oDFIF7o4SwuhpwBNnFIILhMK

Vector Store was created with id: vs_lG1oJmF2s5wLhqHUSeJpELMr

Assistant was created with id: asst_TYS5cZ05697tyn3yuhDrCCIv

Thread was created with id: thread_33n258gFVhZVIp88sQKuqMvg

Welcome! Write any message: Hello

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=QUEUED

Hello! How can I assist you today?

=====>> Thread Run: id=run_nihN6dY0uyudsORg4xyUvQ5l, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something brief about Lima Peru, then tell me how's the weather there right now. Finally give me three tips to travel there.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=QUEUED

Lima, the capital city of Peru, is located on the country's arid Pacific coast. It's known for its vibrant culinary scene, rich history, and as a cultural hub with numerous museums, colonial architecture, and remnants of pre-Columbian civilizations. This bustling metropolis serves as a key gateway to visiting Peru’s more famous attractions, such as Machu Picchu and the Amazon rainforest.

Let me find the current weather conditions in Lima for you, and then I'll provide three travel tips.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=REQUIRES_ACTION

### Current Weather in Lima, Peru:

- **Temperature:** 12.8°C

- **Rain Probability:** 82.7%

### Three Travel Tips for Lima, Peru:

1. **Best Time to Visit:** Plan your trip during the dry season, from May to September, which offers clearer skies and milder temperatures. This period is particularly suitable for outdoor activities and exploring the city comfortably.

2. **Local Cuisine:** Don't miss out on tasting the local Peruvian dishes, particularly the ceviche, which is renowned worldwide. Lima is also known as the gastronomic capital of South America, so indulge in the wide variety of dishes available.

3. **Cultural Attractions:** Allocate enough time to visit Lima's rich array of museums, such as the Larco Museum, which showcases pre-Columbian art, and the historical center which is a UNESCO World Heritage Site. Moreover, exploring districts like Miraflores and Barranco can provide insights into the modern and bohemian sides of the city.

Enjoy planning your trip to Lima! If you need more information or help, feel free to ask.

=====>> Thread Run: id=run_QheimPyP5UK6FtmH5obon0fB, status=COMPLETED

Write any message (or write 'exit' to finish): Tell me something about the Mistral company

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=QUEUED

Mistral AI is a French company that specializes in selling artificial intelligence products. It was established in April 2023 by former employees of Meta Platforms and Google DeepMind. Notably, the company secured a significant amount of funding, raising €385 million in October 2023, and achieved a valuation exceeding $2 billion by December of the same year.

The prime focus of Mistral AI is on developing and producing open-source large language models. This approach underscores the foundational role of open-source software as a counter to proprietary models. As of March 2024, Mistral AI has published two models, which are available in terms of weights, while three more models—categorized as Small, Medium, and Large—are accessible only through an API[1].

=====>> Thread Run: id=run_5u0t8kDQy87p5ouaTRXsCG8m, status=COMPLETED

Write any message (or write 'exit' to finish): exit

File was deleted: true

Vector Store was deleted: true

Assistant was deleted: true

Thread was deleted: true

In this example, you can see the code to establish a speech-to-speech conversation between you and the model using your microphone and your speaker. See the full code at:

RealtimeDemo.java

Simple-OpenAI can be used with additional providers that are compatible with the OpenAI API. At this moment, the following additional providers are supported:

- Azure OpenAI
- Anyscale

Azure OpenAI is supported by Simple-OpenAI. We can use the class `SimpleOpenAIAzure`, which extends the class `BaseSimpleOpenAI`, to start using this provider.

var openai = SimpleOpenAIAzure.builder()
        .apiKey(System.getenv("AZURE_OPENAI_API_KEY"))
        .baseUrl(System.getenv("AZURE_OPENAI_BASE_URL")) // Including resourceName and deploymentId
        .apiVersion(System.getenv("AZURE_OPENAI_API_VERSION"))
        //.httpClient(customHttpClient) Optionally you could pass a custom HttpClient
        .build();

Azure OpenAI is powered by a diverse set of models with different capabilities, and it requires a separate deployment for each model. Model availability varies by region and cloud. See more details about Azure OpenAI models.
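Because the base URL embeds both the resource name and the deployment, and every model needs its own deployment, switching models means switching base URLs. The sketch below is a hypothetical helper (`azureBaseUrl` is not part of simple-openai) that assembles the URL from its two parts, assuming the `https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME` shape given in the Azure demo instructions of this README; the resource and deployment names are placeholders.

```java
import java.net.URI;

/** Hypothetical helper, not a simple-openai API: composes an Azure OpenAI base URL. */
final class AzureBaseUrlSketch {

    /** Builds https://{resourceName}.openai.azure.com/openai/deployments/{deploymentName}. */
    static String azureBaseUrl(String resourceName, String deploymentName) {
        if (resourceName == null || resourceName.isBlank()
                || deploymentName == null || deploymentName.isBlank()) {
            throw new IllegalArgumentException("resourceName and deploymentName are required");
        }
        String url = "https://" + resourceName + ".openai.azure.com/openai/deployments/" + deploymentName;
        // URI.create throws IllegalArgumentException for characters not legal in a URI (e.g. spaces).
        URI.create(url);
        return url;
    }

    public static void main(String[] args) {
        // Placeholder names: one deployment per model, so one base URL per model.
        System.out.println(azureBaseUrl("my-resource", "gpt-4o-deployment"));
    }
}
```

The returned string could be passed to `.baseUrl(...)` in the builder above in place of the `AZURE_OPENAI_BASE_URL` variable.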

Currently, we support only the following services:

- `chatCompletionService` (text generation, streaming, function calling, vision, structured outputs)
- `fileService` (upload files)

Anyscale is supported by Simple-OpenAI. We can use the class `SimpleOpenAIAnyscale`, which extends the class `BaseSimpleOpenAI`, to start using this provider.

var openai = SimpleOpenAIAnyscale.builder()
        .apiKey(System.getenv("ANYSCALE_API_KEY"))
        //.baseUrl(customUrl) Optionally you could pass a custom baseUrl
        //.httpClient(customHttpClient) Optionally you could pass a custom HttpClient
        .build();

Currently, we support only the `chatCompletionService` service. It has been tested with the *Mistral* model.

Examples for each OpenAI service have been created in the `demo` folder, and you can follow the next steps to run them:

Clone this repository:

git clone https://github.com/sashirestela/simple-openai.git

cd simple-openai

Build the project:

mvn clean install

Create an environment variable for your OpenAI API key:

export OPENAI_API_KEY=<here goes your api key>

Grant execute permission to the script file:

chmod +x rundemo.sh

Run the examples:

./rundemo.sh <demo> [debug]

Where:

`<demo>` is mandatory and must be one of the values:

`[debug]` is optional and creates the `demo.log` file, where you can see the log details for each run.

For example, to run the chat demo with a log file:

./rundemo.sh Chat debug

Instructions for the Azure OpenAI demo

The recommended models to run this demo are:

See the Azure OpenAI docs for more details: Azure OpenAI documentation. Once you have the deployment URL and the API key, set the following environment variables:

export AZURE_OPENAI_BASE_URL=<https://YOUR_RESOURCE_NAME.openai.azure.com/openai/deployments/YOUR_DEPLOYMENT_NAME>

export AZURE_OPENAI_API_KEY=<here goes your regional API key>

export AZURE_OPENAI_API_VERSION=<for example: 2024-08-01-preview>
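Before running the Azure demo, it can help to fail fast when one of these three variables is unset. The following is a minimal sketch, not part of the demo scripts: the hypothetical `missing` method takes the environment as a `Map` (pass `System.getenv()` in practice) so it can be checked without touching real variables, and it reports every absent or blank name at once.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Sketch: report which required environment variables are absent or blank. */
final class RequiredEnvSketch {

    // The variable names used by the Azure OpenAI demo above.
    static final List<String> AZURE_VARS = List.of(
            "AZURE_OPENAI_BASE_URL", "AZURE_OPENAI_API_KEY", "AZURE_OPENAI_API_VERSION");

    /** Returns, in order, the names from {@code required} that are missing or blank in {@code env}. */
    static List<String> missing(Map<String, String> env, List<String> required) {
        return required.stream()
                .filter(name -> {
                    String value = env.get(name);
                    return value == null || value.isBlank();
                })
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> absent = missing(System.getenv(), AZURE_VARS);
        if (!absent.isEmpty()) {
            System.err.println("Missing environment variables: " + String.join(", ", absent));
            System.exit(1);
        }
        System.out.println("All Azure OpenAI variables are set.");
    }
}
```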

Note that some models may not be available in all regions. If you have trouble finding a model, try a different region. The API keys are regional (per cognitive account). If you provision multiple models in the same region, they will share the same API key (actually, there are two keys per region to support alternate key rotation).
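Keeping both regional keys lets clients move to one key while the other is regenerated. The class below is a hypothetical illustration of that rotation (`RegionalKeys` is not an API of simple-openai or of Azure); the value of `activeKey()` would be what you pass to `.apiKey(...)` when building the client.

```java
import java.util.Objects;

/** Hypothetical holder for the two API keys of one Azure region. */
final class RegionalKeys {

    private final String[] keys = new String[2];
    private int active = 0;

    RegionalKeys(String key1, String key2) {
        keys[0] = Objects.requireNonNull(key1, "key1");
        keys[1] = Objects.requireNonNull(key2, "key2");
    }

    /** Key that new clients should use; synchronized so readers see a consistent value. */
    synchronized String activeKey() {
        return keys[active];
    }

    /** Switches to the other key, e.g. before regenerating the one currently in use. */
    synchronized void rotate() {
        active = 1 - active;
    }

    /** Stores a regenerated value for the key that is currently not in use. */
    synchronized void replaceInactive(String newKey) {
        keys[1 - active] = Objects.requireNonNull(newKey, "newKey");
    }

    public static void main(String[] args) {
        var keys = new RegionalKeys("key-1", "key-2");
        System.out.println(keys.activeKey()); // key-1
        keys.rotate();                        // clients move to key-2
        keys.replaceInactive("key-1-regenerated");
        keys.rotate();                        // clients move back to the regenerated key
        System.out.println(keys.activeKey()); // key-1-regenerated
    }
}
```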

Read our contributing guide to learn and understand how to contribute to this project.

Simple-OpenAI is licensed under the MIT License. See the LICENSE file for more information.

List of the main users of our library:

Thank you for using simple-openai. If you find this project valuable, there are a few ways you can show us your love, preferably all of them: