นี่คือ repo สำหรับการสำรวจ TMLR ของเรารวมมุมมองของ NLP และวิศวกรรมซอฟต์แวร์: การสำรวจรูปแบบภาษาสำหรับรหัส - การทบทวนที่ครอบคลุมของการวิจัย LLM สำหรับรหัส ผลงานในแต่ละหมวดหมู่ได้รับคำสั่งตามลำดับเวลา หากคุณมีความเข้าใจขั้นพื้นฐานเกี่ยวกับการเรียนรู้ของเครื่อง แต่ยังใหม่กับ NLP เรายังให้รายการการอ่านที่แนะนำในส่วนที่ 9

[2024/11/28] เอกสารเด่น:

การเพิ่มประสิทธิภาพการตั้งค่าสำหรับการให้เหตุผลกับคำติชมหลอกจากมหาวิทยาลัยเทคโนโลยีนันง

Scribeagent: ไปยังตัวแทนเว็บเฉพาะทางที่ใช้ข้อมูลเวิร์กโฟลว์ระดับการผลิตจาก Scribe

การเขียนโปรแกรมที่ขับเคลื่อนด้วยการวางแผน: เวิร์กโฟลว์การเขียนโปรแกรมแบบจำลองภาษาขนาดใหญ่จากมหาวิทยาลัยเมลเบิร์น

เกณฑ์มาตรฐานการแปลระดับที่เก็บของการกำหนดเป้าหมายสนิมจากมหาวิทยาลัยซุนยัตเซ็น

การใช้ประโยชน์จากประสบการณ์ก่อนหน้านี้: ฐานความรู้เสริมที่ขยายได้สำหรับข้อความถึง SQL จากมหาวิทยาลัยวิทยาศาสตร์และเทคโนโลยีของจีน

CodexEmbed: ตระกูล Embedding Generalist สำหรับการดึงรหัสหลายภาษาและหลายงานจากการวิจัย Salesforce AI

POSEC: Fortifying Code LLMS ด้วยการจัดตำแหน่งความปลอดภัยเชิงรุกจาก Purdue University

[2024/10/22] เราได้รวบรวมเอกสาร 70 ฉบับตั้งแต่เดือนกันยายนและตุลาคม 2567 ในบทความ WeChat หนึ่งบทความ

[2024/09/06] การสำรวจของเราได้รับการยอมรับสำหรับการตีพิมพ์โดยการทำธุรกรรมเกี่ยวกับการวิจัยการเรียนรู้ของเครื่อง (TMLR)

[2024/09/14] เราได้รวบรวมเอกสาร 57 ฉบับตั้งแต่เดือนสิงหาคม 2567 (รวมถึง 48 ที่ ACL 2024) ในบทความ WeChat หนึ่งบทความ

หากคุณพบว่ากระดาษหายไปจากที่เก็บนี้วางผิดพลาดในหมวดหมู่หรือขาดการอ้างอิงถึงข้อมูลวารสาร/การประชุมโปรดอย่าลังเลที่จะสร้างปัญหา หากคุณพบว่า repo นี้มีประโยชน์โปรดอ้างอิงการสำรวจของเรา:

@article{zhang2024unifying,

title={Unifying the Perspectives of {NLP} and Software Engineering: A Survey on Language Models for Code},

author={Ziyin Zhang and Chaoyu Chen and Bingchang Liu and Cong Liao and Zi Gong and Hang Yu and Jianguo Li and Rui Wang},

journal={Transactions on Machine Learning Research},

issn={2835-8856},

year={2024},

url={https://openreview.net/forum?id=hkNnGqZnpa},

note={}

}

การสำรวจ

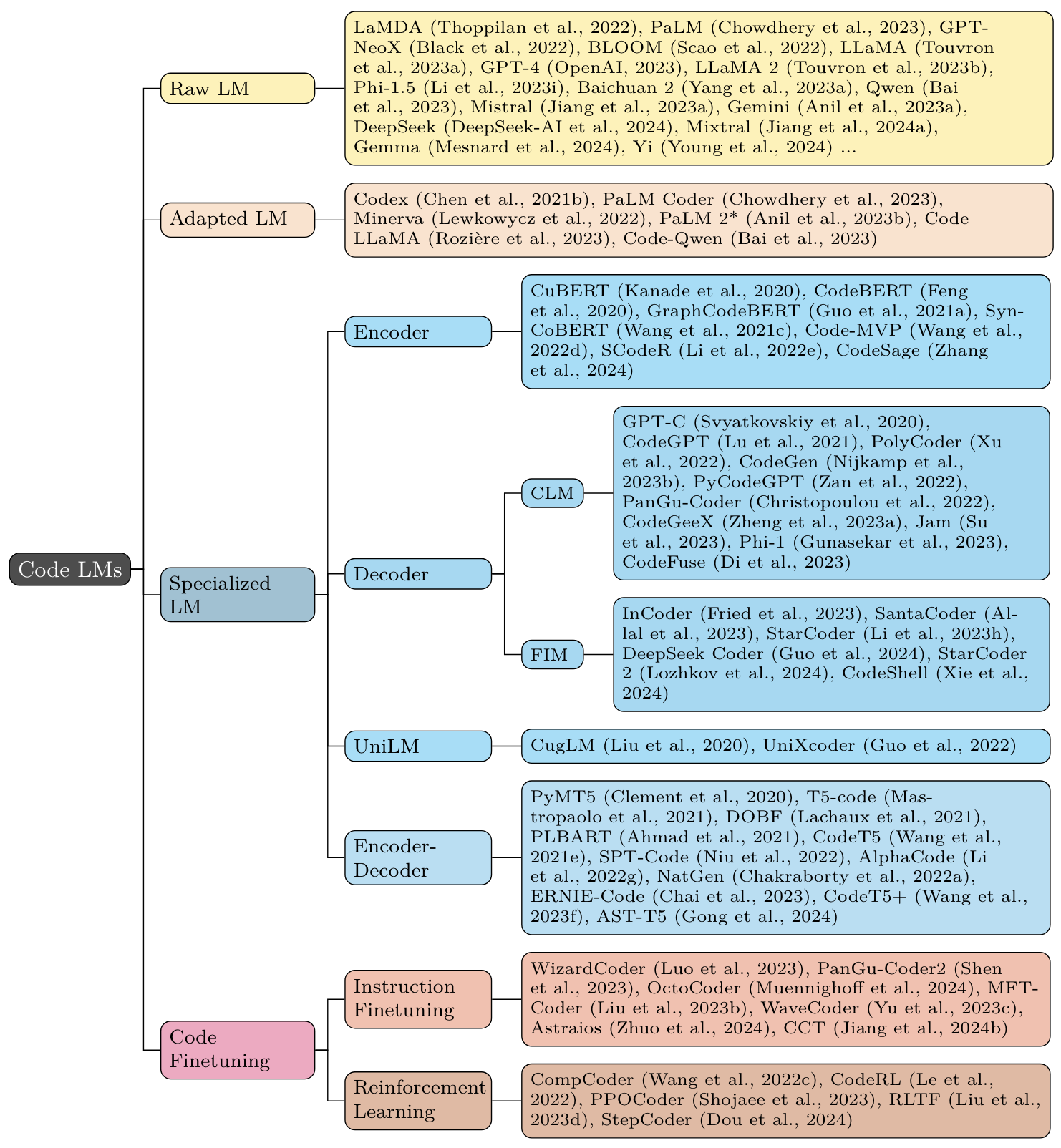

แบบจำลอง

2.1 ฐาน LLMS และกลยุทธ์การเตรียมการก่อน

2.2 LLM ที่มีอยู่ปรับให้เข้ากับรหัส

2.3 การเตรียมการทั่วไปในรหัส

2.4 (คำแนะนำ) การปรับแต่งในรหัส

2.5 การเรียนรู้การเสริมแรงทางรหัส

เมื่อการเขียนโค้ดตรงตามการใช้เหตุผล

3.1 การเข้ารหัสเพื่อการให้เหตุผล

3.2 การจำลองรหัส

3.3 CODE AGENTS

3.4 การเข้ารหัสแบบโต้ตอบ

3.5 การนำทางส่วนหน้า

รหัส LLM สำหรับภาษาที่มีทรัพยากรต่ำระดับต่ำและภาษาเฉพาะโดเมน

วิธีการ/รุ่นสำหรับงานดาวน์สตรีม

การเขียนโปรแกรม

การทดสอบและการปรับใช้

Devops

ความต้องการ

การวิเคราะห์รหัส Ai-Generated

การมีปฏิสัมพันธ์ของมนุษย์-LLM

ชุดข้อมูล

8.1 pretraining

8.2 มาตรฐาน

การอ่านที่แนะนำ

การอ้างอิง

ประวัติดาว

เข้าร่วมกับเรา

เราแสดงรายการแบบสำรวจล่าสุดหลายเรื่องในหัวข้อที่คล้ายกัน ในขณะที่พวกเขาทั้งหมดเกี่ยวกับรูปแบบภาษาสำหรับรหัส 1-2 มุ่งเน้นไปที่ด้าน NLP; 3-6 มุ่งเน้นไปที่ด้าน SE; 7-11 ได้รับการปล่อยตัวหลังจากเรา

"โมเดลภาษาขนาดใหญ่ตรงกับ NL2Code: การสำรวจ" [2022-12] [ACL 2023] [กระดาษ]

"การสำรวจแบบจำลองภาษาที่ผ่านการฝึกอบรมสำหรับสติปัญญารหัสประสาท" [2022-12] [กระดาษ]

"การเปรียบเทียบเชิงประจักษ์ของโมเดลที่ได้รับการฝึกอบรมมาก่อนของซอร์สโค้ด" [2023-02] [ICSE 2023] [กระดาษ]

"แบบจำลองภาษาขนาดใหญ่สำหรับวิศวกรรมซอฟต์แวร์: การทบทวนวรรณกรรมอย่างเป็นระบบ" [2023-08] [กระดาษ]

"สู่ความเข้าใจในรูปแบบภาษาขนาดใหญ่ในงานวิศวกรรมซอฟต์แวร์" [2023-08] [กระดาษ]

"ข้อผิดพลาดในรูปแบบภาษาสำหรับรหัสข่าวกรอง: อนุกรมวิธานและการสำรวจ" [2023-10] [กระดาษ]

"การสำรวจแบบจำลองภาษาขนาดใหญ่สำหรับวิศวกรรมซอฟต์แวร์" [2023-12] [กระดาษ]

"การเรียนรู้อย่างลึกซึ้งสำหรับรหัสข่าวกรอง: การสำรวจเกณฑ์มาตรฐานและชุดเครื่องมือ" [2023-12] [กระดาษ]

"การสำรวจความฉลาดของรหัสประสาท: กระบวนทัศน์ความก้าวหน้าและนอกเหนือจาก" [2024-03] [กระดาษ]

"งานที่ผู้คนแจ้ง: อนุกรมวิธานของงาน LLM ดาวน์สตรีมในการตรวจสอบซอฟต์แวร์และวิธีการปลอมแปลง" [2024-04] [กระดาษ]

"การเขียนโปรแกรมอัตโนมัติ: โมเดลภาษาขนาดใหญ่และเกินกว่า" [2024-05] [กระดาษ]

"โมเดลวิศวกรรมซอฟต์แวร์และรากฐาน: ข้อมูลเชิงลึกจากบล็อกอุตสาหกรรมโดยใช้คณะลูกขุนของโมเดลมูลนิธิ" [2024-10] [กระดาษ]

"วิศวกรรมซอฟต์แวร์ที่ใช้การเรียนรู้ลึก: ความคืบหน้าความท้าทายและโอกาส" [2024-10] [กระดาษ]

LLM เหล่านี้ไม่ได้รับการฝึกฝนเฉพาะสำหรับรหัส แต่ได้แสดงให้เห็นถึงความสามารถในการเข้ารหัสที่แตกต่างกัน

Lamda : "Lamda: รุ่นภาษาสำหรับแอปพลิเคชันโต้ตอบ" [2022-01] [กระดาษ]

ปาล์ม : "ปาล์ม: การสร้างแบบจำลองภาษาด้วยเส้นทาง" [2022-04] [JMLR] [กระดาษ]

GPT-NEOX : "GPT-NEOX-20B: โมเดลภาษาแบบอัตโนมัติโอเพนซอร์ซ" [2022-04] [ACL 2022 เวิร์กช็อปเกี่ยวกับความท้าทายและมุมมองในการสร้าง LLMS] [กระดาษ] [repo]

Bloom : "Bloom: รุ่นภาษาหลายภาษาแบบเปิดกว้าง 176b-parameter" [2022-11] [กระดาษ] [รุ่น]

LLAMA : "Llama: แบบเปิดและเปิดกว้างของรูปแบบภาษาพื้นฐาน" [2023-02] [กระดาษ]

GPT-4 : "รายงานทางเทคนิค GPT-4" [2023-03] [กระดาษ]

Llama 2 : "Llama 2: Open Foundation และ Models Chat ที่ปรับแต่งได้อย่างละเอียด" [2023-07] [กระดาษ] [repo]

Phi-1.5 : "ตำราเรียนเป็นสิ่งที่คุณต้องการ II: รายงานทางเทคนิค Phi-1.5" [2023-09] [กระดาษ] [รุ่น]

Baichuan 2 : "Baichuan 2: เปิดรุ่นภาษาขนาดใหญ่" [2023-09] [กระดาษ] [repo]

Qwen : "รายงานทางเทคนิค Qwen" [2023-09] [กระดาษ] [repo]

Mistral : "Mistral 7b" [2023-10] [กระดาษ] [repo]

ราศีเมถุน : "ราศีเมถุน: ตระกูลที่มีความสามารถสูงหลายรุ่น" [2023-12] [กระดาษ]

Phi-2 : "Phi-2: พลังที่น่าประหลาดใจของรุ่นภาษาขนาดเล็ก" [2023-12] [บล็อก]

Yayi2 : "Yayi 2: Multilingual Open-Source Language Models" [2023-12] [กระดาษ] [repo]

Deepseek : "Deepseek LLM: ปรับขนาดแบบจำลองภาษาโอเพ่นซอร์สด้วย longtermism" [2024-01] [กระดาษ] [repo]

Mixtral : "Mixtral of Experts" [2024-01] [Paper] [Blog]

Deepseekmoe : "DeepseekMoe: สู่ความเชี่ยวชาญเฉพาะด้านของผู้เชี่ยวชาญในรูปแบบภาษาผสมของ Experts" [2024-01] [กระดาษ] [repo]

Orion : "Orion-14b: Open-Source Multilingual Language Models" [2024-01] [กระดาษ] [repo]

Olmo : "Olmo: เร่งวิทยาศาสตร์แบบจำลองภาษา" [2024-02] [กระดาษ] [repo]

Gemma : "Gemma: แบบเปิดตามการวิจัยและเทคโนโลยีของราศีเมถุน" [2024-02] [กระดาษ] [บล็อก]

Claude 3 : "The Claude 3 Model Family: Opus, Sonnet, Haiku" [2024-03] [กระดาษ] [บล็อก]

Yi : "Yi: Open Foundation Model โดย 01.ai" [2024-03] [กระดาษ] [repo]

Poro : "Poro 34b และพรแห่งการพูดได้หลายภาษา" [2024-04] [กระดาษ] [รุ่น]

Jetmoe : "Jetmoe: ถึงประสิทธิภาพของ Llama2 ด้วย 0.1m ดอลลาร์" [2024-04] [กระดาษ] [repo]

ลามะ 3 : "The Llama 3 Herd of Models" [2024-04] [บล็อก] [repo] [กระดาษ]

Reka Core : "Reka Core, Flash และ Edge: ชุดของรุ่นภาษาหลายรูปแบบที่ทรงพลัง" [2024-04] [กระดาษ]

Phi-3 : "รายงานทางเทคนิค Phi-3: รูปแบบภาษาที่มีความสามารถสูงในโทรศัพท์ของคุณ" [2024-04] [กระดาษ]

OpenElm : "OpenElm: ครอบครัวโมเดลภาษาที่มีประสิทธิภาพพร้อมการฝึกอบรมโอเพนซอร์ซและกรอบการอนุมาน" [2024-04] [กระดาษ] [repo]

Tele-FLM : "รายงานทางเทคนิค Tele-FLM" [2024-04] [กระดาษ] [รุ่น]

Deepseek-V2 : "Deepseek-V2: โมเดลภาษาที่แข็งแกร่งประหยัดและมีประสิทธิภาพและมีประสิทธิภาพ" [2024-05] [กระดาษ] [repo]

Gecko : "Gecko: รูปแบบภาษากำเนิดสำหรับภาษาอังกฤษ, รหัสและเกาหลี" [2024-05] [กระดาษ] [รุ่น]

MAP-NEO : "MAP-NEO: ซีรี่ส์ภาษาขนาดใหญ่ที่มีความสามารถและโปร่งใสสูงและโปร่งใส" [2024-05] [กระดาษ] [repo]

Skywork-Moe : "Skywork-Moe: การดำน้ำลึกลงไปในเทคนิคการฝึกอบรมสำหรับแบบจำลองภาษาผสมของ Experts" [2024-06] [กระดาษ]

XMODEL-LM : "รายงานทางเทคนิค XMODEL-LM" [2024-06] [กระดาษ]

GEB : "GEB-1.3B: เปิดรุ่นภาษาขนาดใหญ่ที่มีน้ำหนักเบา" [2024-06] [กระดาษ]

กระต่าย : "กระต่าย: นักบวชมนุษย์กุญแจสู่ประสิทธิภาพของแบบจำลองภาษาขนาดเล็ก" [2024-06] [กระดาษ]

DCLM : "DataComp-LM: ในการค้นหาชุดการฝึกอบรมรุ่นต่อไปสำหรับรูปแบบภาษา" [2024-06] [กระดาษ]

Nemotron-4 : "Nemotron-4 340b รายงานทางเทคนิค" [2024-06] [กระดาษ]

chatglm : "chatglm: ครอบครัวของรุ่นภาษาขนาดใหญ่จาก GLM-130B ถึง GLM-4 เครื่องมือทั้งหมด" [2024-06] [กระดาษ]

Yulan : "Yulan: รูปแบบภาษาขนาดใหญ่โอเพนซอร์ซ" [2024-06] [กระดาษ]

Gemma 2 : "Gemma 2: การปรับปรุงโมเดลภาษาแบบเปิดในขนาดที่ใช้งานได้จริง" [2024-06] [กระดาษ]

H2O-Danube3 : "รายงานทางเทคนิค H2O-Danube3" [2024-07] [กระดาษ]

QWEN2 : "รายงานทางเทคนิค QWEN2" [2024-07] [กระดาษ]

Allam : "Allam: โมเดลภาษาขนาดใหญ่สำหรับภาษาอาหรับและภาษาอังกฤษ" [2024-07] [กระดาษ]

SEALLMS 3 : "SEALLMS 3: Foundation Open และ Chat Multilingual Language Models สำหรับภาษาเอเชียตะวันออกเฉียงใต้" [2024-07] [กระดาษ]

AFM : "โมเดลภาษา Apple Intelligence Foundation" [2024-07] [กระดาษ]

"ถึงรหัสหรือไม่เป็นรหัส? การสำรวจผลกระทบของรหัสในการฝึกอบรมล่วงหน้า" [2024-08] [กระดาษ]

Olmoe : "Olmoe: เปิดแบบจำลองภาษาผสมของ Experts" [2024-09] [กระดาษ]

"การฝึกฝนรหัสมีผลต่อประสิทธิภาพการทำงานของแบบจำลองภาษาอย่างไร" [2024-09] [กระดาษ]

EUROLLM : "EUROLLM: แบบจำลองภาษาหลายภาษาสำหรับยุโรป" [2024-09] [กระดาษ]

"ภาษาการเขียนโปรแกรมใดและคุณสมบัติใดในขั้นตอนการฝึกอบรมก่อนส่งผลกระทบต่อประสิทธิภาพการอนุมานเชิงตรรกะแบบปลายน้ำ" [2024-10] [กระดาษ]

GPT-4O : "การ์ดระบบ GPT-4O" [2024-10] [กระดาษ]

Hunyuan-Large : "Hunyuan-Large: โมเดลโมโอโอเพนซอร์สที่มีพารามิเตอร์เปิดใช้งาน 52 พันล้านโดย Tencent" [2024-11] [กระดาษ]

Crystal : "Crystal: การส่องสว่างความสามารถของ LLM ในภาษาและรหัส" [2024-11] [กระดาษ]

XMODEL-1.5 : "XMODEL-1.5: LLM หลายภาษา 1B" [2024-11] [กระดาษ]

โมเดลเหล่านี้เป็น LLM ที่มีวัตถุประสงค์ทั่วไปเพิ่มเติมเกี่ยวกับข้อมูลที่เกี่ยวข้องกับรหัส

Codex (GPT-3): "การประเมินรูปแบบภาษาขนาดใหญ่ที่ผ่านการฝึกอบรมเกี่ยวกับรหัส" [2021-07] [กระดาษ]

ปาล์มโคเดอร์ (ปาล์ม): "ปาล์ม: การสร้างแบบจำลองภาษาด้วยทางเดิน" [2022-04] [JMLR] [กระดาษ]

Minerva (Palm): "การแก้ปัญหาการใช้เหตุผลเชิงปริมาณด้วยโมเดลภาษา" [2022-06] [กระดาษ]

ปาล์ม 2 * (ปาล์ม 2): "รายงานทางเทคนิค Palm 2" [2023-05] [กระดาษ]

รหัส LLAMA (LLAMA 2): "Code Llama: Open Foundation Models สำหรับรหัส" [2023-08] [กระดาษ] [repo]

LeMur (Llama 2): "LeMur: Harmonizing ภาษาธรรมชาติและรหัสสำหรับตัวแทนภาษา" [2023-10] [ICLR 2024 Spotlight] [Paper]

BTX (LLAMA 2): "Branch-Train-Mix: Mixing Expert LLMS เป็นส่วนผสมของ Experts LLM" [2024-03] [กระดาษ]

Hirope : "Hirope: การคาดการณ์ความยาวสำหรับโมเดลรหัสโดยใช้ตำแหน่งลำดับชั้น" [2024-03] [ACL 2024] [กระดาษ]

"การเรียนรู้ข้อความรหัสและคณิตศาสตร์พร้อมกันผ่านการหลอมรวมรุ่นภาษาที่มีความเชี่ยวชาญสูง" [2024-03] [กระดาษ]

CodeGemma : "CodeGemma: แบบเปิดรหัสตาม Gemma" [2024-04] [Paper] [Model]

Deepseek-Coder-V2 : "DEEPSEEK-CODER-V2: การทำลายอุปสรรคของโมเดลซอร์ซแบบปิดในรหัสข่าวกรอง" [2024-06] [กระดาษ]

"สัญญาและอันตรายของแบบจำลองการสร้างรหัสการทำงานร่วมกัน: ประสิทธิภาพและประสิทธิภาพการท่องจำ" [2024-09] [กระดาษ]

QWEN2.5-CODER : "รายงานทางเทคนิค QWEN2.5-CODER" [2024-09] [กระดาษ]

Lingma SWE-GPT : "Lingma SWE-GPT: รูปแบบภาษาการพัฒนาแบบเปิดเป็นศูนย์กลางสำหรับการปรับปรุงซอฟต์แวร์อัตโนมัติ" [2024-11] [กระดาษ]

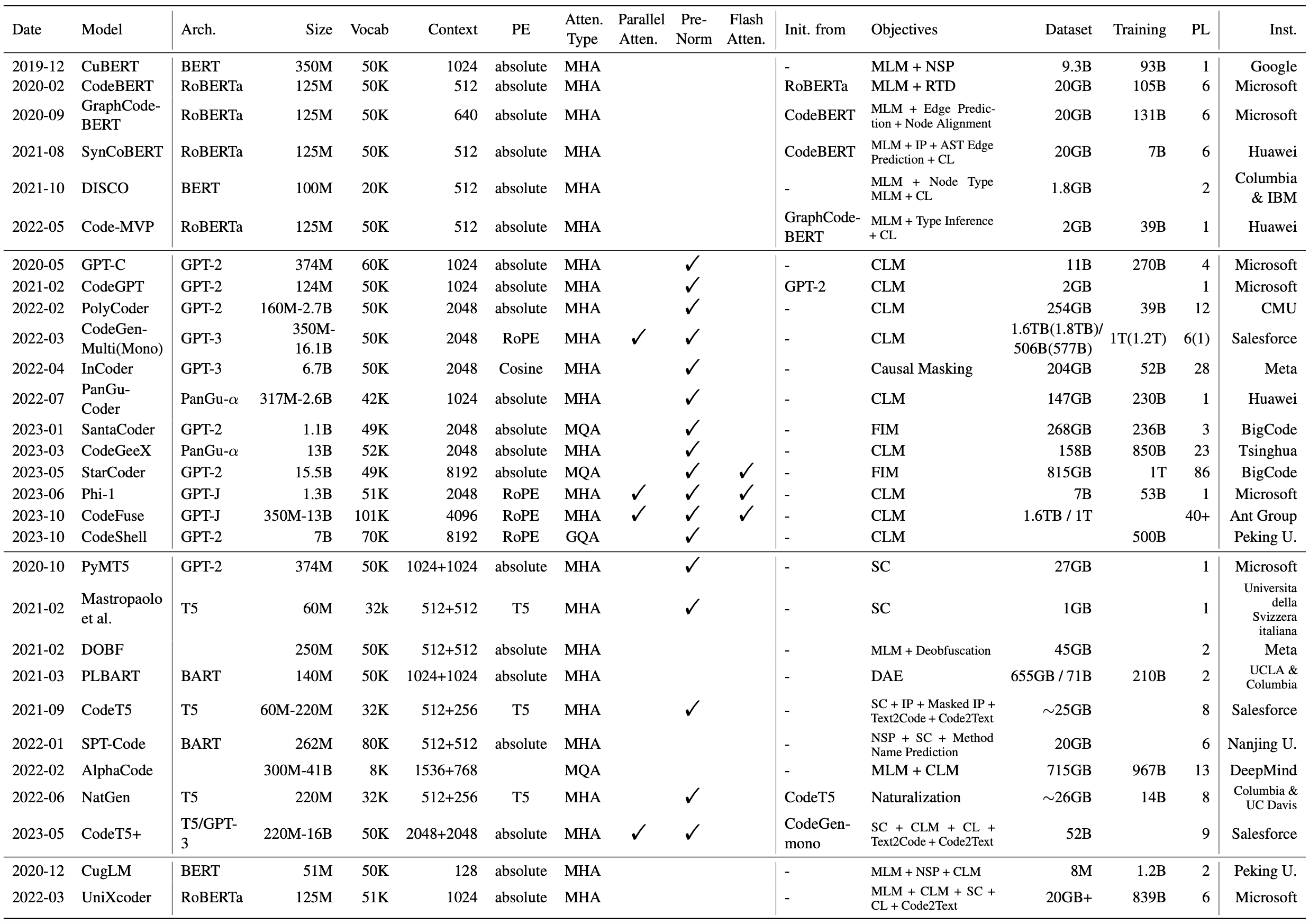

โมเดลเหล่านี้คือตัวเข้ารหัสหม้อแปลงตัวถอดรหัสและตัวพิมพ์ใหญ่ที่ได้รับการปรับแต่งจากศูนย์โดยใช้วัตถุประสงค์ที่มีอยู่สำหรับการสร้างแบบจำลองภาษาทั่วไป

Cubert (MLM + NSP): "การเรียนรู้และประเมินผลการฝังบริบทของซอร์สโค้ด" [2019-12] [ICML 2020] [กระดาษ] [repo]

Codebert (MLM + RTD): "Codebert: แบบจำลองที่ผ่านการฝึกอบรมมาก่อนสำหรับการเขียนโปรแกรมและภาษาธรรมชาติ" [2020-02] [EMNLP 2020 การค้นพบ] [กระดาษ] [repo]

GraphCodebert (MLM + DFG Edge Prediction + DFG การจัดตำแหน่งโหนด): "GraphCodebert: การแสดงรหัสก่อนการฝึกอบรมด้วยการไหลของข้อมูล" [2020-09] [ICLR 2021] [กระดาษ] [repo]

Syncobert (การทำนาย MLM + ตัวระบุ + การทำนาย Edge AST + การเรียนรู้แบบตรงกันข้าม): "Syncobert: การฝึกอบรมหลายแบบหลายโมดอลที่มีแนวทางสำหรับการเป็นตัวแทนรหัส" [2021-08] [กระดาษ]

Disco (MLM + ประเภทโหนด MLM + การเรียนรู้แบบตรงกันข้าม): "สู่การเรียนรู้ (DIS)-ความคล้ายคลึงกันของซอร์สโค้ดจากความแตกต่างของโปรแกรม" [2021-10] [ACL 2022] [กระดาษ]

Code-MVP (MLM + ประเภทการอนุมาน + การเรียนรู้แบบตรงกันข้าม): "Code-MVP: การเรียนรู้ที่จะเป็นตัวแทนซอร์สโค้ดจากหลายมุมมองด้วยการฝึกอบรมก่อนหน้า" [2022-05] [NAACL 2022 ทางเทคนิคแทร็ก] [กระดาษ]

รหัส (MLM + deobfuscation + การเรียนรู้แบบตัดกัน): "การเรียนรู้รหัสการเรียนรู้ในระดับ" [2024-02] [ICLR 2024] [กระดาษ]

Colsbert (MLM): "การปรับขนาดกฎหมายที่อยู่เบื้องหลังรูปแบบการทำความเข้าใจรหัส" [2024-02] [กระดาษ]

GPT-C (CLM): "IntelliCode Compose: การสร้างรหัสโดยใช้หม้อแปลง" [2020-05] [ESEC/FSE 2020] [กระดาษ]

CodeGpt (CLM): "CodexGlue: ชุดข้อมูลมาตรฐานการเรียนรู้ของเครื่องสำหรับการทำความเข้าใจรหัสและการสร้าง" [2021-02] [ชุดข้อมูล Neurips และมาตรฐาน 2021] [กระดาษ] [repo]

CodeParrot (CLM) [2021-12] [บล็อก]

Polycoder (CLM): "การประเมินอย่างเป็นระบบของแบบจำลองภาษาขนาดใหญ่ของรหัส" [2022-02] [DL4C@ICLR 2022] [กระดาษ] [repo]

codegen (CLM): "codegen: โมเดลภาษาขนาดใหญ่เปิดสำหรับรหัสที่มีการสังเคราะห์โปรแกรมหลายครั้ง" [2022-03] [ICLR 2023] [กระดาษ] [repo]

Incoder (การปิดบังเชิงสาเหตุ): "Incoder: แบบจำลองการกำเนิดสำหรับรหัสที่ infilling และการสังเคราะห์" [2022-04] [ICLR 2023] [กระดาษ] [repo]

PyCodegpt (CLM): "ใบรับรอง: การฝึกอบรมก่อนการสร้างภาพร่างสำหรับการสร้างรหัสที่เน้นห้องสมุด" [2022-06] [IJCAI-ECAI 2022] [กระดาษ] [repo]

Pangu-Coder (CLM): "Pangu-Coder: การสังเคราะห์โปรแกรมด้วยการสร้างแบบจำลองภาษาระดับฟังก์ชั่น" [2022-07] [กระดาษ]

Santacoder (FIM): "Santacoder: อย่าไปถึงดวงดาว!" [2023-01] [กระดาษ] [รุ่น]

Codegeex (CLM): "codegeex: โมเดลที่ผ่านการฝึกอบรมมาก่อนสำหรับการสร้างรหัสที่มีการประเมินหลายภาษาบน Humaneval-X" [2023-03] [กระดาษ] [repo]

StarCoder (FIM): "StarCoder: ขอให้แหล่งข่าวอยู่กับคุณ!" [2023-05] [กระดาษ] [รุ่น]

Phi-1 (CLM): "ตำราเรียนเป็นสิ่งที่คุณต้องการ" [2023-06] [กระดาษ] [รุ่น]

CodeFuse (CLM): "CodeFUSE-13B: รหัสภาษาหลายภาษาแบบหลายภาษาแบบจำลอง" [2023-10] [กระดาษ] [รุ่น]

Deepseek Coder (CLM+FIM): "Deepseek-Coder: เมื่อโมเดลภาษาขนาดใหญ่ตรงกับการเขียนโปรแกรม-การเพิ่มขึ้นของรหัสข่าวกรอง" [2024-01] [กระดาษ] [repo]

StarCoder2 (CLM+FIM): "StarCoder 2 และ Stack V2: The Next Generation" [2024-02] [Paper] [repo]

Codeshell (CLM+FIM): "รายงานทางเทคนิค Codeshell" [2024-03] [กระดาษ] [repo]

codeqwen1.5 [2024-04] [บล็อก]

หินแกรนิต : "โมเดลรหัสหินแกรนิต: ตระกูลของแบบจำลองฐานรากแบบเปิดสำหรับรหัสข่าวกรอง" [2024-05] [กระดาษ] "โมเดลรหัสหินแกรนิตเป็นบริบท 128K" [2024-07] [กระดาษ]

nt-java : "หม้อแปลงแคบ: Java-LM ที่ใช้ Starcoder สำหรับเดสก์ท็อป" [2024-07] [กระดาษ]

Arctic-SnowCoder : "Arctic-SnowCoder: ข้อมูลคุณภาพสูงในการฝึกฝนรหัส" [2024-09] [กระดาษ]

AixCoder : "AixCoder-7B: โมเดลภาษาขนาดใหญ่ที่มีน้ำหนักเบาและมีประสิทธิภาพสำหรับการกรอกรหัส" [2024-10] [กระดาษ]

OpenCoder : "OpenCoder: Open Cookbook สำหรับรหัสชั้นบนสุด ๆ รุ่นภาษาขนาดใหญ่" [2024-11] [กระดาษ]

PYMT5 (ช่วงการทุจริต): "PYMT5: การแปลหลายโหมดของภาษาธรรมชาติและรหัส Python ด้วยหม้อแปลง" [2020-10] [EMNLP 2020] [กระดาษ]

Mastropaolo และคณะ (MLM + deobfuscation): "DOBF: วัตถุประสงค์การฝึกอบรมล่วงหน้าสำหรับการเขียนโปรแกรมภาษา" [2021-02] [ICSE 2021] [กระดาษ] [repo]

DOBF (Span Corruption): "การศึกษาการใช้หม้อแปลงการถ่ายโอนข้อความเป็นข้อความเพื่อสนับสนุนงานที่เกี่ยวข้องกับรหัส" [2021-02] [Neurips 2021] [กระดาษ] [repo]

PLBART (DAE): "การฝึกอบรมล่วงหน้าเพื่อความเข้าใจและการสร้างโปรแกรม" [2021-03] [NAACL 2021] [กระดาษ] [repo]

codet5 (span corruption + identifier tagging + การทำนายตัวระบุหน้ากาก + text2Code + code2Text): "codet5: ตัวระบุตัวตนที่ได้รับการฝึกฝนก่อนที่จะได้รับการฝึกฝนล่วงหน้าสำหรับการทำความเข้าใจรหัสและการสร้าง" [2021-09]

SPT-Code (Span Corruption + NSP + การทำนายชื่อวิธี): "SPT-Code: ลำดับก่อนการฝึกอบรมสำหรับการเป็นตัวแทนซอร์สโค้ดการเรียนรู้" [2022-01] [ICSE 2022 ทางเทคนิคแทร็ก] [กระดาษ]

Alphacode (MLM + CLM): "การสร้างรหัสระดับการแข่งขันด้วย Alphacode" [2022-02] [วิทยาศาสตร์] [กระดาษ] [บล็อก]

Natgen (การแปลงสัญชาติโค้ด): "Natgen: การฝึกอบรมก่อนการกำเนิดโดย" การทำให้เป็นธรรมชาติ "ซอร์สโค้ด" [2022-06] [ESEC/FSE 2022] [กระดาษ] [repo]

Ernie-Code (Span Corruption + LM การแปลที่ใช้เดือย): "Ernie-Code: นอกเหนือจากภาษาอังกฤษที่เน้นภาษาอังกฤษเป็นศูนย์กลางสำหรับภาษาการเขียนโปรแกรม" [2022-12] [ACL23 (ผลการวิจัย)

Codet5 + (Span Corruption + CLM + การเรียนรู้แบบ code-code การเรียนรู้ + การแปลรหัสข้อความ): "codet5 +: เปิดรหัสแบบจำลองภาษาขนาดใหญ่สำหรับการทำความเข้าใจรหัสและการสร้าง" [2023-05] [EMNLP 2023] [กระดาษ] [repo]

AST-T5 (Span Corruption): "AST-T5: การเตรียมโครงสร้างให้ทราบถึงการสร้างรหัสและความเข้าใจ" [2024-01] [ICML 2024] [กระดาษ]

CUGLM (MLM + NSP + CLM): "รูปแบบภาษาที่ได้รับการฝึกอบรมมาหลายครั้งเพื่อการเรียนรู้แบบหลายงานสำหรับการกรอกรหัส" [2020-12] [ASE 2020] [กระดาษ]

UnixCoder (MLM + NSP + CLM + Span Corruption + การเรียนรู้แบบตรงกันข้าม + Code2Text): "UnixCoder: Unified Cross-Modal Pre-Training สำหรับการเป็นตัวแทนรหัส" [2022-03] [ACL 2022] [กระดาษ] [repo]

โมเดลเหล่านี้ใช้เทคนิคการปรับแต่งการเรียนการสอนเพื่อเพิ่มความสามารถของรหัส LLMS

WizardCoder (StarCoder + Evol-Instruct): "WizardCoder: เพิ่มขีดความสามารถรหัสภาษาขนาดใหญ่ที่มี Evol-Instruct" [2023-06] [ICLR 2024] [กระดาษ] [repo]

Pangu-Coder 2 (StarCoder + Evol-Instruct + RRTF): "Pangu-Coder2: การเพิ่มโมเดลภาษาขนาดใหญ่สำหรับรหัสที่มีข้อเสนอแนะการจัดอันดับ" [2023-07] [กระดาษ]

Octocoder (StarCoder) / Octogeex (codegeex2): "Octopack: รหัสคำสั่งการปรับแต่งโมเดลภาษาขนาดใหญ่" [2023-08] [ICLR 2024 Spotlight] [กระดาษ] [repo]

"ขั้นตอนการฝึกอบรมข้อมูลรหัสช่วย LLMS ให้เหตุผล" [2023-09] [ICLR 2024 Spotlight] [กระดาษ]

InstructCoder : "InstructCoder: คำแนะนำในการปรับแต่งแบบจำลองภาษาขนาดใหญ่สำหรับการแก้ไขรหัส" [กระดาษ] [repo]

MFTCoder : "MFTCODER: การเพิ่มรหัส LLMS ด้วยการปรับแต่งมัลติทาสก์" [2023-11] [KDD 2024] [กระดาษ] [repo]

"การทำความสะอาดรหัส LLM ช่วยสำหรับการฝึกอบรมเครื่องกำเนิดรหัสที่ถูกต้อง" [2023-11] [ICLR 2024] [กระดาษ]

Magicoder : "Magicoder: เสริมสร้างพลังการสร้างรหัสด้วย OSS-Instruct" [2023-12] [ICML 2024] [กระดาษ]

WaveCoder : "WaveCoder: การปรับปรุงที่แพร่หลายและหลากหลายสำหรับรหัสโมเดลภาษาขนาดใหญ่โดยการปรับแต่งคำสั่ง" [2023-12] [ACL 2024] [กระดาษ]

Astraios : "Astraios: พารามิเตอร์-ประสิทธิภาพการสอนการปรับแต่งรหัสภาษาขนาดใหญ่" [2024-01] [กระดาษ]

Dolphcoder : "Dolphcoder: รหัส echo-locating โมเดลภาษาขนาดใหญ่ที่มีการปรับแต่งการเรียนการสอนที่หลากหลายและหลากหลาย" [2024-02] [ACL 2024] [กระดาษ]

Safecoder : "คำสั่งการปรับแต่งสำหรับการสร้างรหัสที่ปลอดภัย" [2024-02] [ICML 2024] [กระดาษ]

"รหัสต้องการความคิดเห็น: การเพิ่มรหัส LLMS พร้อมการเพิ่มความคิดเห็น" [ACL 2024 การค้นพบ] [กระดาษ]

CCT : "การปรับการเปรียบเทียบรหัสสำหรับรหัสภาษาขนาดใหญ่" [2024-03] [กระดาษ]

SAT : "การปรับแต่งโครงสร้างอย่างละเอียดสำหรับโมเดลที่ผ่านการฝึกอบรมมาก่อนรหัส" [2024-04] [กระดาษ]

Codefort : "Codefort: การฝึกอบรมที่แข็งแกร่งสำหรับโมเดลการสร้างรหัส" [2024-04] [กระดาษ]

XFT : "XFT: ปลดล็อคพลังของการปรับแต่งรหัสโดยเพียงแค่รวมส่วนผสมของ experts" [2024-04] [ACL 2024] [กระดาษ] [repo]

AIEV-Instruct : "Autocoder: การเพิ่มรหัสโมเดลภาษาขนาดใหญ่ด้วย AIEV-Instruct" [2024-05] [กระดาษ]

AlchemistCoder : "AlchemistCoder: การประสานและความสามารถในการใช้รหัสโดยการปรับค่า Hindsight บนข้อมูลหลายแหล่ง" [2024-05] [กระดาษ]

"จากงานสัญลักษณ์ไปจนถึงการสร้างรหัส: การกระจายผลงานให้ผลงานที่ดีกว่า" [2024-05] [กระดาษ]

"การเปิดตัวผลกระทบของการเขียนโค้ดข้อมูลการปรับแต่งการปรับแต่งการให้เหตุผลแบบจำลองภาษาขนาดใหญ่" [2024-05] [กระดาษ]

พลัม : "พลัม: การเรียนรู้การตั้งค่าบวกกับกรณีทดสอบให้รูปแบบภาษารหัสที่ดีขึ้น" [2024-06] [กระดาษ]

McOder : "McEval: การประเมินรหัสหลายภาษาที่หลากหลาย" [2024-06] [กระดาษ]

"ปลดล็อกความสัมพันธ์ระหว่างการปรับแต่งการปรับแต่งและการเรียนรู้การเสริมแรงในรหัสการฝึกอบรมแบบจำลองภาษาขนาดใหญ่" [2024-06] [กระดาษ]

Code-Optimise : "รหัสที่เหมาะสม: ข้อมูลการตั้งค่าที่สร้างขึ้นด้วยตนเองเพื่อความถูกต้องและประสิทธิภาพ" [2024-06] [กระดาษ]

Unicoder : "Unicoder: การปรับรหัสแบบจำลองภาษาขนาดใหญ่ผ่านรหัสสากล" [2024-06] [ACL 2024] [กระดาษ]

"ความกะทัดรัดคือจิตวิญญาณของปัญญา: การตัดแต่งไฟล์ยาวสำหรับการสร้างรหัส" [2024-06] [กระดาษ]

"รหัสน้อยลงจัดตำแหน่งเพิ่มเติม: การปรับแต่ง LLM ที่มีประสิทธิภาพสำหรับการสร้างรหัสด้วยการตัดแต่งข้อมูล" [2024-07] [กระดาษ]

Inversecoder : "Inversecoder: ปลดปล่อยพลังของรหัสที่ปรับแต่งด้วยคำสั่ง LLMs ด้วย Instruct Instruct" [2024-07] [Paper]

"การเรียนรู้หลักสูตรสำหรับรูปแบบภาษารหัสขนาดเล็ก" [2024-07] [กระดาษ]

Instruct ทางพันธุกรรม : "คำสั่งทางพันธุกรรม: การปรับขนาดการสร้างคำแนะนำการเข้ารหัสสำหรับแบบจำลองภาษาขนาดใหญ่" [2024-07] [กระดาษ]

DataScope : "การสังเคราะห์ชุดข้อมูล Api-Guided ไปยังรูปแบบรหัสขนาดใหญ่" [2024-08] [กระดาษ]

** xcoder **: "รหัส LLM ของคุณทำงานได้อย่างไร? เพิ่มขีดความสามารถในการปรับแต่งรหัสการปรับแต่งด้วยข้อมูลคุณภาพสูง" [2024-09] [กระดาษ]

Galla : "Galla: กราฟจัดเรียงโมเดลภาษาขนาดใหญ่สำหรับการทำความเข้าใจซอร์สโค้ดที่ดีขึ้น" [2024-09] [กระดาษ]

Hexacoder : "Hexacoder: การสร้างรหัสที่ปลอดภัยผ่านข้อมูลการฝึกอบรมสังเคราะห์แบบออราเคิล-ไกด์" [2024-09] [กระดาษ]

AMR-EVOL : "AMR-EVOL: การตอบสนองแบบแยกส่วนวิวัฒนาการ Evolution ทำให้เกิดการกลั่นความรู้ที่ดีขึ้นสำหรับแบบจำลองภาษาขนาดใหญ่ในการสร้างรหัส" [2024-10] [กระดาษ]

LINTSEQ : "แบบจำลองภาษาการฝึกอบรมเกี่ยวกับลำดับการแก้ไขสังเคราะห์ปรับปรุงการสังเคราะห์รหัส" [2024-10] [กระดาษ]

Coba : "Coba: Convergence Balancer สำหรับ Multitask Finetuning ของรุ่นภาษาขนาดใหญ่" [2024-10] [EMNLP 2024] [กระดาษ]

Cursorcore : "Cursorcore: ช่วยเหลือการเขียนโปรแกรมผ่านการจัดตำแหน่งอะไรก็ได้" [2024-10] [กระดาษ]

SelfCodealign : "SelfCodealign: การจัดตำแหน่งตนเองสำหรับการสร้างรหัส" [2024-10] [กระดาษ]

"การควบคุมงานฝีมือของการสังเคราะห์ข้อมูลสำหรับ codellms" [2024-10] [กระดาษ]

Codelutra : "Codelutra: การเพิ่มการสร้างรหัส LLM ผ่านการปรับแต่งการปรับแต่ง" [2024-11] [กระดาษ]

DSTC : "DSTC: การเรียนรู้การตั้งค่าโดยตรงด้วยการทดสอบและรหัสที่สร้างขึ้นเองเท่านั้นเพื่อปรับปรุงรหัส LMS" [2024-11] [กระดาษ]

compcoder : "การสร้างรหัสประสาทที่รวบรวมได้ด้วยคำติชมของคอมไพเลอร์" [2022-03] [ACL 2022] [กระดาษ]

Coderl : "Coderl: การสร้างรหัสการเรียนรู้ผ่านแบบจำลองที่ผ่านการฝึกอบรมและการเรียนรู้การเสริมแรงอย่างลึกซึ้ง" [2022-07] [Neurips 2022] [Paper] [repo]

PPOCODER : "การสร้างรหัสตามการดำเนินการโดยใช้การเรียนรู้การเสริมแรงลึก" [2023-01] [TMLR 2023] [กระดาษ] [repo]

RLTF : "RLTF: การเรียนรู้การเสริมแรงจากข้อเสนอแนะการทดสอบหน่วย" [2023-07] [กระดาษ] [repo]

B-coder : "B-coder: การเรียนรู้การเสริมแรงลึกตามมูลค่าสำหรับการสังเคราะห์โปรแกรม" [2023-10] [ICLR 2024] [กระดาษ]

Ircoco : "Ircoco: การเรียนรู้การเสริมแรงอย่างลึกซึ้งในการเรียนรู้การเสริมกำลังเพื่อการจบรหัส" [2024-01] [FSE 2024] [กระดาษ]

stepcoder : "stepcoder: ปรับปรุงการสร้างรหัสด้วยการเรียนรู้การเสริมแรงจากคำติชมคอมไพเลอร์" [2024-02] [ACL 2024] [กระดาษ]

RLPF & DPA : "LLM ที่สอดคล้องกับประสิทธิภาพสำหรับการสร้างรหัสที่รวดเร็ว" [2024-04] [กระดาษ]

"การวัดการท่องจำใน RLHF สำหรับการกรอกรหัส" [2024-06] [กระดาษ]

"การใช้ RLAIF สำหรับการสร้างรหัสด้วย API-USAGE ใน LLM ที่มีน้ำหนักเบา" [2024-06] [กระดาษ]

RLCODER : "RLCODER: การเรียนรู้การเสริมแรงสำหรับการกรอกรหัสระดับที่เก็บ" [2024-07] [กระดาษ]

PF-PPO : "การกรองนโยบายใน RLHF เพื่อปรับแต่ง LLM สำหรับการสร้างรหัส" [2024-09] [กระดาษ]

Coffee-Gym : "Coffee-Gym: สภาพแวดล้อมสำหรับการประเมินและปรับปรุงข้อเสนอแนะภาษาธรรมชาติเกี่ยวกับรหัสที่ผิดพลาด" [2024-09] [กระดาษ]

RLEF : "RLEF: การลงดินรหัส LLMS ในการดำเนินการตอบรับด้วยการเรียนรู้การเสริมแรง" [2024-10] [กระดาษ]

codepmp : "codepmp: รูปแบบการตั้งค่าที่ปรับขนาดได้ก่อนการเตรียมการสำหรับการใช้เหตุผลแบบจำลองภาษาขนาดใหญ่" [2024-10] [กระดาษ]

CodedPo : "CodedPo: การจัดแนวโมเดลการจัดแนวด้วยซอร์สโค้ดที่สร้างขึ้นและตรวจสอบด้วยตนเอง" [2024-10] [กระดาษ]

"การเพิ่มประสิทธิภาพนโยบายการกำกับดูแลการกำกับดูแลสำหรับการสร้างรหัส" [2024-10] [กระดาษ]

"จัดตำแหน่ง codellms กับการเพิ่มประสิทธิภาพการตั้งค่าโดยตรง" [2024-10] [กระดาษ]

Falcon : "Falcon: ระบบปรับแต่งการเพิ่มประสิทธิภาพการเข้ารหัสแบบยาว/ระยะสั้นที่ขับเคลื่อนด้วยการตอบกลับ" [2024-10] [กระดาษ]

PFPO : "การเพิ่มประสิทธิภาพการตั้งค่าสำหรับการให้เหตุผลกับคำติชมแบบหลอก" [2024-11] [กระดาษ]

PAL : "PAL: โมเดลภาษาโดยโปรแกรมช่วย" [2022-11] [ICML 2023] [กระดาษ] [repo]

POT : "โปรแกรมการกระตุ้นความคิด: การคำนวณการคำนวณจากการใช้เหตุผลสำหรับงานการใช้เหตุผลเชิงตัวเลข" [2022-11] [TMLR 2023] [กระดาษ] [repo]

PAD : "PAD: การกลั่นด้วยโปรแกรมช่วยสอนรุ่นเล็ก ๆ ให้เหตุผลได้ดีกว่าการปรับแต่งโซ่ของการปรับแต่ง" [2023-05] [NAACL 2024] [กระดาษ]

CSV : "การแก้ปัญหาคำศัพท์ทางคณิตศาสตร์ที่ท้าทายโดยใช้ล่ามโค้ด GPT-4 ด้วยการตรวจสอบด้วยตนเองตามรหัส" [2023-08] [ICLR 2024] [กระดาษ]

MathCoder : "MathCoder: การรวมรหัสไร้รอยต่อใน LLMS เพื่อเพิ่มการใช้เหตุผลทางคณิตศาสตร์" [2023-10] [ICLR 2024] [กระดาษ]

COC : "Chain of Code: การใช้เหตุผลกับ Emulator รหัสแบบจำลองภาษา" [2023-12] [ICML 2024] [กระดาษ]

มาริโอ : "มาริโอ: การใช้เหตุผลทางคณิตศาสตร์ด้วยเอาท์พุทโค้ดล่าม-ไปป์ไลน์ที่ทำซ้ำได้" [2024-01] [ACL 2024 การค้นพบ] [กระดาษ]

Regal : "Regal: โปรแกรม refactoring เพื่อค้นหา abstractions ทั่วไป" [2024-01] [ICML 2024] [กระดาษ]

"การดำเนินการรหัสปฏิบัติการทำให้เกิดตัวแทน LLM ที่ดีกว่า" [2024-02] [ICML 2024] [กระดาษ]

HPROPRO : "การสำรวจคำถามไฮบริดผ่านการแจ้งเตือนด้วยโปรแกรม" [2024-02] [ACL 2024] [กระดาษ]

Xstreet : "ทำให้การใช้เหตุผลเชิงโครงสร้างหลายภาษาดีขึ้นจาก LLMs ผ่านรหัส" [2024-03] [ACL 2024] [กระดาษ]

FlowMind : "FlowMind: การสร้างเวิร์กโฟลว์อัตโนมัติด้วย LLMS" [2024-03] [กระดาษ]

Think-and-Execute : "แบบจำลองภาษาเป็นคอมไพเลอร์: การจำลองการดำเนินการ pseudocode ช่วยปรับปรุงการใช้เหตุผลอัลกอริทึมในรูปแบบภาษา" [2024-04] [กระดาษ]

Core : "Core: LLM เป็นล่ามสำหรับการเขียนโปรแกรมภาษาธรรมชาติการเขียนโปรแกรมรหัสหลอกและการเขียนโปรแกรมการไหลของตัวแทน AI" [2024-05] [กระดาษ]

Mumath-Code : "Mumath-Code: การรวมโมเดลภาษาขนาดใหญ่ใช้เครื่องมือกับการเพิ่มข้อมูลหลายมุมมองสำหรับการใช้เหตุผลทางคณิตศาสตร์" [2024-05] [กระดาษ]

Cogex : "การเรียนรู้ที่จะให้เหตุผลผ่านการสร้างโปรแกรมการจำลองและการค้นหา" [2024-05] [กระดาษ]

"การใช้เหตุผลทางคณิตศาสตร์ด้วย LLM: การสร้างและการเปลี่ยนแปลง" [2024-05] [กระดาษ]

"LLMs สามารถให้เหตุผลในป่าด้วยโปรแกรมได้หรือไม่" [2024-06] [กระดาษ]

Dotamath : "Dotamath: การสลายตัวของความคิดด้วยความช่วยเหลือรหัสและการแก้ไขตนเองสำหรับการใช้เหตุผลทางคณิตศาสตร์" [2024-07] [กระดาษ]

Cibench : "Cibench: การประเมิน LLM ของคุณด้วยปลั๊กอินโค้ดล่าม" [2024-07] [กระดาษ]

Pybench : "Pybench: การประเมิน Agent LLM ในงานการเข้ารหัสในโลกแห่งความเป็นจริงต่างๆ" [2024-07] [กระดาษ]

Adacoder : "Adacoder: Adaptive Promption การบีบอัดสำหรับการตอบคำถามด้วยภาพแบบโปรแกรม" [2024-07] [กระดาษ]

PyramidCoder : "Pyramid Coder: ตัวสร้างรหัสลำดับชั้นสำหรับการตอบคำถามด้วยภาพประกอบ" [2024-07] [กระดาษ]

CodeGraph : "CodeGraph: การเพิ่มเหตุผลกราฟของ LLMS ด้วยรหัส" [2024-08] [กระดาษ]

SIAM : "SIAM: การปรับปรุงตัวเองด้วยการให้เหตุผลทางคณิตศาสตร์ด้วยความช่วยเหลือทางคณิตศาสตร์ของแบบจำลองภาษาขนาดใหญ่" [2024-08] [กระดาษ]

CodePlan : "CodePlan: ปลดล็อคการใช้เหตุผลที่มีศักยภาพในรุ่น Langauge ขนาดใหญ่โดยการปรับขนาดการวางแผนรูปแบบรหัส" [2024-09] [กระดาษ]

POT : "การพิสูจน์ความคิด: การสังเคราะห์โปรแกรม neurosymbolic ช่วยให้การใช้เหตุผลที่แข็งแกร่งและตีความได้" [2024-09] [กระดาษ]

Metamath : "Metamath: การรวมภาษาธรรมชาติและรหัสเพื่อการใช้เหตุผลทางคณิตศาสตร์ที่เพิ่มขึ้นในแบบจำลองภาษาขนาดใหญ่" [2024-09] [กระดาษ]

"Babelbench: มาตรฐาน OMNI สำหรับการวิเคราะห์รหัสที่ขับเคลื่อนด้วยรหัสของข้อมูลหลายรูปแบบและหลายโครงสร้าง" [2024-10] [กระดาษ]

Codesteer : "การควบคุมแบบจำลองภาษาขนาดใหญ่ระหว่างการดำเนินการรหัสและการใช้เหตุผลเชิงข้อความ" [2024-10] [กระดาษ]

MathCoder2 : "MathCoder2: การใช้เหตุผลทางคณิตศาสตร์ที่ดีขึ้นจากการเตรียมการอย่างต่อเนื่องเกี่ยวกับรหัสคณิตศาสตร์แบบแปลนแบบจำลอง" [2024-10] [กระดาษ]

LLMFP : "การวางแผนอะไรก็ตามที่มีความเข้มงวด: การวางแผนแบบศูนย์อเนกประสงค์ด้วยการเขียนโปรแกรมอย่างเป็นทางการที่ใช้ LLM" [2024-10] [กระดาษ]

พิสูจน์ว่า : "ไม่ได้รับคะแนนเสียงทั้งหมด!

พิสูจน์ว่า : "ความน่าเชื่อถือ แต่ตรวจสอบ: การประเมิน VLM แบบโปรแกรมในป่า" [2024-10] [กระดาษ]

Geocoder : "Geocoder: การแก้ปัญหาเรขาคณิตโดยการสร้างรหัสโมดูลาร์ผ่านแบบจำลอง Vision-Language" [2024-10] [กระดาษ]

เหตุผล : "เหตุผล: การใช้โปรแกรมสัญลักษณ์ที่สกัดได้เพื่อประเมินการใช้เหตุผลทางคณิตศาสตร์" [2024-10] [กระดาษ]

GFP : "การกระตุ้นการเติมช่องว่างช่วยเพิ่มการใช้เหตุผลทางคณิตศาสตร์ด้วยรหัส" [2024-11] [กระดาษ]

Utmath : "Utmath: การประเมินคณิตศาสตร์ด้วยการทดสอบหน่วยผ่านความคิดที่ให้เหตุผลถึงการเข้ารหัส" [2024-11] [กระดาษ]

COCOP : "COCOP: การเพิ่มการจำแนกประเภทข้อความด้วย LLM ผ่าน Promption Code Prompt" [2024-11] [กระดาษ]

REPL-PLAN : "การวางแผนการเสริมรหัสแบบโต้ตอบและการแสดงออกด้วยรูปแบบภาษาขนาดใหญ่" [2024-11] [กระดาษ]

"ความท้าทายในการจำลองรหัสสำหรับแบบจำลองภาษาขนาดใหญ่" [2024-01] [กระดาษ]

"CodeMind: กรอบการทำงานที่ท้าทายรูปแบบภาษาขนาดใหญ่สำหรับการให้เหตุผลรหัส" [2024-02] [กระดาษ]

"การดำเนินการอัลกอริทึมที่อธิบายภาษาธรรมชาติด้วยแบบจำลองภาษาขนาดใหญ่: การสอบสวน" [2024-02] [กระดาษ]

"โมเดลภาษาสามารถแกล้งทำเป็นแก้ปัญหารหัสลอจิกด้วย LLMS" [2024-03] [กระดาษ]

"การประเมินแบบจำลองภาษาขนาดใหญ่ที่มีพฤติกรรมรันไทม์ของการดำเนินการโปรแกรม" [2024-03] [กระดาษ]

"ถัดไป: การสอนแบบจำลองภาษาขนาดใหญ่เพื่อเหตุผลเกี่ยวกับการดำเนินการรหัส" [2024-04] [ICML 2024] [กระดาษ]

"Selfpico: การดำเนินการรหัสบางส่วนด้วยตนเองด้วย LLMS" [2024-07] [กระดาษ]

"แบบจำลองภาษาขนาดใหญ่เป็นผู้ดำเนินการรหัส: การศึกษาเชิงสำรวจ" [2024-10] [กระดาษ]

"VisualCoder: ชี้นำโมเดลภาษาขนาดใหญ่ในการดำเนินการรหัสด้วยการใช้เหตุผลหลายรูปแบบที่มีความคิดหลายรูปแบบ" [2024-10] [กระดาษ]

การรวบรวมตนเอง : "การสร้างรหัสการรวมตัวเองผ่าน chatgpt" [2023-04] [กระดาษ]

chatdev : "ตัวแทนสื่อสารเพื่อการพัฒนาซอฟต์แวร์" [2023-07] [กระดาษ] [repo]

MetAgpt : "MetAgpt: การเขียนโปรแกรมเมตาสำหรับกรอบการทำงานร่วมกันหลายตัวแทน" [2023-08] [กระดาษ] [repo]

Codechain : "Codechain: ไปสู่การสร้างรหัสแบบแยกส่วนผ่านห่วงโซ่การแก้ไขตนเองด้วยโมดูลย่อยตัวแทน" [2023-10] [ICLR 2024] [กระดาษ]

CodeAgent : "CodeAgent: การเพิ่มการสร้างรหัสด้วยระบบตัวแทนแบบรวมเครื่องมือสำหรับความท้าทายในการเข้ารหัสระดับ Repo ระดับโลก" [2024-01] [ACL 2024] [กระดาษ]

Conline : "Conline: การสร้างรหัสที่ซับซ้อนและการปรับแต่งด้วยการทดสอบการค้นหาออนไลน์และการทดสอบความถูกต้อง" [2024-03] [กระดาษ]

LCG : "เมื่อการสร้างรหัสที่ใช้ LLM เป็นไปตามกระบวนการพัฒนาซอฟต์แวร์" [2024-03] [กระดาษ]

repairagent : "repairagent: ตัวแทนอิสระ, LLM สำหรับการซ่อมแซมโปรแกรม" [2024-03] [กระดาษ]

Magis :: "Magis: กรอบหลายตัวแทนที่ใช้ LLM สำหรับการแก้ไขปัญหาปัญหา GitHub" [2024-03] [กระดาษ]

SOA : "ตัวแทนที่จัดระเบียบตนเอง: LLM Multi-Agent Framework ไปสู่การสร้างรหัสขนาดใหญ่พิเศษและการเพิ่มประสิทธิภาพ" [2024-04] [กระดาษ]

Autocoderover : "Autocoderover: การปรับปรุงโปรแกรมอัตโนมัติ" [2024-04] [กระดาษ]

SWE-Agent : "SWE-Agent: อินเตอร์เฟสตัวแทนคอมพิวเตอร์เปิดใช้งานวิศวกรรมซอฟต์แวร์อัตโนมัติ" [2024-05] [กระดาษ]

MAPCODER : "MAPCODER: การสร้างรหัสหลายตัวแทนสำหรับการแก้ปัญหาการแข่งขัน" [2024-05] [ACL 2024] [กระดาษ]

"Fight Fire With Fire: เราจะไว้วางใจ CHATGPT ได้มากแค่ไหนในงานที่เกี่ยวข้องกับรหัสแหล่งที่มา" [2024-05] [กระดาษ]

FunCoder : "Divide-and-Conquer Meets Consensus: Unleashing the Power of Functions in Code Generation" [2024-05] [paper]

CTC : "Multi-Agent Software Development through Cross-Team Collaboration" [2024-06] [paper]

MASAI : "MASAI: Modular Architecture for Software-engineering AI Agents" [2024-06] [paper]

AgileCoder : "AgileCoder: Dynamic Collaborative Agents for Software Development based on Agile Methodology" [2024-06] [paper]

CodeNav : "CodeNav: Beyond tool-use to using real-world codebases with LLM agents" [2024-06] [paper]

INDICT : "INDICT: Code Generation with Internal Dialogues of Critiques for Both Security and Helpfulness" [2024-06] [paper]

AppWorld : "AppWorld: A Controllable World of Apps and People for Benchmarking Interactive Coding Agents" [2024-07] [paper]

CortexCompile : "CortexCompile: Harnessing Cortical-Inspired Architectures for Enhanced Multi-Agent NLP Code Synthesis" [2024-08] [paper]

Survey : "Large Language Model-Based Agents for Software Engineering: A Survey" [2024-09] [paper]

AutoSafeCoder : "AutoSafeCoder: A Multi-Agent Framework for Securing LLM Code Generation through Static Analysis and Fuzz Testing" [2024-09] [paper]

SuperCoder2.0 : "SuperCoder2.0: Technical Report on Exploring the feasibility of LLMs as Autonomous Programmer" [2024-09] [paper]

Survey : "Agents in Software Engineering: Survey, Landscape, and Vision" [2024-09] [paper]

MOSS : "MOSS: Enabling Code-Driven Evolution and Context Management for AI Agents" [2024-09] [paper]

HyperAgent : "HyperAgent: Generalist Software Engineering Agents to Solve Coding Tasks at Scale" [2024-09] [paper]

"Compositional Hardness of Code in Large Language Models -- A Probabilistic Perspective" [2024-09] [paper]

RGD : "RGD: Multi-LLM Based Agent Debugger via Refinement and Generation Guidance" [2024-10] [paper]

AutoML-Agent : "AutoML-Agent: A Multi-Agent LLM Framework for Full-Pipeline AutoML" [2024-10] [paper]

Seeker : "Seeker: Enhancing Exception Handling in Code with LLM-based Multi-Agent Approach" [2024-10] [paper]

REDO : "REDO: Execution-Free Runtime Error Detection for COding Agents" [2024-10] [paper]

"Evaluating Software Development Agents: Patch Patterns, Code Quality, and Issue Complexity in Real-World GitHub Scenarios" [2024-10] [paper]

EvoMAC : "Self-Evolving Multi-Agent Collaboration Networks for Software Development" [2024-10] [paper]

VisionCoder : "VisionCoder: Empowering Multi-Agent Auto-Programming for Image Processing with Hybrid LLMs" [2024-10] [paper]

AutoKaggle : "AutoKaggle: A Multi-Agent Framework for Autonomous Data Science Competitions" [2024-10] [paper]

Watson : "Watson: A Cognitive Observability Framework for the Reasoning of Foundation Model-Powered Agents" [2024-11] [paper]

CodeTree : "CodeTree: Agent-guided Tree Search for Code Generation with Large Language Models" [2024-11] [paper]

EvoCoder : "LLMs as Continuous Learners: Improving the Reproduction of Defective Code in Software Issues" [2024-11] [paper]

"Interactive Program Synthesis" [2017-03] [paper]

"Question selection for interactive program synthesis" [2020-06] [PLDI 2020] [paper]

"Interactive Code Generation via Test-Driven User-Intent Formalization" [2022-08] [paper]

"Improving Code Generation by Training with Natural Language Feedback" [2023-03] [TMLR] [paper]

"Self-Refine: Iterative Refinement with Self-Feedback" [2023-03] [NeurIPS 2023] [paper]

"Teaching Large Language Models to Self-Debug" [2023-04] [paper]

"Self-Edit: Fault-Aware Code Editor for Code Generation" [2023-05] [ACL 2023] [paper]

"LeTI: Learning to Generate from Textual Interactions" [2023-05] [paper]

"Is Self-Repair a Silver Bullet for Code Generation?" [2023-06] [ICLR 2024] [paper]

"InterCode: Standardizing and Benchmarking Interactive Coding with Execution Feedback" [2023-06] [NeurIPS 2023] [paper]

"INTERVENOR: Prompting the Coding Ability of Large Language Models with the Interactive Chain of Repair" [2023-11] [ACL 2024 Findings] [paper]

"OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement" [2024-02] [ACL 2024 Findings] [paper]

"Iterative Refinement of Project-Level Code Context for Precise Code Generation with Compiler Feedback" [2024-03] [ACL 2024 Findings] [paper]

"CYCLE: Learning to Self-Refine the Code Generation" [2024-03] [paper]

"LLM-based Test-driven Interactive Code Generation: User Study and Empirical Evaluation" [2024-04] [paper]

"SOAP: Enhancing Efficiency of Generated Code via Self-Optimization" [2024-05] [paper]

"Code Repair with LLMs gives an Exploration-Exploitation Tradeoff" [2024-05] [paper]

"ReflectionCoder: Learning from Reflection Sequence for Enhanced One-off Code Generation" [2024-05] [paper]

"Training LLMs to Better Self-Debug and Explain Code" [2024-05] [paper]

"Requirements are All You Need: From Requirements to Code with LLMs" [2024-06] [paper]

"I Need Help! Evaluating LLM's Ability to Ask for Users' Support: A Case Study on Text-to-SQL Generation" [2024-07] [paper]

"An Empirical Study on Self-correcting Large Language Models for Data Science Code Generation" [2024-08] [paper]

"RethinkMCTS: Refining Erroneous Thoughts in Monte Carlo Tree Search for Code Generation" [2024-09] [paper]

"From Code to Correctness: Closing the Last Mile of Code Generation with Hierarchical Debugging" [2024-10] [paper] [repo]

"What Makes Large Language Models Reason in (Multi-Turn) Code Generation?" [2024-10] [paper]

"The First Prompt Counts the Most! An Evaluation of Large Language Models on Iterative Example-based Code Generation" [2024-11] [paper]

"Planning-Driven Programming: A Large Language Model Programming Workflow" [2024-11] [paper]

"ConAIR:Consistency-Augmented Iterative Interaction Framework to Enhance the Reliability of Code Generation" [2024-11] [paper]

"MarkupLM: Pre-training of Text and Markup Language for Visually-rich Document Understanding" [2021-10] [ACL 2022] [paper]

"WebKE: Knowledge Extraction from Semi-structured Web with Pre-trained Markup Language Model" [2021-10] [CIKM 2021] [paper]

"WebGPT: Browser-assisted question-answering with human feedback" [2021-12] [paper]

"CM3: A Causal Masked Multimodal Model of the Internet" [2022-01] [paper]

"DOM-LM: Learning Generalizable Representations for HTML Documents" [2022-01] [paper]

"WebFormer: The Web-page Transformer for Structure Information Extraction" [2022-02] [WWW 2022] [paper]

"A Dataset for Interactive Vision-Language Navigation with Unknown Command Feasibility" [2022-02] [ECCV 2022] [paper]

"WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents" [2022-07] [NeurIPS 2022] [paper]

"Pix2Struct: Screenshot Parsing as Pretraining for Visual Language Understanding" [2022-10] [ICML 2023] [paper]

"Understanding HTML with Large Language Models" [2022-10] [EMNLP 2023 findings] [paper]

"WebUI: A Dataset for Enhancing Visual UI Understanding with Web Semantics" [2023-01] [CHI 2023] [paper]

"Mind2Web: Towards a Generalist Agent for the Web" [2023-06] [NeurIPS 2023] [paper]

"A Real-World WebAgent with Planning, Long Context Understanding, and Program Synthesis", [2023-07] [ICLR 2024] [paper]

"WebArena: A Realistic Web Environment for Building Autonomous Agents" [2023-07] [paper]

"CogAgent: A Visual Language Model for GUI Agents" [2023-12] [paper]

"GPT-4V(ision) is a Generalist Web Agent, if Grounded" [2024-01] [paper]

"WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models" [2024-01] [paper]

"WebLINX: Real-World Website Navigation with Multi-Turn Dialogue" [2024-02] [paper]

"OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web" [2024-02] [paper]

"AutoWebGLM: Bootstrap And Reinforce A Large Language Model-based Web Navigating Agent" [2024-04] [paper]

"WILBUR: Adaptive In-Context Learning for Robust and Accurate Web Agents" [2024-04] [paper]

"AutoCrawler: A Progressive Understanding Web Agent for Web Crawler Generation" [2024-04] [paper]

"GUICourse: From General Vision Language Models to Versatile GUI Agents" [2024-06] [paper]

"NaviQAte: Functionality-Guided Web Application Navigation" [2024-09] [paper]

"MobileVLM: A Vision-Language Model for Better Intra- and Inter-UI Understanding" [2024-09] [paper]

"Multimodal Auto Validation For Self-Refinement in Web Agents" [2024-10] [paper]

"Navigating the Digital World as Humans Do: Universal Visual Grounding for GUI Agents" [2024-10] [paper]

"Web Agents with World Models: Learning and Leveraging Environment Dynamics in Web Navigation" [2024-10] [paper]

"Harnessing Webpage UIs for Text-Rich Visual Understanding" [2024-10] [paper]

"AgentOccam: A Simple Yet Strong Baseline for LLM-Based Web Agents" [2024-10] [paper]

"Beyond Browsing: API-Based Web Agents" [2024-10] [paper]

"Large Language Models Empowered Personalized Web Agents" [2024-10] [paper]

"AdvWeb: Controllable Black-box Attacks on VLM-powered Web Agents" [2024-10] [paper]

"Auto-Intent: Automated Intent Discovery and Self-Exploration for Large Language Model Web Agents" [2024-10] [paper]

"OS-ATLAS: A Foundation Action Model for Generalist GUI Agents" [2024-10] [paper]

"From Context to Action: Analysis of the Impact of State Representation and Context on the Generalization of Multi-Turn Web Navigation Agents" [2024-10] [paper]

"AutoGLM: Autonomous Foundation Agents for GUIs" [2024-10] [paper]

"WebRL: Training LLM Web Agents via Self-Evolving Online Curriculum Reinforcement Learning" [2024-11] [paper]

"The Dawn of GUI Agent: A Preliminary Case Study with Claude 3.5 Computer Use" [2024-11] [paper]

"ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data" [2024-11] [paper]

"ShowUI: One Vision-Language-Action Model for GUI Visual Agent" [2024-11] [paper]

[ Ruby ] "On the Transferability of Pre-trained Language Models for Low-Resource Programming Languages" [2022-04] [ICPC 2022] [paper]

[ Verilog ] "Benchmarking Large Language Models for Automated Verilog RTL Code Generation" [2022-12] [DATE 2023] [paper]

[ OCL ] "On Codex Prompt Engineering for OCL Generation: An Empirical Study" [2023-03] [MSR 2023] [paper]

[ Ansible-YAML ] "Automated Code generation for Information Technology Tasks in YAML through Large Language Models" [2023-05] [DAC 2023] [paper]

[ Hansl ] "The potential of LLMs for coding with low-resource and domain-specific programming languages" [2023-07] [paper]

[ Verilog ] "VeriGen: A Large Language Model for Verilog Code Generation" [2023-07] [paper]

[ Verilog ] "RTLLM: An Open-Source Benchmark for Design RTL Generation with Large Language Model" [2023-08] [paper]

[ Racket, OCaml, Lua, R, Julia ] "Knowledge Transfer from High-Resource to Low-Resource Programming Languages for Code LLMs" [2023-08] [paper]

[ Verilog ] "VerilogEval: Evaluating Large Language Models for Verilog Code Generation" [2023-09] [ICCAD 2023] [paper]

[ Verilog ] "RTLFixer: Automatically Fixing RTL Syntax Errors with Large Language Models" [2023-11] [paper]

[ Verilog ] "Advanced Large Language Model (LLM)-Driven Verilog Development: Enhancing Power, Performance, and Area Optimization in Code Synthesis" [2023-12] [paper]

[ Verilog ] "RTLCoder: Outperforming GPT-3.5 in Design RTL Generation with Our Open-Source Dataset and Lightweight Solution" [2023-12] [paper]

[ Verilog ] "BetterV: Controlled Verilog Generation with Discriminative Guidance" [2024-02] [ICML 2024] [paper]

[ R ] "Empirical Studies of Parameter Efficient Methods for Large Language Models of Code and Knowledge Transfer to R" [2024-03] [paper]

[ Haskell ] "Investigating the Performance of Language Models for Completing Code in Functional Programming Languages: a Haskell Case Study" [2024-03] [paper]

[ Verilog ] "A Multi-Expert Large Language Model Architecture for Verilog Code Generation" [2024-04] [paper]

[ Verilog ] "CreativEval: Evaluating Creativity of LLM-Based Hardware Code Generation" [2024-04] [paper]

[ Alloy ] "An Empirical Evaluation of Pre-trained Large Language Models for Repairing Declarative Formal Specifications" [2024-04] [paper]

[ Verilog ] "Evaluating LLMs for Hardware Design and Test" [2024-04] [paper]

[ Kotlin, Swift, and Rust ] "Software Vulnerability Prediction in Low-Resource Languages: An Empirical Study of CodeBERT and ChatGPT" [2024-04] [paper]

[ Verilog ] "MEIC: Re-thinking RTL Debug Automation using LLMs" [2024-05] [paper]

[ Bash ] "Tackling Execution-Based Evaluation for NL2Bash" [2024-05] [paper]

[ Fortran, Julia, Matlab, R, Rust ] "Evaluating AI-generated code for C++, Fortran, Go, Java, Julia, Matlab, Python, R, and Rust" [2024-05] [paper]

[ OpenAPI ] "Optimizing Large Language Models for OpenAPI Code Completion" [2024-05] [paper]

[ Kotlin ] "Kotlin ML Pack: Technical Report" [2024-05] [paper]

[ Verilog ] "VerilogReader: LLM-Aided Hardware Test Generation" [2024-06] [paper]

"Benchmarking Generative Models on Computational Thinking Tests in Elementary Visual Programming" [2024-06] [paper]

[ Logo ] "Program Synthesis Benchmark for Visual Programming in XLogoOnline Environment" [2024-06] [paper]

[ Ansible YAML, Bash ] "DocCGen: Document-based Controlled Code Generation" [2024-06] [paper]

[ Qiskit ] "Qiskit HumanEval: An Evaluation Benchmark For Quantum Code Generative Models" [2024-06] [paper]

[ Perl, Golang, Swift ] "DistiLRR: Transferring Code Repair for Low-Resource Programming Languages" [2024-06] [paper]

[ Verilog ] "AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation" [2024-06] [paper]

"A Comparative Study of DSL Code Generation: Fine-Tuning vs. Optimized Retrieval Augmentation" [2024-07] [paper]

[ Json, XLM, YAML ] "ConCodeEval: Evaluating Large Language Models for Code Constraints in Domain-Specific Languages" [2024-07] [paper]

[ Verilog ] "AutoBench: Automatic Testbench Generation and Evaluation Using LLMs for HDL Design" [2024-07] [paper]

[ Verilog ] "CodeV: Empowering LLMs for Verilog Generation through Multi-Level Summarization" [2024-07] [paper]

[ Verilog ] "ITERTL: An Iterative Framework for Fine-tuning LLMs for RTL Code Generation" [2024-07] [paper]

[ Verilog ] "OriGen:Enhancing RTL Code Generation with Code-to-Code Augmentation and Self-Reflection" [2024-07] [paper]

[ Verilog ] "Large Language Model for Verilog Generation with Golden Code Feedback" [2024-07] [paper]

[ Verilog ] "AutoVCoder: A Systematic Framework for Automated Verilog Code Generation using LLMs" [2024-07] [paper]

[ RPA ] "Plan with Code: Comparing approaches for robust NL to DSL generation" [2024-08] [paper]

[ Verilog ] "VerilogCoder: Autonomous Verilog Coding Agents with Graph-based Planning and Abstract Syntax Tree (AST)-based Waveform Tracing Tool" [2024-08] [paper]

[ Verilog ] "Revisiting VerilogEval: Newer LLMs, In-Context Learning, and Specification-to-RTL Tasks" [2024-08] [paper]

[ MaxMSP, Web Audio ] "Benchmarking LLM Code Generation for Audio Programming with Visual Dataflow Languages" [2024-09] [paper]

[ Verilog ] "RTLRewriter: Methodologies for Large Models aided RTL Code Optimization" [2024-09] [paper]

[ Verilog ] "CraftRTL: High-quality Synthetic Data Generation for Verilog Code Models with Correct-by-Construction Non-Textual Representations and Targeted Code Repair" [2024-09] [paper]

[ Bash ] "ScriptSmith: A Unified LLM Framework for Enhancing IT Operations via Automated Bash Script Generation, Assessment, and Refinement" [2024-09] [paper]

[ Survey ] "Survey on Code Generation for Low resource and Domain Specific Programming Languages" [2024-10] [paper]

[ R ] "Do Current Language Models Support Code Intelligence for R Programming Language?" [2024-10] [paper]

"Can Large Language Models Generate Geospatial Code?" [2024-10] [paper]

[ PLC ] "Agents4PLC: Automating Closed-loop PLC Code Generation and Verification in Industrial Control Systems using LLM-based Agents" [2024-10] [paper]

[ Lua ] "Evaluating Quantized Large Language Models for Code Generation on Low-Resource Language Benchmarks" [2024-10] [paper]

"Improving Parallel Program Performance Through DSL-Driven Code Generation with LLM Optimizers" [2024-10] [paper]

"GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks" [2024-10] [paper]

[ R, D, Racket, Bash ]: "Bridge-Coder: Unlocking LLMs' Potential to Overcome Language Gaps in Low-Resource Code" [2024-10] [paper]

[ SPICE ]: "SPICEPilot: Navigating SPICE Code Generation and Simulation with AI Guidance" [2024-10] [paper]

[ IEC 61131-3 ST ]: "Training LLMs for Generating IEC 61131-3 Structured Text with Online Feedback" [2024-10] [paper]

[ Verilog ] "MetRex: A Benchmark for Verilog Code Metric Reasoning Using LLMs" [2024-11] [paper]

[ Verilog ] "CorrectBench: Automatic Testbench Generation with Functional Self-Correction using LLMs for HDL Design" [2024-11] [paper]

[ MUMPS, ALC ] "Leveraging LLMs for Legacy Code Modernization: Challenges and Opportunities for LLM-Generated Documentation" [2024-11] [paper]

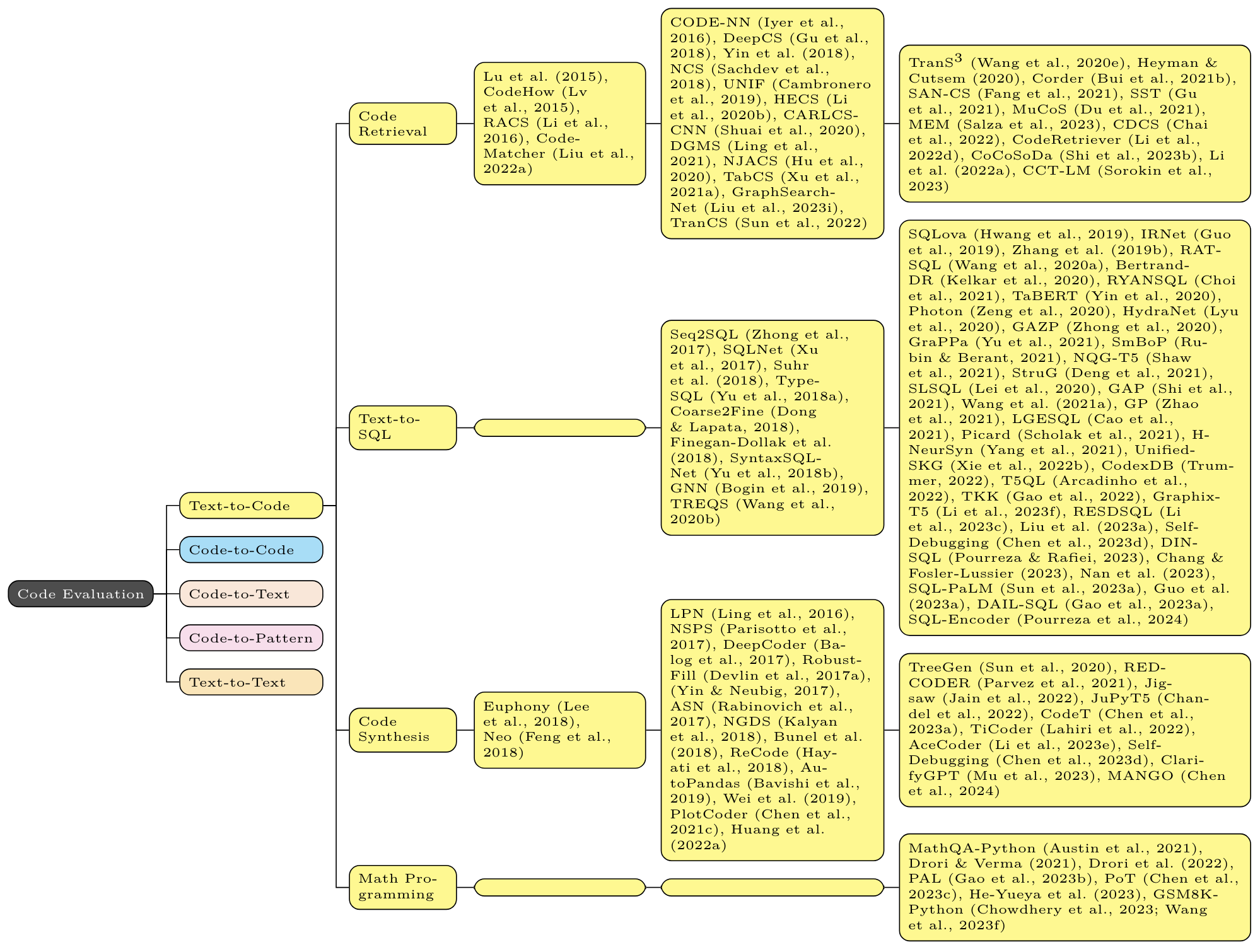

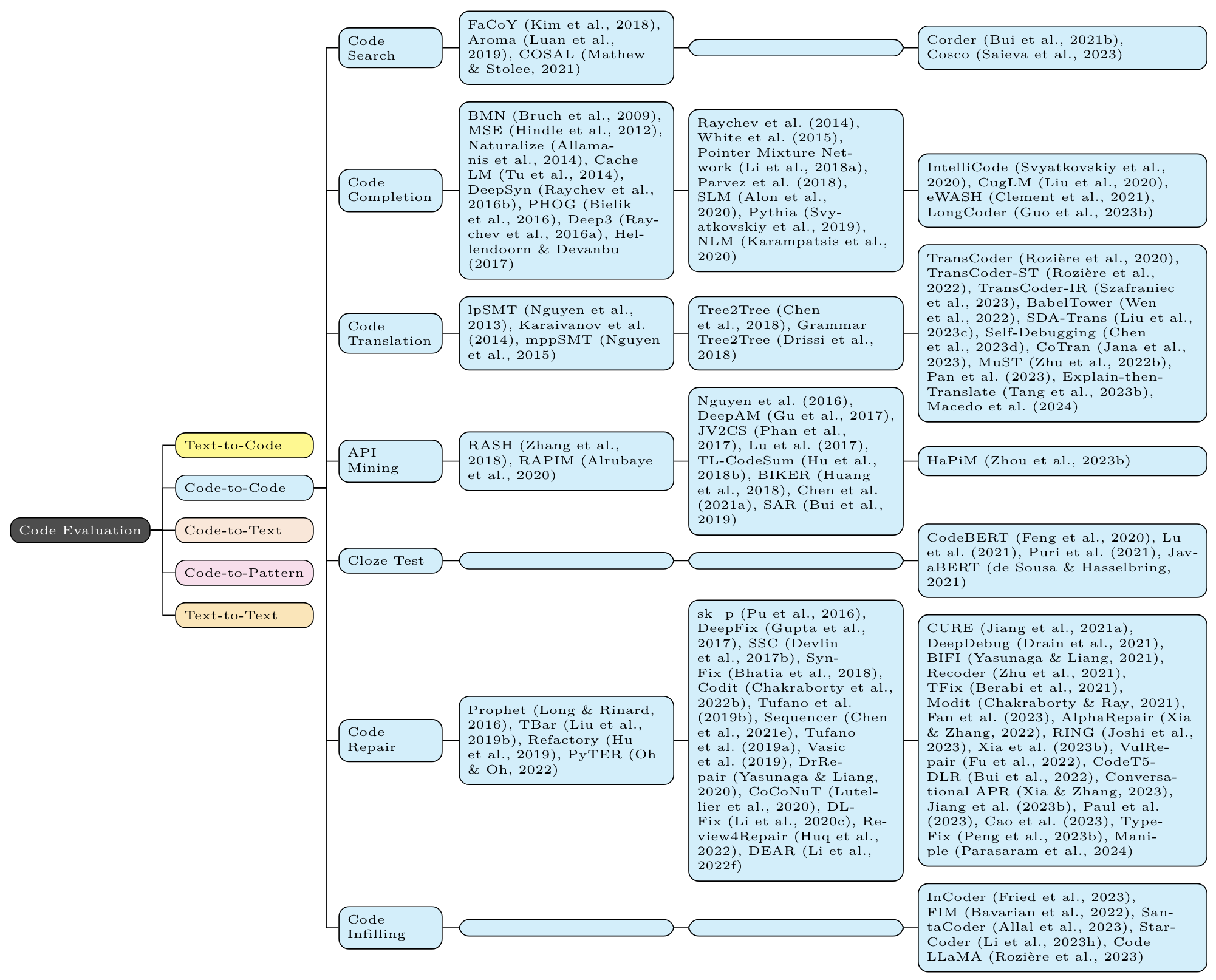

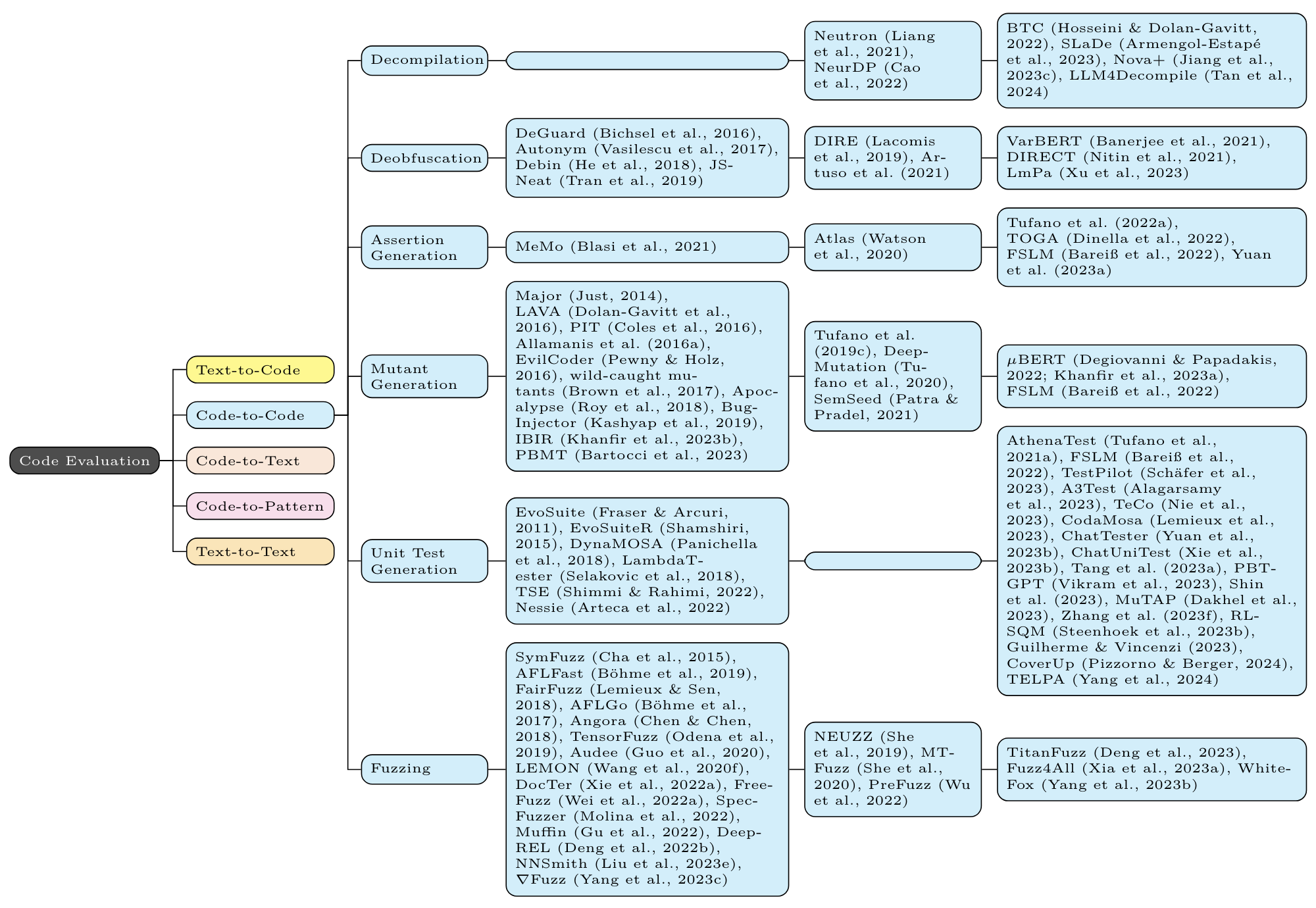

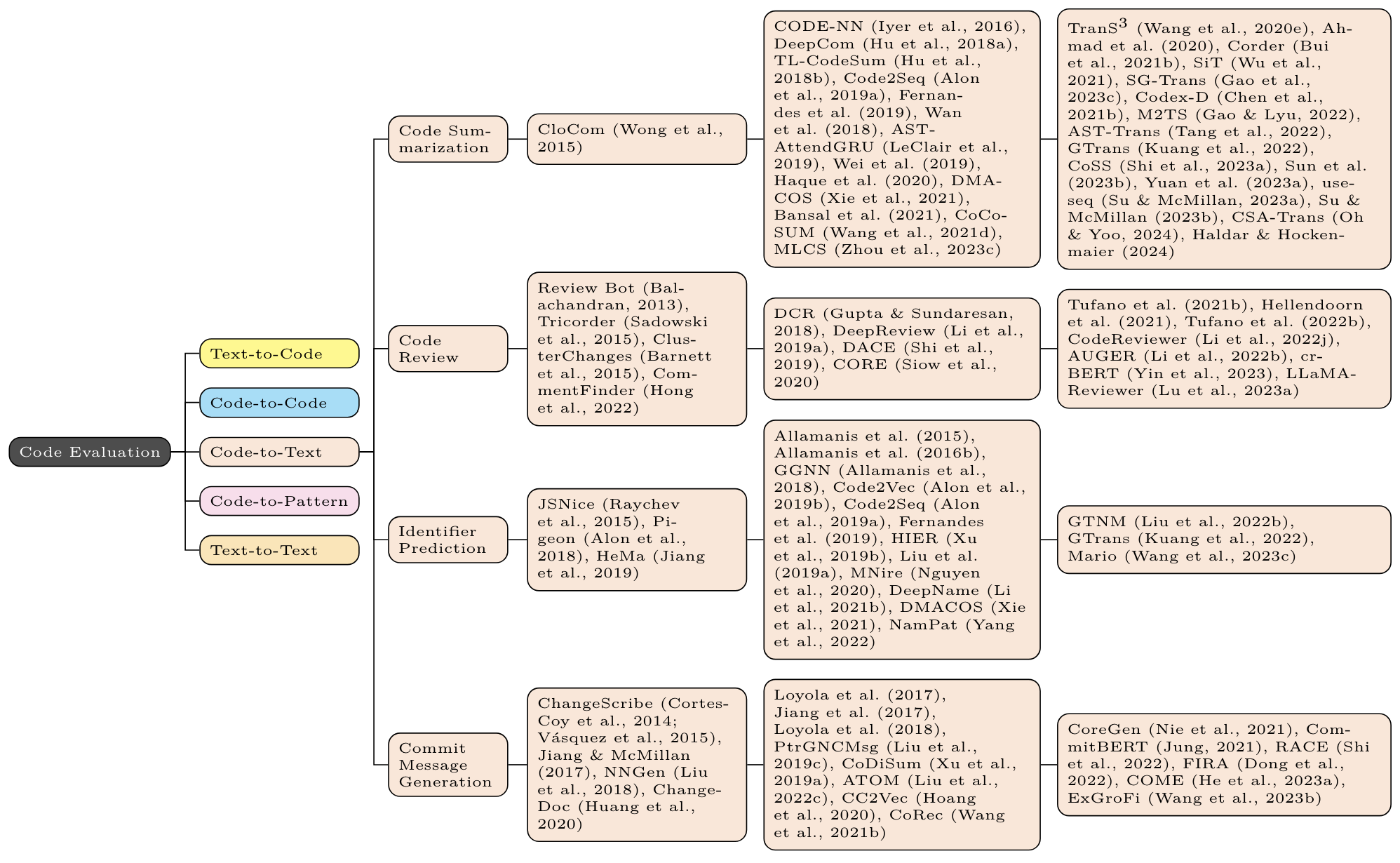

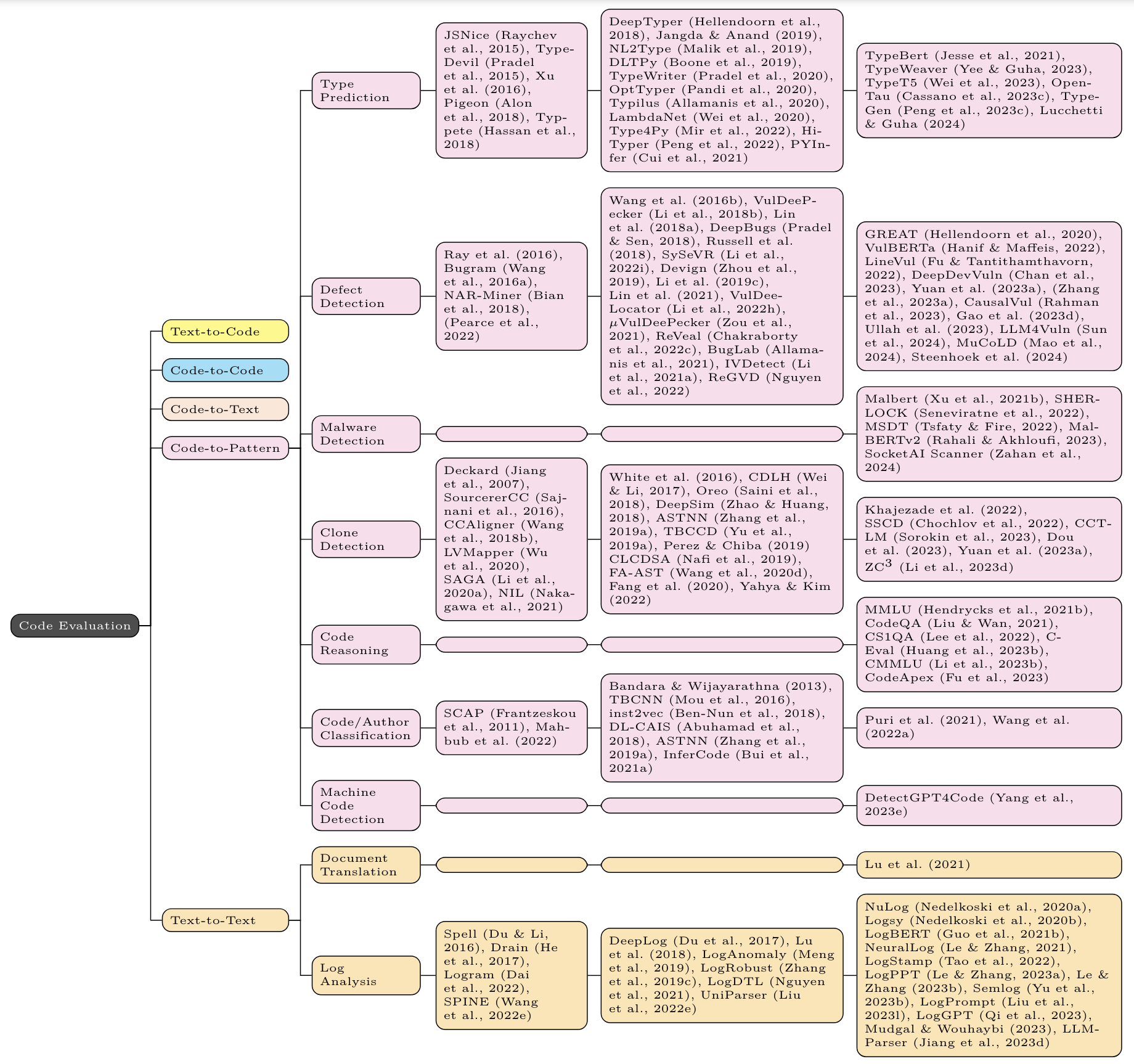

For each task, the first column contains non-neural methods (eg n-gram, TF-IDF, and (occasionally) static program analysis); the second column contains non-Transformer neural methods (eg LSTM, CNN, GNN); the third column contains Transformer based methods (eg BERT, GPT, T5).

"Enhancing Large Language Models in Coding Through Multi-Perspective Self-Consistency" [2023-09] [ACL 2024] [paper]

"Self-Infilling Code Generation" [2023-11] [ICML 2024] [paper]

"JumpCoder: Go Beyond Autoregressive Coder via Online Modification" [2024-01] [ACL 2024] [paper]

"Unsupervised Evaluation of Code LLMs with Round-Trip Correctness" [2024-02] [ICML 2024] [paper]

"The Larger the Better? Improved LLM Code-Generation via Budget Reallocation" [2024-03] [paper]

"Quantifying Contamination in Evaluating Code Generation Capabilities of Language Models" [2024-03] [ACL 2024] [paper]

"Comments as Natural Logic Pivots: Improve Code Generation via Comment Perspective" [2024-04] [ACL 2024 Findings] [paper]

"Distilling Algorithmic Reasoning from LLMs via Explaining Solution Programs" [2024-04] [paper]

"Quality Assessment of Prompts Used in Code Generation" [2024-04] [paper]

"Assessing GPT-4-Vision's Capabilities in UML-Based Code Generation" [2024-04] [paper]

"Large Language Models Synergize with Automated Machine Learning" [2024-05] [paper]

"Model Cascading for Code: Reducing Inference Costs with Model Cascading for LLM Based Code Generation" [2024-05] [paper]

"A Survey on Large Language Models for Code Generation" [2024-06] [paper]

"Is Programming by Example solved by LLMs?" [2024-06] [paper]

"Benchmarks and Metrics for Evaluations of Code Generation: A Critical Review" [2024-06] [paper]

"MPCODER: Multi-user Personalized Code Generator with Explicit and Implicit Style Representation Learning" [2024-06] [ACL 2024] [paper]

"Revisiting the Impact of Pursuing Modularity for Code Generation" [2024-07] [paper]

"Evaluating Long Range Dependency Handling in Code Generation Models using Multi-Step Key Retrieval" [2024-07] [paper]

"When to Stop? Towards Efficient Code Generation in LLMs with Excess Token Prevention" [2024-07] [paper]

"Assessing Programming Task Difficulty for Efficient Evaluation of Large Language Models" [2024-07] [paper]

"ArchCode: Incorporating Software Requirements in Code Generation with Large Language Models" [2024-08] [ACL 2024] [paper]

"Fine-tuning Language Models for Joint Rewriting and Completion of Code with Potential Bugs" [2024-08] [ACL 2024 Findings] [paper]

"Selective Prompt Anchoring for Code Generation" [2024-08] [paper]

"Bridging the Language Gap: Enhancing Multilingual Prompt-Based Code Generation in LLMs via Zero-Shot Cross-Lingual Transfer" [2024-08] [paper]

"Optimizing Large Language Model Hyperparameters for Code Generation" [2024-08] [paper]

"EPiC: Cost-effective Search-based Prompt Engineering of LLMs for Code Generation" [2024-08] [paper]

"CodeRefine: A Pipeline for Enhancing LLM-Generated Code Implementations of Research Papers" [2024-08] [paper]

"No Man is an Island: Towards Fully Automatic Programming by Code Search, Code Generation and Program Repair" [2024-09] [paper]

"Planning In Natural Language Improves LLM Search For Code Generation" [2024-09] [paper]

"Multi-Programming Language Ensemble for Code Generation in Large Language Model" [2024-09] [paper]

"A Pair Programming Framework for Code Generation via Multi-Plan Exploration and Feedback-Driven Refinement" [2024-09] [paper]

"USCD: Improving Code Generation of LLMs by Uncertainty-Aware Selective Contrastive Decoding" [2024-09] [paper]

"Eliciting Instruction-tuned Code Language Models' Capabilities to Utilize Auxiliary Function for Code Generation" [2024-09] [paper]

"Selection of Prompt Engineering Techniques for Code Generation through Predicting Code Complexity" [2024-09] [paper]

"Horizon-Length Prediction: Advancing Fill-in-the-Middle Capabilities for Code Generation with Lookahead Planning" [2024-10] [paper]

"Showing LLM-Generated Code Selectively Based on Confidence of LLMs" [2024-10] [paper]

"AutoFeedback: An LLM-based Framework for Efficient and Accurate API Request Generation" [2024-10] [paper]

"Enhancing LLM Agents for Code Generation with Possibility and Pass-rate Prioritized Experience Replay" [2024-10] [paper]

"From Solitary Directives to Interactive Encouragement! LLM Secure Code Generation by Natural Language Prompting" [2024-10] [paper]

"Self-Explained Keywords Empower Large Language Models for Code Generation" [2024-10] [paper]

"Context-Augmented Code Generation Using Programming Knowledge Graphs" [2024-10] [paper]

"In-Context Code-Text Learning for Bimodal Software Engineering" [2024-10] [paper]

"Combining LLM Code Generation with Formal Specifications and Reactive Program Synthesis" [2024-10] [paper]

"Less is More: DocString Compression in Code Generation" [2024-10] [paper]

"Multi-Programming Language Sandbox for LLMs" [2024-10] [paper]

"Personality-Guided Code Generation Using Large Language Models" [2024-10] [paper]

"Do Advanced Language Models Eliminate the Need for Prompt Engineering in Software Engineering?" [2024-11] [paper]

"Scattered Forest Search: Smarter Code Space Exploration with LLMs" [2024-11] [paper]

"Anchor Attention, Small Cache: Code Generation with Large Language Models" [2024-11] [paper]

"ROCODE: Integrating Backtracking Mechanism and Program Analysis in Large Language Models for Code Generation" [2024-11] [paper]

"SRA-MCTS: Self-driven Reasoning Aurmentation with Monte Carlo Tree Search for Enhanced Code Generation" [2024-11] [paper]

"CodeGRAG: Extracting Composed Syntax Graphs for Retrieval Augmented Cross-Lingual Code Generation" [2024-05] [paper]

"Prompt-based Code Completion via Multi-Retrieval Augmented Generation" [2024-05] [paper]

"A Lightweight Framework for Adaptive Retrieval In Code Completion With Critique Model" [2024-06] [papaer]

"Preference-Guided Refactored Tuning for Retrieval Augmented Code Generation" [2024-09] [paper]

"Building A Coding Assistant via the Retrieval-Augmented Language Model" [2024-10] [paper]

"DroidCoder: Enhanced Android Code Completion with Context-Enriched Retrieval-Augmented Generation" [2024-10] [ASE 2024] [paper]

"Assessing the Answerability of Queries in Retrieval-Augmented Code Generation" [2024-11] [paper]

"Fault-Aware Neural Code Rankers" [2022-06] [NeurIPS 2022] [paper]

"Functional Overlap Reranking for Neural Code Generation" [2023-10] [ACL 2024 Findings] [paper]

"Top Pass: Improve Code Generation by Pass@k-Maximized Code Ranking" [2024-08] [paper]

"DOCE: Finding the Sweet Spot for Execution-Based Code Generation" [2024-08] [paper]

"Sifting through the Chaff: On Utilizing Execution Feedback for Ranking the Generated Code Candidates" [2024-08] [paper]

"B4: Towards Optimal Assessment of Plausible Code Solutions with Plausible Tests" [2024-09] [paper]

"Learning Code Preference via Synthetic Evolution" [2024-10] [paper]

"Tree-to-tree Neural Networks for Program Translation" [2018-02] [NeurIPS 2018] [paper]

"Program Language Translation Using a Grammar-Driven Tree-to-Tree Model" [2018-07] [paper]

"Unsupervised Translation of Programming Languages" [2020-06] [NeurIPS 2020] [paper]

"Leveraging Automated Unit Tests for Unsupervised Code Translation" [2021-10] [ICLR 2022] paper]

"Code Translation with Compiler Representations" [2022-06] [ICLR 2023] [paper]

"Multilingual Code Snippets Training for Program Translation" [2022-06] [AAAI 2022] [paper]

"BabelTower: Learning to Auto-parallelized Program Translation" [2022-07] [ICML 2022] [paper]

"Syntax and Domain Aware Model for Unsupervised Program Translation" [2023-02] [ICSE 2023] [paper]

"CoTran: An LLM-based Code Translator using Reinforcement Learning with Feedback from Compiler and Symbolic Execution" [2023-06] [paper]

"Lost in Translation: A Study of Bugs Introduced by Large Language Models while Translating Code" [2023-08] [ICSE 2024] [paper]

"On the Evaluation of Neural Code Translation: Taxonomy and Benchmark", 2023-08, ASE 2023, [paper]

"Program Translation via Code Distillation" [2023-10] [EMNLP 2023] [paper]

"Explain-then-Translate: An Analysis on Improving Program Translation with Self-generated Explanations" [2023-11] [EMNLP 2023 Findings] [paper]

"Exploring the Impact of the Output Format on the Evaluation of Large Language Models for Code Translation" [2024-03] [paper]

"Exploring and Unleashing the Power of Large Language Models in Automated Code Translation" [2024-04] [paper]

"VERT: Verified Equivalent Rust Transpilation with Few-Shot Learning" [2024-04] [paper]

"Towards Translating Real-World Code with LLMs: A Study of Translating to Rust" [2024-05] [paper]

"An interpretable error correction method for enhancing code-to-code translation" [2024-05] [ICLR 2024] [paper]

"LASSI: An LLM-based Automated Self-Correcting Pipeline for Translating Parallel Scientific Codes" [2024-06] [paper]

"Rectifier: Code Translation with Corrector via LLMs" [2024-07] [paper]

"Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation" [2024-07] [paper]

"A Joint Learning Model with Variational Interaction for Multilingual Program Translation" [2024-08] [paper]

"Automatic Library Migration Using Large Language Models: First Results" [2024-08] [paper]

"Context-aware Code Segmentation for C-to-Rust Translation using Large Language Models" [2024-09] [paper]

"TRANSAGENT: An LLM-Based Multi-Agent System for Code Translation" [2024-10] [paper]

"Unraveling the Potential of Large Language Models in Code Translation: How Far Are We?" [2024-10] [paper]

"CodeRosetta: Pushing the Boundaries of Unsupervised Code Translation for Parallel Programming" [2024-10] [paper]

"A test-free semantic mistakes localization framework in Neural Code Translation" [2024-10] [paper]

"Repository-Level Compositional Code Translation and Validation" [2024-10] [paper]

"Leveraging Large Language Models for Code Translation and Software Development in Scientific Computing" [2024-10] [paper]

"InterTrans: Leveraging Transitive Intermediate Translations to Enhance LLM-based Code Translation" [2024-11] [paper]

"Translating C To Rust: Lessons from a User Study" [2024-11] [paper]

"A Transformer-based Approach for Source Code Summarization" [2020-05] [ACL 2020] [paper]

"Code Summarization with Structure-induced Transformer" [2020-12] [ACL 2021 Findings] [paper]

"Code Structure Guided Transformer for Source Code Summarization" [2021-04] [ACM TSEM] [paper]

"M2TS: Multi-Scale Multi-Modal Approach Based on Transformer for Source Code Summarization" [2022-03] [ICPC 2022] [paper]

"AST-trans: code summarization with efficient tree-structured attention" [2022-05] [ICSE 2022] [paper]

"CoSS: Leveraging Statement Semantics for Code Summarization" [2023-03] [IEEE TSE] [paper]

"Automatic Code Summarization via ChatGPT: How Far Are We?" [2023-05] [paper]

"Semantic Similarity Loss for Neural Source Code Summarization" [2023-08] [paper]

"Distilled GPT for Source Code Summarization" [2023-08] [ASE] [paper]

"CSA-Trans: Code Structure Aware Transformer for AST" [2024-04] [paper]

"Analyzing the Performance of Large Language Models on Code Summarization" [2024-04] [paper]

"Enhancing Trust in LLM-Generated Code Summaries with Calibrated Confidence Scores" [2024-04] [paper]

"DocuMint: Docstring Generation for Python using Small Language Models" [2024-05] [paper] [repo]

"Natural Is The Best: Model-Agnostic Code Simplification for Pre-trained Large Language Models" [2024-05] [paper]

"Large Language Models for Code Summarization" [2024-05] [paper]

"Exploring the Efficacy of Large Language Models (GPT-4) in Binary Reverse Engineering" [2024-06] [paper]

"Identifying Inaccurate Descriptions in LLM-generated Code Comments via Test Execution" [2024-06] [paper]

"MALSIGHT: Exploring Malicious Source Code and Benign Pseudocode for Iterative Binary Malware Summarization" [2024-06] [paper]

"ESALE: Enhancing Code-Summary Alignment Learning for Source Code Summarization" [2024-07] [paper]

"Source Code Summarization in the Era of Large Language Models" [2024-07] [paper]

"Natural Language Outlines for Code: Literate Programming in the LLM Era" [2024-08] [paper]

"Context-aware Code Summary Generation" [2024-08] [paper]

"AUTOGENICS: Automated Generation of Context-Aware Inline Comments for Code Snippets on Programming Q&A Sites Using LLM" [2024-08] [paper]

"LLMs as Evaluators: A Novel Approach to Evaluate Bug Report Summarization" [2024-09] [paper]

"Evaluating the Quality of Code Comments Generated by Large Language Models for Novice Programmers" [2024-09] [paper]

"Generating Equivalent Representations of Code By A Self-Reflection Approach" [2024-10] [paper]

"A review of automatic source code summarization" [2024-10] [Empirical Software Engineering] [paper]

"DeepDebug: Fixing Python Bugs Using Stack Traces, Backtranslation, and Code Skeletons" [2021-05] [paper]

"Break-It-Fix-It: Unsupervised Learning for Program Repair" [2021-06] [ICML 2021] [paper]

"TFix: Learning to Fix Coding Errors with a Text-to-Text Transformer" [2021-07] [ICML 2021] [paper]

"Automated Repair of Programs from Large Language Models" [2022-05] [ICSE 2023] [paper]

"Less Training, More Repairing Please: Revisiting Automated Program Repair via Zero-shot Learning" [2022-07] [ESEC/FSE 2022] [paper]

"Repair Is Nearly Generation: Multilingual Program Repair with LLMs" [2022-08] [AAAI 2023] [paper]

"Practical Program Repair in the Era of Large Pre-trained Language Models" [2022-10] [paper]

"VulRepair: a T5-based automated software vulnerability repair" [2022-11] [ESEC/FSE 2022] [paper]

"Conversational Automated Program Repair" [2023-01] [paper]

"Impact of Code Language Models on Automated Program Repair" [2023-02] [ICSE 2023] [paper]

"InferFix: End-to-End Program Repair with LLMs" [2023-03] [ESEC/FSE 2023] [paper]

"Enhancing Automated Program Repair through Fine-tuning and Prompt Engineering" [2023-04] [paper]

"A study on Prompt Design, Advantages and Limitations of ChatGPT for Deep Learning Program Repair" [2023-04] [paper]

"Domain Knowledge Matters: Improving Prompts with Fix Templates for Repairing Python Type Errors" [2023-06] [ICSE 2024] [paper]

"RepairLLaMA: Efficient Representations and Fine-Tuned Adapters for Program Repair" [2023-12] [paper]

"The Fact Selection Problem in LLM-Based Program Repair" [2024-04] [paper]

"Aligning LLMs for FL-free Program Repair" [2024-04] [paper]

"A Deep Dive into Large Language Models for Automated Bug Localization and Repair" [2024-04] [paper]

"Multi-Objective Fine-Tuning for Enhanced Program Repair with LLMs" [2024-04] [paper]

"How Far Can We Go with Practical Function-Level Program Repair?" [2024-04] [paper]

"Revisiting Unnaturalness for Automated Program Repair in the Era of Large Language Models" [2024-04] [paper]

"A Unified Debugging Approach via LLM-Based Multi-Agent Synergy" [2024-04] [paper]

"A Systematic Literature Review on Large Language Models for Automated Program Repair" [2024-05] [paper]

"NAVRepair: Node-type Aware C/C++ Code Vulnerability Repair" [2024-05] [paper]

"Automated Program Repair: Emerging trends pose and expose problems for benchmarks" [2024-05] [paper]

"Automated Repair of AI Code with Large Language Models and Formal Verification" [2024-05] [paper]

"A Case Study of LLM for Automated Vulnerability Repair: Assessing Impact of Reasoning and Patch Validation Feedback" [2024-05] [paper]

"CREF: An LLM-based Conversational Software Repair Framework for Programming Tutors" [2024-06] [paper]

"Towards Practical and Useful Automated Program Repair for Debugging" [2024-07] [paper]

"ThinkRepair: Self-Directed Automated Program Repair" [2024-07] [paper]

"MergeRepair: An Exploratory Study on Merging Task-Specific Adapters in Code LLMs for Automated Program Repair" [2024-08] [paper]

"RePair: Automated Program Repair with Process-based Feedback" [2024-08] [ACL 2024 Findings] [paper]

"Enhancing LLM-Based Automated Program Repair with Design Rationales" [2024-08] [paper]

"Automated Software Vulnerability Patching using Large Language Models" [2024-08] [paper]

"Enhancing Source Code Security with LLMs: Demystifying The Challenges and Generating Reliable Repairs" [2024-09] [paper]

"MarsCode Agent: AI-native Automated Bug Fixing" [2024-09] [paper]

"Co-Learning: Code Learning for Multi-Agent Reinforcement Collaborative Framework with Conversational Natural Language Interfaces" [2024-09] [paper]

"Debugging with Open-Source Large Language Models: An Evaluation" [2024-09] [paper]

"VulnLLMEval: A Framework for Evaluating Large Language Models in Software Vulnerability Detection and Patching" [2024-09] [paper]

"ContractTinker: LLM-Empowered Vulnerability Repair for Real-World Smart Contracts" [2024-09] [paper]

"Can GPT-O1 Kill All Bugs? An Evaluation of GPT-Family LLMs on QuixBugs" [2024-09] [paper]

"Exploring and Lifting the Robustness of LLM-powered Automated Program Repair with Metamorphic Testing" [2024-10] [paper]

"LecPrompt: A Prompt-based Approach for Logical Error Correction with CodeBERT" [2024-10] [paper]

"Semantic-guided Search for Efficient Program Repair with Large Language Models" [2024-10] [paper]

"A Comprehensive Survey of AI-Driven Advancements and Techniques in Automated Program Repair and Code Generation" [2024-11] [paper]

"Self-Supervised Contrastive Learning for Code Retrieval and Summarization via Semantic-Preserving Transformations" [2020-09] [SIGIR 2021] [paper]

"REINFOREST: Reinforcing Semantic Code Similarity for Cross-Lingual Code Search Models" [2023-05] [paper]

"Rewriting the Code: A Simple Method for Large Language Model Augmented Code Search" [2024-01] [ACL 2024] [paper]

"Revisiting Code Similarity Evaluation with Abstract Syntax Tree Edit Distance" [2024-04] [ACL 2024 short] [paper]

"Is Next Token Prediction Sufficient for GPT? Exploration on Code Logic Comprehension" [2024-04] [paper]

"Refining Joint Text and Source Code Embeddings for Retrieval Task with Parameter-Efficient Fine-Tuning" [2024-05] [paper]

"Typhon: Automatic Recommendation of Relevant Code Cells in Jupyter Notebooks" [2024-05] [paper]

"Toward Exploring the Code Understanding Capabilities of Pre-trained Code Generation Models" [2024-06] [paper]

"Aligning Programming Language and Natural Language: Exploring Design Choices in Multi-Modal Transformer-Based Embedding for Bug Localization" [2024-06] [paper]

"Assessing the Code Clone Detection Capability of Large Language Models" [2024-07] [paper]

"CodeCSE: A Simple Multilingual Model for Code and Comment Sentence Embeddings" [2024-07] [paper]

"Large Language Models for cross-language code clone detection" [2024-08] [paper]

"Coding-PTMs: How to Find Optimal Code Pre-trained Models for Code Embedding in Vulnerability Detection?" [2024-08] [paper]

"You Augment Me: Exploring ChatGPT-based Data Augmentation for Semantic Code Search" [2024-08] [paper]

"Improving Source Code Similarity Detection Through GraphCodeBERT and Integration of Additional Features" [2024-08] [paper]

"LLM Agents Improve Semantic Code Search" [2024-08] [paper]

"zsLLMCode: An Effective Approach for Functional Code Embedding via LLM with Zero-Shot Learning" [2024-09] [paper]

"Exploring Demonstration Retrievers in RAG for Coding Tasks: Yeas and Nays!" [2024-10] [paper]

"Instructive Code Retriever: Learn from Large Language Model's Feedback for Code Intelligence Tasks" [2024-10] [paper]

"Binary Code Similarity Detection via Graph Contrastive Learning on Intermediate Representations" [2024-10] [paper]

"Are Decoder-Only Large Language Models the Silver Bullet for Code Search?" [2024-10] [paper]

"CodeXEmbed: A Generalist Embedding Model Family for Multiligual and Multi-task Code Retrieval" [2024-11] [paper]

"CodeSAM: Source Code Representation Learning by Infusing Self-Attention with Multi-Code-View Graphs" [2024-11] [paper]

"EnStack: An Ensemble Stacking Framework of Large Language Models for Enhanced Vulnerability Detection in Source Code" [2024-11] [paper]

"Isotropy Matters: Soft-ZCA Whitening of Embeddings for Semantic Code Search" [2024-11] [paper]

"An Empirical Study on the Code Refactoring Capability of Large Language Models" [2024-11] [paper]

"Automated Update of Android Deprecated API Usages with Large Language Models" [2024-11] [paper]

"An Empirical Study on the Potential of LLMs in Automated Software Refactoring" [2024-11] [paper]

"CODECLEANER: Elevating Standards with A Robust Data Contamination Mitigation Toolkit" [2024-11] [paper]

"Instruct or Interact? Exploring and Eliciting LLMs' Capability in Code Snippet Adaptation Through Prompt Engineering" [2024-11] [paper]

"Learning type annotation: is big data enough?" [2021-08] [ESEC/FSE 2021] [paper]

"Do Machine Learning Models Produce TypeScript Types That Type Check?" [2023-02] [ECOOP 2023] [paper]

"TypeT5: Seq2seq Type Inference using Static Analysis" [2023-03] [ICLR 2023] [paper]

"Type Prediction With Program Decomposition and Fill-in-the-Type Training" [2023-05] [paper]

"Generative Type Inference for Python" [2023-07] [ASE 2023] [paper]

"Activation Steering for Robust Type Prediction in CodeLLMs" [2024-04] [paper]

"An Empirical Study of Large Language Models for Type and Call Graph Analysis" [2024-10] [paper]

"Repository-Level Prompt Generation for Large Language Models of Code" [2022-06] [ICML 2023] [paper]

"CoCoMIC: Code Completion By Jointly Modeling In-file and Cross-file Context" [2022-12] [paper]

"RepoCoder: Repository-Level Code Completion Through Iterative Retrieval and Generation" [2023-03] [EMNLP 2023] [paper]

"Coeditor: Leveraging Repo-level Diffs for Code Auto-editing" [2023-05] [ICLR 2024 Spotlight] [paper]

"RepoBench: Benchmarking Repository-Level Code Auto-Completion Systems" [2023-06] [ICLR 2024] [paper]

"Guiding Language Models of Code with Global Context using Monitors" [2023-06] [paper]

"RepoFusion: Training Code Models to Understand Your Repository" [2023-06] [paper]

"CodePlan: Repository-level Coding using LLMs and Planning" [2023-09] [paper]

"SWE-bench: Can Language Models Resolve Real-World GitHub Issues?" [2023-10] [ICLR 2024] [paper]

"CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion" [2023-10] [NeurIPS 2023] [paper]

"A^3-CodGen: A Repository-Level Code Generation Framework for Code Reuse with Local-Aware, Global-Aware, and Third-Party-Library-Aware" [2023-12] [paper]

"Teaching Code LLMs to Use Autocompletion Tools in Repository-Level Code Generation" [2024-01] [paper]

"RepoHyper: Better Context Retrieval Is All You Need for Repository-Level Code Completion" [2024-03] [paper]

"Repoformer: Selective Retrieval for Repository-Level Code Completion" [2024-03] [ICML 2024] [paper]

"CodeS: Natural Language to Code Repository via Multi-Layer Sketch" [2024-03] [paper]

"Class-Level Code Generation from Natural Language Using Iterative, Tool-Enhanced Reasoning over Repository" [2024-04] [paper]

"Contextual API Completion for Unseen Repositories Using LLMs" [2024-05] [paper]

"Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion" [2024-05][ACL 2024] [paper]

"How to Understand Whole Software Repository?" [2024-06] [paper]

"R2C2-Coder: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models" [2024-06] [paper]

"CodeR: Issue Resolving with Multi-Agent and Task Graphs" [2024-06] [paper]

"Enhancing Repository-Level Code Generation with Integrated Contextual Information" [2024-06] [paper]

"On The Importance of Reasoning for Context Retrieval in Repository-Level Code Editing" [2024-06] [paper]

"GraphCoder: Enhancing Repository-Level Code Completion via Code Context Graph-based Retrieval and Language Model" [2024-06] [ASE 2024] [paper]

"STALL+: Boosting LLM-based Repository-level Code Completion with Static Analysis" [2024-06] [paper]

"Hierarchical Context Pruning: Optimizing Real-World Code Completion with Repository-Level Pretrained Code LLMs" [2024-06] [paper]

"Agentless: Demystifying LLM-based Software Engineering Agents" [2024-07] [paper]

"RLCoder: Reinforcement Learning for Repository-Level Code Completion" [2024-07] [paper]

"CoEdPilot: Recommending Code Edits with Learned Prior Edit Relevance, Project-wise Awareness, and Interactive Nature" [2024-08] [paper] [repo]

"RAMBO: Enhancing RAG-based Repository-Level Method Body Completion" [2024-09] [paper]

"Exploring the Potential of Conversational Test Suite Based Program Repair on SWE-bench" [2024-10] [paper]

"RepoGraph: Enhancing AI Software Engineering with Repository-level Code Graph" [2024-10] [paper]

"See-Saw Generative Mechanism for Scalable Recursive Code Generation with Generative AI" [2024-11] [paper]

"Seeking the user interface", 2014-09, ASE 2014, [paper]

"pix2code: Generating Code from a Graphical User Interface Screenshot", 2017-05, EICS 2018, [paper]

"Machine Learning-Based Prototyping of Graphical User Interfaces for Mobile Apps", 2018-02, TSE 2020, [paper]

"Automatic HTML Code Generation from Mock-Up Images Using Machine Learning Techniques", 2019-04, EBBT 2019, [paper]

"Sketch2code: Generating a website from a paper mockup", 2019-05, [paper]

"HTLM: Hyper-Text Pre-Training and Prompting of Language Models", 2021-07, ICLR 2022, [paper]

"Learning UI-to-Code Reverse Generator Using Visual Critic Without Rendering", 2023-05, [paper]

"Design2Code: How Far Are We From Automating Front-End Engineering?" [2024-03] [paper]

"Unlocking the conversion of Web Screenshots into HTML Code with the WebSight Dataset" [2024-03] [paper]

"VISION2UI: A Real-World Dataset with Layout for Code Generation from UI Designs" [2024-04] [paper]

"LogoMotion: Visually Grounded Code Generation for Content-Aware Animation" [2024-05] [paper]

"PosterLLaVa: Constructing a Unified Multi-modal Layout Generator with LLM" [2024-06] [paper]

"UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback" [2024-06] [paper]

"On AI-Inspired UI-Design" [2024-06] [paper]

"Identifying User Goals from UI Trajectories" [2024-06] [paper]

"Automatically Generating UI Code from Screenshot: A Divide-and-Conquer-Based Approach" [2024-06] [paper]

"Web2Code: A Large-scale Webpage-to-Code Dataset and Evaluation Framework for Multimodal LLMs" [2024-06] [paper]

"Vision-driven Automated Mobile GUI Testing via Multimodal Large Language Model" [2024-07] [paper]

"AUITestAgent: Automatic Requirements Oriented GUI Function Testing" [2024-07] [paper]

"LLM-based Abstraction and Concretization for GUI Test Migration" [2024-09] [paper]

"Enabling Cost-Effective UI Automation Testing with Retrieval-Based LLMs: A Case Study in WeChat" [2024-09] [paper]

"Self-Elicitation of Requirements with Automated GUI Prototyping" [2024-09] [paper]

"Infering Alt-text For UI Icons With Large Language Models During App Development" [2024-09] [paper]

"Leveraging Large Vision Language Model For Better Automatic Web GUI Testing" [2024-10] [paper]

"Sketch2Code: Evaluating Vision-Language Models for Interactive Web Design Prototyping" [2024-10] [paper]

"WAFFLE: Multi-Modal Model for Automated Front-End Development" [2024-10] [paper]

"DesignRepair: Dual-Stream Design Guideline-Aware Frontend Repair with Large Language Models" [2024-11] [paper]

"Interaction2Code: How Far Are We From Automatic Interactive Webpage Generation?" [2024-11] [paper]

"A Multi-Agent Approach for REST API Testing with Semantic Graphs and LLM-Driven Inputs" [2024-11] [paper]

"PICARD: Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models" [2021-09] [EMNLP 2021] [paper]

"CodexDB: Generating Code for Processing SQL Queries using GPT-3 Codex" [2022-04] [paper]

"T5QL: Taming language models for SQL generation" [2022-09] [paper]

"Towards Generalizable and Robust Text-to-SQL Parsing" [2022-10] [EMNLP 2022 Findings] [paper]

"XRICL: Cross-lingual Retrieval-Augmented In-Context Learning for Cross-lingual Text-to-SQL Semantic Parsing" [2022-10] [EMNLP 2022 Findings] [paper]

"A comprehensive evaluation of ChatGPT's zero-shot Text-to-SQL capability" [2023-03] [paper]

"DIN-SQL: Decomposed In-Context Learning of Text-to-SQL with Self-Correction" [2023-04] [NeurIPS 2023] [paper]

"How to Prompt LLMs for Text-to-SQL: A Study in Zero-shot, Single-domain, and Cross-domain Settings" [2023-05] [paper]

"Enhancing Few-shot Text-to-SQL Capabilities of Large Language Models: A Study on Prompt Design Strategies" [2023-05] [paper]

"SQL-PaLM: Improved Large Language Model Adaptation for Text-to-SQL" [2023-05] [paper]

"Retrieval-augmented GPT-3.5-based Text-to-SQL Framework with Sample-aware Prompting and Dynamic Revision Chain" [2023-07] [ICONIP 2023] [paper]

"Text-to-SQL Empowered by Large Language Models: A Benchmark Evaluation" [2023-08] [paper]

"MAC-SQL: A Multi-Agent Collaborative Framework for Text-to-SQL" [2023-12] [paper]

"Investigating the Impact of Data Contamination of Large Language Models in Text-to-SQL Translation" [2024-02] [ACL 2024 Findings] [paper]

"Decomposition for Enhancing Attention: Improving LLM-based Text-to-SQL through Workflow Paradigm" [2024-02] [ACL 2024 Findings] [paper]

"Knowledge-to-SQL: Enhancing SQL Generation with Data Expert LLM" [2024-02] [ACL 2024 Findings] [paper]

"Understanding the Effects of Noise in Text-to-SQL: An Examination of the BIRD-Bench Benchmark" [2024-02] [ACL 2024 short] [paper]

"SQL-Encoder: Improving NL2SQL In-Context Learning Through a Context-Aware Encoder" [2024-03] [paper]

"LLM-R2: A Large Language Model Enhanced Rule-based Rewrite System for Boosting Query Efficiency" [2024-04] [paper]

"Dubo-SQL: Diverse Retrieval-Augmented Generation and Fine Tuning for Text-to-SQL" [2024-04] [paper]

"EPI-SQL: Enhancing Text-to-SQL Translation with Error-Prevention Instructions" [2024-04] [paper]