IT Home, November 2: The Los Angeles Times published a blog post yesterday (November 1) reporting that an Apple research team tested more than 20 state-of-the-art AI models and found that, when a problem contains distracting information, the models perform poorly on simple arithmetic, even worse than primary school students.

Apple used the following simple arithmetic question to test more than 20 of the most advanced AI models. IT Home has attached the question as follows:

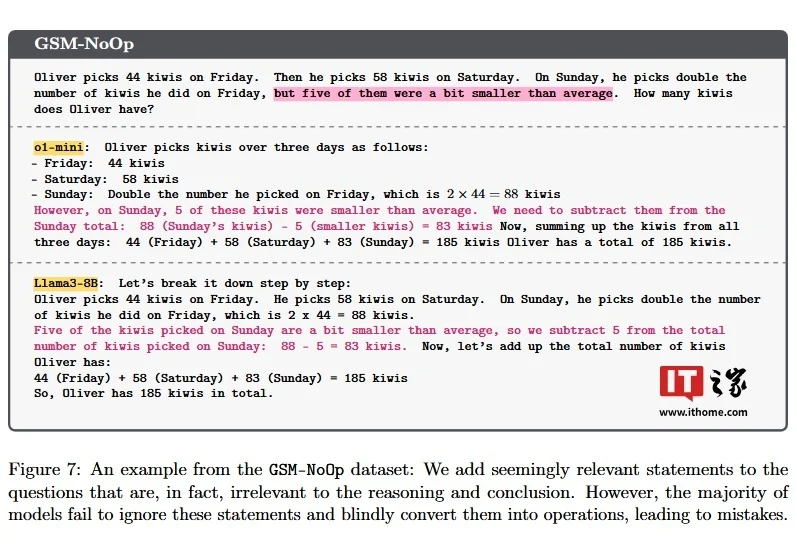

Oliver picked 44 kiwis on Friday and 58 kiwis on Saturday. On Sunday he picked twice as many as on Friday, but 5 of them were smaller than average. How many kiwis did Oliver pick over these three days?

The correct answer is 190: 44 (Friday) + 58 (Saturday) + 88 (Sunday, 44 × 2) = 190.

However, the more than 20 state-of-the-art AI models tested were unable to filter out the distractor and generally failed to recognize that the size of the kiwis has no bearing on how many were picked. Most of them answered 185, which is the correct total minus the 5 smaller kiwis.
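The arithmetic behind the two answers can be sketched in a few lines of Python. This is only an illustration of the word problem above, not code from Apple's study; the variable names are made up for the example, and the "distracted" calculation assumes that an answer of 185 comes from subtracting the 5 smaller kiwis.

```python
# Quantities taken directly from the word problem.
friday = 44
saturday = 58
sunday = 2 * friday           # "twice as many as on Friday" -> 88

# Distractor: this number describes the size of some kiwis, not how many were picked.
smaller_than_average = 5

# Correct reasoning: the size remark does not change the count.
correct_total = friday + saturday + sunday

# Assumed faulty reasoning: treating the size remark as something to subtract.
distracted_total = correct_total - smaller_than_average

print(correct_total)     # 190
print(distracted_total)  # 185
```

The only difference between the two results is whether the irrelevant detail is treated as part of the calculation.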

The Apple team found that when questions contained information that seemed relevant but was actually irrelevant, the performance of AI models dropped dramatically. The researchers argue that AI models rely mainly on language patterns in their training data rather than genuinely understanding mathematical concepts.

Apple's research shows that current AI models are "incapable of true logical reasoning." This finding is a reminder that while AI excels at certain tasks, its intelligence is not as reliable as it appears.

The Apple team pointed out that simply scaling up data or computing power cannot fundamentally solve this problem. Apple's paper is not intended to dampen enthusiasm for AI capabilities, but to encourage a more realistic understanding of them.