Recent turmoil at OpenAI and its Sora project has left observers worried about the booming AI video industry.

At the end of September, OpenAI chief technology officer Mira Murati announced her departure. Barret Zoph, the vice president of research who played an important role in developing the o1, GPT-4o, and GPT-4v models, and chief research officer Bob McGrew also announced that they were leaving.

On October 4, Sora project lead Tim Brooks announced on social platform X that he was resigning to join Google DeepMind.

Taken together with the fact that the official version of Sora still has no definite release timetable, it all looks like a familiar drama: the bubble around a star project bursts, and everyone involved makes their own plans.

Competition in AI video has recently begun to intensify.

According to SimilarWeb, Luma AI, a leading AI video generation company, saw only 11.81 million visits to its website in September, a month-on-month decrease of 38.49%. The breakout hit Pika also saw total visits drop in September. Although it regained popularity in October with a batch of new template effects in its new version, the question of how such products can keep users interested has gradually surfaced.

Some early reservations about Sora are now being revisited. Filmmaker Patrick Cederberg, for example, said in April that he had to have the model generate hundreds of short clips before he found one that was usable. In other words, Sora is very difficult to use.

Fortunately, Tim Brooks has not said goodbye to AI video. He will most likely join the team behind Veo, DeepMind's AI video generation tool. The rise and fall of Sora may not be the only benchmark for the AI video industry. At least in terms of capabilities, challengers such as Meta Movie Gen are beginning to claim they have beaten Sora. China's AI video product ecosystem has also been changing.

In other words, the cooling of the AI video field is also the gestation period before the next batch of better products emerges. And recently, a sufficiently impressive product seems to have entered the public eye.

01

PixVerse V3 really breaks the dimensional wall

With AI video products now so abundant that people are starting to experience "visual fatigue," is PixVerse V3 really special enough?

If you have similar doubts, the appearance of this Pikachu is enough to dispel most of them.

Setting aside launch-event demos that cannot be reproduced, this is probably the first time that 2D animated characters and the real world have interacted so harmoniously in AI-generated video.

In the video, Pikachu looks exactly as we remember it from the cartoon, yet it appears on a realistic busy street and then jumps into a young man's arms.

This is the prompt required for this video:

Center front camera: Pikachu stands on a bustling city street, next to a backpacker. The backpacker walks past the camera, and Pikachu runs from behind. Jumped into the backpacker's arms. Pikachu happily hugged his neck and was very close. Pedestrians hurried, and backpackers carried Pikachu forward, whose cheeks sparkled with excitement, lighting up the moment. Charming and effortless.

In MGM's 1934 film "Hollywood Party," Jimmy Durante holding Mickey Mouse between his fingers became the first classic scene in film history to combine animation with live action. Ninety years later, this kind of effect, ingenious but extremely cumbersome to produce, can finally be done by AI.

What is remarkable is that both Pikachu and the real world it inhabits were made by AI. Judging from the results, PixVerse V3 handles animation entering the real world very smoothly.

Beyond Pikachu, you can also use the following prompt, which might seem to contain too many elements, to generate a video of Mario walking into a train station:

The video shows a bustling train station filled with a diverse crowd of passengers eagerly waiting for their trains. The camera sweeps across the scene, capturing the lively atmosphere. Super Mario, a stout Italian character with a round face, dons his iconic red hat and blue overalls. The camera closely follows Mario as he steps confidently onto the platform, his face beaming with excitement. The video is in a realistic style.

Mario stands out clearly as the protagonist of the shot, and each person in the bustling crowd behind him moves in a distinct way. As Mario walks forward with the camera, the edges where the animated character meets the real environment are rendered clearly and cleanly. At the same time, the train pulls into the station.

If cartoon characters can't satisfy your appetite, let's take a look at how PixVerse V3 performs on big scenes.

Keyword: a dragon dives into the water.

The complete prompt is like this:

Steadycam tracking shot of a dragon diving into the water, the monk raises his arms in appreciation.

Although the video still lacks some of the details mentioned in the prompt, overall, in the consistency of its camera movement, its use of gloomy tones to subtly convey tension, and its composition of monsters in the distance with abandoned cars in the foreground, PixVerse V3's video generation has begun to approach film-level picture quality.

Besides text-to-video, PixVerse V3 also demonstrates excellent image-to-video capabilities this time.

Image-to-video leaves a lot of room for imagination. You can find a striking poster from an American Western, add a prompt, and have the hero do something "outrageous," such as ending up with a poor-quality revolver:

The complete prompt is like this:

The pistol misfires with black smoke, which makes the man's face dirty.

What stands out most about PixVerse V3's text-to-video and image-to-video capabilities is how close they are to what interests ordinary people in daily life. In fact, apart from professional video creators, few people need AI video software to craft a polished film or television work. Most people who have just tried video generation are instead curious whether it can play with the real world, or even with themselves. For example, you can bring your favorite cartoon characters into the places you pass every day, or even turn yourself into a character such as Iron Man.

Many of the difficulties AI video products now face arise because they are stuck promoting their own technical capabilities while losing sight of users. At this stage, when text-to-video technology has only just emerged, what most ordinary people may want is an AI video product that feels close to them and is friendly enough to use.

From this perspective, PixVerse V3 may currently be the product that reads users' minds most accurately.

PixVerse V3's impressive results come not only from the iteration of the large AI video model behind it, but also from improvements in how well it understands prompts. Attentive readers will have noticed this from the prompt examples above.

"Subject + Subject Description + Movement + Environment" is a prompt formula that gets the most out of AI video generation. Compared with V2.5, PixVerse V3 now lets you add a further "shot description" dimension.

Of course, in addition to following this formula, the prompt should describe the character's actions in as much detail as possible and avoid oversimplified descriptions.
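The formula above can be sketched as a small helper that assembles a prompt from its parts. This is only an illustration: `PromptParts` and `build_prompt` are hypothetical names, not part of any PixVerse interface, and placing the shot description first with a colon is an assumption modeled on the "Center front camera: ..." example earlier in the article.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Hypothetical container for the prompt dimensions named in the article."""
    subject: str
    subject_description: str
    movement: str
    environment: str
    shot: str = ""  # optional "shot description" dimension


def build_prompt(parts: PromptParts) -> str:
    """Join the parts into one plain-text prompt string."""
    body = (
        f"{parts.subject}, {parts.subject_description}, "
        f"{parts.movement} {parts.environment}."
    )
    # Assumption: the shot description leads the prompt, followed by a colon.
    return f"{parts.shot}: {body}" if parts.shot else body


example = PromptParts(
    subject="Pikachu",
    subject_description="with sparkling cheeks",
    movement="jumps into a backpacker's arms",
    environment="on a bustling city street",
    shot="Center front camera",
)
prompt = build_prompt(example)
print(prompt)
```

Keeping each dimension as a separate field makes it easy to spot when one of them, most often the movement, has been left too thin.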

PixVerse V3 also offers a richer choice of output options. It supports a variety of aspect ratios, including 16:9, 9:16, 3:4, 4:3, and 1:1. The stylization feature has also been upgraded in this release: both text-to-video and image-to-video modes now support four styles, namely animation, realistic, clay, and 3D.
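As a rough illustration of the options listed above, the following sketch checks a requested ratio and style against them before a generation request is made. The function name and the lowercase style labels are assumptions for illustration only; the article lists the options but does not describe how PixVerse names or validates them.

```python
# Values as listed in the article; names and labels here are hypothetical.
SUPPORTED_RATIOS = {"16:9", "9:16", "3:4", "4:3", "1:1"}
SUPPORTED_STYLES = {"animation", "realistic", "clay", "3d"}


def check_settings(ratio: str, style: str) -> list[str]:
    """Return a list of problems; an empty list means the settings look valid."""
    problems = []
    if ratio not in SUPPORTED_RATIOS:
        problems.append(f"unsupported aspect ratio: {ratio}")
    if style.lower() not in SUPPORTED_STYLES:
        problems.append(f"unsupported style: {style}")
    return problems


print(check_settings("9:16", "Clay"))  # a vertical clay-style video
print(check_settings("21:9", "oil"))   # neither value is in the listed options
```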

In short, PixVerse V3 is not only more powerful this time; it practically wants to put the instruction manual into your hands line by line.

On social platform X, many AI product enthusiasts have begun using PixVerse V3. One of them, Pierrick Chevallier, who has a large following, even put together a dedicated post to show off videos he generated with PixVerse V3. Beyond the strong generation quality, what catches the eye is that many of his videos have a distinctly "Halloween" flavor.

02

This Halloween, let PixVerse V3 do the “effects”

For an interesting AI video product to break into the mainstream, it needs not only solid technical support but also good timing. On that front, the release of PixVerse V3 comes at just the right moment.

Halloween falls on October 31, and the end of October is the annual peak of imagination for young people. To match the occasion, PixVerse V3 has released a series of Halloween-themed templates that let you use AI to "cast spells" on everything around you.

PixVerse V3 has released eight Halloween-themed templates this time. One type focuses on "conjuring things to life."

For example, a monster appears out of nowhere in the city.

Beyond such big scenes, PixVerse V3 can also bring elements of a photo to life, such as making the puppy in "I'm Waiting for You in the Rain" actually walk out of the photo:

The puppy even raises its head naturally before standing up. After it walks out, the original photo is left with only an empty lawn, and the overall effect is quite stunning.

This template also allows for more imaginative uses. For example, can Leonardo da Vinci's Vitruvian Man step out of the circle that frames him?

Something like this:

Being able to put on such a show on Halloween is enough to amaze the audience.

The second type of template PixVerse V3 has prepared for Halloween takes a more abstract route.

For example, let an iron box stand up and run away:

Or smash a Porsche into pieces and turn it into a pile of blocks:

Of course, PixVerse V3's templates also include many more Halloween-style effects to play with, such as putting a wizard hat on the person in a photo and then animating it, like this:

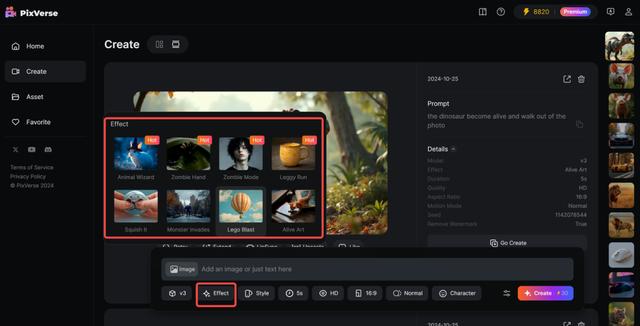

These Halloween templates are part of PixVerse V3's new Effect feature. It is very simple to use: upload an image, select the effect you want, and click to generate. There is no need to write a prompt along the way, which makes it very friendly for people who just want to enjoy Halloween.

PixVerse V3 also shows stronger multimodal generation capabilities this time. Building on its text-to-video and image-to-video features, it can now add the audio content the user wants to a generated video, and if the original video is not long enough, it can now generate a continuation of it.

These multimodal capabilities arrive in PixVerse V3 as two new features alongside Effect. The first is Lipsync, which can generate synchronized speech in multiple languages for the characters in a video.

Lipsync lets users enter their own script or upload an audio file for a generated video, and PixVerse then automatically matches the characters' mouth movements to the script or audio. Currently, Lipsync supports a video length of 30 seconds, and the supported languages include English, Chinese, French, and Japanese.

The other feature is video extension, which can be thought of as continuing the story.

To address the problem that generated videos are currently too short, PixVerse V3 lets users select a generated video, click the "Extend" button, enter a prompt describing how the video should develop, and click "create." The original video then advances the plot in the proposed direction while keeping the characters and actions highly coherent.

With the addition of multi-modal video generation capabilities, PixVerse V3 can now generate AI videos with larger narratives and better audio-visual effects, and the boundaries of AI video creation have been further broadened.

Make an AI video product that is truly playable

"The ChatGPT moment will come when ordinary users can use it," Wang Changhu, founder and CEO of Aishi Technology, said in an interview in April this year.

Over the past two years, every new spark in large-model technology has been turned into a grand new narrative about modes of production, as if human life would be completely upended in short order. So far, that has not happened.

Meanwhile, the excitement brought by Sora and overly ambitious technical visions have gradually caused the whole field of AI video products to lose focus and lose its connection with everyday life. On the one hand, AI video products positioned as professional tools, such as Runway, struggle to reach a mainstream audience because of their high barrier to use. On the other hand, products aimed at the general public have fallen into a "burn after reading" dilemma: once the novelty wears off, users are hard to retain. Lacking clear and specific development ideas, these products have little choice but to slide toward the simple logic of "filters" and "special effects."

In other words, the sheer openness of AI generation makes almost every current AI video product look like a semi-finished product. The randomness and uncontrollability of the generated content are packaged as a novel experience, which also means the products are hard to use in specific, sustained scenarios.

While the outside world was marveling at Sora's balloon man, Patrick Cederberg was troubled by the lack of consistency in Sora's output. He complained that the color of the balloon changed with each generation, and such imperfections mean that a great deal of post-production work is still unavoidable. There are many similar problems, which is why, although Hollywood went on strike a year ago over the potential disruption of the film industry by large models, a year later Sora still cannot truly enter film and television workflows.

At present, AI video products are all more or less trapped in a similar situation: although AI video generation capabilities have excited everyone for a long time, AI video products remain a beautiful-looking "seller show."

In the face of a new technological torrent, it is hard for a product to stay "concrete." Yet from the original PixVerse web version to the current PixVerse V3, Aishi Technology has delivered a rare, clear, and orderly iteration path.

In January this year, Aishi Technology officially released the web version of its video generation product PixVerse. At that time, however, almost all text-to-video products, PixVerse included, faced the same problem: the generated footage was uncontrollable, so users could not keep generating video content around a consistent character. Three months later, the PixVerse web version gained the C2V (Character to Video) feature, built on the company's self-developed large video model, which began to solve the consistency problem in AI video creation by accurately extracting character features to lock the character in place.

After fixing the "character" in the video, PixVerse focused subsequent iterations on the "controllability" of behavior in the generated content. In June this year, Aishi Technology released the Magic Brush motion brush, which lets users precisely control the movement and direction of each element simply by painting over it in the frame. PixVerse V2 launched at the end of July. In addition to offering sufficient control over the generated content, it significantly lowered the difficulty of writing prompts and offered more ways to fine-tune the results.

This is another iteration with very clear intent. "The specific product form needs to be tried, but in the end we still hope to serve the majority of ordinary users," as Wang Changhu said in a previous interview.

When video generation capabilities cannot yet directly meet the needs of ordinary users, serving professional creators and developing next-generation tools based on new content generation paradigms takes higher priority. "When the factors of production are absorbed by technology, for example when AI replaces actors, scenes, and cameras during filming, AI video generation can begin to be used by the public, and the circle of users will gradually expand, creating huge opportunities."

This time, PixVerse V3 has begun to introduce more ways to play that relate to ordinary people's lives, and to let the prompt set the shot. The latter can be seen as an attempt to partially replace the camera. From this point of view, Aishi Technology has followed a very determined path in refining its product direction.

In a wave of AI video generation technology that prizes divergence, Aishi Technology has chosen to go against the overall trend, stay away from grand narratives, and offer the most concrete answer to how AI video products can be played with.

Only when people can hold AI video in their hands and play with it freely can AI video applications move from the "seller show" led by Sora to a vibrant "buyer show."

The stunning debut of PixVerse V3 may be the beginning of another positive change behind this "cooling down" of AI videos.