The editor of Downcodes will take you to understand Google ReCapture technology and how this disruptive technology will change video editing! ReCapture allows ordinary users to easily realize professional-level camera motion adjustments, redesigns the video lens language, and brings revolutionary changes to video post-production. This technology no longer relies on traditional 4D intermediate representation methods, but cleverly utilizes the motion knowledge of generative video models to transform video editing into a video-to-video conversion process, greatly simplifying the operation process and retaining the characteristics of the video. Details and picture quality.

The latest ReCapture technology launched by the Google research team is subverting the traditional video editing method. This innovation allows ordinary users to easily implement professional-level camera movement adjustments and redesign the lens language for already captured videos.

In traditional video post-production, changing the camera angle of a captured video has always been a technical problem. When existing solutions handle different types of video content, it is often difficult to maintain complex camera movement effects and picture details at the same time. ReCapture takes a different approach and does not use the traditional 4D intermediate representation method. Instead, it cleverly uses the motion knowledge stored in the generative video model and redefines the task as a video-to-video conversion process through Stable Video Diffusion.

The system uses a two-stage workflow. The first stage generates the anchor video, which is the initial output version with the new camera position. This stage can be achieved by creating multi-angle videos through diffusion models such as CAT3D, or by frame-by-frame depth estimation and point cloud rendering. While this version may have some timing inconsistencies and visual flaws, it laid the foundation for Phase Two.

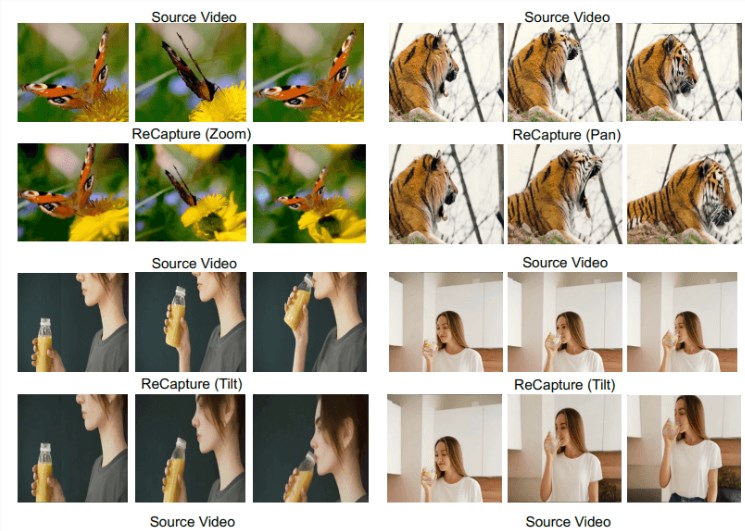

The second stage applies masked video fine-tuning, leveraging a generative video model trained on existing footage to create realistic motion effects and timing changes. The system introduces a temporal LoRA (Low Rank Adaptation) layer to optimize the model so that it can understand and replicate the specific dynamic characteristics of anchor videos without retraining the entire model. At the same time, the spatial LoRA layer ensures that picture details and content are consistent with the new camera movement. This enables the generative video model to complete operations such as zooming, panning, and tilting while maintaining the characteristic motion of the original video.

Although ReCapture has made important progress in user-friendly video processing, it is still in the research stage and is still some way away from commercial application. It is worth noting that although Google has many video AI projects, it has not yet brought them to the market. Among them, the Veo project may be the closest to commercial use. Similarly, Meta's recently launched Movie-Gen model and OpenAI's Sora released at the beginning of the year have not yet been commercialized. Currently, the video AI market is mainly led by startups such as Runway, which launched its latest Gen-3Alpha model last summer.

The emergence of ReCapture technology heralds the future development direction in the field of video editing. Although it is still in the research stage, its powerful functions and convenient operation methods will undoubtedly bring more possibilities to video creation. We look forward to the early maturity and commercial application of this technology in the future, bringing a more convenient and efficient video editing experience to the majority of users.