The editor of Downcodes has learned that the Tongyi Qianwen team recently open-sourced the full Qwen2.5-Coder series of models, another milestone for open-source large models. The series has attracted attention for its strong coding capabilities, range of functions and practicality. The models perform well in code generation, code repair and code reasoning, giving developers capable tools and advancing the development of Open Code LLMs. Open-sourcing the series should further encourage the application of artificial intelligence technology in programming.

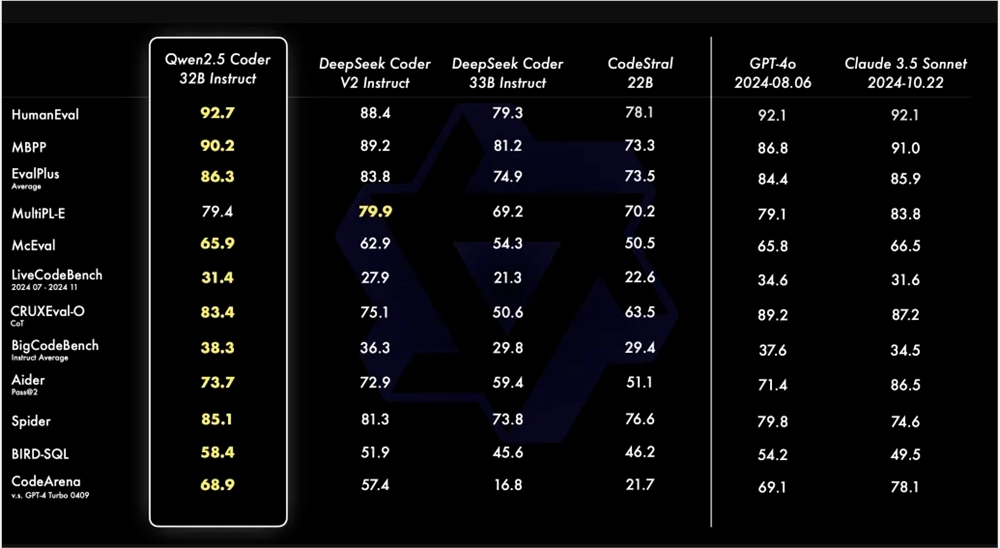

The Tongyi Qianwen team recently announced the open-source release of its latest Qwen2.5-Coder series, a move aimed at promoting the development of Open Code LLMs. Qwen2.5-Coder has attracted attention for being powerful, diverse and practical. The Qwen2.5-Coder-32B-Instruct model reaches state-of-the-art (SOTA) code capabilities, comparable to GPT-4o, across code generation, code repair and code reasoning. It achieves top performance on multiple code generation benchmarks and scores 73.7 on the Aider benchmark, which is also comparable to GPT-4o.

Qwen2.5-Coder supports more than 40 programming languages and scores 65.9 on McEval, performing particularly well in languages such as Haskell and Racket. The team attributes this to its data cleaning and balancing in the pre-training stage. Qwen2.5-Coder-32B-Instruct also performs well at code repair across multiple programming languages, scoring 75.2 on the MdEval benchmark and ranking first.

To test how well Qwen2.5-Coder-32B-Instruct aligns with human preferences, the team built Code Arena, an internally annotated code preference evaluation benchmark. The results show that Qwen2.5-Coder-32B-Instruct has an advantage in preference alignment.

This release adds models in four sizes (0.5B, 3B, 14B and 32B), so the series now covers six mainstream model sizes to meet the needs of different developers. Each size is officially provided in two variants: Base and Instruct. The Base model is intended as a starting point for developers to fine-tune, while the Instruct model is the officially aligned chat model. Performance increases with model size, and Qwen2.5-Coder achieves SOTA performance at every size.

The 0.5B, 1.5B, 7B, 14B and 32B models of Qwen2.5-Coder are released under the Apache 2.0 license, while the 3B model is released under a Research Only license. By evaluating Qwen2.5-Coder models of different sizes on all datasets, the team verified that scaling is effective for Code LLMs.
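The sizes, variants and licenses described above can be summarized in a small lookup, which may help when choosing a model programmatically. This is only a sketch: the `describe_model` helper and `ModelInfo` type are hypothetical, and the `Qwen/` namespace and naming pattern are assumptions extrapolated from the name "Qwen2.5-Coder-32B-Instruct", so check the model collection linked below before relying on them.

```python
from dataclasses import dataclass

# Sizes and licenses as stated in the article. The "Qwen/" namespace and the
# naming pattern are assumptions, not confirmed by the article.
SIZES = ("0.5B", "1.5B", "3B", "7B", "14B", "32B")
RESEARCH_ONLY_SIZES = {"3B"}


@dataclass(frozen=True)
class ModelInfo:
    repo_id: str   # assumed repository identifier
    license: str   # license named in the article


def describe_model(size: str, instruct: bool = True) -> ModelInfo:
    """Return the assumed repository id and the license for one model."""
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    # Instruct is the aligned chat variant; Base (no suffix) is for fine-tuning.
    suffix = "-Instruct" if instruct else ""
    license_name = "Research Only" if size in RESEARCH_ONLY_SIZES else "Apache 2.0"
    return ModelInfo(f"Qwen/Qwen2.5-Coder-{size}{suffix}", license_name)


if __name__ == "__main__":
    for size in SIZES:
        print(describe_model(size))
```

For example, `describe_model("3B", instruct=False)` reports the Research Only license, which is worth checking before using that size in a commercial project.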

The open-source release of Qwen2.5-Coder gives developers a powerful, diverse and practical choice of programming models, and helps promote the development and application of code language models.

Qwen2.5-Coder model link:

https://modelscope.cn/collections/Qwen25-Coder-9d375446e8f5814a

In short, the open-source release of Qwen2.5-Coder gives developers a capable tool, and its strong performance and broad applicability should advance code generation and programming. We look forward to seeing Qwen2.5-Coder used in more application scenarios in the future.