Real-time interaction with AI remains a major challenge in artificial intelligence, particularly when it comes to integrating multimodal information and keeping conversations fluent. Many existing AI systems still fall short in real-time conversational fluency, contextual understanding, and multimodal understanding, which limits their practical use. Here, the Downcodes editor introduces Ultravox v0.4.1 from Fixie AI, an open source multimodal model series designed to address these problems.

For developers and researchers building AI applications, achieving real-time interaction has long been a central difficulty. Integrating multimodal information (such as text, images, and audio) into a single coherent dialogue system is especially complex.

Despite the progress made by advanced large language models such as GPT-4, many AI systems still struggle with real-time conversational fluency, context awareness, and multimodal understanding, which limits their effectiveness in practice. In addition, the computational requirements of these models make real-time deployment extremely difficult without extensive infrastructure.

To solve these problems, Fixie AI launched Ultravox v0.4.1, a series of multi-modal open source models designed to enable real-time dialogue with AI.

Ultravox v0.4.1 can handle multiple input formats (such as text and images) and aims to provide an alternative to closed source models such as GPT-4. This release focuses not only on language proficiency but also on fluent, context-aware conversation across different media types.

By releasing Ultravox as an open source project, Fixie AI hopes to give developers and researchers around the world equal access to advanced conversational technology, for applications ranging from customer support to entertainment.

The Ultravox v0.4.1 models are based on an optimized transformer architecture and can process multiple types of data in parallel. Using a technique called cross-modal attention, they integrate and interpret information from different sources at the same time.
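To make the idea of cross-modal attention more concrete, here is a minimal sketch in plain Python. It is not Ultravox's implementation: it shows only the generic scaled dot-product form, in which tokens from one modality (queries) attend over embeddings from another modality (keys and values). The names `cross_attention`, `text_tokens`, and `other_modality` are hypothetical, the vectors are toy values, and the learned projection matrices a real transformer would use are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention across two modalities.

    queries: embeddings from modality A (e.g. text tokens)
    keys, values: embeddings from modality B (e.g. image or audio frames)
    Returns (fused outputs, attention weights), one row per query.
    """
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        w = softmax(scores)
        # Weighted sum of the value vectors from the other modality.
        fused = [
            sum(wj * v[c] for wj, v in zip(w, values))
            for c in range(len(values[0]))
        ]
        outputs.append(fused)
        weights.append(w)
    return outputs, weights


# Toy embeddings (assumed values, for illustration only).
text_tokens = [[1.0, 0.0], [0.0, 1.0]]
other_modality = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

fused, attn = cross_attention(text_tokens, other_modality, other_modality)
for row in attn:
    print([round(x, 3) for x in row])
```

Each row of `attn` sums to 1 and shows how strongly one text token draws on each element of the other modality. In a real model the queries, keys, and values would first pass through learned linear projections, and several attention heads would run in parallel.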

This means users can show the AI an image, ask questions about it, and get informed answers in real time. Fixie AI hosts these open source models on Hugging Face so that developers can easily access and experiment with them, and provides detailed API documentation to support integration into real applications.

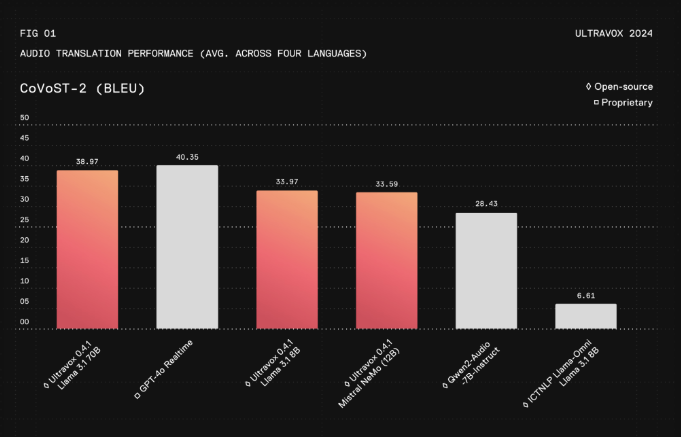

According to recent evaluation data, Ultravox v0.4.1 significantly reduces response latency, responding approximately 30% faster than leading commercial models while maintaining comparable accuracy and contextual understanding. Its cross-modal capabilities make it well suited to complex use cases, such as combining images with text for comprehensive analysis in healthcare, or providing rich interactive content in education.

Ultravox's openness enables community-driven development, increases flexibility, and promotes transparency. By reducing the computational burden of deployment, Ultravox makes advanced conversational AI more accessible, especially for small businesses and independent developers who were previously held back by resource constraints.

Project page: https://www.ultravox.ai/blog/ultravox-an-open-weight-alternative-to-gpt-4o-realtime

Model: https://huggingface.co/fixie-ai

All in all, Ultravox v0.4.1 gives developers a powerful and easily accessible model for real-time multimodal dialogue. Its open source nature and efficient performance are expected to help advance the field of artificial intelligence. Visit the project page and Hugging Face for more information.