Google DeepMind's experimental Gemini model, Gemini-Exp-1114, has put in a remarkable showing on the Chatbot Arena platform. Community testing gathered more than 6,000 votes. Based on those votes, it outperformed its competitors in several key categories, notably math, complex prompts, and creative writing, and showed strong all-round capability. Below, the Downcodes editor takes a closer look at Gemini-Exp-1114's performance and at how the industry is interpreting it.

Google DeepMind's latest experimental Gemini model (Exp-1114) has achieved impressive results on Chatbot Arena. Community testing ran for more than a week and accumulated over 6,000 votes. That data shows the new model pulling clearly ahead of competing products across many key areas.

On the overall leaderboard, Gemini-Exp-1114 tied for first place with GPT-4-latest after its score rose by more than 40 points, overtaking the previous leader, GPT-4-preview. It also reached the top spot in core categories such as mathematics, complex prompts, and creative writing, a sign of strong all-round ability.

Specifically, Gemini-Exp-1114's rankings changed as follows:

Overall: up from 3rd to 1st

Mathematics: up from 3rd to 1st

Complex prompts: up from 4th to 1st

Creative writing: up from 2nd to 1st

Vision: also ranked 1st

Coding: up from 5th to 3rd

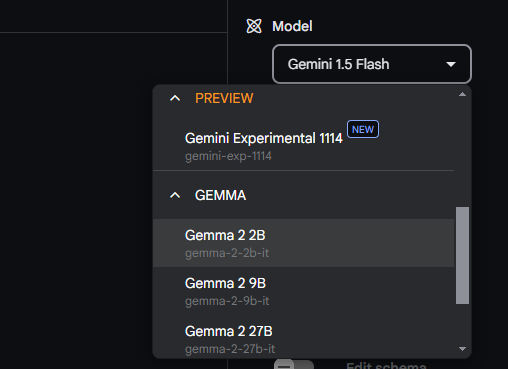

Gemini-Exp-1114 is now officially available to try in Google AI Studio. Community members have raised some practical concerns, however. These include whether the 1,000-token limit still applies and how the model handles very long outputs.

Industry analysts see this breakthrough as a sign that Google's long-term investment in AI is beginning to pay off. Notably, the model still ranks 4th under style control. This may suggest that the development team relied mainly on new post-training methods rather than on changes to the pre-trained model.

The breakthrough has also sparked discussion about the competitive landscape. OpenAI has often launched new products right after competitors released major updates. This time, however, Google's leap was large enough to draw the whole industry's attention. Some observers believe it may herald the arrival of Gemini 2. They also see it as evidence that Google is becoming significantly more competitive in large models.

Gemini-Exp-1114's strong performance marks another milestone for Google in large AI models and opens up further possibilities for future AI development. We look forward to more surprises from later Gemini versions!